
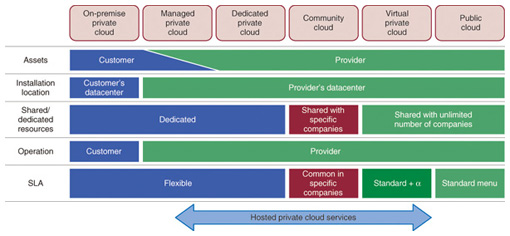

Feature Articles: ICT Infrastructure Technology Underlying System Innovation

Vol. 10, No. 11, pp. 33–39, Nov. 2012. https://doi.org/10.53829/ntr201211fa7

ICT Infrastructure Technology Underlying Business & Service Innovation

Abstract

This article explains how information and communications technology (ICT) infrastructure technology will help corporations overcome the new challenges they face as the business management environment changes rapidly, and how it will support business and service innovation. Examples of actual projects handled by NTT DATA are given, and future technological prospects are discussed.

1. Introduction

The ICT strategies of companies are closely intertwined with their business strategies, and ICT is now indispensable to a wide range of business activities. The business management environment is currently undergoing various changes, including shorter product life cycles in response to more diverse and complicated market demands, rapid globalization driven by changes in the domestic and international economic situation, stricter legal compliance, business continuity planning in the aftermath of the Great East Japan Earthquake, and energy saving efforts. To keep up with such changes, it is essential to update the ICT systems that support business management. It is even said that the speed of ICT system updates determines whether a business succeeds or not. However, the capabilities of current ICT systems are insufficient to meet these corporate demands. Although business growth and customer expansion have taken priority over cost reduction in both domestic and international business since last year, cost reduction and business process improvement remain important issues for many companies.
While global corporations place higher priority on developing new products and services (innovation), Japanese corporations regard cultivating new markets and expanding business into wider areas as important. This trend in Japan suggests that a global rollout is urgently required to support the international business of Japanese corporations [1].

2. ICT infrastructure technology to overcome business challenges

Key concepts that can help corporations overcome their challenges are streamlining, agility, globalization, and value creation [2]. To implement these concepts, NTT DATA is focusing on the following three ICT infrastructure technologies.

(1) Cloud computing technology

(2) Robotics integration technology

(3) Communication advancement technology

Cloud computing technology features resource sharing, on-demand self-service, and rapid scalability, all of which lead to streamlining and agility. It also helps accelerate globalization because it is designed to be used through a wide range of network access methods. Communication advancement technology is expected to contribute to globalization by bridging communication gaps caused by remote locations and different languages. It will also create new value by enabling not only person-to-person communication but also person-to-machine communication. Robotics integration technology is related to the Internet of Things (IoT): it captures changes in the business environment in real time through advanced sensing technology and controls actual machines accordingly. This is called M2M2A (machine to machine to actuator), an outgrowth of M2M (machine to machine). Robotics integration technology not only gathers real-world information but can also provide feedback to the real world based on the collected information, which should lead to the creation of new value.

2.1 Cloud computing technology

Prior to the Great East Japan Earthquake of 2011, it was generally believed that core business systems should remain within private clouds because of security and service level agreement (SLA) issues, while peripheral business systems could be migrated to public cloud services. However, the earthquake changed opinions. More and more corporations are now transferring their existing business applications to public clouds, and not only non-core applications but also business-to-consumer (B2C) applications and development and test environments are increasingly being migrated to infrastructure as a service (IaaS) or platform as a service (PaaS) environments.

Many companies are consolidating the servers of their intra-company ICT systems through virtualization to use resources efficiently. However, such efforts tend to end up as mere hardware consolidation, so the operation management workload is not reduced, and consolidation can even make it harder to isolate problems in the virtual environment. One solution is a private cloud with enhanced flexibility, speed, and operability. However, such a cloud requires additional investment and new skills to handle new technologies, which is why migration to private clouds has been limited to advanced corporations.

Private clouds have generally been on-premise*1 deployments so far, but new types of private cloud services, in which users can choose the location of facilities and whether or not to share resources, are now available (Fig. 1). These new private clouds overcome concerns related to the conventional type of private cloud. In the future, corporations will be able to use both private and public clouds, either on their own premises or hosted elsewhere, for different purposes in the most suitable manner. Furthermore, this mixed usage of private and public clouds will develop into an interconnected hybrid cloud.

Four of the five companies shown in the leaders quadrant of Gartner’s Dec. 2011 report “Magic Quadrant for Public Cloud Infrastructure as a Service” [3] use cloud infrastructure built with proprietary software*2. On the other hand, new public IaaS offerings based on open source software (OSS) cloud infrastructure, such as NTT Communications’ Cloudn and HP’s Cloud Services, came into full service in the latter half of 2011. The functions offered by OSS-based cloud infrastructure are maturing, and its governance model is shifting from one led by a single company to a community-driven one. OSS-based clouds are becoming ready for commercial use. Because the foundation of public IaaS infrastructure is evidently moving from proprietary software to OSS, we anticipate that private cloud services will also be based on OSS. NTT DATA is therefore directing its business efforts towards establishing OpenStack, an OSS cloud infrastructure software, and related support services. We are also working with NTT’s research and development laboratories to establish a proof of concept for a cloud built entirely with open source technology, including the hardware, storage, network, and monitoring functions (Fig. 2).

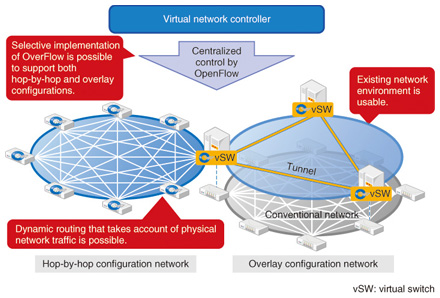

Requirements for datacenter networks have changed as follows as server virtualization technology has become common.

(1) When multiple tenants use a cloud, they share the physical networks. Therefore, the networks themselves need to be virtualized, and virtual local area networks (VLANs), virtual routing and forwarding (VRF), virtual firewalls, and virtual load balancers must be configured in coordination with one another on the network devices.

(2) Because virtual machines can be moved among physical servers, the machines’ virtual network settings must also be dynamically changeable.

(3) To provide an on-demand cloud service, both virtual machines and their virtual networks must be centrally controlled and managed. Mapping between physical and logical network configurations is also necessary because they are different entities.

(4) Conventional datacenter networks do not allow loops. This limitation means that the network must be built as a series of tree structures, resulting in an inflexible and inefficient network. New technology must be introduced that lets traffic take different routes, creating a highly efficient and flexible network.

Two candidates that might meet these requirements are an SDN/OpenFlow-based network (SDN: Software Defined Networking) and a VLAN-based network (Table 1). SDN is an approach that makes network configuration and behavior programmable, and OpenFlow is a candidate protocol for implementing SDN. On the basis of these technologies, we have developed a Virtual network controller, an OpenFlow-based controller that can be used both within a datacenter and between datacenters because it supports both hop-by-hop and overlay configurations [4] (Fig. 3). We are currently testing the interoperability of this controller with switches from major manufacturers through standardization activities.
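To make requirements (2) and (3) more concrete, the following Python sketch models the match/action idea behind OpenFlow: a central controller records which port each tenant's virtual machine sits behind and rewrites a switch's flow table when that machine migrates. Everything in it (the class names, the `tenant` match field, priority 100, and dropping packets on a table miss) is a hypothetical simplification for illustration; it is neither the OpenFlow wire protocol nor the API of NTT DATA's Virtual network controller.

```python
"""Toy model of an SDN controller programming match/action flow entries.

Hypothetical sketch: class names, the "tenant" match field, and priority
values are illustrative; this is neither the OpenFlow wire protocol nor
the API of any real controller.
"""
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Match = Tuple[Tuple[str, object], ...]


@dataclass(frozen=True)
class FlowEntry:
    priority: int
    match: Match  # header field -> required value; unlisted fields are wildcards
    action: str   # e.g. "output:3"

    def matches(self, packet: Dict[str, object]) -> bool:
        return all(packet.get(name) == value for name, value in self.match)


@dataclass
class Switch:
    name: str
    table: List[FlowEntry] = field(default_factory=list)

    def install(self, entry: FlowEntry) -> None:
        self.table.append(entry)
        # Highest priority first, so lookup returns the best match.
        self.table.sort(key=lambda e: e.priority, reverse=True)

    def remove(self, match: Match) -> None:
        self.table = [e for e in self.table if e.match != match]

    def forward(self, packet: Dict[str, object]) -> str:
        for entry in self.table:
            if entry.matches(packet):
                return entry.action
        return "drop"  # table miss: this toy simply drops the packet


class Controller:
    """Central view of where each tenant VM is attached (requirement (3))."""

    def __init__(self, switch: Switch) -> None:
        self.switch = switch
        self.ports: Dict[Tuple[int, str], int] = {}  # (tenant, VM MAC) -> port

    def attach_vm(self, tenant: int, mac: str, port: int) -> None:
        match: Match = (("tenant", tenant), ("dst_mac", mac))
        if (tenant, mac) in self.ports:
            # The VM migrated (requirement (2)): remove the stale entry first.
            self.switch.remove(match)
        self.ports[(tenant, mac)] = port
        self.switch.install(FlowEntry(100, match, f"output:{port}"))


sw = Switch("tor-1")
ctl = Controller(sw)
mac = "aa:00:00:00:00:01"
ctl.attach_vm(tenant=10, mac=mac, port=3)
ctl.attach_vm(tenant=20, mac=mac, port=5)  # same MAC, different tenant
ctl.attach_vm(tenant=10, mac=mac, port=7)  # tenant 10's VM moves to port 7
print(sw.forward({"tenant": 10, "dst_mac": mac}))  # output:7
print(sw.forward({"tenant": 20, "dst_mac": mac}))  # output:5
print(sw.forward({"tenant": 30, "dst_mac": mac}))  # drop
```

Matching on the tenant as well as the MAC address is what keeps two tenants with overlapping addresses isolated in this toy. A real controller would express the tenant through VLAN IDs, tunnel keys, or similar header fields, and would have to program every switch on the path (hop-by-hop) or the tunnel endpoints (overlay), both of which this single-switch sketch omits.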

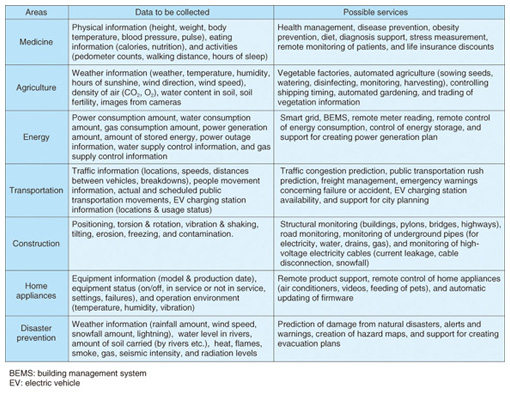

2.2 Robotics integration technology

As sensor and network technologies develop, computer systems (or machines) are becoming able to collect information about the real world by communicating with other machines, without any help from human beings. This advance makes concepts such as IoT and M2M a reality; these concepts improve efficiency, convenience, and sustainability by utilizing such real-world information. In conventional M2M systems, the servers for collecting and analyzing information from machines were developed individually. Now, virtualization and cloud technologies have enabled the M2M cloud, in which all functions, such as communicating with various devices and collecting, storing, and analyzing data, are managed centrally. Examples of M2M clouds are NTT DATA’s Xrosscloud and NEC’s CONNEXIVE. These are expected to be used for various applications (Table 2). M2M clouds have the following features.

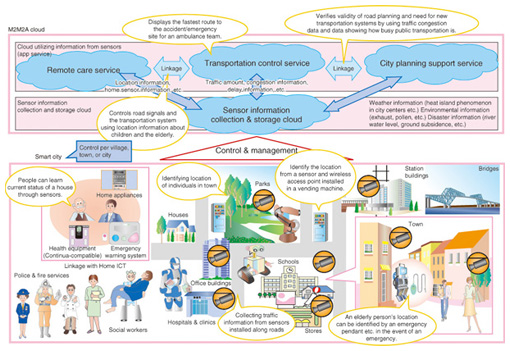

(1) Data collection and storage: An M2M cloud has functions for communicating with devices, collecting and storing data, and managing devices, so a data collection mechanism can be set up in a short time. Moreover, data from multiple sources can easily be analyzed in combination because large amounts of information can be stored in the cloud.

(2) Data analysis: The M2M cloud offers statistical calculations such as multivariate analysis as a service. Users can take advantage of distributed computing technologies such as the Hadoop Distributed File System (HDFS) and MapReduce*3 to analyze data without a large capital investment. Since this cloud also allows data trading, it will be possible in the future for a corporation to analyze its own data together with data from others.

Current M2M systems are used to collect and analyze data from devices and to visualize the analysis results or use them for data mining. In the future, these systems will probably develop into M2M2A systems, which act on the real world through devices (actuators) based on real-world information gathered from other devices. To build an M2M2A system, four functions must be added to the current M2M cloud to upgrade it into an M2M2A cloud:

- A function for analyzing information from devices and determining how to act on the real world based on the analysis
- A function for choosing which actuators and robots to activate
- A function for creating an optimum command set for delivery to the actuators and robots
- A function for controlling the actuators and robots

We are working to overcome various challenges to achieve a smart society supported by M2M2A systems (Fig. 4).
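The data flow described above, from collecting and analyzing device data through the four additional M2M2A functions, can be sketched in Python as follows. The aggregation step only imitates the map, shuffle, and reduce phases of MapReduce within a single process; an M2M cloud would run such jobs on a framework like Hadoop over HDFS. The device IDs, zones, actuator registry, 28.0 degC threshold, and command format are all hypothetical assumptions made for illustration, not part of Xrosscloud, CONNEXIVE, or any other M2M cloud API.

```python
"""Toy M2M -> M2M2A loop: aggregate sensor data, then act through actuators.

Everything here is hypothetical: device IDs, zones, the 28.0 degC threshold,
the actuator registry, and the command format are illustrative assumptions.
"""
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

Reading = Tuple[str, str, float]  # (device_id, zone, temperature in degC)
Pair = Tuple[str, Tuple[float, int]]


def map_phase(readings: Iterable[Reading]) -> List[Pair]:
    # Emit (key, value) pairs: zone -> (temperature, count of 1).
    return [(zone, (temp, 1)) for _dev, zone, temp in readings]


def shuffle(pairs: Iterable[Pair]) -> Dict[str, List[Tuple[float, int]]]:
    # Group values by key, as a MapReduce framework does between phases.
    groups: Dict[str, List[Tuple[float, int]]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[Tuple[float, int]]]) -> Dict[str, float]:
    # Combine partial sums and counts into a per-zone mean.
    means: Dict[str, float] = {}
    for zone, values in groups.items():
        total = sum(v[0] for v in values)
        count = sum(v[1] for v in values)
        means[zone] = total / count
    return means


# --- The four functions the text says an M2M2A cloud needs ---

def decide(means: Dict[str, float], limit: float = 28.0) -> Dict[str, str]:
    # (1) Analyze the data and decide how to act on the real world.
    return {zone: "cool" for zone, mean in means.items() if mean > limit}


ACTUATORS = {  # hypothetical registry: zone -> actuators that can act there
    "hall-a": ["fan-a1", "ac-a1"],
    "hall-b": ["fan-b1"],
}


def choose_actuators(decisions: Dict[str, str]) -> Dict[str, List[str]]:
    # (2) Choose which actuators to activate for each decision.
    return {zone: ACTUATORS.get(zone, []) for zone in decisions}


def build_commands(decisions: Dict[str, str],
                   chosen: Dict[str, List[str]]) -> List[Dict[str, str]]:
    # (3) Turn decisions into a concrete, deterministically ordered command set.
    commands = []
    for zone in sorted(chosen):
        for actuator in chosen[zone]:
            commands.append({"actuator": actuator, "op": decisions[zone]})
    return commands


def control(commands: List[Dict[str, str]],
            send: Callable[[Dict[str, str]], None]) -> int:
    # (4) Deliver each command; `send` stands in for the real device channel.
    for cmd in commands:
        send(cmd)
    return len(commands)


readings = [
    ("s1", "hall-a", 29.0), ("s2", "hall-a", 31.0),
    ("s3", "hall-b", 24.0), ("s4", "hall-b", 26.0),
]
means = reduce_phase(shuffle(map_phase(readings)))
decisions = decide(means)
sent: List[Dict[str, str]] = []
control(build_commands(decisions, choose_actuators(decisions)), sent.append)
print(means)  # {'hall-a': 30.0, 'hall-b': 25.0}
print(sent)   # [{'actuator': 'fan-a1', 'op': 'cool'}, {'actuator': 'ac-a1', 'op': 'cool'}]
```

Keeping the four functions separate mirrors the list above: the decision rule, the actuator registry, and the delivery channel (`send`) can each be replaced without touching the others, which is one plausible way to extend an existing M2M pipeline rather than rebuild it.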

2.3 Communication advancement technology

Apps (applications) with a voice-based interface have recently been appearing on smartphones, including NTT DOCOMO’s Shabette Concier (talking concierge) service and call interpretation service, and Apple’s Siri. Communication between things and people is now also possible. Such apps are made possible by media analysis technology, such as voice recognition and machine translation, together with the capabilities of the cloud, including processing power, the massive databases used for voice recognition and translation, and coordination of services according to the analysis results.

As the corporate environment changes, there is demand to lower linguistic barriers so that domestic corporations can expand their business overseas or manage offshore outsourcing. To help such corporations, NTT DATA is currently developing a tool that helps offshore outsourcers create design documents in Japanese, reducing the burden of creating them and improving document quality, as well as a global meeting support system that enables smooth communication in multilingual meetings.

3. Future prospects

ICT systems are expected to contribute to value creation while providing efficiency and speed. To create services with new value, everyone involved in the business, including developers and operators, must cooperate. This idea gave rise to the term DevOps [5]. To repeat development and operation in short cycles, it is necessary to establish a DevOps infrastructure that enables applications to be distributed. We will continue our research and development to create an environment where service providers can offer any service without being aware of the ICT infrastructure itself.

References
