Regular Articles
Vol. 11, No. 7, pp. 34–41, July 2013. https://doi.org/10.53829/ntr201307ra1

Multichannel Audio Transmission over IP Network by MPEG-4 ALS and Audio Rate Oriented Adaptive Bit-rate Video Codec

Abstract

This article describes an experiment on lossless audio transmission over an Internet protocol (IP) network and introduces a prototype codec that combines lossless audio coding and variable bit rate video coding. In the experiment, 16-channel acoustic signals compressed by lossless audio coding (MPEG-4 Audio lossless coding (ALS) standardized by the Moving Picture Experts Group) were transmitted from a live venue to a café via the IP network. At the café, the received sound data were decoded losslessly and then remixed to suit the environment at that location. The combination of high-definition video and audio data enables fans to enjoy a live musical performance in places other than the live venue. This experiment motivated us to develop a new prototype codec that guarantees high audio quality. The developed codec can control the bit rates of both audio and video signals jointly, and it achieves high audio and video quality.

1. Introduction

Network quality has improved recently, and storage capacity has also been expanding rapidly. The Next Generation Network (NGN) can provide high-quality, secure, and reliable services [1]. This high-bit-rate, almost error-free, low-delay communication network makes it possible to transmit high-definition content almost in real time [2]. In this environment, lossless codecs (coders/decoders) have come into widespread use [3]–[7]. Audio lossless coding can perfectly reconstruct the signal from the bit stream. Because network bit rates and disk space are increasing, users can now choose not only the efficiency of lossy coding, at the sacrifice of quality, but also the reliability of acoustic signals offered by lossless coding.
However, since the size of bit streams encoded by a lossless coder depends on the characteristics of the input signals, lossless coding results in a variable bit rate that cannot be controlled. This is in contrast to lossy coding such as MPEG*1-2/4 advanced audio coding (AAC), which is used in portable music players and broadcasts [3], [4], [8]–[10]. One of the most promising applications of broadband networks including NGN is high-quality video and audio transmission. Digital cinema is one potential application of this, as is content distribution from popular theaters and music halls. We sometimes refer to such a content delivery system as other digital stuff or online digital sources (ODS) [11], [12]. In Japan, several musical artists such as the Takarazuka Revue Company [13], X-Japan, and L’Arc~en~Ciel [14] have provided live performances in real time to fans located in other places such as movie theaters. Video signals are normally compressed by ITU-T H.264/MPEG-4 AVC*2, and audio signals by AAC, to save bit rate. Even though these transmissions carry music content, a lossy codec has been used for the sound data. In addition, multichannel audio signals from the live stage are sometimes mixed down to two channels, and the processed stereo data are then transmitted to the other venue. Lossy compression and down-mixing are reasonable when the speaker settings are defined and the acoustic characteristics are the same at each place of delivery, but such assumptions are obviously not realistic. Consequently, there is room to improve the audio quality of ODS. One feasible idea is to transmit the sound of the musical instruments as-is (i.e., without down-mixing or lossy coding) and to down-mix at each local site. To explore this way of providing high-quality music, we carried out an experiment on lossless transmission of sound data.
MPEG-4 Audio lossless coding (ALS) [15]–[17] was used to losslessly encode the 16-channel musical instrument data because it is an international standard technology and supports multichannel audio signals. The compressed audio data were transmitted from a live music venue (sender) to a café via an Internet protocol (IP) network. Although the bit rate of the lossless audio was larger than that of lossy audio would have been, we did not have to worry about degradation of the sound waveforms. At the café, the transmitted sound data were decoded without any loss and remixed to suit the environment of the site. Lossless audio coding allowed the listeners in the offsite venue to enjoy the concert with customized audio data. The experimental result from this trial motivated us to develop a new prototype codec that can control the bit rate of video and audio. Audio quality is guaranteed, and higher video quality is achieved by making use of extra bits saved by lossless audio compression. The remainder of this article is organized as follows. We report on the lossless audio transmission experiment in section 2 and introduce the prototype codec in section 3. Finally, we conclude the article in section 4.

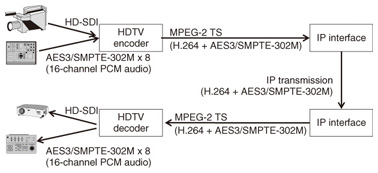

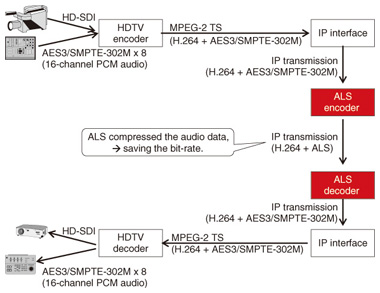

2. Investigation of lossless compression efficiency in demonstrative trial

2.1 Configuration

As described in the previous section, we carried out an experiment of lossless transmission of sound data to provide high-quality music. We used the high-definition television (HDTV) encoder/decoder (HV9100 series by NTT Electronics Corp. (NEL)) and the IP interface (NA5000 by NEL) as shown in Figs. 1 and 2. The HDTV encoder output an MPEG-2 transport stream (TS), which included eight pairs of AES3/SMPTE-302M*3 (i.e., 16-channel pulse-code modulation (PCM) audio signals) bitstreams that were input from a digital mixer and an H.264 bitstream that consisted of the encoded high-definition serial digital interface (HD-SDI) data from a high-vision camera. The MPEG-2 TS bitstream was converted to IP packets by the IP interface. Audio and video data were transmitted over the IP network. After transmission, an IP interface and HDTV decoder carried out the reverse operations. Finally, we obtained high-vision data and 16-channel PCM audio signals.

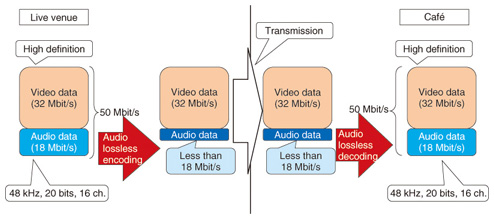

Although this existing setting (Fig. 1) can provide high-quality sound, it wastes network resources because there are redundant audio signals. From an ecological and economical viewpoint, it would be preferable to reduce the cost of the network without any degradation of audio quality. For this experiment, we added the ALS encoder/decoder to save the bit rate, as shown in Fig. 2. Before IP transmission, the ALS encoder losslessly compressed eight pairs of the AES3/SMPTE-302M bitstreams. After the ALS decoder had received the encoded IP packets, it reconstructed the original IP packets and sent them to the IP interface. Then, the IP interface and HDTV decoder operated as if no processing had taken place. The total bit rate was set to 50 Mbit/s (Fig. 3). The audio bit rate was about 18 Mbit/s because 16-channel signals were digitized at 48 kHz and 20 bits (four more bits were required for AES3/SMPTE-302M). Therefore, 32 Mbit/s was needed for the target bit rate of H.264 (video), which was not varied during the experiment. Then, the audio data were losslessly encoded by ALS, and the bit rate of the ALS bitstream was reduced to less than 18 Mbit/s, depending on the input signals. The transmitted ALS bitstreams were perfectly decoded to PCM audio signals.
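The bit-rate budget above can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the experimental system; the function name `pcm_bit_rate` is introduced here for illustration, and the calculation assumes the audio rate is simply channels × sampling rate × bits per sample, ignoring any transport-stream overhead.

```python
def pcm_bit_rate(channels: int, sample_rate_hz: int, bits_per_sample: int) -> int:
    """Uncompressed PCM bit rate in bit/s (illustrative helper, not from the article)."""
    return channels * sample_rate_hz * bits_per_sample


# 20-bit samples plus the 4 extra bits per sample that the article
# attributes to AES3/SMPTE-302M framing.
BITS_PER_SAMPLE = 20 + 4

audio_bps = pcm_bit_rate(channels=16, sample_rate_hz=48_000,
                         bits_per_sample=BITS_PER_SAMPLE)
total_bps = 50_000_000           # total bit rate used in the trial
video_bps = total_bps - audio_bps

print(f"audio: {audio_bps / 1e6:.3f} Mbit/s")  # audio: 18.432 Mbit/s
print(f"video: {video_bps / 1e6:.3f} Mbit/s")  # video: 31.568 Mbit/s
```

The unrounded figures (18.432 and 31.568 Mbit/s) are consistent with the rounded values of about 18 and 32 Mbit/s quoted in the text.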

2.2 Experimental results

This experiment was conducted on March 19, 2009 from 7:00 to 10:00 PM. There were about 80 listeners at the live venue and around 100 at the café. The sender and receiver sites are shown in Figs. 4 and 5, respectively.

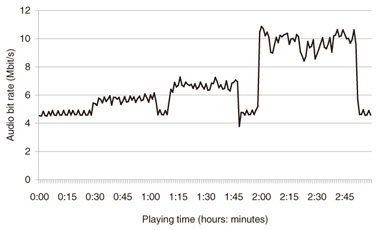

The bit rate of the ALS bitstreams on average for each minute is shown in Fig. 6. The audio signals are losslessly compressed to 21% (3.78 Mbit/s)–60% (10.87 Mbit/s) of the original data size. The bit rate increased as the performance progressed because the number of musicians (i.e., musical instruments) also increased. In summary, the ALS was able to save about 11.2 Mbit/s on average, so the total bit rate became around 40 Mbit/s.
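As a quick consistency check on these figures, the sketch below recomputes the compression ratios and the resulting total bit rate. It assumes the percentages are taken relative to the rounded 18 Mbit/s PCM audio rate, which is what reproduces the quoted 21% and 60%; the variable names are illustrative only.

```python
PCM_AUDIO_MBPS = 18.0   # rounded PCM audio rate quoted in the article
TOTAL_MBPS = 50.0       # total bit rate of the original setting


def compression_ratio(compressed_mbps: float, original_mbps: float) -> float:
    """Compressed size as a fraction of the original size."""
    return compressed_mbps / original_mbps


best = compression_ratio(3.78, PCM_AUDIO_MBPS)    # lowest per-minute ALS rate
worst = compression_ratio(10.87, PCM_AUDIO_MBPS)  # highest per-minute ALS rate
mean_saving_mbps = 11.2                           # average saving reported

print(f"best ratio:  {best:.0%}")    # best ratio:  21%
print(f"worst ratio: {worst:.0%}")   # worst ratio: 60%
print(f"total after ALS: {TOTAL_MBPS - mean_saving_mbps:.1f} Mbit/s")  # total after ALS: 38.8 Mbit/s
```

The 38.8 Mbit/s result matches the "around 40 Mbit/s" stated above.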

Sound data were appropriately remixed for adjustment to the environment of the receiving site. In this experiment, it was easy for the mixing engineers (also known as public address or sound reinforcement engineers) at the café to carry out their remixing tasks because the original sound data came from the live venue. We received positive feedback from the invited guests, and we believe that assigning a higher bit rate for multichannel audio signals was key in the success of the experiment. Our experiment suggests that the combination of high-definition video and audio data, especially ODS with lossless audio coding such as the ALS, will enable fans to enjoy live musical performances from offsite locations. We think this trial is a good example of a content delivery service with high-quality audio. As far as we know, this is the first experiment on real-time lossless audio transmission (especially ALS) with HD video via an IP network.

3. Prototype of audio rate-oriented adaptive bit-rate video codec

3.1 Concept of the developed codec

The results of the experiment described in the previous section indicate that we can reduce the bit rate of audio signals by approximately half by using ALS. For real-time streaming, however, the channel must still be provisioned for the worst-case audio bit rate (i.e., the PCM rate). We therefore developed a prototype of an audio bit-rate-oriented adaptive bit-rate video codec to determine whether we could make efficient use of the bit rate that ALS frees up, as shown in Fig. 7. The bit rate saved by using ALS can then be reallocated to the H.264 video encoder.

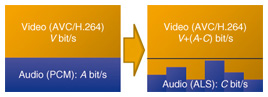

Let V be the bit rate of video, A be that of audio, and C be that of losslessly compressed audio (i.e., C≤A). As shown in Fig. 8, the conventional codec needs V + A bit/s to transmit the data. By contrast, the developed codec can provide the additional bits for the video codec. Therefore, the video codec can use V + (A - C) bit/s, and the audio codec needs only C bit/s. The video quality is therefore enhanced. The total bit rate for the TS is the same, V + A bit/s, and the audio quality is also the same because we use lossless coding.
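The allocation rule described here can be written as a short function. This is a minimal sketch under the article's assumption that C ≤ A; the names `Allocation` and `allocate` are hypothetical, the example values are not measurements, and how the prototype actually reacts when the compressed rate exceeds the PCM rate is not stated in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Allocation:
    video_bps: int  # bit rate handed to the video encoder
    audio_bps: int  # bit rate consumed by the lossless audio stream


def allocate(v_bps: int, a_bps: int, c_bps: int) -> Allocation:
    """Split a fixed V + A budget, giving the audio saving (A - C) to video."""
    if not 0 <= c_bps <= a_bps:
        # The article assumes C <= A; rejecting other inputs is a choice made
        # for this sketch, not documented behavior of the prototype.
        raise ValueError("expected 0 <= C <= A")
    return Allocation(video_bps=v_bps + (a_bps - c_bps), audio_bps=c_bps)


# Hypothetical example values, not measurements from the article.
V, A, C = 18_000_000, 7_000_000, 3_000_000
alloc = allocate(V, A, C)
print(alloc.video_bps, alloc.audio_bps)  # 22000000 3000000

# The total transport-stream rate is unchanged: V + (A - C) + C == V + A.
assert alloc.video_bps + alloc.audio_bps == V + A
```

Because C varies with the input signal, a real encoder would presumably re-evaluate this split at regular intervals rather than once.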

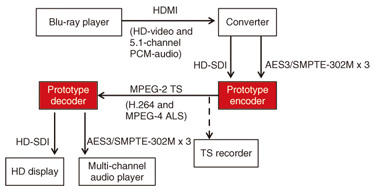

3.2 Preliminary experiment with the developed codec

The prototype encoder supports:

- HD-SDI signal input → H.264 bitstream output
- Three AES3/SMPTE-302M inputs (i.e., up to 6 channels) → MPEG-4 ALS bitstream output

The decoder supports:

- H.264 bitstream input → HD-SDI signal output
- MPEG-4 ALS bitstream input → three AES3/SMPTE-302M outputs

In the preliminary experiment with the developed codec, the TS rate was set to 25 Mbit/s because we assumed 30 Mbit/s for the NGN operation for IP transmission (which is reasonable for users) and because forward error correction usually requires 10% of the bit rate. Content from an opera performed and provided by a famous Japanese opera company was used as input signals. A diagram of the settings for the preliminary experiment is shown in Fig. 9. A Blu-ray player output an HDMI (high-definition multimedia interface) signal, and the converter divided it into HD-SDI and AES3/SMPTE-302M signals. The prototype encoder produced the H.264 bitstream from the obtained HD-SDI signal, of which the target bit rate depends on the bit rate of audio data compressed by the ALS, and produced the ALS bitstream from the AES3/SMPTE-302M signals. The decoder then output the HD-SDI signal and reconstructed the AES3/SMPTE-302M signals losslessly.

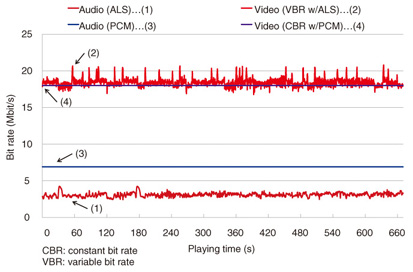

We captured TS bitstreams and analyzed them in order to evaluate the performance of the prototype codec. Without lossless audio coding, the audio requires 6.9 Mbit/s (48 kHz × 24 bits × 6 channels), which leaves only about 18 Mbit/s for video. In contrast, as shown in Fig. 10, the video can use more than 18 Mbit/s (18.40 ± 0.55 Mbit/s, mean ± standard deviation) because the audio is compressed to around 3 Mbit/s (3.10 ± 0.26 Mbit/s, mean ± standard deviation). In summary, video quality is improved by utilizing lossless audio compression.
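The numbers in this paragraph can be put side by side with a short calculation. The sketch below is illustrative only: it assumes the 25 Mbit/s TS carries nothing but audio and video, so the computed ceiling is an upper bound that ignores packetization overhead, and all variable names are introduced here.

```python
TS_MBPS = 25.0                       # transport-stream rate in the preliminary experiment
pcm_mbps = 48_000 * 24 * 6 / 1e6     # uncompressed 6-channel audio
video_without_als = TS_MBPS - pcm_mbps

ALS_MEAN_MBPS = 3.10                 # mean ALS audio rate reported
VIDEO_MEAN_MBPS = 18.40              # mean video rate reported

# Upper bound on the video rate if every saved audio bit went to video.
video_ceiling = TS_MBPS - ALS_MEAN_MBPS
gain = VIDEO_MEAN_MBPS - video_without_als
unused = video_ceiling - VIDEO_MEAN_MBPS

print(f"PCM audio:           {pcm_mbps:.3f} Mbit/s")           # 6.912
print(f"video without ALS:   {video_without_als:.3f} Mbit/s")  # 18.088
print(f"observed video gain: {gain:.3f} Mbit/s")               # 0.312
print(f"unused headroom:     {unused:.2f} Mbit/s")             # 3.50
```

Under these assumptions, the measured video rate uses only a small part of the bit rate freed by ALS, which leaves considerable headroom for a less conservative rate-control configuration.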

This codec is still a prototype, so we decided to use low-risk, low-return settings for the bit rate and adaptive bit-rate control. We can achieve better quality by fine-tuning these settings.

4. Conclusion

We conducted an experiment on lossless audio transmission via an IP network. Multichannel audio signals compressed by MPEG-4 ALS and high-definition video signals were transmitted from a live venue to a café to provide high-quality music for an off-site audience. This experimental result motivated us to develop a prototype codec that controls the allocation of bit rate between video and audio. Audio quality is guaranteed by lossless compression, and higher video quality is achieved because the video encoder can use the bits saved by the audio compression while the total bit rate remains constant. After the prototype codec is refined, we will be able to transmit higher quality content such as operas, musicals, and concerts. Japanese broadcasting standards support MPEG-4 ALS for downloading 22.2-channel high-definition audio [18], and the developed audio/video codec was used to transmit the high-quality multichannel audio signal of a Takarazuka Revue performance [19]. Thus, the described technologies are expected to be widely used in the near future.

Acknowledgements

We thank all of the engineers who assisted with the development and experiments.

References
