Feature Articles: Video Technology for 4K/8K Services with Ultrahigh Sense of Presence

Vol. 12, No. 5, pp. 30–36, May 2014. https://doi.org/10.53829/ntr201405fa6

Next-generation Media Transport MMT for 4K/8K Video Transmission

Abstract

MPEG Media Transport (MMT) for heterogeneous environments is being developed as part of the ISO/IEC (International Organization for Standardization/International Electrotechnical Commission) 23008 standard. In this article, we give an overview of the MMT standard and explain in detail the MMT LDGM (low-density generator matrix) FEC (forward error correction) codes proposed by NTT Network Innovation Laboratories. We also explain remote collaboration for content creation as a use case of the MMT standard.

Keywords: MPEG Media Transport, Low-density Generator Matrix Codes, ISO/IEC

1. Introduction

It has been about 20 years since the widely used MPEG-2 TS (MPEG-2 Transport Stream) standard*1 was developed by ISO/IEC MPEG (Moving Picture Experts Group). Since then, media content delivery environments have changed. Video signals have diversified, and 4K/8K video systems have been developed. Moreover, there is now a wide variety of fixed and mobile networks and of client terminals that display multi-format signals. The MPEG Media Transport (MMT) standard [1] is being developed as Part 1 of ISO/IEC 23008 for heterogeneous environments. MMT specifies technologies for the delivery of coded media data for multimedia services over concatenated heterogeneous packet-based network segments, including bidirectional IP networks and unidirectional digital broadcasting networks. NTT Network Innovation Laboratories has developed 4K transmission technologies for digital cinema, ODS (other digital stuff)*2, super telepresence, and other applications. We have contributed to the standardization of MMT in order to promote the adoption of core 4K transmission technologies such as forward error correction (FEC) codes and layered signal processing.

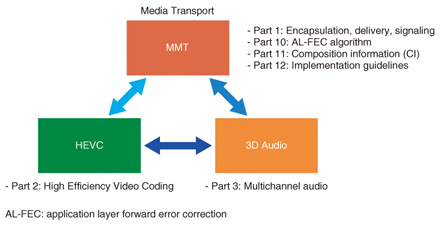

2. MMT overview

MPEG-2 TS provides efficient mechanisms for multiplexing multiple audio-visual data streams into one delivery stream. Audio-visual data streams are packetized into small fixed-size packets and interleaved to form a single stream. This design principle makes MPEG-2 TS effective for streaming multimedia content to a large number of users. In recent years, however, it has become clear that the MPEG-2 TS standard faces several technical challenges arising from changes in multimedia service environments. One particular example is that the pre-multiplexed stream of fixed-size 188-byte MPEG-2 TS packets is poorly suited to IP (Internet Protocol)-based delivery of emerging 4K/8K video services because of the small, fixed packet size and the rigid packetization and multiplexing rules. In addition, it may be difficult for MPEG-2 TS to deliver the multilayer coded data of scalable video coding (SVC) or multi-view coding (MVC) over multiple delivery channels. Error resiliency is also insufficient. To address these technical weaknesses of existing standards and to support the wider needs of network-friendly transport of multimedia over heterogeneous network environments, including next-generation broadcasting systems, MPEG has been developing transport and synchronization technologies for a new international standard, namely MMT, as part of the ISO/IEC 23008 High Efficiency Coding and Media Delivery in Heterogeneous Environments (MPEG-H) standard suite. An overview of the MPEG-H standard is shown in Fig. 1. The MMT standard consists of Parts 1, 10, 11, and 12.

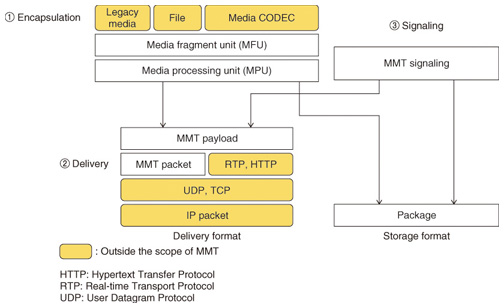

The protocol stack of MMT, which is specified in MPEG-H Part 1 [2], is shown in Fig. 2. The white boxes indicate the areas in the scope of the MMT specifications. MMT adopts technologies from three major functional areas.

1) Encapsulation: coded audio and video signals are encapsulated into media fragment units (MFUs)/media processing units (MPUs).

2) Delivery: an MMT payload is constructed by aggregating MPUs or fragmenting one MFU. The size of an MMT payload needs to be appropriate for delivery. An MMT packet is carried on IP-based protocols such as the User Datagram Protocol (UDP) or Transmission Control Protocol (TCP). It is a variable-length packet appropriate for delivery in IP packets. Each packet contains one MMT payload, and MMT packets containing different types of data and signaling messages can be transferred in one IP data flow.

3) Signaling: MMT signaling messages provide information for media consumption and delivery to the MMT client.
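The aggregation and fragmentation step in the delivery function can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the payload format defined in MPEG-H Part 1: the `Payload` class, the `build_payloads` function, the `kind` labels, and the 1200-byte size limit are all hypothetical, and real payloads also carry headers and length fields that are omitted here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Payload:
    kind: str    # "aggregated" or "fragment" (labels invented for this sketch)
    data: bytes


def build_payloads(units: List[bytes], max_size: int) -> List[Payload]:
    """Pack small units together and split units that exceed max_size."""
    payloads: List[Payload] = []
    pending: List[bytes] = []
    pending_len = 0

    def flush() -> None:
        # Emit the units collected so far as one aggregated payload.
        nonlocal pending, pending_len
        if pending:
            payloads.append(Payload("aggregated", b"".join(pending)))
            pending, pending_len = [], 0

    for unit in units:
        if len(unit) > max_size:
            # Too large for one payload: cut it into max_size pieces.
            flush()
            for off in range(0, len(unit), max_size):
                payloads.append(Payload("fragment", unit[off:off + max_size]))
        else:
            if pending_len + len(unit) > max_size:
                flush()
            pending.append(unit)
            pending_len += len(unit)
    flush()
    return payloads


units = [b"a" * 300, b"b" * 400, b"c" * 3000, b"d" * 100]
for p in build_payloads(units, max_size=1200):
    print(p.kind, len(p.data))
```

The point of the sketch is only that payload sizes adapt to the delivery path, in contrast to the fixed 188-byte packets of MPEG-2 TS.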

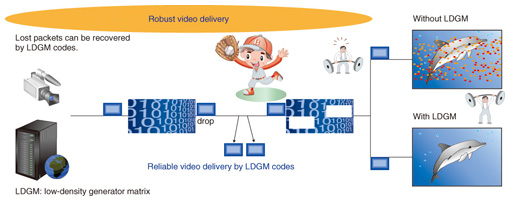

A client terminal identifies the MPUs constituting the content and their presentation times by processing the signaling messages. The presentation time is described on the basis of Coordinated Universal Time (UTC)*3. Therefore, the terminal can consume MPUs in a synchronized manner even if they are delivered on different channels from different senders. MMT defines application layer forward error correction (AL-FEC) codes in order to recover lost packets, as shown in Fig. 3. MPEG-H Part 1 specifies an AL-FEC framework, and MPEG-H Part 10 [3] specifies AL-FEC algorithms. Furthermore, MPEG-H Part 11 defines composition information (CI), which identifies the scene to be displayed using both spatial and temporal relationships among content. The functions described above are novel features of MMT that MPEG-2 TS does not provide.
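The effect of a shared UTC-based presentation time can be illustrated with a short Python sketch. It assumes that each channel delivers its units already ordered by presentation time; the `Unit` class, the `presentation_order` function, and the channel and label names are hypothetical and do not come from the MMT signaling syntax.

```python
import heapq
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Iterable, List


@dataclass(order=True)
class Unit:
    presentation_time: datetime               # UTC-based time from signaling
    channel: str = field(compare=False)       # e.g. broadcast or broadband
    label: str = field(compare=False)


def presentation_order(*channels: Iterable[Unit]) -> List[Unit]:
    """Merge per-channel sequences into one timeline ordered by UTC time."""
    return list(heapq.merge(*channels))


t0 = datetime(2014, 5, 1, 12, 0, 0, tzinfo=timezone.utc)
one_second = timedelta(seconds=1)
broadcast = [Unit(t0, "broadcast", "main-0"),
             Unit(t0 + one_second, "broadcast", "main-1")]
broadband = [Unit(t0, "broadband", "alt-0"),
             Unit(t0 + one_second, "broadband", "alt-1")]

for unit in presentation_order(broadcast, broadband):
    print(unit.presentation_time.isoformat(), unit.channel, unit.label)
```

Because both senders stamp their units against the same clock, the terminal needs no per-stream clock recovery to align them; units with the same timestamp are simply presented together.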

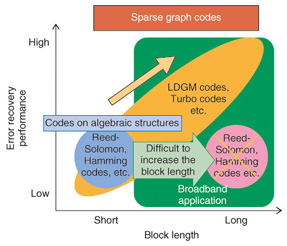

3. Powerful FEC codes

MPEG-H Part 10 defines several AL-FEC algorithms, including Reed-Solomon (RS) codes*4 and low-density generator matrix (LDGM) codes proposed by NTT Network Innovation Laboratories. Each AL-FEC code has advantages and disadvantages in terms of error recovery performance and computational complexity. RS codes are based on algebraic structures. They are optimal in the sense that they are maximum distance separable (MDS) codes. However, it is difficult to increase the block length of an RS code because of the cost of its polynomial-time decoding process. RS codes also cannot reach Shannon’s capacity*5, which gives the theoretical limit. This can result in low coding efficiency and excessive computation overhead. Unlike RS codes, LDGM codes can handle block sizes of several thousand packets or more because of their very low computational complexity. LDGM codes are therefore particularly suitable for the transmission of huge data sets such as 4K/8K video (Fig. 4).

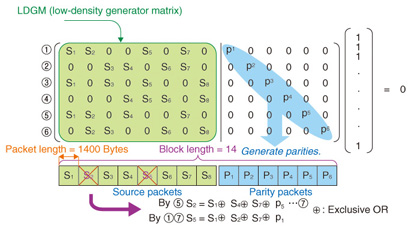

LDGM codes are one type of linear code; the parity check matrix H*6 includes a lower triangular matrix (LDGM structure) and contains mostly 0’s and only a small number of 1’s. An example of LDGM encoding and decoding procedures is shown in Fig. 5. Using a sparse parity generator matrix makes it possible to process over a thousand IP packets as a single code block, which offers robustness against packet erasures in networks. Our proposed LDGM codes provide good error recovery performance while keeping the computational complexity low because a sub-optimal parity generator matrix is used with the message passing algorithm (MPA). Furthermore, the proposed LDGM codes can use irregular matrices that provide good error recovery performance for both MPA and MLD (maximum likelihood decoding).
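The encoding and decoding idea can be sketched in Python as follows. This is a minimal illustration, not the matrix or procedure specified in MPEG-H Part 10. The staircase-style lower triangular parity part, the deterministic rule that attaches each source packet to two checks, and the names `make_rows`, `encode`, and `decode` are all assumptions made for this sketch. The decoder is a simple iterative erasure decoder in the spirit of message passing: it repeatedly looks for a check equation with exactly one missing packet and recovers that packet by XOR.

```python
from typing import List, Optional


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def make_rows(k: int, m: int) -> List[List[int]]:
    """Sparse check rows; indices 0..k-1 are source, k..k+m-1 are parity."""
    if m < 2:
        raise ValueError("this sketch needs at least two parity packets")
    rows: List[List[int]] = [[] for _ in range(m)]
    for j in range(k):
        first = j % m
        second = (first + 1 + (j // m) % (m - 1)) % m  # always != first
        rows[first].append(j)
        rows[second].append(j)
    for i in range(m):
        rows[i].append(k + i)              # diagonal of the triangular part
        if i > 0:
            rows[i].append(k + i - 1)      # staircase entry below the diagonal
    return rows


def encode(source: List[bytes], rows: List[List[int]]) -> List[bytes]:
    """Append one parity packet per row so that every row XORs to zero."""
    k = len(source)
    symbols = list(source)
    for i, row in enumerate(rows):
        parity = bytes(len(source[0]))
        for j in row:
            if j != k + i:
                parity = xor(parity, symbols[j])
        symbols.append(parity)
    return symbols


def decode(received: List[Optional[bytes]], rows: List[List[int]],
           k: int) -> List[Optional[bytes]]:
    """Iteratively fill in erased packets (None); returns the k source slots."""
    symbols = list(received)
    size = len(next(s for s in symbols if s is not None))
    progress = True
    while progress:
        progress = False
        for row in rows:
            missing = [j for j in row if symbols[j] is None]
            if len(missing) == 1:
                value = bytes(size)
                for j in row:
                    if symbols[j] is not None:
                        value = xor(value, symbols[j])
                symbols[missing[0]] = value
                progress = True
    return symbols[:k]


source = [bytes([i]) * 4 for i in range(6)]
rows = make_rows(k=6, m=3)
sent = encode(source, rows)
received = [None if i in (0, 3) else s for i, s in enumerate(sent)]
print(decode(received, rows, k=6) == source)
```

Each recovery step touches only the few packets listed in one sparse row, which is why the per-packet work stays small even when the code block is large. The iterative decoder can stall on erasure patterns that a maximum likelihood decoder would still solve.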

The specified LDGM codes also support the following two schemes. One is a sub-packet division and interleaving method [4]. Generally, LDGM codes provide superior error recovery performance for large code-block sizes. However, their error recovery performance for short code blocks is inferior to that of RS codes. The proposed sub-packet division and interleaving LDGM scheme solves this problem: it increases the number of symbols in one code block and thereby improves the error recovery performance. The other is a Layer-Aware LDGM (LA-LDGM) scheme [5]. The structure of conventional LDGM codes does not support partial decoding. When conventional LDGM codes are used for scalable video data such as JPEG2000 code streams (JPEG2000 was created by the Joint Photographic Experts Group (JPEG)) or SVC code streams, performance is low and scalability is lost. The LA-LDGM scheme maintains the corresponding relationship of each layer, and the resulting structure supports partial decoding. Furthermore, the LA-LDGM codes create highly efficient parity data by considering the relationships among the layers. Therefore, LA-LDGM codes raise the probability of recovering lost packets.
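The way sub-packet division raises the symbol count of a short code block can be sketched in Python. This shows one plausible arrangement only; the actual division and interleaving rule of the scheme in [4] may differ. The function names `divide`, `interleave`, and `erased_positions`, and the column-by-column interleaving order, are assumptions made for illustration.

```python
from typing import List


def divide(packets: List[bytes], d: int) -> List[List[bytes]]:
    """Split every packet into d equal-size sub-packets."""
    size = len(packets[0])
    if size % d != 0:
        raise ValueError("packet size must be a multiple of d in this sketch")
    step = size // d
    return [[p[r * step:(r + 1) * step] for r in range(d)] for p in packets]


def interleave(sub: List[List[bytes]]) -> List[bytes]:
    """Order symbols so that sub-packets of one packet are k positions apart."""
    k, d = len(sub), len(sub[0])
    return [sub[p][r] for r in range(d) for p in range(k)]


def erased_positions(lost_packet: int, k: int, d: int) -> List[int]:
    """Symbol positions erased when one transmitted packet is lost."""
    return [r * k + lost_packet for r in range(d)]


packets = [bytes([i]) * 8 for i in range(4)]   # a short block of 4 packets
symbols = interleave(divide(packets, d=2))     # now 8 FEC symbols
print(len(packets), "packets ->", len(symbols), "symbols")
print("loss of packet 1 erases symbols", erased_positions(1, k=4, d=2))
```

With d sub-packets per packet, a block of k packets is coded as k × d symbols, so a sparse code is applied to a longer block than the packet count alone would allow.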

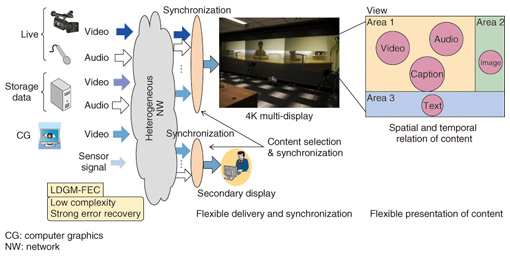

4. Use cases of MMT standard

MMT has various functions. Several representative MMT functions are shown in Fig. 6. Reliable 4K/8K video transmission via public IP networks is one of the typical features of MMT, owing to its high error recovery capability. Synchronization*7 between the content on a large screen and the content on a second display can also be achieved with MMT. For example, users can enjoy public viewing while displaying alternative camera views on their second display. Different content selected by a user can be transported simultaneously via different networks. Hybrid delivery of content in next-generation Super Hi-Vision broadband systems can also be achieved. Applications such as widgets can be used to present information together with television (TV) programs. In particular, the large and extremely high-resolution monitors needed for displaying Super Hi-Vision content would be able to present various kinds of information obtained from broadband networks together with TV programs from broadcasting channels.

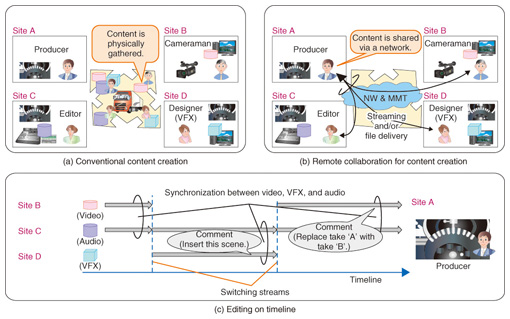

5. Remote collaboration for content creation [6]

High-quality video productions, such as films and TV programs made in Hollywood, are based on the division of labor. The production companies for video, visual effects (VFX), audio, and other elements of a production all create their content in different locations. Post-production is the final stage in filmmaking, in which the raw materials are edited together to form the completed film. In conventional program production, as shown in Fig. 7(a), these raw materials are gathered physically from the separately located production sites. An overview of remote collaboration for content creation and of editing actions on a timeline is shown in Fig. 7(b) and (c). By using the MMT standard, multiple forms of content such as video, VFX, and audio are shared via the network, and the selected content can then be synchronized at the producer’s request. The staff members at each location perform their tasks and share their comments simultaneously. A remote collaboration system that uses MMT speeds up decision-making and can thereby increase the efficiency and productivity of content creation. This is one example use case of the MMT standard. Various other services are expected to emerge with the full implementation of MMT standard technologies.

6. Future direction

NTT Network Innovation Laboratories will continue to promote innovative research and development of reliable and sophisticated transport technologies, such as stable video transmission on shared networks consisting of multi-domain networks and virtual network switching, to achieve dynamically configurable remote collaboration.

References
