
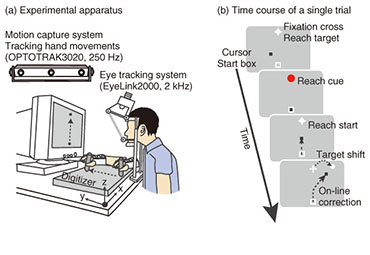

Regular Articles Vol. 12, No. 7, pp. 30–37, July 2014. https://doi.org/10.53829/ntr201407ra1

Understanding the Coordination Mechanisms of Gaze and Arm Movements

Abstract

We report on the coordination mechanisms of gaze and arm movements in the visuomotor control process. The brain information-processing mechanisms underlying the eyes, arms, and their coordinated movements need to be understood in order to design sophisticated and user-friendly interactive human-machine interfaces. In this article, we first review the experimental research on visuomotor control, primarily from the viewpoints of eye-hand coordination and online feedback control. Then, we introduce our recent experimental studies investigating the eye-hand coordination mechanism during online feedback control. The experimental results provide evidence that reaching corrections that are rapidly and automatically induced by visual perturbations are influenced by changes in gaze direction. These results suggest that an online reach controller closely interacts with gaze systems.

Keywords: visuomotor control, eye-hand coordination, online feedback control

1. Introduction

We can make arm movements to grasp a cup, manipulate a computer with a mouse, or hit a moving fastball. These motor functions are achieved entirely by our brain’s information processing system. Gaining a deep understanding of the brain mechanisms of human motor behaviors is fundamental in order to design user-friendly interactive human-machine interfaces using future information technologies.

Humans perform various visually guided actions in daily life. To perform movements that involve reaching the arm toward a visual target, we have to look at the target and then detect its location relative to the hand. In this situation, gaze behavior should be coordinated with hand movements in a spatiotemporally appropriate manner.
Furthermore, visual information that falls on the retina is integrated with other sensory information related to gaze direction, head orientation, or hand location, so as to be transformed into desired muscle contractions. These cooperative aspects of gaze and hand systems are referred to as eye-hand coordination (discussed in detail in section 2.1).

In addition to eye-hand coordination, one important aspect of our daily actions is dynamic interaction with the external world. Consider playing tennis as an example. Tennis players have to run and hit the ball even though its flight trajectory changes irregularly. In this dynamic situation, the players must correct their reaching trajectory as quickly as possible in response to unpredictable perturbations such as a sudden shift of the target or body movement. The midflight correction of the reaching movement is mediated by an online feedback controller (discussed in detail in section 2.2).

Based on the fact that the hand and eye always move cooperatively in our daily lives [1], both motor systems seem to be tightly coupled with each other during online feedback control. However, this issue has not yet been sufficiently addressed in related research fields despite its importance.

In this article, we review recent studies on eye-hand coordination and online feedback control in section 2. In section 3, we introduce our experimental studies on eye-hand coordination during online feedback control. We conclude the article in section 4.

2. Background

2.1 Eye-hand coordination

We discuss eye-hand coordination in terms of two aspects. The first aspect is the spatiotemporal coordination of gaze and reaching behaviors. These behaviors were observed in an experimental task where participants looked at and reached toward a designated target. The arm movements in such a task are typically preceded by a rapid movement of the eye to the target, called a saccade.
Saccadic eye movement before the hand reaction can provide some crucial information to the hand motor system, for example, visual information, target representation, or motor commands [2]. Indeed, several studies have found that eye movements affect properties of concurrent hand movements such as reaction time, initial acceleration, or final position [3]–[6]. In addition, saccade and hand reaction times are temporally correlated with each other, suggesting that both motor systems share common neural processing [7], [8]. Meanwhile, it is known that arm-reaching movements can also affect gaze systems. The reaction time of a saccade differs between when it is made with an arm-reaching movement and when it is made without such a movement [9], [10]. In addition to this temporal effect, arm-reaching movement affects the landing point of each saccade, while saccades track unseen arm-reaching movements from the participant [11]. These findings suggest that the hand and eye motor systems interact with each other in visually guided reaching actions.

The second aspect of eye-hand coordination is coordinate transformation from visual to body space. When making an arm-reaching movement, the brain should code the locations of both the target and the hand in a common frame of reference so as to compute the difference vector from the hand to the target. The classical ideas on this topic suggest that body-centered coordinates are utilized for this common reference frame [12], [13]. That is, visual information about the target location on the retina is transformed into body-centered coordinates by integrating the target location on the retina with the eye orientation in the orbit, the head rotation relative to the shoulder, and the arm posture.
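
As a rough illustration of the classical body-centered scheme described above, the following minimal sketch chains the angles in one horizontal dimension. It is only a toy model under strong assumptions: the names `Posture`, `retinal_to_body`, and `difference_vector` are hypothetical, angles simply add, and the hand direction is assumed to be already known in body-centered coordinates (for example, from arm posture). It is not a model taken from the cited studies.

```python
from dataclasses import dataclass


@dataclass
class Posture:
    """Hypothetical 1-D horizontal angles in degrees (positive = rightward)."""
    eye_in_orbit: float      # eye orientation relative to the head
    head_on_shoulder: float  # head rotation relative to the shoulder


def retinal_to_body(target_on_retina: float, posture: Posture) -> float:
    """Body-centered target direction: sum of the angles along the chain."""
    return target_on_retina + posture.eye_in_orbit + posture.head_on_shoulder


def difference_vector(target_body: float, hand_body: float) -> float:
    """Hand-to-target difference in the common body-centered frame."""
    return target_body - hand_body


# Illustrative values only (not data from the article).
posture = Posture(eye_in_orbit=10.0, head_on_shoulder=-20.0)
target_body = retinal_to_body(5.0, posture)               # 5 + 10 - 20
reach = difference_vector(target_body, hand_body=-15.0)   # -5 - (-15)
print(target_body, reach)  # -5.0 10.0
```

In this simplified picture, any change in eye or head orientation has to be accounted for before the hand-to-target difference can be computed.
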
However, more recent behavioral, imaging, and neurophysiological studies have revealed that the locations of the target and hand are represented in a gaze-centered frame of reference, and the posterior parietal cortex (PPC) is involved in constructing this representation [14]–[17]. The difference vector between hand and target is also computed using this gaze-centered representation [18]. The gaze-centered representation of the target and hand should be updated quickly with each eye movement, and this updating process is called spatial remapping. This spatial remapping was found to occur predictively before the actual eye movement using oculomotor preparatory signals [19].

2.2 Online feedback control

Online feedback control in visuomotor processing has been investigated using a visual perturbation paradigm since the 1980s [20]–[22]. In this experimental paradigm, the location of the reaching target changed unexpectedly during a reaching movement. The results showed that participants can adjust their reaching trajectories rapidly and smoothly in response to such shifting targets. Interestingly, the reaction latency of this online correction is 120–150 ms after the target shift, which is much shorter than that for voluntary motor reactions to a static visual target (more than 150–200 ms) [23]. Furthermore, this correction can be initiated even when the participant is not aware of the target shift [24]. These results suggest that the online reaching correction to the target shift is mediated by reflexive mechanisms that differ from the mechanism underlying the voluntary motor reaction to a static visual target [25]. Patient [26], [27], transcranial magnetic stimulation [28], and imaging [29], [30] studies have found that the PPC plays a significant role in producing this rapid and reflexive online reaching correction.

As mentioned in the Introduction, we have to respond not only to external changes (i.e., target shifts) but also to our own body movements. When such body movements occur, the eyes on the head frequently receive background visual motion that is opposite to that of the body movement. This can be easily understood if we consider a hand-held video camera. Since our hands usually shake during recording, the visual image on the screen of the camera moves in the opposite direction from our hand movements. This fact suggests that visual motion can be utilized to control reaching movements against the body movement. Indeed, this theory is supported by several studies [31]–[34]. In these studies, a large-field visual motion was presented on a screen during a reaching movement. The results showed that the reaching trajectory shifted rapidly (100–150 ms) and unintentionally toward the direction of visual motion. This is known as a manual following response (MFR). Several studies have shown the functional significance of the MFR by observing the reaching accuracy when visual motion and actual perturbations to the participants’ posture were introduced simultaneously [32], [35]. The computational and physiological mechanisms underlying the MFR have not yet been fully elucidated; however, it is thought that some cortical areas related to visual motion processing such as the middle temporal or the medial superior temporal areas contribute to generating the MFR [36].

3. Eye-hand coordination in online feedback control

Although eye-hand coordination has been examined widely as described in the previous section, the experimental task used in those studies was restricted to a reaching movement from a static posture toward a stationary target. Therefore, the previous studies focused mainly on the coordination mechanism during the motor planning process.
However, during the execution of reaching, it is necessary to correct reaching trajectories rapidly in response to unexpected perturbations. Visually guided online corrections would be mediated by the reflexive mechanism described in section 2.2. In this section, we describe our recent experimental studies, which focused on the eye-hand coordination mechanism during online visuomotor control.

3.1 Eye movements and reaching corrections with a target shift

We observed eye movements and online reaching correction when a target was shifted during the reaching movement [37]. The experimental apparatus is shown in Fig. 1(a). Participants (n = 17) made a reaching movement in a forward direction on a digitizer while holding a stylus pen. The pen location was presented on the monitor as a black cursor. At the start of each trial, participants placed the cursor into the start box (a square at the bottom of the monitor) while maintaining eye fixation on the central fixation cross (Fig. 1(b)). Then, a reaching target was presented over the fixation cross, cuing participants to initiate reaching (distance: 22 cm, duration: 0.6 s). In randomly selected trials, the target shifted 7.6 cm rightward (32/96 trials) or leftward (32 trials) 100 ms after the reaching initiation. In target-jump trials, participants were required to make smooth online reaching corrections to the new target location as quickly as possible. In the remaining 32 trials, the target was kept stationary, and participants continued to reach toward the original target location.
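
The trial composition described above (32 rightward, 32 leftward, and 32 stationary trials out of 96, with a 7.6 cm shift applied 100 ms after reach initiation) can be sketched as a randomized schedule. This is a minimal sketch only: the article does not state how the randomization was actually implemented, and the function name `make_trial_schedule`, the dictionary fields, and the fixed seed are assumptions made for illustration.

```python
import random
from collections import Counter

SHIFT_CM = 7.6         # lateral target shift reported in the text
SHIFT_DELAY_MS = 100   # shift occurs 100 ms after reach initiation
TRIALS_PER_TYPE = 32   # 32 rightward, 32 leftward, 32 stationary (96 total)


def make_trial_schedule(seed=0):
    """Return a shuffled list of trial dicts (hypothetical structure)."""
    rng = random.Random(seed)  # fixed seed only to make the sketch repeatable
    trials = []
    for label, shift in (("right", SHIFT_CM), ("left", -SHIFT_CM), ("stationary", 0.0)):
        for _ in range(TRIALS_PER_TYPE):
            trials.append({
                "type": label,
                "shift_cm": shift,  # signed shift; 0.0 means no target jump
                "shift_delay_ms": SHIFT_DELAY_MS if shift else None,
            })
    rng.shuffle(trials)  # randomize order so the perturbation is unpredictable
    return trials


schedule = make_trial_schedule()
print(len(schedule), Counter(t["type"] for t in schedule))
```

Mixing stationary trials with the two shift directions keeps participants from anticipating whether, or in which direction, the target will jump.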

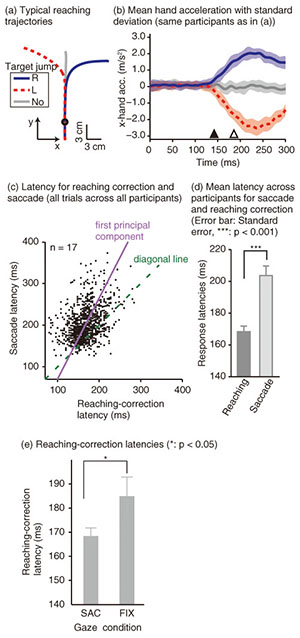

To examine the effect of gaze behavior on the reaching correction, we conducted this reaching task under two gaze conditions: saccade (SAC) or fixation (FIX). In the SAC condition, participants had to make the reaching correction with a saccadic eye movement to the new target location, whereas in the FIX condition, participants made the reaching correction while maintaining eye fixation on the central fixation cross (i.e., the original target location). Each gaze condition was run in separate blocks of 48 trials. Reaching trajectories obtained by a typical participant in the SAC condition are shown in Fig. 2(a). The trajectory deviated smoothly during a reach according to the direction of the target shift. To evaluate the initiation of the reaching correction in more detail, we calculated x-hand accelerations (the axis along the target shift), which are temporally aligned at the onset of the target shift (Fig. 2(b)). The hand response corresponding to each direction of the target shift rapidly deviated about 150 ms after the target shift. The response latency of the reaching correction and saccade is indicated by a filled (143 ms) and open triangle (187 ms), respectively. This temporal relationship (reaching correction that preceded saccade initiation) was obtained for all the participants. Hand and eye latencies for all trials across all participants are plotted in Fig. 2(c). Most of the data (81.6%) fell above the diagonal line, indicating that the reaching correction was usually initiated prior to the onset of the eye movement. This temporal difference was statistically significant in a paired t-test (p < 0.001), as shown in Fig. 2(d). The hand-first and eye-second pattern observed in this study indicates that the online reaching correction can be initiated by peripheral visual information before the eye movement. This temporal order differs completely from that reported in conventional eye-hand tasks. 
The eye-first and hand-second pattern in the conventional task indicates that the reaches initiated from a static posture rely on the central visual information. A recent imaging study has shown that compared with central reaching, peripheral reaching involved an extensive cortical network including the PPC [38]. Thus, these findings again support the idea that distinct brain mechanisms are involved between motor planning for the voluntary reaching initiation and online feedback control during the motor execution. We next investigated the dependence of the reaching correction on saccadic eye movements. Firstly, we observed that the initiation of the reaching correction was temporally correlated with saccade onset (correlation coefficient = 0.39, p < 0.001, Fig. 2(c)). Correlation was significant (p < 0.05) in 13 and marginally significant (p < 0.1) in 1 out of 17 participants. Secondly, we found that the latency of the reaching correction changed according to the gaze conditions. The reaching correction was significantly (p < 0.05) faster for the SAC than for the FIX condition, as shown in Fig. 2(e).
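
The latency comparisons reported above (the proportion of trials in which the hand correction preceded the saccade, the hand-eye latency correlation, and a paired comparison) can be sketched with plain Python. This is a minimal sketch, not the authors' analysis pipeline: the helper names are hypothetical, the five latency pairs are made-up illustrative values rather than data from the study, and the level at which the original t-test was computed (trials or participant means) is not specified here.

```python
import math


def fraction_hand_first(hand_ms, eye_ms):
    """Proportion of trials where the hand correction precedes the saccade."""
    return sum(h < e for h, e in zip(hand_ms, eye_ms)) / len(hand_ms)


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def paired_t(x, y):
    """Paired t statistic for the differences y - x (n - 1 degrees of freedom)."""
    d = [b - a for a, b in zip(x, y)]
    n = len(d)
    mean_d = sum(d) / n
    var_d = sum((v - mean_d) ** 2 for v in d) / (n - 1)  # sample variance
    return mean_d / math.sqrt(var_d / n)


# Made-up latencies in ms, for illustration only.
hand = [140, 150, 145, 160, 155]
eye = [185, 190, 150, 200, 150]

print(fraction_hand_first(hand, eye))  # 0.8
print(pearson_r(hand, eye))
print(paired_t(hand, eye))
```

Converting the t statistic to a p-value requires the t distribution; a statistics library such as SciPy (for example, `scipy.stats.ttest_rel`) could be used for that step.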

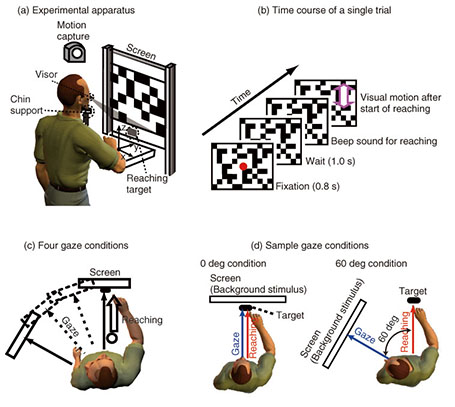

The correlation finding indicates that the hand and eye control systems do not act independently; rather, they share common processing at some stage. Furthermore, the dependence of the reaching correction on the gaze condition suggests that the hand and eye motor systems interact closely even during online feedback control. Since a saccade was not yet initiated when the reaching correction started, the change in hand latency cannot be ascribed to any changes in visual or oculomotor signals obtained after the actual eye movements. Our findings imply that an online reaching controller interacts with oculomotor preparation signals before the actual eye movements.

3.2 Gaze direction and reaching corrections to background visual motion

This study focused on reaching corrections induced by visual motion (MFR). In this case, visual motion that was applied during the reaching did not induce explicit eye movements. Thus, to address the mechanism of eye-hand coordination, we examined the effect of gaze direction on MFR [39]. Gaze direction relative to the reaching target is known to be a key feature in constructing gaze-centered spatial representation, as described in section 2.1. The experimental apparatus is shown in Fig. 3(a). Participants (n = 6) were seated on a chair in front of a back-projection screen. The participants were asked to make a reaching movement in a forward direction (distance of about 39 cm) toward a 1 cm² piece of rubber. The target and the participant’s hand were completely occluded from view. The time course of a single trial is shown in Fig. 3(b). At the start of each trial, participants touched the target to confirm its location. Then, participants pressed a button, which was followed by the presentation of a stationary random checkerboard pattern and a fixation marker. After the eye fixation marker was presented, beep sounds cued participants to initiate a reaching movement while maintaining the eye fixation.
In randomly selected trials, a background visual stimulus moved upward (16/48 trials) or downward (16 trials) for 500 ms shortly after the reaching initiation. In the remaining 16 trials, the visual stimulus was kept stationary. Participants were asked to reach toward the target location regardless of whether or not the visual motion was presented. Participants performed this reaching task under four gaze-reaching configurations (0, 20, 40, and 60°), as shown in Fig. 3(c). In the 0° condition (left panel in Fig. 3(d)), the head was oriented straight ahead, and the screen was located in front of the participants. In this condition, the gaze direction matched the target location. In the 60° condition (right panel in Fig. 3(d)), the head was rotated 60° to the left, and the screen location changed so that the visual stimuli on it were presented to participants in the same way in the four gaze conditions. In this condition, the gaze direction was away from the reaching target. Thus, in the 20° and 40° conditions, the rotation angle was 20° and 40°, respectively. In all the conditions, participants made the same reaching movements toward the identical target location. Therefore, this paradigm allows us to examine the effect of gaze-reach coordination on the online manual response to the visual motion.

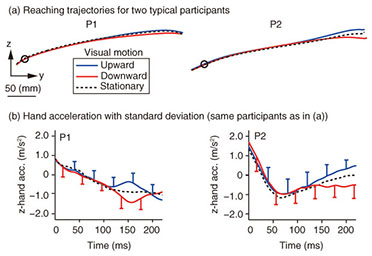

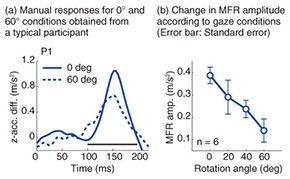

Typical reaching trajectories in the 0° condition (two participants: P1 and P2) are shown in Fig. 4(a). When the background visual stimulus moved during a reaching movement, the reaching trajectory deviated in the direction of visual motion (blue line for upward and red line for downward). This reflexive MFR was observed in all participants. To analyze the response in more detail, we calculated the hand accelerations (acc.) along a z-axis (the direction of visual motion) that were temporally aligned at the onset of visual motion (Fig. 4(b) with the same participants as in Fig. 4(a)). We obtained the difference in the hand acceleration between the upward and downward visual motions. This temporal difference for the 0° and 60° conditions is shown in Fig. 5(a) (data for P1). Interestingly, the manual response was larger for the 0° condition than for the 60° condition even though the identical visual motion was applied in both conditions. We quantified the response amplitude by estimating the mean response between 100 and 200 ms after the onset of visual motion (black solid line in Fig. 5(a)). A comparison of the response amplitudes averaged across participants for all gaze conditions (0, 20, 40, and 60°) is given in Fig. 5(b). The MFR was largest for the 0° condition, and the response amplitude significantly decreased as the gaze direction deviated from the reaching target (ANOVA (analysis of variance), p < 0.05).
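
The amplitude measure described above (the upward-minus-downward difference in hand acceleration, averaged over 100 to 200 ms after visual motion onset) can be sketched as follows. This is a minimal sketch under stated assumptions: the function names are hypothetical, acceleration is approximated by a central finite difference, the window endpoints are treated as inclusive, and the sign convention (upward minus downward) is an assumption rather than something the article specifies. The sample values are illustrative, not data from the study.

```python
def acceleration(position, dt):
    """Central-difference acceleration; returns len(position) - 2 samples."""
    return [
        (position[i + 1] - 2 * position[i] + position[i - 1]) / dt ** 2
        for i in range(1, len(position) - 1)
    ]


def mfr_amplitude(acc_up, acc_down, times_ms, window=(100.0, 200.0)):
    """Mean up-minus-down acceleration difference inside the time window.

    times_ms are sample times relative to visual motion onset.
    """
    diff = [u - d for u, d in zip(acc_up, acc_down)]
    in_window = [v for t, v in zip(times_ms, diff) if window[0] <= t <= window[1]]
    return sum(in_window) / len(in_window)


# Illustrative z-axis accelerations sampled every 50 ms (made-up values).
times_ms = [0, 50, 100, 150, 200, 250]
acc_up = [0.0, 0.0, 1.0, 2.0, 3.0, 1.0]
acc_down = [0.0, 0.0, -1.0, -2.0, -3.0, -1.0]

print(mfr_amplitude(acc_up, acc_down, times_ms))  # 4.0
```

Taking the difference between the two motion directions cancels acceleration components common to both conditions, so that what remains reflects the response to visual motion itself.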

These results indicate that the automatic manual response induced by visual motion is modulated flexibly by the spatial relationship between gaze and reaching target. This spatial relationship is computed by the gaze-centered target representation, which is constructed in the PPC during reach planning. The MFR gain modulation that is based on the gaze-reach coordination can be associated with our natural behavior, namely, that we usually gaze at the reach target when highly accurate reaching is required. Thus, we infer that visuomotor gain for the online reaching correction is functionally modulated by the gaze-reach coordination.

4. Conclusion

We investigated mechanisms of eye-hand coordination during online visual feedback control. When a target shift or background visual motion is applied during reaching, rapid and reflexive online corrections can occur. Our studies provide experimental evidence that the online controller for arm reaching interacts closely with gaze systems. The first study showed that the reaching correction to the target shift was temporally correlated with saccade onset and changed according to the gaze behavior that started after the initiation of reach correction. This suggests that an online reaching controller interacts with gaze signals related to planning eye movements. The second study revealed that the amplitude of the reaching correction to the visual motion changed according to the spatial relationship between gaze and the reach target. This suggests that the visuomotor gain for the reflexive online controller is functionally modulated by the eye-hand coordination.

Eye-hand coordination is a basic aspect of visually guided motor actions that occur frequently in our daily lives. In addition, quick online motor corrections are one of the bases supporting our skillful motor actions in dynamic environments.
Thus, we believe that understanding the brain mechanisms underlying these visuomotor functions will provide important guidelines on how to develop user-friendly human-machine interfaces in the near future.

References
