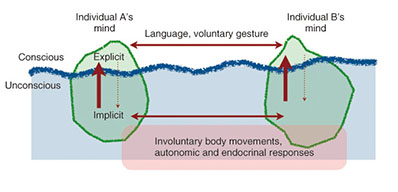

Feature Articles: New Developments in Communication Science

Vol. 12, No. 11, pp. 31–36, Nov. 2014. https://doi.org/10.53829/ntr201411fa6

Reading the Implicit Mind from the Body

Abstract

Recent studies in cognitive science have repeatedly demonstrated that human behavior, decision making, and emotion depend heavily on the implicit mind, that is, automatic, involuntary mental processes that even the person herself/himself is not aware of. We have been developing diverse methods of decoding the implicit mind from involuntary body movements and physiological responses such as pupil dilation, eye movements, heart rate variability, and hormone secretion. If mind-reading technology can be made viable with cameras and wearable sensors, it would offer a wide range of usage possibilities in information and communication technology.

Keywords: man-machine interface, physiological signals, eye movement

1. Introduction

In daily life, people often infer other people’s feelings and intentions to some extent, even if they are not expressed explicitly by language or gesture, by taking the appearance of the person and the situation into account. This ability, often referred to as mind reading, is an essential characteristic that supports smooth communication among people. A majority of current information and communication technology (ICT) devices, on the other hand, do not function without receiving explicit commands, which are entered using predetermined methods such as typing, pressing buttons, using one’s voice, and making specific gestures. If ICT devices had a mind-reading ability, the relationship between such devices and users would be more flexible and natural. Ultimately, users would not be aware of the existence of ICT devices. In other words, mind-reading technology would make ICT devices transparent to users.

2. Reading the mind from the body

Mind-reading technology has been a topic of extensive research in recent years. In fact, remarkable progress has been made in brain-computer interfaces (BCIs), which decode a person’s brain activity to identify the content of the person’s consciousness, such as a category of a perceived object, or a button to be pressed among multiple alternatives. Our approach, however, is essentially different from BCI in two aspects.

The first difference concerns the method of measurement. In BCI, brain activity is measured using such technologies as electroencephalography or functional magnetic resonance imaging, whereas in our mind-reading approach, we measure physiological changes on the body surface, including eye movements, pupil diameter changes, heart rate variations, and involuntary body movements. These signals can be measured with relatively simple devices such as a camera or surface electrodes. In contrast, BCI requires large-scale specialized measurement equipment. At present, measurement of body surface signals is not completely unconstrained, meaning that the movement of the person being measured is somewhat restricted. However, it will be even less constrained and more transparent in the near future, when sophisticated wearable sensors are developed.

The second difference concerns the decoding target. BCI tries to categorize the content of consciousness, such as types of perceived visual objects (e.g., a face vs. a house) and a button to be pressed (e.g., left or right). The system learns the statistical correspondence between the categories and brain activity patterns in advance. Based on the learning, it judges which category the observed pattern belongs to. However, it should be noted that consciousness is only a fraction of the whole mind. Recent findings in the field of cognitive science have repeatedly demonstrated that human behavior, decision making, and emotion depend not only on conscious deliberation but also heavily on the implicit mind, that is, automatic, fast, involuntary mental processes that even the person herself/himself is not aware of (Fig. 1) [1]. This implicit mind is the target of our mind reading.

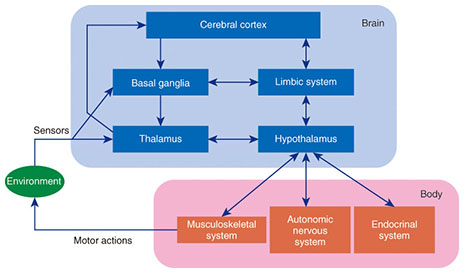

Many experimental studies have shown strong interactions between the implicit mind and the body. In a sense, they are inseparable, like two sides of a coin. Such a tight relationship reflects the complex loops of the brain, body, and environment (Fig. 2). If some event happens in the environment, the states of one’s body such as the autonomic, endocrine, and musculoskeletal systems change so that the person can react to the event appropriately. The information about the event is also sent to the cerebral cortex, where the event is recognized through complicated information processing. These changes in body states start prior to, and thus deeply affect, the processing in the cerebral cortex. This is why people often lose control of their mind and body, no matter how well they understand what to do. For example, if they had to make a speech in front of some eminent people, they might inadvertently make some uncharacteristic mistakes due to extreme nervousness.

Moreover, in interpersonal communication, unconscious body movements of partners interact with one another, creating a kind of resonance. The resonance, in addition to explicit language and gesture, may provide the basis for understanding and sharing emotions (Fig. 1). An experiment we conducted recently demonstrated that the unconscious synchronization of footsteps between two people who had met for the first time and were walking side by side for several minutes enhanced the positive impressions that they had of each other. The mind is, in one aspect, a dynamic phenomenon that emerges through the interaction mediated by bodies. Thus, measuring the body surface instead of the brain not only has practical merit, but is also essential in mind reading. The next section introduces an example of our experiments.

3. Reading the familiarity and preference for music from the eyes

3.1 Measuring microsaccades

As the saying goes, “The eyes are more eloquent than the mouth.” In the context of mind-reading technology, gaze direction has been used extensively as an index of visual attention or interest. However, what is reflected in the eyes is not limited to mental states directed to or evoked by visual objects. We are studying how to decode mental states such as saliency, familiarity, and preference for sounds based on the information obtained from the eyes, namely, a kind of eye movement called a microsaccade as well as changes in pupil diameter. In the experiment, the measurement is conducted using a high-precision eye camera (sampling rate = 1000 Hz, spatial resolution < 0.01°) installed below and in front of the participant, whose head movement is restricted by a chin rest (Fig. 3). Although technical problems remain to be solved before we can achieve completely unconstrained measurement in the real world, it has been shown that the eyes can provide more diverse information than previously thought.

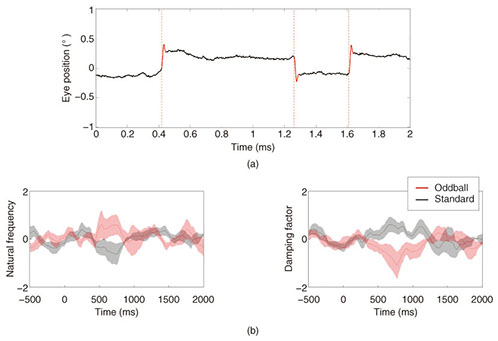

Microsaccades are small, rapid, involuntary eye movements that typically occur once every second or two during a visual fixation task (Fig. 4(a)). We have revealed a previously unexplored relationship between auditory salience and features of microsaccades by introducing a novel model of eye-position control [2]. In short, the presentation of a salient (i.e., unusual or prominent, and therefore easily noticeable) sound among a series of less salient sounds induced a temporary decrease in the damping factor of microsaccades, which is an indicator of the accuracy of position control, and a temporary increase in the natural frequency of microsaccades, which is an indicator of the speed of position control (Fig. 4(b)).
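
The article does not give the equations of the eye-position control model in [2]. As a rough illustration only, the sketch below assumes a textbook underdamped second-order system, x'' + 2ζωₙx' + ωₙ²x = ωₙ²u, to show why a damping factor ζ can be read as an accuracy indicator and a natural frequency ωₙ as a speed indicator. The function names and parameter values are hypothetical and are not taken from the original study.

```python
import math


def step_response(t, zeta, omega_n):
    """Unit-step response of an assumed second-order position controller.

    Covers only the underdamped case 0 < zeta < 1.
    """
    if not 0.0 < zeta < 1.0:
        raise ValueError("this sketch covers only 0 < zeta < 1")
    root = math.sqrt(1.0 - zeta ** 2)
    omega_d = omega_n * root  # damped oscillation frequency (rad/s)
    decay = math.exp(-zeta * omega_n * t)
    return 1.0 - decay * (math.cos(omega_d * t) + zeta / root * math.sin(omega_d * t))


def overshoot(zeta):
    """Fraction by which the response overshoots the target (accuracy proxy)."""
    return math.exp(-math.pi * zeta / math.sqrt(1.0 - zeta ** 2))


def peak_time(zeta, omega_n):
    """Time in seconds at which the response first peaks (speed proxy)."""
    return math.pi / (omega_n * math.sqrt(1.0 - zeta ** 2))


if __name__ == "__main__":
    # Illustrative values only: a lower damping factor and a higher natural
    # frequency stand in for the change reported after a salient sound.
    for label, zeta, omega_n in [("baseline", 0.6, 150.0), ("after salient sound", 0.4, 200.0)]:
        print(label, round(overshoot(zeta), 3), round(peak_time(zeta, omega_n) * 1000, 1), "ms")
```

Under this assumed model, lowering ζ increases the overshoot (the eye lands less accurately), while raising ωₙ shortens the time to peak (the movement is faster), which matches the qualitative interpretation given in the text.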

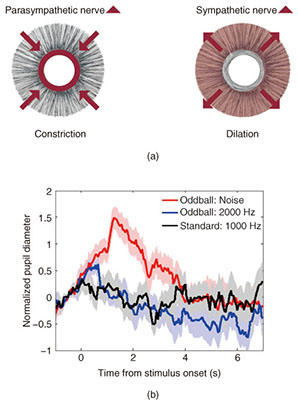

3.2 Studying pupillary responses

We also focus on pupillary responses. The primary function of the pupils is to control the amount of light reaching the retina, just as the diaphragm of a camera does. However, pupil diameter is also modulated by emotional arousal and cognitive functions such as attention, memory, preference, and decision making (Fig. 5(a)). This is because pupil diameter is controlled by the balance of the sympathetic and parasympathetic nervous systems, and reflects, to some extent, the level of neurotransmitters that control cognitive processing in the brain. We have demonstrated that pupil dilation, as well as microsaccades, can be used as a physiological marker for certain aspects of auditory salience [3]. Temporary pupil dilation occurs when a salient sound is presented among a series of less salient sounds (Fig. 5(b)). Such pupil dilation responses depend on various factors including acoustic properties, context, and presentation probability, but not critically on voluntary attention to sounds.
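
One common way to quantify such a temporary dilation is to compare the mean pupil diameter shortly after a sound onset with a pre-onset baseline. The sketch below is a minimal illustration of that idea, not the analysis used in [3]. The function name, the 0.5 s baseline, and the 2 s response window are assumptions; only the 1000 Hz sampling rate comes from the article.

```python
from statistics import mean


def dilation_response(pupil, onset, fs=1000, baseline_s=0.5, window_s=2.0):
    """Baseline-corrected pupil response around one sound onset.

    pupil      : sequence of pupil diameters, one value per sample
    onset      : sample index at which the sound starts
    fs         : sampling rate in Hz (1000 Hz in the article's setup)
    baseline_s : assumed length of the pre-onset baseline, in seconds
    window_s   : assumed length of the post-onset window, in seconds
    """
    n_base = int(baseline_s * fs)
    n_win = int(window_s * fs)
    if onset < n_base or onset + n_win > len(pupil):
        raise ValueError("epoch does not fit inside the recording")
    baseline = mean(pupil[onset - n_base:onset])
    # Positive values mean the pupil was larger after the sound than before it.
    return mean(pupil[onset:onset + n_win]) - baseline


if __name__ == "__main__":
    # Synthetic trace: 1 s at 4.0 mm, then 2 s at 4.3 mm after the "sound".
    trace = [4.0] * 1000 + [4.3] * 2000
    print(dilation_response(trace, onset=1000))
```

Real recordings would also need blink removal and correction for luminance changes before such a measure is meaningful; those steps are omitted here.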

We applied these basic findings in some attempts to estimate a listener’s familiarity with and preference for a tune based on the features of microsaccades and pupillary responses while listening to the tune. In addition to the physiological signals obtained from the eyes, we also analyzed the acoustic/musical properties of the tune. For example, we have developed a novel surprise index, which represents the extent of unpredictability of the musical data at a given moment in a tune, given the data up to that point. We consider this index useful because a typical tune consists of regularity (predictability) and deviation from it (unpredictability or surprise), and the balance between the two seems to be one of the critical factors that contribute to familiarity and attractiveness of the tune.

At NTT Communication Science Laboratories Open House 2014, we conducted a demonstration in which each participant listened to one of 15 tunes from various music genres including classical, rock, and jazz for 90 seconds. The participant’s familiarity and preference ratings for the tune were then estimated based on 12 features of microsaccades and pupillary responses, together with several features of the tune including surprise. Prior to the demonstration, the decoding system had learned the mapping between those features and subjective ratings (7-point scale each) of familiarity and preference for 23 participants. In the demonstration, the differences between the actual and estimated ratings were 2 or smaller in more than 80% of the trials for nearly 200 participants. (Note that this was only an informal demonstration and not a rigorous test.) The demonstration was designed to estimate subjective (that is, not implicit) ratings, but could be extended to the implicit mind (or behavior) once objective behavioral data are available, such as which tune each participant decided to buy and when and how many times the tune was played back.

4. Future directions

In addition to the work described in this article, we are conducting diverse lines of research concerning the responses of the brain and autonomic nervous systems, hormone secretion, and body movements. In one such project, we developed a method to measure the concentration of oxytocin (a hormone considered to promote trust and attachment to others) in human saliva with the highest accuracy to date (in collaboration with Prof. Suguru Kawato of the University of Tokyo). This has enabled us to identify a physiological mechanism underlying relaxation induced by music listening. Listening to music with a slow tempo promotes the secretion of oxytocin, which activates the parasympathetic nervous system, resulting in relaxation [4].

Analysis of hormone concentration is not fast enough for direct use in ICT devices. However, when the relationship between physiological signals that are quickly measurable and the relevant hormone concentration is revealed by laboratory experiments, the knowledge will be beneficial for mind reading. In evaluating the quality of video or audio, for example, it would be possible to capture the differences in quality of experience that are not apparent in subjective ratings.

As wearable sensor technology advances, mind reading will be applied more widely. It is especially attractive in sports-related areas. For example, measurement of heart rate, respiration rate, and muscle potential at various parts of the body using sensors woven into underwear would make it possible to report mental and physical states of players during a game, or to develop effective training methods that combine monitoring and sensory feedback.

The study of reading the implicit mind has just begun. For the moment, basic research is necessary to understand the mechanisms of the complex loops of mind and body. Needless to say, careful consideration should be given to ethical issues such as privacy and safety.
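
To make the surprise index from Section 3.2 more concrete: the article defines it only as the unpredictability of the musical data at a given moment, given the data up to that point, and does not describe how it is computed. The sketch below shows one possible reading, assuming a tune is reduced to a sequence of discrete symbols (for example, pitch classes) and scored by the surprisal, in bits, of each event under a first-order Markov model that is updated online. The function name, the symbol encoding, and the add-one smoothing are all assumptions for illustration.

```python
import math
from collections import defaultdict


def surprise_index(symbols, alphabet_size):
    """Per-event surprisal (bits) under an online first-order Markov model.

    symbols       : sequence of hashable events, e.g. pitch classes 0..11
    alphabet_size : number of distinct symbols that can occur
    """
    counts = defaultdict(lambda: defaultdict(int))  # counts[prev][cur]
    totals = defaultdict(int)                       # totals[prev]
    surprisal = []
    prev = None  # no context exists before the first event
    for cur in symbols:
        # Add-one smoothing keeps unseen transitions at a nonzero probability.
        p = (counts[prev][cur] + 1) / (totals[prev] + alphabet_size)
        surprisal.append(-math.log2(p))
        # Update only after scoring, so each score uses past data alone.
        counts[prev][cur] += 1
        totals[prev] += 1
        prev = cur
    return surprisal


if __name__ == "__main__":
    # A repetitive two-note pattern that ends with an unexpected note.
    melody = [0, 2, 0, 2, 0, 2, 0, 2, 0, 7]
    for note, bits in zip(melody, surprise_index(melody, alphabet_size=12)):
        print(note, round(bits, 2))
```

In this toy melody the surprisal falls as the alternating pattern repeats and rises at the final, unexpected note, which illustrates the balance between regularity and deviation that the text describes.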
Acknowledgments

Part of this research is supported by CREST from the Japan Science and Technology Agency and SCOPE (121803022) from the Ministry of Internal Affairs and Communications.

References
