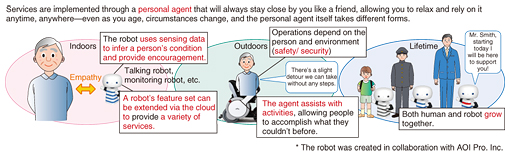

Feature Articles: The Challenge of Creating Epoch-making Services that Impress Users

Vol. 13, No. 7, pp. 11–16, July 2015. https://doi.org/10.53829/ntr201507fa3

Personal Agents to Support Personal Growth

Abstract

We at NTT Service Evolution Laboratories have been focusing on implementing a personal agent that will co-exist with us in the real world, empathize with us, encourage us to stay active, always be at our side to support our personal growth, and even grow on its own. In this article, we introduce sensing technology for understanding people and their environment; multimodal interaction technology for supporting people and helping them to grow; and virtualization technology for implementing device features that are used to continuously support people in any place or at any time.

Keywords: vital signs sensing, multimodal interaction, M2M

1. Introduction

Over the course of our lifetimes, we can acquire new skills, maintain our current skills even as we age, and be energized and motivated to action. We use the term personal agent to refer to an entity that understands our personal situations and helps us to grow. Although a personal agent is a lifelong assistant, we want it to push us to improve rather than become a source of our complete dependence. Our research and development efforts at NTT Service Evolution Laboratories have been focused on implementing just such a personal agent. If we can build a personal agent like the one we just described, it will allow us to provide agent services that support and push people to improve over the course of their lifetimes, as shown in Fig. 1.

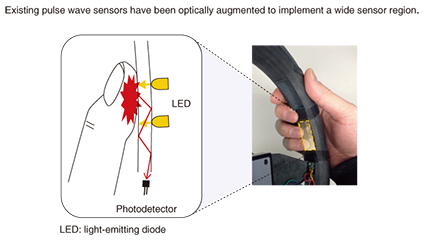

In order to do this, we need to understand the environment around the person being supported (using sensing technology) and to continuously support that person in his or her activities over a lifetime (using multimodal interaction technology). These, in turn, require a mechanism for absorbing the differences among the robots and other devices that serve as the physical manifestation of the personal agent, effectively accommodating and controlling a vast number of sensors, and providing an abstraction layer for applications that use these devices (device feature virtualization).

2. Sensing technology for inferring internal human state

Pulse rates and electrocardiograms are useful biological data for understanding a person’s psychological and physiological state. This kind of data is generally collected by means of sensors attached to the fingertips, arms, ears, and other parts of the body. An alternative method of naturally and unobtrusively collecting data is to use hitoe™,*1 a smart fabric jointly developed by NTT and Toray Industries, Inc. By simply donning a hitoe shirt, the wearer can provide acceleration data and information on the electrical activity of his or her heart [1].

We have also developed a more conventional pulse wave sensor surface for people who would find it inconvenient to wear or attach sensors in advance. Unlike existing pulse wave sensors that take measurements at a single point, our sensor has been augmented with optical technology so that it can take measurements anywhere on a surface [2]. These expanded capabilities allow it to take measurements even when it is only lightly touched. For example, we could combine this sensor with a mouse or a steering wheel to measure a person’s pulse wave data during ordinary computer use or while driving, respectively, as shown in Fig. 2.

However, because hitoe and surface-based pulse wave sensors take measurements during everyday activities, movement and other factors introduce noise into the data and thus make it more difficult to analyze than data measured by stationary, dedicated devices. To grapple with this problem, we are researching technologies that will remove noise from the measured data and very precisely quantify features such as heartbeats; we are also researching technologies that will estimate mental fatigue, changes in cognitive performance, sleep quality, balance and posture while walking, movement, and other properties from the sensor data and its characteristics. We can also expect to roll out sports and healthcare solutions by employing these technologies for fatigue risk management, productivity improvements, sleep treatments, and activity tracking.
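To make the idea of noise removal and heartbeat quantification concrete, the following is a minimal sketch in Python. It is not the method used in our research: it assumes a simple moving-average filter and a threshold-based peak detector, and every name in it (`synthetic_pulse`, `moving_average`, `detect_beats`, `heart_rate_bpm`) as well as the simulated noise frequencies are hypothetical choices made for illustration only.

```python
import math
from typing import List, Optional


def synthetic_pulse(fs: int, seconds: int, bpm: float) -> List[float]:
    """Simulate a pulse wave with motion-like noise and slow baseline drift (illustrative only)."""
    beat_hz = bpm / 60.0
    samples = []
    for n in range(fs * seconds):
        t = n / fs
        pulse = -math.cos(2 * math.pi * beat_hz * t)      # one cycle per heartbeat
        motion = 0.3 * math.sin(2 * math.pi * 23.0 * t)   # assumed high-frequency noise
        drift = 0.2 * math.sin(2 * math.pi * 0.1 * t)     # assumed slow baseline drift
        samples.append(pulse + motion + drift)
    return samples


def moving_average(signal: List[float], window: int) -> List[float]:
    """Smooth the signal with a centered moving average (shorter window at the edges)."""
    half = window // 2
    smoothed = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        smoothed.append(sum(signal[lo:hi]) / (hi - lo))
    return smoothed


def detect_beats(signal: List[float], fs: int, min_interval_s: float = 0.3) -> List[int]:
    """Return indices of local maxima above the mean, at least min_interval_s apart."""
    threshold = sum(signal) / len(signal)
    min_gap = int(min_interval_s * fs)
    beats: List[int] = []
    for i in range(1, len(signal) - 1):
        is_peak = signal[i - 1] < signal[i] >= signal[i + 1]
        if is_peak and signal[i] > threshold:
            if not beats or i - beats[-1] >= min_gap:
                beats.append(i)
    return beats


def heart_rate_bpm(beats: List[int], fs: int) -> Optional[float]:
    """Estimate heart rate from the mean interval between detected beats."""
    if len(beats) < 2:
        return None
    intervals = [(b - a) / fs for a, b in zip(beats, beats[1:])]
    return 60.0 / (sum(intervals) / len(intervals))


if __name__ == "__main__":
    fs = 100                                   # sampling rate in Hz (assumed)
    noisy = synthetic_pulse(fs, 10, 72.0)      # 10 s of a simulated 72 bpm pulse
    clean = moving_average(noisy, 9)
    print(heart_rate_bpm(detect_beats(clean, fs), fs))
```

The minimum beat interval acts as a refractory period, so small ripples that survive the smoothing near a peak are not counted as extra heartbeats. Data measured during real everyday movement would likely call for more robust filtering than this.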

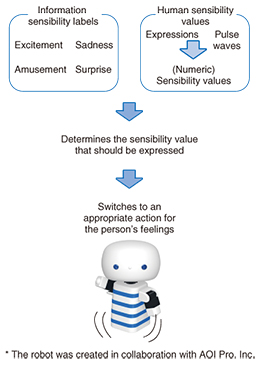

3. Multimodal interaction technology for helping people grow

In order for a personal agent to provide active encouragement that is based on personal and surrounding circumstances, it is important for the agent to use expressions and give feedback in ways that are appropriate to the time and place as well as each individual person. Multimodal interaction utilizes a combination of sensing, voice recognition, speech synthesis, and interactive dialogue to understand human expressions and conditions; it then verbally or physically responds in kind through a robot or some other gadget, thus presenting information and content to the user. This makes it possible to put the user at ease, evoke emotions, and assist the user in his or her personal growth.

Here, we must also pause to consider how a person’s age, generation, location, and situation will affect the type of robot or electronic gadget that he or she will come into contact with. Even if the type of robot or gadget changes, its control procedures and methods for interacting with humans will be implemented as a data model that can be personalized and stored for each user; this allows any robot or gadget to appropriately and seamlessly reassure, empathize with, and otherwise support the personal growth of the user.

One specific use case that we are considering is an intelligent robot*2 that provides active encouragement to patients in their own homes as well as in nursing homes. As shown in Fig. 3, the robot would first use biological sensors to come to an understanding of the patient’s physical condition; then, on the basis of that condition, it could use speech, gestures, and other forms of emotional expression to encourage conversation and keep dementia in check [3].

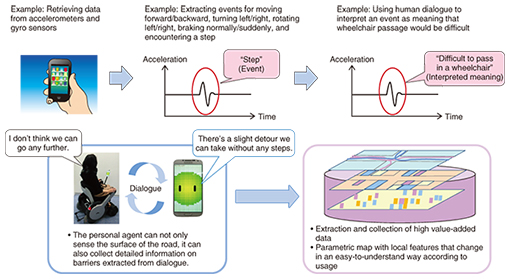

We are also considering ways to support people who are disabled or have physical impairments, in anticipation of futuristic wheelchairs and the spread of other personal mobility technologies. We are striving to allow people to enjoy going outside—even if they cannot walk about freely—with the help of a personal mobility vehicle, which will have its own personality and thus provide an easy, interactive way for anyone to learn how to drive it as well as providing directions to a destination. In order to collect subjective information that is difficult to interpret through sensors (such as physical sensations and anecdotes), we are considering methods for prompting the user to recall information during the course of natural conversation. By accumulating and extracting this information along with map data, we will be able to provide an optimal navigation experience for everyone, as shown in Fig. 4.

We are also exploring multimodal interaction technologies based on neuroscience and psychology in the field of sports and child development. Specifically, we anticipate a need for assistance with skill acquisition in sports and are therefore researching the possibility of a coaching suit that will detect muscle movements via biological sensors and then use force feedback, visual feedback, and audio feedback to teach each individual wearer appropriate form. After internalizing proper muscle and joint movements through sports practice done while wearing the coaching suit, athletes should be able to reproduce proper form even without the suit on.

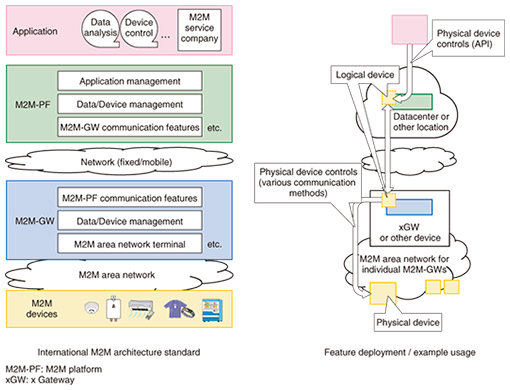

4. Device feature virtualization to support personal agent functionality

To implement the aforementioned technologies, it will be important to efficiently manage robots and other gadgets (or a wider range of devices) along with the data they collect. It will also be important to make it easy for device functionality to be used over a network (via the cloud). The basic architecture for implementing all of this must make it easy to control each device and make use of data without being aware of the differing protocols for each individual device and industry domain. In many cases, practical data applications and device management policies have common requirements. Furthermore, services that are provided and implemented with a common architecture can be used in cross-sector applications and are thus desirable in terms of efficiency during system development.

As we examined this architecture, we also paid attention to trends related to the international standardization of machine-to-machine (M2M)*3 technologies because the goal of international standardization is in line with our own efforts: to establish specifications for service platforms and system architectures applicable in many different industries [4]. oneM2M*4 is an international standards organization for M2M technologies that is jointly run by telecommunications standards bodies in Japan, the United States, China, Korea, and Europe. The first version of an international standard, with prescriptions for architecture implementations, was officially released in January 2015. We have been referring to this standardized M2M architecture as we explore basic architectures for virtualizing device features. The standardized M2M architecture and an example application of device feature virtualization are shown in Fig. 5.

In device virtualization, the international M2M architecture standard is used for reference in defining physical device features as a logical device in a datacenter via an M2M-GW (gateway)*5, which can then be controlled by applications via an application programming interface (API)*6. To get the most use out of this architecture, we are moving forward with a oneM2M standard proposal that will give common device model specifications for a variety of different industries. In the future, we will augment devices with wearable sensors, robots, and other technology; support a wide variety of M2M area networks*7; and explore even more opportunities that will allow us to apply this technology to a wide range of services.
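The abstraction described above can be illustrated with a minimal Python sketch. It is not the oneM2M API or our implementation: the `DeviceAdapter`, `Gateway`, `FakePulseSensor`, and `FakeRobot` classes, the device IDs, and the feature names are all hypothetical, and the adapters simply hold values in memory where a real one would speak a device-specific protocol.

```python
from abc import ABC, abstractmethod
from typing import Dict


class DeviceAdapter(ABC):
    """Translates common read/write calls into one device-specific protocol."""

    @abstractmethod
    def read(self, feature: str) -> float:
        ...

    @abstractmethod
    def write(self, feature: str, value: float) -> None:
        ...


class FakePulseSensor(DeviceAdapter):
    """Stand-in for a wearable sensor; its features are read-only."""

    def __init__(self) -> None:
        self._values = {"pulse_bpm": 72.0}

    def read(self, feature: str) -> float:
        return self._values[feature]

    def write(self, feature: str, value: float) -> None:
        raise PermissionError("sensor features are read-only")


class FakeRobot(DeviceAdapter):
    """Stand-in for a robot with one controllable feature."""

    def __init__(self) -> None:
        self._state = {"arm_angle_deg": 0.0}

    def read(self, feature: str) -> float:
        return self._state[feature]

    def write(self, feature: str, value: float) -> None:
        self._state[feature] = value


class Gateway:
    """Hypothetical gateway exposing physical devices as logical devices by ID."""

    def __init__(self) -> None:
        self._devices: Dict[str, DeviceAdapter] = {}

    def register(self, device_id: str, adapter: DeviceAdapter) -> None:
        self._devices[device_id] = adapter

    def get(self, device_id: str, feature: str) -> float:
        return self._devices[device_id].read(feature)

    def set(self, device_id: str, feature: str, value: float) -> None:
        self._devices[device_id].write(feature, value)


if __name__ == "__main__":
    gw = Gateway()
    gw.register("sensor-1", FakePulseSensor())
    gw.register("robot-1", FakeRobot())
    # The application uses the same two calls regardless of the device behind them.
    if gw.get("sensor-1", "pulse_bpm") > 60.0:
        gw.set("robot-1", "arm_angle_deg", 30.0)
    print(gw.get("robot-1", "arm_angle_deg"))
```

The point of the design is that the application code touches only `get` and `set`; supporting a new kind of device or area network means adding another adapter, not changing the application.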

5. Future work

Personal agents will coexist with us in the real world, empathizing with us, encouraging us to act, growing together with us, and pushing us to take small steps in areas where we may have given up before. In this way, we believe that personal agents will enrich our lives. In order to make personal agents a reality, we need a diverse collection of technologies: video processing to act as an agent’s eyes; speech and language processing to act as an agent’s mouth; artificial intelligence and big data analysis to act as an agent’s brain and memories; and a robot to act as an agent’s body. In addition to making use of the technologies being researched at NTT, we would like to partner with a large number of companies. Through co-innovation, we hope to create a personal agent that will help people grow.

References
