
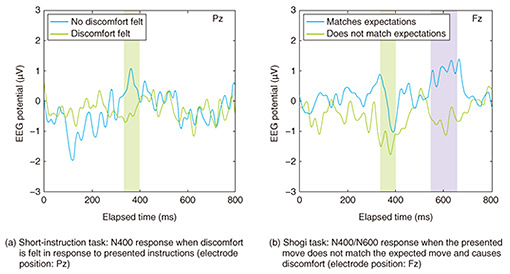

Feature Articles: Efforts to Implement Digital Twin Computing for Creation of New Value

Vol. 21, No. 4, pp. 20–25, Apr. 2023. https://doi.org/10.53829/ntr202304fa2

Applied Neuroscience Technology for Enabling Mind-to-mind Communication

Abstract

This article introduces four technologies we are developing that apply neuroscience to create a world in which people with different sensibilities can understand and respect one another: (i) technology for decoding a sense of discomfort or satisfaction from electroencephalogram data, (ii) technology for making brain states that contain sensory information perceptible as representations in the brain, (iii) neural-coupling technology for enhancing mutual understanding, and (iv) mental-image-reconstruction technology for reproducing the images in the mind.

Keywords: mind-to-mind communication, digital twin, brain tech

1. Neuroscience for enabling mind-to-mind communication

Mind-to-mind communication using Digital Twin Computing aims to achieve mutual understanding that transcends differences in individual characteristics, such as experience and sensibility, and thereby to create a world in which mutual respect is promoted and cooperation and creativity are enhanced. We aim to create new communication media that let us directly understand one another's feelings, such as how each of us perceives and feels things in our minds, and to enable mind-to-mind communication by handling human-brain information that contains sensory information, subjective perception (e.g., discomfort and acceptance), and states of mind (e.g., emotions and cognitive states). We are currently focusing on scalp electroencephalogram (EEG) data, which are inexpensive, non-invasive, and portable to acquire and can therefore be applied to communication, and we are engaged in various research and development projects that use brain information obtained from EEG data.

2. Brain-decoding technology for detecting subjective perception for advice

Reactions to another person's words, such as discomfort or acceptance, are important subjective perceptions in communication. However, it is not easy to convey these reactions to others appropriately through current means of communication. For example, when an instructor gives advice to a student, the student may be unable to convey their discomfort appropriately, or may remain unsatisfied even after following the advice, and such discrepancies are thought to hinder effective instruction. With these discrepancies in mind, we have been researching brain-decoding technology for detecting subjective perceptions, such as discomfort and acceptance, through EEG measurement.

Previous studies have reported that a specific brain response (event-related potential*1) is generated by the discomfort that occurs when a person is presented with extremely unnatural writing [1, 2]. This discomfort-related event-related potential is known as the N400*2 response. However, the sentences presented in these studies were not natural sentences of the kind used in communication; rather, they contained obvious semantic errors (e.g., "He put a sock on the warm bread.") or world-knowledge errors (e.g., "Dandelions are black."). Moreover, understanding the subjective perceptions of others is extremely important not only in verbal communication, as in the above studies, but also in nonverbal communication. We therefore investigated the brain responses of participants performing (i) a task in which they were presented with short instructions written in more natural sentences than those of the previous studies and (ii) a task in which they were presented with advice on moves in shogi (Japanese chess).

The results of the short-instruction task (Fig. 1(a)) indicate that (i) even with instructions written in more natural sentences than in previous studies, the same N400 response as reported in a previous study was observed when the receiver found the instruction uncomfortable, and (ii) the N400 response was stronger when the receiver felt uncomfortable and chose not to accept the instruction, i.e., when the receiver strongly rejected it. The results of the shogi task (Fig. 1(b)) indicate that the same N400 as in the short-instruction task was observed when the receiver felt uncomfortable because the suggested move did not match the move they expected, but that the N400 was attenuated when the receiver accepted the move even though it did not match their own opinion. We also observed a clear N600 response along with the N400. Since the N600 has been reported to occur while resolving contradictions in logical reasoning and while understanding rules, it was likely caused here by a cognitive process of trying to interpret a move that felt uncomfortable.
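The N400 comparison above rests on event-related potentials, which are obtained by averaging many EEG epochs time-locked to stimulus onset and then measuring the amplitude in a latency window. The article does not describe its analysis pipeline, so the following is only a minimal sketch under stated assumptions: a single channel, epochs already extracted and baseline-corrected, amplitudes in microvolts, and a 300 to 500 ms measurement window (a commonly used N400 window, not a value taken from the article). The function names `erp_mean_amplitude` and `n400_effect` are hypothetical, and the demo uses synthetic data, not data from the study.

```python
import numpy as np


def erp_mean_amplitude(epochs, fs, tmin, window=(0.3, 0.5)):
    """Average one channel's single-trial epochs into an ERP and return
    (erp, mean amplitude inside `window`).

    epochs: array (n_trials, n_samples), time-locked to stimulus onset
    fs:     sampling rate in Hz
    tmin:   latency in seconds of the first sample (negative = pre-stimulus)
    window: latency window in seconds (assumed N400 window)
    """
    erp = epochs.mean(axis=0)  # averaging suppresses activity not time-locked to the stimulus
    times = tmin + np.arange(erp.size) / fs
    in_window = (times >= window[0]) & (times <= window[1])
    return erp, float(erp[in_window].mean())


def n400_effect(uncomfortable, acceptable, fs, tmin):
    """Mean-amplitude difference (uncomfortable minus acceptable) in the window.
    A more negative value means a larger N400-like negativity."""
    _, a = erp_mean_amplitude(uncomfortable, fs, tmin)
    _, b = erp_mean_amplitude(acceptable, fs, tmin)
    return a - b


# Synthetic demo: a negativity peaking at 400 ms is added to the
# "uncomfortable" trials only, on top of trial-by-trial noise.
rng = np.random.default_rng(0)
fs, tmin = 250, -0.2
t = tmin + np.arange(fs) / fs  # 1-s epochs starting 200 ms before onset
negativity = -5.0 * np.exp(-((t - 0.4) ** 2) / (2 * 0.05**2))
uncomfortable = negativity + rng.normal(0.0, 10.0, size=(60, t.size))
acceptable = rng.normal(0.0, 10.0, size=(60, t.size))
effect = n400_effect(uncomfortable, acceptable, fs, tmin)
print(f"N400 effect: {effect:.2f} uV (more negative = stronger)")
```

Averaging 60 noisy trials shrinks the noise roughly by a factor of the square root of 60, which is why a few-microvolt negativity becomes visible. Real-time decoding, as mentioned below, would instead need single-trial features and a classifier.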

These new findings, which expand on previous research, suggest the possibility of using EEGs to estimate the presence or absence of discomfort and whether agreement can be reached in general verbal-communication situations in which natural sentences are exchanged as well as in nonverbal-communication situations. For future work, we will investigate the precise conditions under which each response occurs and work toward achieving real-time brain decoding that detects states of mind from EEG data.

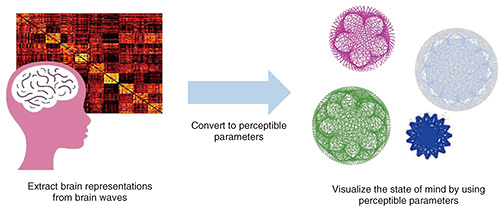

3. Brain-representation visualization technology for enabling the perception of brain expressions

Conventional verbal and nonverbal communication cannot convey an individual's states of mind, such as emotions and cognitive states, to others with 100% accuracy, and it is not easy to fully understand even one's own state of mind. Most studies on sensory communication have focused only on emotions, the best-known and most tractable type of state of mind. Emotions are mostly evaluated in terms of a few categories or along two dimensions, which makes it difficult to fully express new emotions or fine differences between emotions. However, we believe that various states of mind can be expressed by visualizing the state of brain activity during a given emotion. We therefore developed brain-representation visualization technology that enables real-time perception of brain activity as a multi-dimensional representation. To enable the transmission of various states of mind, this technology extracts brain information related to subjective perceptions and states of mind (i.e., brain representations) from EEG data, compresses these representations into seven dimensions, and uses them as parameters for making the brain state perceptible. It then draws various geometric figures according to the state of mind and displays them as animations in real time (Fig. 2).
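The article does not specify how brain representations are compressed into seven dimensions. As a minimal sketch, assuming principal component analysis (PCA) applied to per-window EEG feature vectors such as band powers per channel, a per-person projector could be fitted on calibration data and then applied to each new window. Each output axis is rescaled to [0, 1] so that it can drive a drawing parameter. The class name `SevenDimProjector`, the choice of PCA, and the feature layout are all hypothetical.

```python
import numpy as np


class SevenDimProjector:
    """Hypothetical per-person model: PCA fitted on calibration EEG feature vectors."""

    def __init__(self, n_components=7):
        self.n_components = n_components

    def fit(self, features):
        # features: (n_windows, n_features), e.g. band power per channel (assumed)
        self.mean_ = features.mean(axis=0)
        centered = features - self.mean_
        # Rows of vt are principal axes, ordered by decreasing explained variance
        _, _, vt = np.linalg.svd(centered, full_matrices=False)
        self.components_ = vt[: self.n_components]
        scores = centered @ self.components_.T
        # Calibration ranges let each axis be rescaled to [0, 1] for drawing
        self.lo_ = scores.min(axis=0)
        self.hi_ = scores.max(axis=0)
        return self

    def transform(self, feature_vector):
        score = (feature_vector - self.mean_) @ self.components_.T
        scaled = (score - self.lo_) / (self.hi_ - self.lo_ + 1e-12)
        return np.clip(scaled, 0.0, 1.0)  # values outside the calibration range are clamped


# Illustrative use with random numbers standing in for real calibration features
rng = np.random.default_rng(0)
calibration = rng.normal(size=(200, 32))  # e.g. 8 channels x 4 bands per window
projector = SevenDimProjector().fit(calibration)
params = projector.transform(rng.normal(size=32))  # seven values in [0, 1]
```

Fitting once per person and reusing the projection keeps the per-window cost to a single matrix product, which suits real-time animation.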

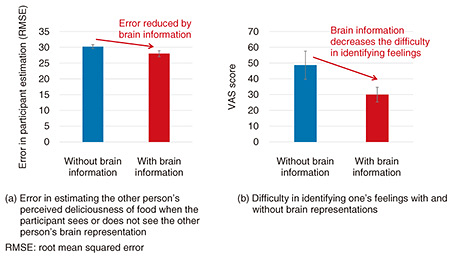

To account for individual differences in brain representations, an individual model of brain representations is created from EEGs recorded in advance. The type of sensory information included in the model is determined by the purpose through the conditions under which these EEGs are acquired; for example, EEG data are acquired while the person recalls various emotions or tastes various dishes. A geometric figure called the Rose of Venus*3 is used to express various states of mind through its size, color, and pattern shape. We experimentally verified the effectiveness of this technology with a task in which a participant used a visual analogue scale (VAS) to estimate whether another person found a food delicious. The results indicate that watching the other person's brain representation reduced the estimation error; in other words, the brain representation facilitated understanding of the other person's preference (Fig. 3(a)). In another task, participants described their feelings on a VAS after an emotional episode while looking at their own brain representations. The results indicate that looking at their brain representations reduced the difficulty of identifying their feelings; that is, the representations helped them identify their feelings (Fig. 3(b)). Our future work includes investigating how the accuracy of reading brain representations changes when this technology is used continuously in communication situations, verifying its long-term effectiveness, and studying more appropriate visualization methods. We will also continue our efforts to generate facial expressions from brain representations for people with various diseases, such as ALS (amyotrophic lateral sclerosis), and for situations in which facial expressions cannot be made (such as virtual reality environments).
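The article does not say how the Rose of Venus figure is generated or how the seven parameters map onto it. One well-known construction traces Venus's path as seen from Earth, with both planets on circular orbits: Venus completes 13 revolutions while Earth completes 8, and the 13 − 8 = 5 relative turns produce the five-fold pattern. The sketch below uses that construction, and the mapping from a state vector with entries in [0, 1] to size, color, and pattern shape is purely hypothetical, as are the function names.

```python
import colorsys
import math


def rose_of_venus(size=1.0, inner_ratio=0.723, n_points=2000):
    """Points of the Earth-centred path of Venus over 8 Earth years.

    Circular orbits are assumed; inner_ratio is Venus's orbital radius
    relative to Earth's (about 0.723). The curve closes after one full cycle."""
    points = []
    for k in range(n_points + 1):
        t = 2.0 * math.pi * k / n_points
        x = inner_ratio * math.cos(13 * t) - math.cos(8 * t)  # Venus minus Earth
        y = inner_ratio * math.sin(13 * t) - math.sin(8 * t)
        points.append((size * x, size * y))
    return points


def figure_from_state(state):
    """Hypothetical mapping from a state vector (entries in [0, 1]) to a figure:
    state[0] -> size, state[1] -> hue, state[2] -> pattern shape; others unused here."""
    size = 0.5 + state[0]
    rgb = colorsys.hsv_to_rgb(state[1], 0.8, 0.9)  # color chosen by hue
    inner_ratio = 0.4 + 0.5 * state[2]  # changes the loop shape of the pattern
    return rose_of_venus(size, inner_ratio), rgb


points, color = figure_from_state([0.5, 0.6, 0.65, 0.0, 0.0, 0.0, 0.0])
```

Redrawing the figure each time a new state vector arrives, and interpolating between successive vectors, would give the kind of real-time animation described above.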

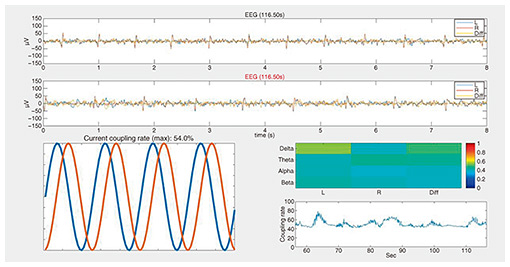

4. Neural-coupling technology for synchronizing the brain and promoting empathy and cooperation

In addition to detecting states of mind and making subjective perceptions communicable, we are striving to create an environment that fosters mutual understanding and cooperation. A previous study reported that the brain activities of two people became synchronized when they were empathizing with each other or performing cooperative tasks [3]. Given that finding, we thought it might be possible to create a state in which empathy and cooperation come easily by inducing synchronization through intervention. We believe that neurofeedback*4, which provides feedback on the current state of brain-wave synchronization, may induce such a synchronized state. Accordingly, we are researching neural-coupling technology that increases the quality and quantity of communication and thus makes shared tasks smoother. This technology executes neurofeedback by separating EEG data into four frequency bands (δ, θ, α, and β waves), calculating the coupling rate of each band in real time, and outputting the results. Participants can therefore perform cooperative tasks and communicate while viewing the current coupling rate and its overall trend on a screen (Fig. 4).
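The article does not define the coupling rate. As a minimal sketch, assume it is the phase-locking value (PLV), a standard measure of inter-brain phase synchrony: for each band, take the instantaneous phases φ1 and φ2 of the two people's signals and compute |mean(exp(i(φ1 − φ2)))| over a window. The band limits below are conventional values rather than the article's, the FFT brick-wall filter is a simplification of the filters a real system would use, and the names `band_phase` and `coupling_rates` are hypothetical.

```python
import numpy as np

# Conventional band limits in Hz; exact boundaries vary between studies (assumption).
BANDS = {"delta": (1.0, 4.0), "theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def band_phase(x, fs, lo, hi):
    """Instantaneous phase of x within [lo, hi) Hz.

    Zeroing every FFT bin outside the band and doubling the positive in-band
    bins band-pass filters the signal and forms its analytic signal in one step."""
    spectrum = np.fft.fft(x)
    freqs = np.fft.fftfreq(x.size, d=1.0 / fs)
    gain = np.where((freqs >= lo) & (freqs < hi), 2.0, 0.0)
    return np.angle(np.fft.ifft(spectrum * gain))


def coupling_rates(x1, x2, fs):
    """Phase-locking value per band between two people's EEG windows
    (0 = no consistent phase relation, 1 = constant phase difference)."""
    rates = {}
    for name, (lo, hi) in BANDS.items():
        dphi = band_phase(x1, fs, lo, hi) - band_phase(x2, fs, lo, hi)
        rates[name] = float(np.abs(np.mean(np.exp(1j * dphi))))
    return rates


# Synthetic demo: both signals share a 10 Hz (alpha) rhythm with a fixed lag,
# plus independent noise, so only the alpha band should show strong coupling.
fs = 250
t = np.arange(4 * fs) / fs  # one 4-s window
rng = np.random.default_rng(1)
person_a = np.sin(2 * np.pi * 10 * t) + 0.5 * rng.normal(size=t.size)
person_b = np.sin(2 * np.pi * 10 * t - 0.5) + 0.5 * rng.normal(size=t.size)
rates = coupling_rates(person_a, person_b, fs)
print({k: round(v, 2) for k, v in rates.items()})
```

Calling `coupling_rates` on successive overlapping windows would yield the running values and trend that a neurofeedback display could show. A fixed phase lag still gives a PLV near 1, because the measure rewards a consistent phase difference rather than zero lag.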

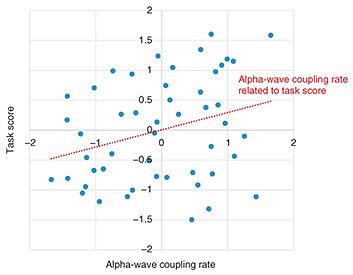

In an experiment to verify the effectiveness of this technology, participants played a cooperative video game as the cooperative task. The results confirmed a correlation between the coupling rate of alpha waves and the efficiency of the cooperative work (Fig. 5). In future work, we will (i) confirm how improving the coupling rate affects the quality and quantity of communication and task efficiency during cooperative work and (ii) investigate appropriate intervention methods for increasing the coupling rate.
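The reported relationship between alpha-band coupling and cooperative efficiency is a correlation across measurements. The article does not state which coefficient was used. The following minimal sketch assumes Pearson's r, with one mean alpha coupling rate and one task-efficiency score per session, both of which are hypothetical inputs.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences,
    e.g. per-session alpha coupling rates and task-efficiency scores (assumed)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)
```

A positive r means sessions with higher alpha coupling tended to be more efficient. As the future-work items above note, establishing that raising the coupling rate actually improves efficiency requires an intervention study rather than a correlation alone.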

5. Mental-image-reconstruction technology for reproducing mental images

It is difficult to accurately express the images in one's mind (i.e., mental images), and this difficulty leads directly to the problem of being unable to accurately convey a mental image to the other person in a conversation. With recent advances in artificial intelligence for image generation, various techniques that generate images from textual information have been developed; however, generating mental images from textual information alone has its limits. Building on previous studies [4, 5] that decoded mental images using functional magnetic resonance imaging (fMRI) and intracranial electroencephalography (electrocorticography, ECoG), we are now working toward mental-image decoding using EEG, which is easier to measure than fMRI or ECoG. As a first step, we used a category-classification task, which has been used in previous studies, to experimentally verify whether the content of perceived and imagined images can be estimated. For the three image categories used in this experiment (landscape, vehicle, and human face), the estimation accuracy exceeded the chance level for perceived images, but there is room for improvement for imagined images. In future work, we will attempt to improve the accuracy of category estimation by studying models optimized for decoding perceived and imagined images and by selecting appropriate EEG features. We will also aim to achieve mental-image reconstruction by studying forms of decoding beyond category classification.

6. Concluding remarks

By using the various neuroscience-based technologies introduced in this article, we aim to enable (i) communication that promotes awareness of one another's differences, empathy, and compassion and (ii) communication that achieves mutual understanding by making it possible to express states of mind that could not be expressed until now.
To achieve these aims, we will continue to work toward a world in which we can understand and cooperate with one another by making it possible to share the subjective perceptions and states of mind necessary for mutual understanding and mutual respect, rather than by transmitting all our true feelings and inner thoughts.

References
