Feature Articles: International Standardization Trends

Vol. 22, No. 1, pp. 43–49, Jan. 2024. https://doi.org/10.53829/ntr202401fa5

Standardization Trends Related to Application- and Service-related Technologies

Abstract

This article describes the standardization trends in the International Organization for Standardization/International Electrotechnical Commission Joint Technical Committee 1 (ISO/IEC JTC 1)/Subcommittee 29 (SC 29) and International Telecommunication Union - Telecommunication Standardization Sector (ITU-T) Study Group 16 (SG16), as well as in ISO/IEC JTC 1/SC 27 and ITU-T SG17. Both SC 29 and SG16 deal with multimedia coding and transmission and are shaping the future of entertainment and business communication. Both SC 27 and SG17 work on standardization related to information security and communication security and are helping to strengthen the reliability and privacy protection of the rapidly evolving digital society.

Keywords: security, multimedia, international standardization

1. Standardization trends related to multimedia coding

The International Organization for Standardization/International Electrotechnical Commission Joint Technical Committee 1 (ISO/IEC JTC 1)/Subcommittee 29 (SC 29) previously had two Working Groups (WGs): WG 1, commonly known as JPEG (Joint Photographic Experts Group), and WG 11, commonly known as MPEG (Moving Picture Experts Group). JPEG developed coding schemes, formats, and transmission methods for still images, while MPEG developed coding schemes, formats, and transmission methods for video and audio. Both WGs periodically produced media codecs and transmission methods, such as JPEG and Advanced Video Coding (AVC)/H.264, thus contributing to the growth of digital media. However, the variety of media handled by MPEG grew so diverse and the number of participants in MPEG became so large (several hundred) that it became inefficient for MPEG to continue working as a single WG.
To solve this problem, in July 2020, SC 29 created seven WGs based on the subgroups that existed within WG 11, which handle video, audio, systems, and other areas, respectively; three Advisory Groups (AGs), which support these WGs in working together as a single MPEG team; and one AG, which supports cooperation between MPEG and JPEG. This reorganization was an important step to keep pace with evolution in technology and changes in industries and to ensure rapid and efficient standardization in an era when the technical domains of the video, still image, audio, and other media industries overlap. It has enabled SC 29 to address a broader and more diverse range of applications and develop standards not only in the areas of video, audio, and still images but also in new areas such as artificial intelligence (AI), genomics, and medical imaging.

Although WG 11 ceased to exist, the name MPEG continues to be used as the common name for the cross-cutting organization consisting of WG 2 through WG 8 and AGs 2, 3, and 5, and as the generic name for the standards created by these WGs. The international meeting that these groups hold four times a year is also called the MPEG Meeting. The current WG structure of SC 29 and the name and activities of each WG are described below.

1.1 JPEG

WG 1 works on compression coding and formats of still and other images. It is standardizing still-image codecs, such as JPEG XL, which offers excellent compatibility for web applications, and JPEG XS, which provides low-latency lossless encoding for industrial applications. WG 1 is also developing standards for the JPEG Pleno series, which includes codecs for high-dimensional media such as light fields, point clouds, and holography. It is actively incorporating AI technology and studying learning-based tools for JPEG AI and JPEG Pleno Point Cloud.

1.2 MPEG

As mentioned above, the seven WGs, from WG 2 through WG 8, cooperate to develop common standards under the name of MPEG. The latest series is ISO/IEC 23090 MPEG-I, which targets immersive applications, such as virtual reality (VR) and augmented reality (AR), and covers a wide range of media, including video, audio, and three-dimensional (3D) point clouds.

In preparation for starting new standardization activities, WG 2 conducts preliminary discussions, such as collecting use cases and identifying requirements. Once these discussions have made sufficient progress, each activity moves to a more specialized WG, where technical proposals are solicited through a Call for Proposals (CfP) and the standardization work proceeds in earnest.

WG 3 standardizes the formats of media that are coded with a range of codecs, various metadata used by applications, and transmission methods. It is currently standardizing MPEG-I Part 14: Scene Description for MPEG Media, a system for describing scenes composed of multiple media using an integrated approach.

WG 4 standardizes video codecs. It is currently standardizing two specifications: MPEG-I Part 12: Immersive Video, which uses depth information for 3 degrees of freedom (3-DoF)/6-DoF representation of video, and NNC (MPEG-7 Part 17: Compression of Neural Networks for Multimedia Content Description and Analysis), which compresses neural networks. WG 4 will soon begin the standardization of Video Coding for Machines (VCM) for coding video and images that are used in machine-learning tasks.

WG 5 collaborates with ITU-T Study Group 16 (SG16) to standardize video codecs. Since high-compression video codecs are of great industrial importance, ITU-T SG16 and ISO/IEC JTC 1/SC 29 have formed joint working teams and developed new standards on an approximately 10-year cycle. The current team, known as the Joint Video Experts Team (JVET), completed the first version of VVC (MPEG-I Part 3: Versatile Video Coding) in 2020. VVC is a higher-compression, multifunctional standard that succeeds HEVC/H.265 (MPEG-H Part 2: High Efficiency Video Coding). As the name “versatile” implies, VVC was designed to support a wide range of applications. For example, its first version already supports high dynamic range, 360° video, and screen content, which HEVC supported only as extensions from its second version onward. In 2022, WG 5 completed the second version of VVC, which features bit-depth extensions for industrial applications. WG 5 will continue to improve compression efficiency and introduce learning-based tools.

WG 6 standardizes audio and acoustic codecs. In 2019, it completed the standardization of MPEG-H Part 6: 3D Audio, an object-based audio codec. It is currently working on the standardization of MPEG-I Part 4: Immersive Audio with the aim of completing it in 2025.

WG 7 standardizes codecs for point clouds, haptic data, and other media that use 3D representations. The codecs for point clouds include V-PCC (MPEG-I Part 5: Video-based Point Cloud Compression), which is tailored to dense, time-varying point clouds (such as humans and small objects) like those used in VR; G-PCC (MPEG-I Part 9: Geometry-based Point Cloud Compression), which can represent large-scale and sparse objects and scenes, such as LiDAR (light detection and ranging) data; and a standard derived from G-PCC.

V-PCC converts the 3D positions and attribute information, such as color, of a point cloud into images and encodes them with any type of video codec. The first version was completed in 2021. The standardization of V-DMC (MPEG-I Part 29: Video-based Dynamic Mesh Compression), which extends the coding target to dynamic meshes, is underway.

G-PCC represents the positions of a point cloud in a tree structure and applies predictive encoding to the attribute information of the point cloud. The first version was completed in 2022. The second version, which extends the coding target to dynamic scenes and larger scenes, is being standardized. NTT is actively submitting proposals for the second version of G-PCC.

Since July 2023, WG 7 has been preparing to issue a CfP for AI-based 3D Graphics Coding, which uses AI for compression. Other standards being studied by WG 7 include MPEG-IoMT (Internet of Multimedia Things), which is an application programming interface (API) for handling multimedia content with the Internet of Things (IoT), and MPEG-I Part 31: Haptics Coding, a codec for haptic data.
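
The idea of representing point-cloud positions in a tree structure can be illustrated with a toy octree. The following Python sketch is only an illustration under simplifying assumptions, not the G-PCC bitstream or algorithm: the function names `octree_occupancy` and `decode_octree` are hypothetical, coordinates are assumed to be non-negative integers below `2**depth`, and the entropy coding and attribute prediction that a real codec applies are omitted. Each tree node is summarized by one occupancy byte whose bits indicate which of its eight child cubes contain points.

```python
from typing import Dict, List, Tuple

Point = Tuple[int, int, int]


def _child_origin(origin: Point, idx: int, half: int) -> Point:
    """Origin of child cube `idx` (bit 2 = x, bit 1 = y, bit 0 = z)."""
    return (
        origin[0] + half * ((idx >> 2) & 1),
        origin[1] + half * ((idx >> 1) & 1),
        origin[2] + half * (idx & 1),
    )


def octree_occupancy(points: List[Point], depth: int) -> List[int]:
    """Return breadth-first occupancy bytes for points in [0, 2**depth)^3."""
    codes: List[int] = []
    nodes = [((0, 0, 0), sorted(set(points)))]
    for level in range(depth):
        half = 1 << (depth - level - 1)  # edge length of the child cubes
        next_nodes = []
        for origin, pts in nodes:
            children: Dict[int, List[Point]] = {}
            for p in pts:
                idx = (
                    (int(p[0] - origin[0] >= half) << 2)
                    | (int(p[1] - origin[1] >= half) << 1)
                    | int(p[2] - origin[2] >= half)
                )
                children.setdefault(idx, []).append(p)
            byte = 0
            for idx in children:
                byte |= 1 << idx  # mark the child cube as occupied
            codes.append(byte)
            for idx in sorted(children):
                next_nodes.append((_child_origin(origin, idx, half), children[idx]))
        nodes = next_nodes
    return codes


def decode_octree(codes: List[int], depth: int) -> List[Point]:
    """Rebuild the point positions from the occupancy bytes."""
    origins: List[Point] = [(0, 0, 0)]
    it = iter(codes)
    for level in range(depth):
        half = 1 << (depth - level - 1)
        next_origins = []
        for origin in origins:
            byte = next(it)
            for idx in range(8):
                if byte & (1 << idx):
                    next_origins.append(_child_origin(origin, idx, half))
        origins = next_origins
    return origins


if __name__ == "__main__":
    cloud = [(0, 0, 0), (1, 0, 0), (7, 7, 7)]
    occupancy = octree_occupancy(cloud, depth=3)
    print(occupancy)
    print(decode_octree(occupancy, depth=3))
```

In this sketch, three points in an 8 × 8 × 8 grid are described by five occupancy bytes, and empty regions of space cost nothing, which hints at why tree structures suit sparse data such as LiDAR scans.
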

WG 8 standardizes the MPEG-G series, which covers codecs for genome data as well as various metadata and APIs for genome data analysis. It completed a series of standards in 2020 and is studying further functional expansion.

1.3 AGs

As described above, SC 29 works on standardization of a wide range of multimedia, from codecs, formats, and systems for multimedia to representation of neural networks used for media processing. SC 29 plans to expand the range of media it handles and develop codecs that make full use of AI technology, and these aspects are attracting attention.

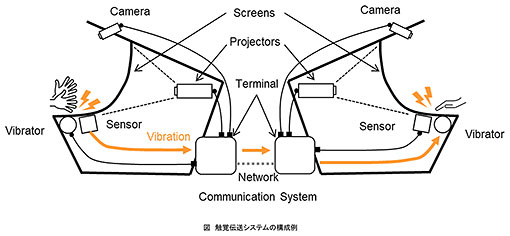

2. Standardization trends related to multimedia transmission

International standardization is also advancing in the field of applications that transmit multimedia content to remote locations. As described above, ITU-T SG16 promotes multimedia coding in collaboration with ISO/IEC JTC 1/SC 29. It also studies standardization of Internet-protocol television (IPTV), digital signage, and other systems that transmit multimedia content to remote locations and play it there, as well as technologies for live transmission of multimedia elements besides video and audio. The latter aim to provide ultra-realistic experiences, enabling people at remote locations to feel as if they are actually present where a particular event is unfolding.

In its early days, digital signage was normally used to distribute images on fixed displays in commercial buildings and train stations. It has also recently been mounted on autonomous robots connected to a network, such as delivery robots, which function as moving digital signage. Digital signage is also used as telepresence terminals, terminals in virtual spaces, and terminals for communication that conveys ultra-realistic sensations. The last of these is discussed below. SG16 standardizes the requirements for the multimedia functions needed in the above applications.

Standardization is in progress for a technology that makes more advanced use of video-transmission technology to implement services in which users can experience an event as if they were actually present at the event site in real time, rather than simply watching a screen, as with conventional television. This technology, called immersive live experience (ILE), transmits 3D images that appear life size from the event site to a remote location (presentation side) in real time, giving the audience a sense of ultra-high reality that exceeds what can be reproduced with the flat images and sound of a television set.
Generating a sense of immersion on the presentation side involves technologies such as VR and AR (recently often referred to collectively as XR (extended reality)). It also involves a series of processes, from sensing on the event side (corresponding to video capture with a camera) to real-time transmission and processing and reproduction on the presentation side (corresponding to video display). Therefore, it is necessary to consider this series of processes as a system and standardize the system architecture and protocols necessary for generating an ultra-realistic experience in real time. Another important role of standardization is to ensure international interoperability so that this ultra-realistic experience can be delivered across national borders.

Furthermore, ITU-T SG16 has recently begun to study a technology for transmitting tactile information. The first recommendation for this technology was developed and agreed upon at the SG16 meeting in July 2023 under the leadership of NTT and is expected to be published by the end of FY2023 after passing through the approval process. An example of how this technology is used is shown in Fig. 1. SG16 is expected to develop further recommendations on using tactile information to enhance the ultra-realistic experience of ILE and to study extending ILE by transmitting other information besides tactile information. A Focus Group (FG) related to the metaverse, which has been attracting attention, has been established in ITU. The FG might study the relationship between the metaverse and ILE.

3. Standardization activities in the security field

ISO/IEC JTC 1/SC 27 and ITU-T SG17 are de jure standardization organizations that deal with security. This section introduces and briefly compares these two organizations.

3.1 ISO/IEC JTC 1/SC 27

ISO/IEC JTC 1/SC 27 deals with methods, technologies, guidelines, etc. related to information security, cyber security, and privacy protection. It has five WGs, from WG 1 through WG 5.

The WG structure of SC 27 has been largely static, and the scope of each WG has not changed significantly, although there have been some additions and minor changes in names.

3.2 ITU-T SG17

ITU-T SG17 (Security) deals with security as a whole. While security issues specific to particular fields are handled by other SGs, SG17’s role is to coordinate the security issues handled by the various SGs in ITU-T. This role is quite similar to that of ISO/IEC JTC 1/SC 27. However, SG17 differs from SC 27 in that it also covers directories, object identifiers, and technical languages in addition to security. A representative recommendation made by SG17 is X.509, which defines a standard format for digital certificates. The syntax notation ASN.1 (Abstract Syntax Notation One), which was standardized by SG17, is also well known.

As of July 2023, SG17 has five Working Parties (WPs), from WP 1 through WP 5, and handles 12 Questions. Each Question belongs to one of the WPs. The structure of WPs and Questions is more dynamic than that of the WGs in SC 27. For example, Questions are added or merged as discussions or technologies advance (which is why some Question numbers are skipped). The scope of a particular Question may be expanded, and WPs and Questions may be extensively reorganized or rearranged at regular intervals.

(1) WP 1 (Security strategy and coordination)

(2) WP 2 (5G, IoT and ITS security)

(3) WP 3 (Cybersecurity and management)

(4) WP 4 (Service and application security)

(5) WP 5 (Fundamental security technologies)

3.3 Comparison between ISO/IEC JTC 1/SC 27 and ITU-T SG17

The differences in organizational structure between ISO/IEC JTC 1/SC 27 and ITU-T SG17 are reflected in the differences between the corresponding Japanese national committees. The Japanese national committee that corresponds to SC 27 does not itself conduct technical studies. Technical studies are primarily carried out by the national subcommittees. There are five national subcommittees, numbered 1 to 5, corresponding to the international WGs. In contrast, the Japanese national committee that corresponds to SG17 conducts technical discussions on all relevant matters. There are no subcommittees that correspond to the international WPs. (Of course, within the national committee, a person is selected to be in charge of each issue discussed.) Considering that one international organization has a static structure and the other a dynamic structure, it seems reasonable that the Japanese national committees are organized in this way.

It is not surprising that SC 27 and SG17 deal with many common technical issues, but in more than a few cases their work on these issues is complementary. Regarding public key cryptography, for example, SC 27 defines cryptographic algorithms and digital signature schemes, while SG17 defines the standard format and verification algorithms for public key infrastructure (PKI). The technologies defined by both organizations are essential for the use of public key cryptography. Likewise, many of the issues concerning guidelines and frameworks for security management and network security that are discussed in the two organizations are deeply related. Therefore, there are liaison teams (groups of people who participate in the meetings of both organizations and stimulate the exchange of information between them) in both directions between SC 27 and SG17, enabling the two organizations to work closely in undertaking standardization.
3.4 Activities in ISO/IEC JTC 1/SC 27/WG 2

This section outlines the work trends in SC 27/WG 2, in which the authors are actively involved, focusing on the standardization of a technology called secure computation, which analyzes (computes on) data in encrypted form.

Let us consider a case in which a client collects data on a cloud server and analyzes the data. Ordinary cryptographic technology protects (1) the data exchanged between the client and cloud server and (2) the data stored on the cloud server by encrypting them. However, the data must be decrypted during analysis. In contrast, secure computation allows data analysis to be done without decryption. In other words, in addition to protecting (1) and (2), secure computation protects (3) the data being analyzed on the cloud server by keeping the data encrypted. Secure computation can analyze personal data of individuals or trade secrets of companies without decrypting them. To put it simply, secure computation enables us to utilize data without seeing its content.

Secure computation is now attracting much attention not only because it allows secure data processing but also because it makes it possible to bring together data that would otherwise be difficult to disclose to other organizations and use the data for applications that transcend the boundaries of companies and industries. It has been listed in Gartner’s top strategic technology trends as part of a technology group called privacy enhancing computation (PEC). Considering the growing interest in secure computation, NTT promoted its standardization, and SC 27/WG 2 began the standardization work on the ISO/IEC 4922 series in 2020. The series consists of two parts: ISO/IEC 4922-1, which covers overall aspects of secure computation, and ISO/IEC 4922-2, which covers secret sharing-based secure computation.
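
As a rough illustration of how computation on secret-shared data can work, the following Python sketch uses a simple additive secret sharing scheme among three hypothetical servers. It is a toy example under stated assumptions, not the schemes specified in ISO/IEC 19592 or ISO/IEC 4922-2: the modulus, the number of parties, and the function names `share`, `add_shared`, and `reconstruct` are all chosen here for illustration. Each value is split into random-looking shares, the servers add their shares locally, and only the sum is ever reconstructed.

```python
import secrets
from typing import List

# Illustrative modulus (an assumption for this sketch): all arithmetic is mod a prime.
MODULUS = 2**61 - 1


def share(value: int, n_parties: int = 3) -> List[int]:
    """Split `value` into n_parties additive shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def add_shared(a: List[int], b: List[int]) -> List[int]:
    """Each party adds its own two shares; no party sees the inputs."""
    return [(x + y) % MODULUS for x, y in zip(a, b)]


def reconstruct(shares: List[int]) -> int:
    """Combine all shares to recover the value."""
    return sum(shares) % MODULUS


if __name__ == "__main__":
    # Two organizations contribute confidential figures as shares.
    shares_a = share(52_000)
    shares_b = share(61_000)

    # The servers compute on shares only; the total is revealed at the end.
    total_shares = add_shared(shares_a, shares_b)
    print(reconstruct(total_shares))
```

Addition is the easy case because it needs no communication between the servers. Operations such as multiplication or comparison require interactive protocols, which this sketch does not attempt to show.
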
NTT is playing a leading role in the development of both ISO/IEC 4922-1 and 4922-2, with its employees serving as the editors for these standards. Secret sharing-based secure computation uses a method called secret sharing, which converts data into special fragments called shares. Secret sharing has been standardized as the ISO/IEC 19592 series. ISO/IEC 4922-1 was published in July 2023, and ISO/IEC 4922-2 is in the final stage and is expected to be published soon. There have been moves to standardize other methods of secure computation, a sign that secure computation will continue to attract interest.

3.5 Other security-related standards discussed in ISO/IEC JTC 1/SC 27/WG 2

In addition to secure computation, SC 27/WG 2 is discussing various cryptographic schemes, from basic ones (block ciphers and hash functions) to those with more advanced functions and properties, such as anonymous authentication and post-quantum cryptography. In particular, post-quantum cryptography, which is designed to remain secure even against attacks using quantum computers, has been actively discussed. Since it is known that quantum computers can theoretically break RSA (Rivest–Shamir–Adleman) signatures and other cryptographic schemes, advances in quantum computers have prompted standardization work on this form of cryptography.

The standardization of post-quantum cryptography is strongly affected by the results of the post-quantum cryptography competition held by the National Institute of Standards and Technology (NIST). This is why WG 2 is still at the stage of releasing a standing document (SD), which describes the content of discussions in the WG, as a preliminary step toward standardization. Since the NIST competition ended in July 2022, the standardization work at WG 2 is expected to advance in the coming years.
