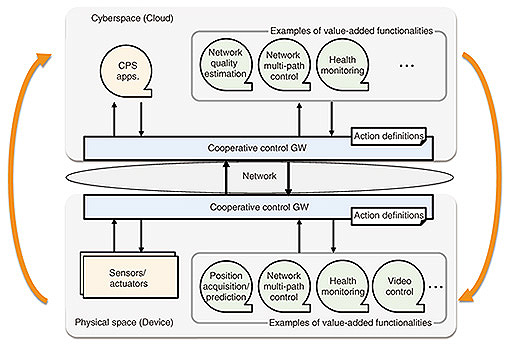

Feature Articles: Network Architecture in the 6G/IOWN Era: Inclusive Core

Vol. 22, No. 12, pp. 40–46, Dec. 2024. https://doi.org/10.53829/ntr202412fa4

Cooperative Infrastructure Platform to Accommodate Mission-critical CPS Services

Abstract

This article introduces the Cooperative Infrastructure Platform. We aim to establish this platform as a foundation for promoting social implementation of cyber-physical system (CPS) services, which hold promise for addressing various social challenges arising from factors such as population decline. In addition to the concept and overall architecture, we discuss the key technologies that contribute to the mission-critical video transmission required for remote monitoring of Level 4 autonomous driving (fully automated driving under specific conditions), a prime example of a CPS service use case. We also present the results of real-world demonstrations that integrate these technologies.

Keywords: IOWN, cyber-physical systems, autonomous driving remote monitoring

1. Toward social implementation of cyber-physical systems

Recent advances in information and communication technology, such as the 5th-generation mobile communication system (5G) and 6G, have paved the way for cyber-physical systems (CPSs), which were previously challenging to implement. A CPS is a system that collects a wide variety of data in physical space, analyzes the collected data in cyberspace, then provides control instructions and actions to physical space on the basis of the results of the analysis. Examples of CPS services include automated driving of vehicles (passenger cars, logistics trucks, agricultural machinery, etc.) and their remote monitoring, smart cities, and smart factories. CPS services hold immense potential for addressing two key challenges: labor shortages due to an aging population and a shrinking workforce, and the need for increased productivity and efficiency.
By automating tasks and enabling data-driven insights, CPS services can streamline operations, optimize resource allocation, and lead to higher added value.

Because CPS services have characteristics that are quite different from those of conventional web applications, the requirements for a platform that accommodates them are also different. For instance, CPS services interact directly with the physical space, meaning that failures can have tangible consequences. In remote driving scenarios, for example, a delayed stop instruction from cyberspace could leave the vehicle unable to stop in time. Therefore, the entire system comprising a CPS service must exhibit high reliability and real-time performance, ensuring that processes are completed within predetermined deadlines. Robust fault detection and mitigation mechanisms are also crucial: the system should be capable of identifying anomalies or failures and taking corrective actions to minimize their impact on overall system functionality.

As CPS services are distributed systems spanning both cyberspace and physical space, a unified system for information exchange is required. A platform should facilitate the seamless sharing of sensing data from the physical space and of actuation commands directed back into it. The versatility of CPS services, with their wide array of applications, necessitates a platform capable of accommodating diverse control applications, sensors, and actuators. Ideally, this platform should allow for easy customization and fine-tuning of processing workflows to suit specific use cases.

To meet the unique demands of accommodating CPS services, NTT Network Service Systems Laboratories is developing the Cooperative Infrastructure Platform. This platform is aimed at facilitating the widespread adoption and social implementation of CPS services.
The Cooperative Infrastructure Platform is being developed as an early version of the Inclusive Core for seamlessly integrating and coordinating cyberspace with physical space. This article provides an overview of the platform and explores the key technologies enabling remote monitoring of vehicles under Level 4 autonomous driving (fully automated driving under specific conditions), a prime example of a CPS service use case. The concept and overall architecture of the Cooperative Infrastructure Platform are described in the following section.

2. Concept and architecture of Cooperative Infrastructure Platform

The Cooperative Infrastructure Platform tightly integrates device-side functions, cloud-based information processing, and network connectivity to create a robust platform capable of supporting mission-critical CPS services [1]. The overall architecture of the Cooperative Infrastructure Platform is illustrated in Fig. 1. A cooperative control gateway (GW) is deployed in both physical space (device side) and cyberspace (cloud side). This GW provides the essential functions for accommodating CPS services.

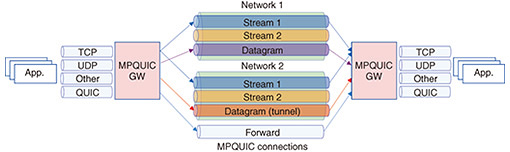

The three primary functions provided by the cooperative control GW are (1) accommodation of diverse components, (2) information exchange between devices and the cloud, and (3) flexible customization of control processing tailored to specific use cases through integration with value-added functions.

This GW is based on Robot Operating System 2 (ROS2), the de facto standard in robot development. It enables information exchange with various ROS2-based sensors and actuators without any coding. It also partially supports communication protocols such as HTTP (Hypertext Transfer Protocol), gRPC (gRPC Remote Procedure Calls), and MQTT (Message Queuing Telemetry Transport).

The GW also handles communication between the device and cloud. This allows for seamless data exchange over wide-area networks without requiring any special configuration on either the device or cloud side.

The GW also enables users to define actions triggered by information received from accommodated components. These actions can be described in a file, which enables a wide range of functionalities beyond simple data exchange, such as decision-making based on received information, data processing, and conditional branching. Users can flexibly customize these actions in accordance with specific use cases and environments.

Beyond these core functionalities, the Cooperative Infrastructure Platform offers a variety of valuable features essential for CPS services. These include log monitoring for each component and device-location tracking/prediction capabilities. By combining these features with the action description mechanism, CPS service providers can significantly streamline the development process and reduce time-to-market. Thus, the Cooperative Infrastructure Platform leverages both the basic functions provided by the cooperative control GW and value-added functions to support CPS services with the unique requirements previously mentioned.
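As a rough illustration of the action description mechanism, the following Python sketch shows how rules declared as data could map received messages to actions through conditional branching. The article does not specify the GW's actual file format or API, so the `Rule` structure, the topic name, the thresholds, and the `dispatch` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass
class Rule:
    """Hypothetical action rule: if a message on `topic` satisfies `condition`, emit `action`."""
    topic: str
    condition: Callable[[Dict[str, Any]], bool]
    action: str


# Hypothetical rules. In the platform, such actions would be described in a file;
# they are written inline here only to keep the sketch self-contained.
RULES: List[Rule] = [
    Rule("network/quality", lambda m: m["throughput_mbps"] < 1.0, "safe_stop"),
    Rule("network/quality", lambda m: m["throughput_mbps"] < 5.0, "reduce_speed"),
]


def dispatch(topic: str, message: Dict[str, Any],
             rules: Optional[List[Rule]] = None) -> Optional[str]:
    """Return the action of the first matching rule, or None if no rule matches."""
    for rule in (RULES if rules is None else rules):
        if rule.topic == topic and rule.condition(message):
            return rule.action
    return None
```

Rules are evaluated in order, so the stricter condition is listed first. Changing behavior for a new use case then means editing the rule list rather than the dispatching code.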
We have been demonstrating scenarios for advanced Level 3 autonomous driving of agricultural machinery, achieving fully unmanned operation under remote supervision. These scenarios include smooth wide-area automated driving while switching between multiple access networks (regional broadband wireless access and carrier 5G). Another scenario involves safe stopping triggered by the detection of network-quality degradation [2]. We are now expanding our use cases to achieve Level 4 automated driving. The following section introduces key technologies that provide value-added functions for reliable remote monitoring of vehicles.

3. Enabling remote vehicle monitoring for Level 4 autonomous driving: key technologies

This section focuses on key technologies for enabling remote vehicle monitoring, a capability that is crucial for Level 4 autonomous driving, which requires remote video monitoring of both the inside and outside of the vehicle. However, when a vehicle uses mobile communication while moving at high speed, network quality can vary significantly due to numerous interference factors. This results in video interruptions and degradation, impacting the stability and continuity of monitoring. To enable stable remote vehicle monitoring even in unstable network conditions, we combine several key technologies for robust and real-time data transmission. We introduce three key technologies: the Multi-path QUIC Gateway (MPQUIC GW), Quality Index Map (QIM), and video control technology.

3.1 MPQUIC GW

The MPQUIC GW [3] handles both user traffic between devices and multi-access edge computing and control traffic for the Cooperative Infrastructure Platform. The MPQUIC GW transparently forwards any Transmission Control Protocol (TCP), User Datagram Protocol (UDP), or other communication using stream and datagram frames from the multi-path extension for QUIC (MPQUIC) [4], a technology currently under standardization (Fig. 2).
The MPQUIC GW provides various network control functionalities for optimized packet transmission, including network selection based on both predicted and actual network quality measurements across multiple networks. This ensures low-latency, reliable communication even if one network degrades.

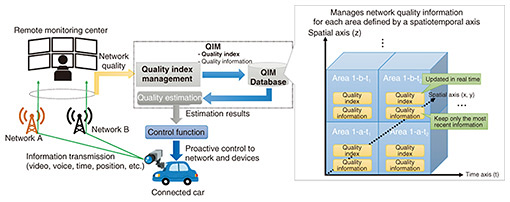
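To make the idea of network selection from predicted and actual quality concrete, the sketch below picks one of several access networks using a simple pessimistic cost. It is an illustrative assumption, not the MPQUIC GW's actual algorithm: the `PathQuality` fields, the cost formula, and the `loss_penalty_ms` weight are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class PathQuality:
    """Hypothetical per-network quality snapshot."""
    name: str
    predicted_rtt_ms: float  # forecast for the upcoming interval (assumed input)
    measured_rtt_ms: float   # most recent measurement on this path (assumed input)
    measured_loss: float     # packet loss ratio in [0, 1]


def path_cost(path: PathQuality, loss_penalty_ms: float = 1000.0) -> float:
    """Pessimistic cost: the worse of predicted and measured RTT, plus a loss penalty."""
    worst_rtt = max(path.predicted_rtt_ms, path.measured_rtt_ms)
    return worst_rtt + loss_penalty_ms * path.measured_loss


def select_path(paths: Sequence[PathQuality]) -> PathQuality:
    """Return the path with the lowest cost."""
    if not paths:
        raise ValueError("at least one path is required")
    return min(paths, key=path_cost)
```

Taking the worse of the predicted and measured values means a path is avoided either when degradation is forecast or when it is already being observed, which mirrors the use of both information sources described above.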

3.2 QIM

To ensure stable data transmission over networks with fluctuating wireless access, we need to predict and address significant network quality degradation before it impacts communication. This might involve actions such as network switching by the MPQUIC GW. To proactively detect network degradation, we introduce QIM [5] (Fig. 3). This technology represents and stores network quality characteristics as probability distributions within a spatiotemporal domain to estimate network quality. QIM passively assesses network quality by analyzing the service data (e.g., video information from vehicles) and continuously updates a quality index reflecting the observed network quality. This approach enables highly accurate estimation of fluctuating network quality.

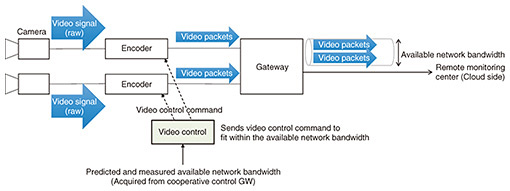
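One simple way to picture quality stored as probability distributions over space and time is a histogram per location cell and hour of day, updated from passive observations. The sketch below is a toy model under that assumption. The class name, the grid and bin sizes, and the quantile query are hypothetical and do not describe the actual QIM design.

```python
from collections import defaultdict
from typing import Optional


class QualityIndexMapSketch:
    """Toy spatiotemporal map: one throughput histogram per (grid cell, hour of day)."""

    def __init__(self, cell_deg: float = 0.001, bin_mbps: float = 1.0) -> None:
        self.cell_deg = cell_deg  # grid cell size in degrees (assumed)
        self.bin_mbps = bin_mbps  # histogram bin width in Mbit/s (assumed)
        self.hist = defaultdict(lambda: defaultdict(int))

    def _key(self, lat: float, lon: float, hour: int):
        return (int(lat // self.cell_deg), int(lon // self.cell_deg), hour % 24)

    def update(self, lat: float, lon: float, hour: int, throughput_mbps: float) -> None:
        """Record one passively observed throughput sample."""
        bin_index = int(throughput_mbps // self.bin_mbps)
        self.hist[self._key(lat, lon, hour)][bin_index] += 1

    def quantile(self, lat: float, lon: float, hour: int, q: float = 0.1) -> Optional[float]:
        """Return the lower edge of the bin holding the q-quantile, or None without data."""
        key = self._key(lat, lon, hour)
        if key not in self.hist:
            return None
        bins = self.hist[key]
        total = sum(bins.values())
        cumulative = 0
        for bin_index in sorted(bins):
            cumulative += bins[bin_index]
            if cumulative >= q * total:
                return bin_index * self.bin_mbps
        return None
```

Querying a low quantile rather than the mean gives a conservative estimate of the throughput likely to be available at a given place and time, which suits proactive decisions such as switching networks before degradation occurs.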

3.3 Video control

The video control function adjusts the video encoding method to dynamically manage the amount of video data transmitted on the basis of network conditions (such as available bandwidth). By adapting the video data rate to the available bandwidth, this function ensures smooth video transmission with minimal latency even under challenging network conditions. It does so by accepting some reduction in video quality to maintain uninterrupted streaming (Fig. 4).

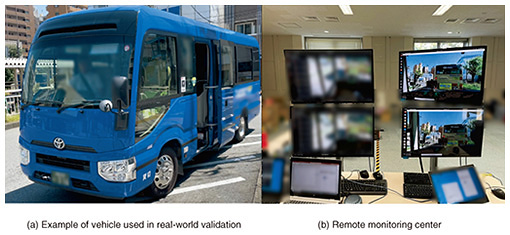

The video control function determines optimal encoding parameters on the basis of both predicted and measured network quality and communicates these parameters to the encoder, instructing it on the appropriate encoding method. When network quality is good, it instructs the encoder to transmit at a higher bitrate. Conversely, if poor network quality or limited bandwidth is predicted, it reduces the bitrate to ensure uninterrupted streaming. The function also continuously monitors real-time network performance. If a sudden drop in quality is detected, it promptly instructs the encoder to lower the bitrate (Fig. 4). By leveraging both predicted and real-time network quality data, the video control function ensures smooth, real-time video streaming for remote vehicle monitoring while preserving as much video quality as possible.

3.4 Cooperative control among key technologies

The Cooperative Infrastructure Platform leverages the synergy of the key technologies described earlier. The goal is to achieve the real-time, highly reliable, and low-latency video transmission essential for remote vehicle monitoring. The MPQUIC GW optimizes packet transmission by combining predicted network quality from the QIM with real-time network quality measurements. This allows for intelligent selection of the best network path for each packet, minimizing delay and maximizing bandwidth even under fluctuating conditions. Network quality information is also shared with the video control function. This enables dynamic adjustment of video-traffic volume to match the available bandwidth, preventing interruptions and latency issues. By having network-layer and application-layer functions work together rather than independently, this approach achieves a stable video transmission system.
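The bitrate adjustment described above, driven by both predicted and measured network quality, can be sketched as a small selection function. This is a minimal illustration under stated assumptions rather than the platform's actual logic: the bitrate ladder, the `headroom` factor, and the function name are hypothetical.

```python
from typing import Sequence


def choose_bitrate_kbps(
    predicted_kbps: float,
    measured_kbps: float,
    ladder: Sequence[int] = (500, 1000, 2500, 5000, 8000),
    headroom: float = 0.8,
) -> int:
    """Pick the highest ladder bitrate that fits within the usable bandwidth.

    The usable bandwidth is a fraction (`headroom`) of the lower of the predicted
    and measured values, so either a forecast or an observed drop lowers the bitrate.
    If nothing fits, the lowest ladder bitrate is returned to keep the stream alive.
    """
    usable = headroom * min(predicted_kbps, measured_kbps)
    candidates = [bitrate for bitrate in ladder if bitrate <= usable]
    return max(candidates) if candidates else min(ladder)
```

For example, with ample bandwidth on both inputs the function returns the top of the ladder, while a measured value of 2000 kbit/s yields 1000 kbit/s regardless of an optimistic prediction. The result would then be passed to the encoder as its target bitrate.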
The Cooperative Infrastructure Platform provides a versatile framework for integrating and coordinating various value-added functions. This is achieved through the cooperative control GW, which acts as a central hub. Offered to CPS services, the platform enables the development of tailored control systems for diverse use cases.

3.5 Real-world validation

To demonstrate the effectiveness of the Cooperative Infrastructure Platform for autonomous-vehicle remote monitoring, we are conducting real-world tests by driving vehicles on public roads. The results of the verification are shown in Fig. 5. Assuming remote monitoring of a vehicle, a camera, an encoder, and Cooperative Infrastructure Platform components were mounted inside the vehicle, and the vehicle was driven in a specific area (Fig. 5(a)). The video data captured with the in-vehicle camera were transmitted to and displayed on the remote control system in real time (Fig. 5(b)).

Verification results indicate that when the Cooperative Infrastructure Platform is not implemented, frequent and prolonged communication outages occur, depending on the vehicle’s location and time of day. However, implementing this platform significantly improves connectivity. We are continuing verification efforts to identify real-world challenges and further enhance the system’s functionality.

4. Future development

We presented an overview of the Cooperative Infrastructure Platform we are developing to accelerate the social implementation of mission-critical CPS services. We also discussed various enabling technologies and demonstrated how this platform will enable remote monitoring of autonomous vehicles. We aim to further develop and refine the core technologies of this platform, expanding its application to a variety of use cases requiring highly reliable wireless communication. These include not only autonomous driving but also smart factories and advanced smart agriculture enabled by the collaboration and coordination of diverse devices such as drones.

References
