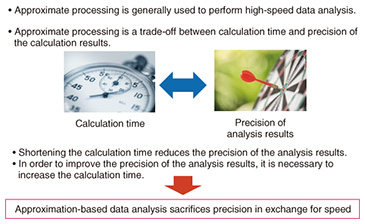

Rising Researchers

Vol. 22, No. 12, pp. 14–19, Dec. 2024. https://doi.org/10.53829/ntr202412ri1

The Coming Era of Large-scale Data Calls for a Fast and Accurate Data Analytics Platform

Abstract

The All-Photonics Network, a component of the IOWN (Innovative Optical and Wireless Network) concept, envisions a future in which large amounts of data will be collected. By providing high-speed, low-latency access, the All-Photonics Network will let users acquire the knowledge and information they need even if they do not have the computational resources or data at hand. NTT Distinguished Researcher Yasuhiro Fujiwara is pursuing data analysis technology that is both fast and accurate, rather than relying on approximate solutions. We spoke with him about the unchanging core of his research and the areas in which he stays flexible, studying the external environment and acting on it.

Keywords: database, machine learning, pruning

Databases and machine learning: Research into a fast and accurate large-scale data analytics platform

—Please tell us how you came to start your current research.

I am currently conducting database-centered research on a high-speed data analytics platform for large-scale data. Looking back on my research, I joined a database research team about 20 years ago, where I worked on presenting data content to users in an easy-to-understand, summarized form: for example, presenting information drawn from a large amount of data based on user preferences, or searching for data that is close to the search criteria specified by the user.

In the database field in the 1990s, before I started my research, the focus was on static data. However, when I began my research in the 2000s, devices such as sensors had developed, and research into dynamic data such as data streams (e.g., data transmitted in chronological order from the Internet) was becoming increasingly popular. I researched data streams because that was the trend at the time.
But from around 2010, graph databases, which represent data as graphs and were well suited to the services and applications popular at the time, such as social networks and the Web, became a popular research topic in the field. With this in mind, I too began researching graph databases. When I looked at technical issues and research papers from the perspective of the applications that use databases, I found that machine learning could potentially be applied to many tasks, such as recommendation. I felt that the era of machine learning might have arrived, so I shifted my focus to research on large-scale analytics platforms designed with machine learning in mind.

I also feel that research in the database field has changed in recent years under the influence of machine learning. A database is middleware that sits between applications and the operating system (OS), playing a supporting role in storing and processing data. Twenty years ago, database research focused on very basic data analysis, such as similarity search to find similar data. However, since the concept of big data emerged about 10 to 15 years ago, there has been a surge of interest in so-called “analytical techniques based on machine learning,” which perform sophisticated analysis on large amounts of data and reveal information hidden in the data that users could never have imagined. Even so, I think the position of the database between the OS and applications has not changed. The database should remain middleware that analyzes large amounts of collected data and provides the results to users. At the same time, we are also conducting research into a large-scale data processing platform that connects databases and machine learning to create high added value.

—Why is analytics platform research necessary in the era of large-scale data?

Working with large-scale data is the real thrill of database research.
The idea behind database research is simple: we focus on “processing massive amounts of data quickly.” To put it bluntly, we have no interest in small data. This is nothing new. VLDB, which stands for Very Large Data Bases, is one of the oldest international database conferences and has been held since the 1970s. Database research has long been built on the vision of “processing large amounts of data quickly,” and I think my research is a modern adaptation of that vision.

In this era of large-scale data, where low-latency networks make it possible to collect massive amounts of data, I believe there will be greater interest than ever in the accurate, high-speed data analytics technologies needed to make use of the collected data. Machine learning will likely become even more important as a method for analyzing that data. A great many people in Japan and abroad are researching machine learning, but most of that research focuses on improving precision. To be honest, not many people make processing speed their primary research focus. This is because pursuing speed requires knowledge not only of machine learning but also of techniques from fields such as databases, which makes it difficult to get started. My research topic is an analytics platform that focuses on speed.

In this era of large-scale data, it is becoming increasingly important to process data that humans cannot perceive directly and convert it into information that they can. To achieve this, I believe research is needed not only to improve precision but also to build a platform on which machine learning can analyze and process large amounts of data accurately and quickly.

—Is this research related to the All-Photonics Network, a component of the IOWN concept?
The Innovative Optical and Wireless Network (IOWN) concept envisions a future in which large amounts of data will be collected through the All-Photonics Network (a network that achieves low power consumption, high speed, large capacity, and low-latency transmission by introducing photonics-based (optical) technology everywhere from terminal devices to the network and providing end-to-end optical wavelength paths). I believe this is highly compatible with the database-research vision of “processing massive amounts of data at high speed,” so I believe my research into data analytics platforms can contribute to realizing the IOWN concept.

More specifically, once low latency becomes the norm through the All-Photonics Network, there will no longer be a need to keep computing resources or data at hand. Various types of processing will be possible by accessing computing resources and data on a server at the other end of the low-latency network. In addition, once the All-Photonics Network is up and running as part of IOWN, it will be possible to collect large amounts of data. My research on a high-speed data analytics platform for large amounts of data aims to extract “unexpected knowledge and information contained in the massive amounts of data” stored on servers connected via the network. I am conducting research aimed at “high added value in networks” that combines the ultra-high-speed, low-latency IOWN All-Photonics Network with a data analytics platform that creates value from the data collected there.

—What are the features of a high-speed data analytics platform for large-scale data? Please tell us how it differs from other processing technologies and what its key features are.

The big difference between our research and other typical database research is that we aim for both speed and accuracy, rather than an approximate solution (a solution that prioritizes speed and tolerates error).
I mentioned that when I first started researching databases, research into data streams was trendy. At the time, the common understanding in the database field was that processing could not keep up with the flow of data, so an approximate solution was sufficient. As a young man in my twenties, I was uncomfortable with this view of what a database should be, and I wanted to do something about it (Fig. 1).

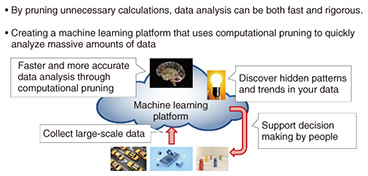

I temporarily left the research lab and worked at NTT WEST for about two years. At the time, the development of the Next Generation Network (NGN) was gaining momentum, and the challenge was what kind of services to develop once the NGN was complete. Creating new uses for networks, such as FLET’S Group Access, was one way to go, but I felt that the service I was in charge of at the time was also meaningful, because it supported existing services with new technology and contributed to existing businesses. Specifically, the service extended the life of the radio relay network provided by NTT, which had become difficult to maintain because the manufacturers’ equipment was deteriorating, by developing new equipment that provided the same interface from the customer’s perspective. We thought that providing existing services under the same operating conditions while replacing the backend could itself become one of the services a company offers. Even if the functions available to users remain the same, we have come to think that providing intangible value, such as faster and more reliable operation, can also be a major innovation.

If users were to replace their current system with an alternative, they would be happier if the processing results were accurate rather than approximate, if fewer computing resources were required while speed increased, and if initial (implementation) and running costs went down. With this in mind, I am focusing on research into fast and accurate methods that incorporate pruning technology, rather than the fast approximate methods that are mainstream in the database field but that I find problematic. I believe that this high-speed, accurate data analytics technology will underpin other functions within the IOWN vision, and that even slight improvements in processing speed and cost will become an advantage for IOWN itself (Fig. 2).

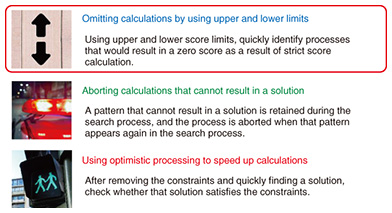

—Please tell us about pruning technology, the key to increasing speed.

In the database world, there is a concept called pruning, which is both a research concept and one of the central ideas behind database methodology. There are several pruning techniques, but the three most common are omitting calculations by using upper and lower bounds, aborting calculations that cannot lead to a solution, and using optimistic processing to speed up calculations (Fig. 3).
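
As a rough illustration of the first two techniques (omitting calculations with bounds and aborting calculations that cannot lead to a solution), here is a minimal Python sketch of an exact nearest-neighbour search under Euclidean distance. It is a generic textbook example, not the method developed in the research described here: the function names, the use of the reverse triangle inequality |‖q‖ − ‖x‖| ≤ ‖q − x‖ as the lower bound, and the visiting order are all assumptions made for illustration. Unlike an approximate method, it always returns a true nearest point, because pruning only skips work that provably cannot change the answer.

```python
import math
from typing import List, Sequence, Tuple


def norm(v: Sequence[float]) -> float:
    """Euclidean length of a vector."""
    return math.sqrt(sum(x * x for x in v))


def exact_nearest(query: Sequence[float],
                  data: List[Sequence[float]]) -> Tuple[int, float, int]:
    """Return (index, distance, completed) for an exact nearest neighbour.

    Illustrative sketch only. `completed` counts distance computations that
    ran to the end, i.e. were neither skipped by the bound nor abandoned.
    """
    q_norm = norm(query)
    # Lower bound per candidate: |‖q‖ - ‖x‖| <= ‖q - x‖ (reverse triangle
    # inequality). A real system would precompute the norms of `data` once.
    bounds = sorted((abs(q_norm - norm(x)), i) for i, x in enumerate(data))

    best_i, best_sq, completed = -1, math.inf, 0
    for lb, i in bounds:
        # Pruning with bounds: candidates are sorted by lower bound, so once
        # one cannot beat the best answer, none of the remaining ones can.
        if lb * lb >= best_sq:
            break
        acc = 0.0
        for a, b in zip(query, data[i]):
            acc += (a - b) ** 2
            # Aborting a hopeless calculation: the partial sum only grows,
            # so stop as soon as it reaches the best squared distance so far.
            if acc >= best_sq:
                break
        else:
            # The loop finished without aborting, so this point is closer.
            best_i, best_sq = i, acc
            completed += 1
    return best_i, math.sqrt(best_sq), completed


data = [[0.0, 0.0], [3.0, 4.0], [1.0, 1.0]]
idx, dist, completed = exact_nearest([0.9, 0.0], data)
print(idx, round(dist, 6), completed)  # prints: 0 0.9 2
```

On this toy input the lower bound for the point [3, 4] is |0.9 − 5| = 4.1, which already exceeds the best distance found, so that point is never compared at all; only two of the three distance computations run to completion. Visiting candidates in order of their lower bound is one simple design choice that tends to find a good answer early and so tightens the bound quickly.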

Put simply, pruning is essentially about figuring out how to “slack off.” It speeds things up by working out where calculations can be skipped and what is not needed. For example, when looking up the word “pencil” in a dictionary, few people would start at the beginning and turn each page one by one. A common approach, also used in databases, is to first find the pages for the letter “p” and then flip through pages that look like they might have “e” as the second letter. This coarse-to-fine search is repeated, gradually narrowing the search. It’s the same as searching for things in daily life. If you lose your keys, you don’t search the whole house, do you? You remember where you last saw them and look around there. The idea behind pruning is to eliminate places where the item obviously cannot be and to look where it might be, which eliminates wasted effort. I am conducting research into applying this idea to mathematical formulas that can be processed by computers.

The challenge of keeping up with ever-changing technology

—Please tell us about any challenges you have faced in carrying out this research and anything you particularly keep in mind.

I think what makes me different from other researchers is that, for better or worse, I don’t specialize in a particular field. Many researchers specialize in a particular field, but my research is based on the idea of pruning to speed up calculations, so it is not tied to any single field. Therefore, my constant challenge is to keep up with the latest data processing needs and emerging technological trends, and to look for areas in which I can contribute.
A researcher I previously supervised once told me, “What you do is like a stunt performance in which someone changes the parts on a car while it is driving on two wheels.” Like all researchers, I have to keep changing parts while in constant motion. In my case, I have one foot in the research field of database pruning and the other in new research fields such as machine learning. Deep learning (a technology in which computers autonomously analyze large amounts of data and extract features from it), which is currently a hot research topic, may be replaced by a different technology in 5 to 10 years. If that happens, I think I’ll have to catch up and study whatever technology is in demand at that time. I must always keep studying and doing research.

—Please tell us about the prospects for this research.

Recently, when I talk with people from various fields, the topic of deep learning keeps coming up. About 10 years ago, when deep learning first emerged, the first decision was whether or not to work on deep learning at all. At the time, quite a few researchers refused to do so, but times have changed and their numbers have decreased significantly. Many young researchers today are working on deep learning. However, deep learning is a process that simply keeps grinding through calculations, and there aren’t many places where you can slack off on them, so I wasn’t entirely enthusiastic about it.

Now that more than 10 years have passed since its emergence, however, deep learning has diversified and many related technologies have emerged. With large language models (LLMs), for example, we are now in an era where knowledge is generated using not only the LLM itself but also external data, and I think this is gradually creating opportunities to slack off on calculations. The more deep learning is linked with external data sources, the more potential I see for my pruning technology to be put to good use.
Furthermore, since “searching for something that matches certain conditions” is likely to remain an essential process whenever humans handle data using computers, I believe it will be important in all kinds of research that emerges in the future. I expect research to continue to focus on high-speed search.

—Finally, do you have a message for researchers, students, and business partners?

Generally speaking, I think the ideal in one’s work is to strike a balance between what you can do, what you should do, and what you want to do. When you’re a student, what you want to do carries a lot of weight, and many people don’t yet know what they can do. In my case, I went to a for-profit company to turn what I wanted to do into a job, but I realized that the work there wasn’t necessarily what I could do. In the same way, when you’re young, you don’t know what you can do, and what you can do often doesn’t match what you want to do, so I think you often feel let down by your own idea of what you wanted to do. That is why I think it’s a good idea, especially when you’re young, to try a variety of jobs first, without being picky, to find out what you can do.

As an information researcher, with computers and Internet technology used more and more widely in the world today, I feel blessed with the opportunity to see what I want to do and what I can do become what I should do, something the world needs. I think it’s important to find your own field as a researcher among the various research options available. NTT conducts a wide range of research in the information field and has more than 2000 researchers, so there are many people working in areas outside your own specialization. Many of NTT’s researchers are wonderful people, so it’s an environment where it’s easy to make friends and gather information. Furthermore, because NTT has many for-profit companies, there are also paths to becoming an engineer, data analyst, etc., in addition to a researcher. I would encourage those interested in working in this industry to take advantage of the wide range of options available, engage with a wide range of fields, and take a positive approach with a long-term perspective.
The researchers currently active at NTT did not necessarily have impressive track records as researchers in their student days. Many of them blossomed through perseverance and dedication to their research. I didn’t start working on research in earnest until my 30s, when I returned to a research lab from a for-profit company. If you persevere and keep at it, the right people will take notice. When I was young and my first paper was accepted, I felt on top of the world for about a month. I think students and young researchers will also feel a sense of satisfaction when they look at a paper that grew out of ideas they wrote down in their notes. Even before your paper is accepted, it gives you a special sense of accomplishment when the ideas in your head become reality.

Successful researchers all have their own distinct perspective. If the world looks at something in a certain way but you insist on a different perspective, you might be suited for this industry. We now live in an age where science and engineering researchers are creating a new world through technology. I hope that there will be more young researchers with their own distinct perspective.

■Interviewee profile

Yasuhiro Fujiwara graduated from the Department of Electrical, Electronic and Information Engineering, Faculty of Science and Engineering, Waseda University in 2001 and completed the master’s course in electrical engineering at the Waseda University Graduate School of Science and Engineering in 2003. In the same year, he joined Nippon Telegraph and Telephone Corporation. In 2011, he completed his doctoral studies in electronic and information engineering at the Graduate School of Information Science and Technology, the University of Tokyo, and obtained a Ph.D. (Information Science and Technology). He is engaged in research and development in areas such as data engineering, data mining, and artificial intelligence.
He has received numerous awards, including the 2017 Minister of Education, Culture, Sports, Science and Technology Young Scientists’ Award in the field of science and technology, the Telecommunications Advancement Foundation’s 27th Telecom System Technology Award, and the Information Processing Society of Japan’s 2015 Nagao Special Researcher Award.