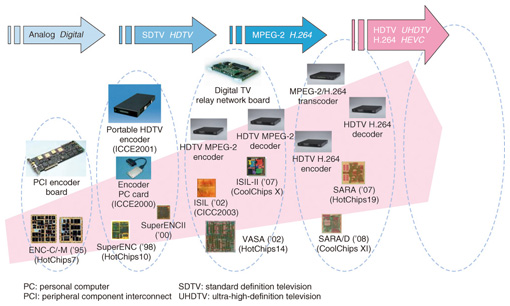

Feature Articles: Video Technology for 4K/8K Services with Ultrahigh Sense of Presence

Vol. 12, No. 5, pp. 13–18, May 2014. https://doi.org/10.53829/ntr201405fa3

HEVC Hardware Encoder Technology

Abstract

For 4K and 8K video services that are capable of providing high definition pictures with an abundant sense of presence, the latest H.265/MPEG-H video coding standard, also known as HEVC (High Efficiency Video Coding), is expected to encode huge quantities of video data effectively and with high compression efficiency. This article introduces HEVC hardware encoder technology that is capable of encoding 4K video in real time.

Keywords: HEVC, 4K/8K, hardware encoder

1. Introduction

In recent years, advances in device technologies have resulted in the rapid proliferation of devices such as cameras, displays, and tablets that are capable of capturing and displaying 4K video, which has higher resolution than high-definition television (HDTV). With the proliferation of these devices, expectations are growing with regard to next-generation video services for the distribution of HD video via broadcasting and network delivery. In particular, 4K TVs are becoming increasingly popular in ordinary households, with many models now available from major TV manufacturers. It is also becoming possible to experience this technology at 4K live viewings and event broadcasts, and at movie theaters and other venues equipped with 4K projectors. However, systems that use the H.264/MPEG-4 AVC (Advanced Video Coding) international video coding standard (also known as H.264), the current mainstream technology, tend to be overwhelmed by the large amount of data (bit rate) needed for 4K video. There is thus a strong need for systems that support the latest international video coding standard H.265/MPEG-H, also called HEVC (High Efficiency Video Coding), which offers about twice the coding efficiency of H.264.
The development of HEVC systems with high compression efficiency is also an important requirement for making 4K content viewable in every home and for delivering video using a variety of transmission media.

2. The need for real-time encoders

To broadcast and deliver HD content that is best appreciated live, for example, high-profile sporting events and live concerts, rather than pre-recorded content such as movies and dramas, it is essential to have a mechanism for encoding and transmitting video in real time. For transmission applications where video contributions are needed during program production, we require high-performance equipment that not only operates in real time but is also compact and has low latency, low power consumption, and faithful color reproduction. We therefore need a hardware encoder that can stably encode and deliver video while maintaining real-time performance.

3. Recent advances in video encoding hardware technology

At NTT laboratories, we have been researching and developing technologies to realize real-time broadcasting and communication services for high-quality HD video, including the world’s first single-chip HDTV MPEG-2 encoder large-scale integrated circuit (LSI) [1] and HDTV-compatible H.264 encoder LSI [2]. NTT’s VASA single-chip HDTV MPEG-2 encoder LSI has contributed greatly to the spread and growth of digital TV relay network services transmitting video material, and our SARA H.264 encoder LSI has made a similar contribution to digital TV broadcasting and delivery services, including the HIKARI TV digital broadcasting and on-demand service. The accumulation of these technologies has accelerated the development of HEVC hardware encoder configuration technology for compatibility with next-generation 4K and 8K*1 video (Fig. 1).

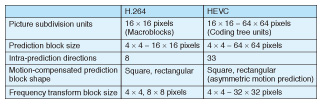

4. HEVC: The latest encoding standard

HEVC is the most recent international standard for video coding, the first issue of which (version 1) was published in January 2013 [3]. A second issue (version 2) is currently being drafted. This will include compatibility with high color reproducibility (4:2:2 or 4:4:4 encoding) for high-end professional applications, and hierarchical coding whereby the video quality is changed in a stepwise fashion suited to the available bit rate. HEVC is expected to achieve comparable picture quality with half the data needed for H.264 (widely used in One-Seg and smartphones) and just a quarter of the data needed for MPEG-2 coding (used for terrestrial digital TV and DVDs (digital versatile discs)). To achieve a practical bandwidth for the broadcasting and delivery of 4K and 8K video, where the amount of video data increases dramatically, it is essential to adopt HEVC with its high compression efficiency.

Although the basic framework of HEVC coding is similar to that of the prior standards, it uses a much wider range of parameter combinations, such as block sizes and block shapes, when subdividing and encoding an image, so as to efficiently eliminate redundancies contained within the image. A comparison of HEVC and H.264 is presented in Table 1. By adapting to image features, HEVC can encode large regions of uniform color (e.g., blue skies) all at once so as to eliminate unnecessary image subdivision overheads, while in regions with complex movements such as crowds of people, it uses fine-grained predictive coding to separate the image into regions corresponding to each movement. The use of such methods results in high overall coding efficiency.
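The adaptive subdivision described above can be illustrated with a toy quadtree split. The sketch below is not the encoder's actual decision rule: it assumes a simple pixel-variance threshold as a stand-in for the cost-based decisions a real encoder makes, and the names `partition`, `min_size`, and `threshold`, along with the 16x16 test frame, are hypothetical choices made for illustration only.

```python
from statistics import pvariance


def partition(frame, x, y, size, min_size, threshold):
    """Return the leaf blocks (x, y, size) covering the square at (x, y).

    Assumption: a block is kept whole when its pixel variance is at or
    below `threshold`; a real encoder would compare coding costs instead.
    """
    pixels = [frame[r][c] for r in range(y, y + size) for c in range(x, x + size)]
    # Flat regions, or blocks already at the minimum size, are not split.
    if size <= min_size or pvariance(pixels) <= threshold:
        return [(x, y, size)]
    half = size // 2
    leaves = []
    # Otherwise recurse into the four equally sized sub-blocks.
    for dy in (0, half):
        for dx in (0, half):
            leaves += partition(frame, x + dx, y + dy, half, min_size, threshold)
    return leaves


# Toy 16x16 frame: flat everywhere except a checkerboard in the top-left 8x8.
frame = [[0] * 16 for _ in range(16)]
for r in range(8):
    for c in range(8):
        frame[r][c] = 255 if (r + c) % 2 else 0

leaves = partition(frame, 0, 0, 16, min_size=4, threshold=1.0)
for leaf in leaves:
    print(leaf)
```

In this toy frame the three flat quadrants each remain a single 8x8 block, while only the detailed quadrant is subdivided down to the minimum size, mirroring the contrast between uniform sky and crowded regions.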

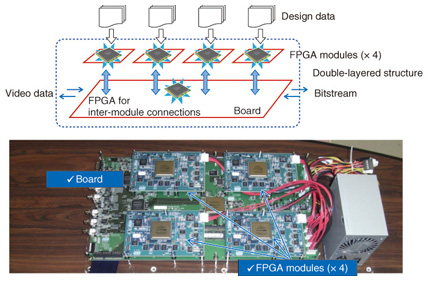

5. HEVC encoder design issues

Although HEVC achieves highly efficient coding by using a wide combination of block sizes and block shapes, evaluating these combinations places a significantly higher processing load on the video encoding processor. To improve the encoder performance, it is essential to find a solution that achieves high coding efficiency with fewer combinations, without loss of coding quality. In particular, to achieve high real-time performance in the design of a hardware encoder, a mechanism for efficiently selecting combinations with high coding efficiency must be implemented while balancing the trade-off between picture quality and the scale of the processing circuitry.

6. HEVC hardware design method

The development of a hardware encoder usually begins with a software simulation to evaluate the coding performance prior to the design stage. In practice, however, this approach makes it difficult to estimate the circuit scale of an actual hardware implementation, and it brings problems such as prolonged simulation runs and the difficulty of evaluating the quality of large numbers of video images. We are therefore adopting the following two approaches, which are based on the idea of searching for an optimal architecture by making flexible changes to the hardware configuration in order to improve the coding efficiency while evaluating the picture quality in actual hardware as well as in the simulation.

(1) SystemC development approach

SystemC is a hardware design language based on C++, a language widely used for software development, from which hardware circuits can be generated automatically. Compared with conventional RTL (register transfer level) design, typical hardware design tasks such as configuring the arithmetic units and handling clocks are automated so that the designer can concentrate on developing the encoder processing algorithm. When changes are made to the encoding algorithm, a new hardware circuit can easily be generated automatically.

(2) Video codec development platform

We have developed a video codec development platform that can run hardware circuit simulations written in SystemC (Fig. 2). This platform can accommodate up to four modules based on rewritable hardware circuits in field programmable gate arrays (FPGAs), allowing hardware circuits generated by SystemC to be written and operated in real time. In addition to setting up input and output terminals for the video and encoded data on the main board, we also added the ability to make flexible changes to the connections between modules by mounting a separate FPGA that connects the four base modules.

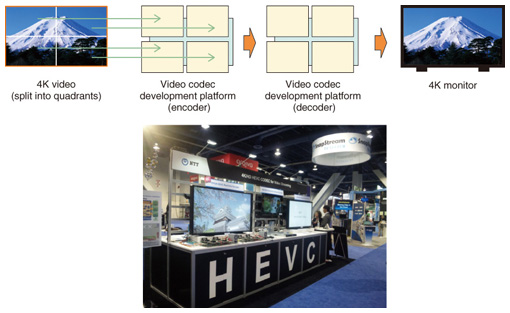

These two approaches are used to successively implement and improve the hardware while striking a balance between circuit scale and picture quality, and as a result, it has become possible to increase the performance of the HEVC hardware encoder while reducing the time needed for design [4]. An example of a real-time 4K HEVC intra codec that was implemented in the video codec development platform is shown in Fig. 3.

This codec performs 4K encoding by splitting the 4K video into four quadrants that are separately input to module circuits configured for real-time HEVC intra-coding*2 and decoding of video sized at HDTV dimensions in each FPGA. In the future, we aim to continue using the same development method to further improve the hardware architectures for HEVC encoder LSIs.
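The quadrant split described above can be sketched in a few lines. This is only an illustration of the data partitioning, not the platform's implementation: the function names `split_into_quadrants` and `merge_quadrants` are hypothetical, frames are modeled as plain lists of pixel rows, and a tiny 8x4 frame stands in for a real picture. For a 3840x2160 frame, the same split yields four 1920x1080 quadrants, that is, four HDTV-sized pictures.

```python
def split_into_quadrants(frame):
    """Split a frame (list of pixel rows) into four equal quadrants."""
    height, width = len(frame), len(frame[0])
    if height % 2 or width % 2:
        raise ValueError("frame dimensions must be even")
    half_h, half_w = height // 2, width // 2
    origins = {
        "top_left": (0, 0),
        "top_right": (0, half_w),
        "bottom_left": (half_h, 0),
        "bottom_right": (half_h, half_w),
    }
    # Each quadrant would be handed to one module for independent coding.
    return {
        name: [row[left:left + half_w] for row in frame[top:top + half_h]]
        for name, (top, left) in origins.items()
    }


def merge_quadrants(quadrants):
    """Reassemble the full frame from the four quadrants (decoder side)."""
    top = [l + r for l, r in zip(quadrants["top_left"], quadrants["top_right"])]
    bottom = [l + r for l, r in zip(quadrants["bottom_left"], quadrants["bottom_right"])]
    return top + bottom


# Small stand-in frame: 8 pixels wide, 4 rows high, each pixel a unique value.
small_frame = [[y * 8 + x for x in range(8)] for y in range(4)]
quadrants = split_into_quadrants(small_frame)
print(quadrants["top_right"])
print(merge_quadrants(quadrants) == small_frame)
```

Because each quadrant is coded independently in this arrangement, the four modules can run in parallel, which is what makes HDTV-sized circuits sufficient for a 4K picture.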

7. Future prospects

High-definition video is likely to be used in a widening range of environments as further improvements are made to display technology and camera performance. We expect there will be an ever-growing need for real-time encoding technology with good picture quality and superior compression performance, and therefore intend to contribute to the expansion of high-quality broadcasting and video-on-demand services by continuing with our research and development of encoder technology as a key component of 4K and 8K video services.

References
