
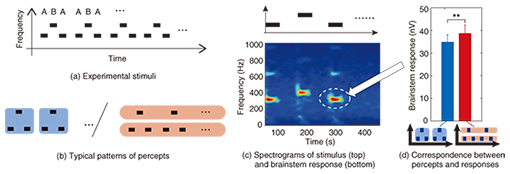

Feature Articles: Communication Science as a Compass for the Future

Vol. 13, No. 11, pp. 13–18, Nov. 2015. https://doi.org/10.53829/ntr201511fa3

Biological Measures that Reflect Auditory Perception

Abstract

Brain processes involved in auditory perception are reflected in various physical and physiological responses. Our recent studies indicate that, in addition to brainwaves, responses that might seem to have nothing to do with audition (for example, pupillary responses, sounds emitted from the ear, and rhythmic finger-tapping movements) actually provide information about listeners' subjective auditory experiences. These findings may lead to various applications, such as designing auditory displays with pleasing sounds adapted to individual listeners and developing novel techniques for diagnosing or compensating for impaired hearing.

Keywords: audition, biological measures, cognitive neuroscience

1. Introduction

Knowing how listeners perceive sounds, or how sound information is processed in the brain, is important not only for understanding the basic mechanisms of the auditory system but also for establishing guidelines for designing effective auditory displays and for evaluating hearing-aid devices. Psychological tests are the standard approach to examining perception, but they have limitations. There is no guarantee that listeners can report their sensations accurately when a sensation has no associated correct answer or cannot be expressed in words or by pressing a button. The sensation may also vary from one presentation to the next, even when the same stimulus is presented repeatedly to the same person. Furthermore, the results of a psychological test do not directly indicate which biological mechanisms underlie the sensation. Biological measures are therefore attracting increasing attention as an alternative approach to probing auditory perception. Recent advances in measurement techniques and accumulated knowledge in cognitive neuroscience have given us new tools for examining how we hear. Biological measures allow us to gain information about a listener's perception and its underlying mechanisms without requiring the listener to report sensations explicitly.

2. Probing auditory perceptual content through neural activity

Recent attempts to probe perceptual content have mainly focused on the activity of cortical neurons. However, we should not forget that what can be observed as cortical neuron activity represents only a small portion of the functions of the entire sensory system. We emphasize that the brainstem, often viewed as a low-level processing stage, plays an important role in perception. We are studying the brainstem by focusing on the frequency-following response (FFR). The FFR is a class of auditory evoked potential that can be recorded through electrodes placed on the scalp. The FFR waveform is isomorphic to the stimulus waveform (Fig. 1(c)) and is believed to reflect the characteristics of brainstem mechanisms that process the temporal structure of stimuli.

When tone bursts A and B, which differ in frequency, are presented alternately (ABA-ABA-ABA-…; Fig. 1(a)), a typical listener reports two patterns of percepts (Fig. 1(b)). One is a single stream in which the short phrase ABA- repeats. The other consists of two parallel streams, each containing tones of a single frequency, that is, A-A-A-A- and -B---B---. Perception of these two patterns is not stable; the percept switches spontaneously and randomly between them during a prolonged listening session. Since the physical parameters of the stimuli are unchanged, such perceptual switching should reflect what is happening in the individual listener's brain. Our research group discovered that the instantaneously perceived pattern and the strength of the FFR correlate with each other (Fig. 1(d)) [1].
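
The ABA- paradigm described above can be made concrete with a short stimulus sketch. The tone frequencies, burst duration, and sample rate below are hypothetical placeholders (the article does not give them), and the bursts have no onset/offset ramps, which a real stimulus would need to avoid audible clicks. The `split_streams` helper only redraws the same sequence the way the two-stream percept organizes it (an A-only and a B-only stream on a shared time grid); the physical signal is identical for both percepts.

```python
import math

# Hypothetical parameters (not taken from the article).
FS = 16000       # sample rate, Hz
SLOT_MS = 50     # duration of each tone burst and of the silent slot, ms
F_A = 500.0      # frequency of tone A, Hz
F_B = 707.1      # frequency of tone B, Hz (about 6 semitones above A)


def aba_pattern(n_triplets):
    """Slot labels of an ABA-ABA-... sequence; '-' marks a silent slot."""
    return ["A", "B", "A", "-"] * n_triplets


def split_streams(pattern):
    """Redraw the sequence as the two-stream percept: A-only and B-only streams."""
    a_stream = "".join(s if s == "A" else "-" for s in pattern)
    b_stream = "".join(s if s == "B" else "-" for s in pattern)
    return a_stream, b_stream


def synthesize(pattern, fs=FS, slot_ms=SLOT_MS):
    """Render the pattern as pure-tone samples (no ramps), with zeros for '-'."""
    n = int(fs * slot_ms / 1000)
    freqs = {"A": F_A, "B": F_B}
    samples = []
    for slot in pattern:
        if slot == "-":
            samples.extend([0.0] * n)
        else:
            f = freqs[slot]
            samples.extend(math.sin(2 * math.pi * f * i / fs) for i in range(n))
    return samples


pattern = aba_pattern(3)
print("".join(pattern))          # ABA-ABA-ABA-
print(split_streams(pattern))    # ('A-A-A-A-A-A-', '-B---B---B--')
print(len(synthesize(pattern)))  # 9600 (12 slots x 800 samples)
```

Because the percept can switch while this signal stays fixed, any biological measure that tracks the switches must be reflecting the listener's internal state rather than the stimulus.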

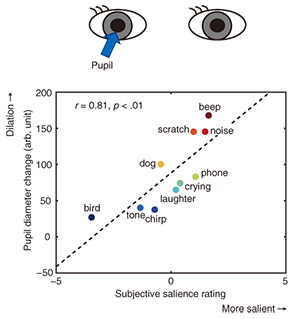
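
One way to make "strength of the FFR" concrete is sketched below. The article does not say how FFR strength was quantified; this sketch assumes a common choice, the spectral amplitude of the averaged response at the stimulus frequency, computed from a single discrete Fourier transform bin. Epochs are grouped by the percept the listener reported at the time (the labels `"one_stream"` and `"two_streams"` are hypothetical), averaged within each group to suppress activity that is not phase-locked to the stimulus, and then measured. The sketch makes no claim about which percept yields the stronger response.

```python
import math


def ffr_strength(epoch, fs, f0):
    """Amplitude of the f0 component of a waveform, from a single DFT bin.

    Exact for a pure sinusoid when the epoch spans an integer number of cycles.
    """
    n = len(epoch)
    re = sum(x * math.cos(2 * math.pi * f0 * i / fs) for i, x in enumerate(epoch))
    im = sum(x * math.sin(2 * math.pi * f0 * i / fs) for i, x in enumerate(epoch))
    return 2.0 * math.hypot(re, im) / n


def ffr_by_percept(epochs, labels, fs, f0):
    """Average epochs within each reported-percept label, then measure f0 amplitude."""
    groups = {}
    for epoch, label in zip(epochs, labels):
        groups.setdefault(label, []).append(epoch)
    result = {}
    for label, group in groups.items():
        averaged = [sum(column) / len(group) for column in zip(*group)]
        result[label] = ffr_strength(averaged, fs, f0)
    return result


# Synthetic check: 0.1-s epochs at 8 kHz contain exactly 50 cycles of 500 Hz.
fs, f0 = 8000, 500.0
epochs = [[a * math.sin(2 * math.pi * f0 * i / fs) for i in range(800)]
          for a in (1.0, 1.0, 0.5, 0.5)]
labels = ["one_stream", "one_stream", "two_streams", "two_streams"]
print(ffr_by_percept(epochs, labels, fs, f0))  # amplitudes close to 1.0 and 0.5
```

Averaging before measuring, rather than measuring each epoch and averaging the amplitudes, keeps random noise from inflating the estimate; the trade-off is that each percept needs enough epochs to form a stable average.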

Although the stimuli used in the experiment were simple and artificial, the discovery has important implications for everyday listening. In natural environments, the sounds around us are not always clear. Nevertheless, the brain silently and continuously searches for plausible interpretations of ambiguous auditory information. We believe that the spontaneous switching of the perceived ABA sequence reflects brain processes involved in this search. Our discovery indicates that supposedly low-level brainstem mechanisms play a significant role in interpreting acoustic information, which is usually regarded as a high-level process. This highlights the important contribution of cross-level neural networks to auditory perception. The finding also has a notable technological implication: it suggests that continuously changing auditory perceptions within individual listeners could be captured from brainwaves.

3. Reading auditory perceptual content through the eyes

Electrical signals from neurons are not the only indicators of brain activity. Our research group is also examining the eyes to study audition. The locus coeruleus, a brainstem nucleus, is known to contribute to controlling alertness and selective attention, and its neural activity appears to be reflected in pupil diameter [2]. We tested whether this mechanism could be used to evaluate the salience (tendency to attract attention) of sounds. We presented various sound samples, including environmental sounds and abstract tones, while recording the listener's pupil diameter. The results showed not only that the pupil dilates after stimulus onset but also that the strength of the dilation response correlates well with the subjective salience ratings of the sounds (Fig. 2) [3]. Relationships between pupil diameter and cognitive load or attention have been reported previously. However, to our knowledge, we were the first to find a relationship between pupil diameter and a basic subjective property of sound. Critical questions, such as which acoustic properties of sound and which biological mechanisms evoke the pupil dilation response, remain to be studied. Nevertheless, it may become possible in the future to measure the degree to which listeners are attracted to given sounds by observing their eyes.
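
The salience analysis can be sketched in two steps: reduce each pupil trace to a single dilation value, then correlate those values with the salience ratings across sounds. The specific choices here (a 0.5-s pre-onset baseline, peak dilation within a 3-s post-onset window, and Pearson's r) are assumptions for illustration; the article does not describe the exact analysis used in [3].

```python
import statistics


def dilation_response(trace, fs, onset_s, baseline_s=0.5, window_s=3.0):
    """Peak pupil dilation after onset, relative to the mean pre-onset diameter.

    trace: pupil-diameter samples; fs: sampling rate in Hz; onset_s: stimulus
    onset time in seconds (at least baseline_s after the start of the trace).
    """
    onset = int(round(onset_s * fs))
    baseline = statistics.fmean(trace[onset - int(round(baseline_s * fs)):onset])
    post = trace[onset:onset + int(round(window_s * fs))]
    return max(post) - baseline


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


# Usage with one trace per sound (hypothetical variable names):
#   responses = [dilation_response(t, fs=60, onset_s=1.0) for t in traces]
#   r = pearson_r(responses, salience_ratings)
```

Baseline correction matters because resting pupil size drifts with arousal and lighting; subtracting the pre-onset mean isolates the change evoked by each sound.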

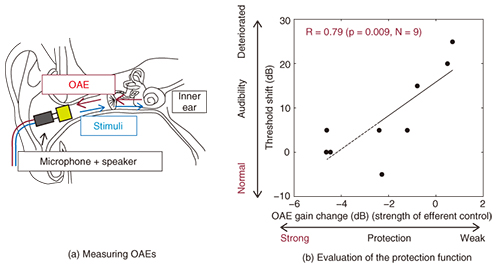

4. The ear's protection mechanism

The brainstem not only relays auditory signals from the ears to higher-level processing stages but also has descending pathways (referred to as the efferent system) that control the ears. However, there is as yet no standard view of what practical role the efferent system plays in real-world environments. The inner ear does not simply transmit or convert acoustic signals; it actively and mechanically amplifies them. This nonlinear amplifier can send vibrations back to the eardrum, thereby emitting sounds (known as otoacoustic emissions, or OAEs; Fig. 3(a)). OAEs therefore provide information about the amplification gain of the inner ear. The amplification gain is known to often decrease when an intense sound is presented, an effect likely mediated by the efferent system described above. This mechanism may protect the delicate inner ear from damage caused by intense sounds.

To test this hypothesis, we conducted experiments with violinists (violin students at a music school). In their daily practice, violinists are exposed to intense sounds emitted from instruments held close to their ears. Indeed, we observed significant, although temporary, elevations in their hearing thresholds (known as temporary threshold shifts, TTS) after each practice session. The magnitude of TTS varied among individuals. We found a marked correlation between TTS magnitude and the degree of each individual's amplification-gain adjustment evaluated with OAE measurements (Fig. 3(b)) [4]. The results demonstrate that the efferent system serves as a protection mechanism. Noise-induced hearing loss is becoming a social issue in modern society, in which people of all generations, from teenagers to the elderly, listen to music for many hours a day through the earphones of portable audio devices. In the future, evaluating the function of the efferent system, as was done in this study, may make it possible to estimate an individual listener's risk of noise-induced hearing loss, which would help to prevent hearing impairment.

5. Objective audiometry

Auditory detection thresholds measured in clinical audiometry are simple but very important measures in audiology, since they serve as the principal data for diagnosing hearing impairment. However, traditional methods for measuring detection thresholds have a critical problem: they are all subjective, meaning that listeners report their percepts themselves. If a listener cannot report the percepts or deliberately reports them incorrectly, the results are unreliable. Consequently, objective methods are sometimes used as an alternative. However, existing objective methods evaluate the physiological function of particular structures such as the inner ear and brainstem; they do not directly indicate the listener's perception. Building on our accumulated knowledge and experience in sensory and motor studies, our research group is attempting to develop a conceptually novel objective method.

Our idea incorporates a so-called sensorimotor synchronization task, in which the participant taps a finger rhythmically in synchrony with equally spaced (isochronous) visual flashes. Performance in this task is known to be strongly influenced by the timing of simultaneously presented auditory stimuli (e.g., tone bursts). When the auditory stimuli are slightly delayed relative to the visual stimuli, the tap timing tends to shift toward the auditory timing, even though the participant does not notice this (Figs. 4(a) and 4(b)). Our study quantitatively showed that this disturbance by auditory stimuli occurs even with very weak sounds close to the detection threshold [5]. This phenomenon is the principle of our objective method: by examining whether the auditory disturbance is present, we can determine whether a sound is audible to the participant without requiring subjective reports. Note that although this method involves a psychophysical task, it is nearly feigning-proof. In this task, it is difficult for a participant to pretend that an audible sound is inaudible, because task performance is poorer for audible sounds than for inaudible ones; in typical psychophysical tasks (including those used in traditional audiometry), the opposite is true. In addition to being feigning-proof, the new method is expected to serve as a diagnostic tool for revealing hearing problems that traditional methods have not sufficiently characterized.
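
The decision logic of the tapping-based method can be sketched as follows. Asynchrony here is tap time minus the nearest flash time (ms); because the tones lag the flashes, a disturbance appears as asynchronies shifted later in tone trials than in tone-free trials. The Welch t statistic, the criterion of 2.0, and the rule of taking the lowest passing level as the threshold are all hypothetical choices made for illustration, not the procedure reported in [5].

```python
import math
import statistics


def asynchronies(taps, flashes):
    """Tap time minus the nearest visual-flash time; positive means the tap lags."""
    return [t - min(flashes, key=lambda f: abs(t - f)) for t in taps]


def welch_t(a, b):
    """Welch's t statistic for the difference in means of samples a and b."""
    va, vb = statistics.variance(a), statistics.variance(b)
    return (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(va / len(a) + vb / len(b))


def disturbed(async_with_tone, async_without_tone, criterion=2.0):
    """Hypothetical rule: taps shifted reliably later, toward the delayed tone."""
    return welch_t(async_with_tone, async_without_tone) > criterion


def estimate_threshold(results_by_level, criterion=2.0):
    """Lowest sound level whose tone trials show a reliable disturbance.

    results_by_level maps level (dB) -> (asynchronies with tone, without tone).
    Returns None if no level passes the criterion.
    """
    audible = [level for level, (with_tone, without_tone) in sorted(results_by_level.items())
               if disturbed(with_tone, without_tone, criterion)]
    return audible[0] if audible else None
```

Note that the participant cannot lower the estimated threshold by "not hearing": suppressing the shift would require tapping against a timing cue they are not aware of, which is the feigning-resistance argument in the paragraph above.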

6. Future perspectives

The findings described in this article are exciting, as they open doors to new technologies for measuring hearing. They also remind us that auditory perception involves not only the sensory system but also complex interactions among multiple biological systems, such as the motor, autonomic, and endocrine systems. New cognitive-neuroscientific paradigms are needed to understand and make use of such complex mechanisms. Rapidly developing sensing technologies and/or machine-learning techniques may provide solutions. We also should not forget the importance of basic research that disentangles complex systems step by step. In this article, we presented new ideas incorporating concepts that would initially appear unrelated to audition. These ideas could not have been conceived without drawing on the painstaking research efforts of many scientists in various fields.

Acknowledgment

The work described in sections 2 and 5 includes results of the SCOPE project (121803022) commissioned by the Ministry of Internal Affairs and Communications. The study described in section 4 was performed in collaboration with Kyoto City University of Arts.

References
