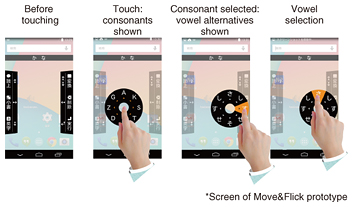

Regular Articles

Vol. 14, No. 7, pp. 49–55, July 2016. https://doi.org/10.53829/ntr201607ra2

Move&Flick: Text Input Application for Visually Impaired People—From Research and Development to Distribution of the Application

Abstract

Move&Flick is a new text input application for smartphones developed by NTT DOCOMO. The application is designed for visually impaired people and has been available on Apple’s App Store since August 2015. NTT Service Evolution Laboratories researched and developed the algorithms that recognize the finger motions used to operate the application, as well as the application’s basic design, together with NTT CLARUTY, some of whose employees are visually impaired. NTT DOCOMO developed the application based on Apple’s App Store Review Guidelines and has been working with NTT CLARUTY to support visually impaired users so that they can benefit fully from the application. This article describes the process from research and development to the distribution of the application on Apple’s App Store.

Keywords: visually impaired, text input, touch screen

1. Introduction

Many visually impaired people are interested in smartphones with touch screens because more smartphone applications designed for such users are becoming available, and some users are highly motivated to use devices that have become widely popular. However, smartphone applications that demand a lot of text input via touch screens pose a challenge for the visually impaired. Because touch screens have no surface indentations, it is difficult to input text using the standard touch operation with voice feedback, which resembles the key operation used with the numeric keypads of feature phones. Voice input is standard in smartphones and can be very helpful for people with visual impairments.
However, voice input is difficult to use in public spaces such as trains or department stores, as visually impaired users are reluctant to speak personal details that strangers nearby can hear. While fluency in the standard operation of smartphones can be acquired with long-term training, most visually impaired people continue to use feature phones due to their ease of use, or they use a feature phone for text input and a smartphone for other applications. There has long been a demand for a new text input interface/application for smartphones that is easy for visually impaired users to learn and use.

2. Easy-to-acquire gestures on touch screens for visually impaired people

NTT Service Evolution Laboratories has been researching and developing a new text input method for smartphone screens for visually impaired users. To ensure practicality, we focused on single finger gestures on the screen and attempted to minimize the importance of touch positions on the screen. Of course, multi-touch operations do exist and are already used in various applications. However, there is a lack of commonality, meaning that the same gestures can yield different commands depending on the application, and this tends to confuse the visually impaired. This problem is specific to multi-touch gestures. We asked the visually impaired staff of NTT CLARUTY to try various single finger gestures for screen input and identified which gestures were easy for the visually impaired to acquire. It is clearly difficult for visually impaired users to learn complicated gestures such as ideograms: without visual feedback they cannot tell what errors were made or how to correct them, and voice feedback alone does not convey this well. Even with an encircling gesture, which most people assume to be fairly simple, the finger of the visually impaired person tended to hit the side of the screen.
Our investigation showed that they were able to acquire a gesture consisting of two continuous finger strokes with no intermediate finger lift; each stroke was made in one of eight directions, as shown in Fig. 1. The first stroke selects a consonant (or a command group), and the second stroke selects a vowel (or a command of the selected group). A Japanese character is input as a combination of a consonant and a vowel. The name Move&Flick reflects these two basic strokes.
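The two-stroke scheme described above can be sketched as a pair of lookup tables. This is only an illustration: the actual assignment of consonants and vowels to directions is given in Fig. 1 and is not reproduced here, so both tables below, the compass-point direction names, and the function name `decode_gesture` are hypothetical. The output is a naive romanization formed by concatenation, not the kana that the application produces.

```python
# Eight stroke directions, named by compass point (naming is an assumption).
DIRECTIONS = ("N", "NE", "E", "SE", "S", "SW", "W", "NW")

# HYPOTHETICAL assignment of the first stroke to a consonant row.
# The empty string stands for the vowel-only row (a, i, u, e, o).
CONSONANT_BY_DIRECTION = {
    "N": "", "NE": "k", "E": "s", "SE": "t",
    "S": "n", "SW": "h", "W": "m", "NW": "r",
}

# HYPOTHETICAL assignment of the second stroke to a vowel.
# Only five of the eight directions are used in this sketch.
VOWEL_BY_DIRECTION = {"N": "a", "E": "i", "S": "u", "W": "e", "NE": "o"}


def decode_gesture(first_stroke, second_stroke):
    """Combine two stroke directions into a romanized syllable.

    Returns None when either stroke has no entry in the tables.
    """
    if first_stroke not in CONSONANT_BY_DIRECTION:
        return None
    if second_stroke not in VOWEL_BY_DIRECTION:
        return None
    return CONSONANT_BY_DIRECTION[first_stroke] + VOWEL_BY_DIRECTION[second_stroke]


if __name__ == "__main__":
    print(decode_gesture("NE", "N"))  # consonant k + vowel a
```

Because eight directions cannot cover every Japanese consonant row, a real mapping needs an additional mode switch; the sketch leaves that out.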

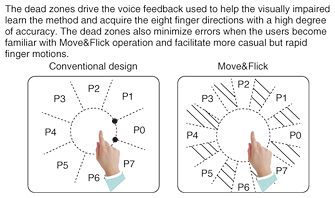

3. Techniques to facilitate learning the basic gestures of Move&Flick

Move&Flick is designed to help visually impaired users acquire the gestures with high accuracy and to increase the recognition accuracy of the basic gestures. Move&Flick has an algorithm to detect finger movement direction using radial areas with dead zones, as shown in Fig. 2. The finger movement direction is selected when a finger moves from the circle area into any of the eight radial areas. The selected area is provided as feedback to the user by voice. No movement direction is output while the finger is in the circle area or dead zones. This design helps the visually impaired to understand the ideal finger movement directions and to easily acquire the basic gestures. In addition, the dead zones suppress detection errors even if the operation is rough and quick, as may be expected with more experienced users.
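A minimal sketch of direction detection with radial areas and dead zones follows. The text does not give the circle radius or the angular width of each radial area, so `circle_radius` and `half_width_deg` are assumed values, and `detect_direction` is a hypothetical name. The sketch also assumes a coordinate system with the y axis pointing up; on a real touch screen the y axis usually points down, so the sign of `dy` would need to be flipped.

```python
import math

# Direction names indexed counter-clockwise from east in 45-degree steps.
DIRECTIONS = ("E", "NE", "N", "NW", "W", "SW", "S", "SE")


def detect_direction(dx, dy, circle_radius=40.0, half_width_deg=15.0):
    """Classify a finger displacement (dx, dy) from the touch-down point.

    Returns a direction name, or None while the finger is still inside
    the central circle or lies in a dead zone between two radial areas.
    All numeric defaults are assumptions, not values from the article.
    """
    # Inside the central circle: no direction is output yet.
    if math.hypot(dx, dy) <= circle_radius:
        return None

    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    sector = round(angle / 45.0) % 8

    # Angular distance from the centre line of the nearest radial area,
    # taking the wrap-around at 360 degrees into account.
    offset = abs(angle - sector * 45.0)
    offset = min(offset, 360.0 - offset)

    # Outside the radial area: the finger is in a dead zone.
    if offset > half_width_deg:
        return None
    return DIRECTIONS[sector]
```

With these assumed defaults, each radial area spans 30 degrees and each dead zone spans 15 degrees, so a stroke that drifts between two directions yields no output rather than a wrong one.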

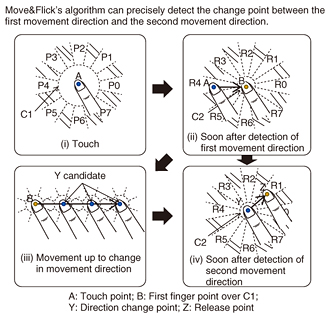

Move&Flick also has an algorithm to accurately detect the change point, which is where the first stroke changes to the second stroke. This is necessary because the distance of finger movement differs with each input and each person, and the accuracy with which the change point is detected affects the accuracy with which the second stroke is detected. The details of this algorithm are shown in Fig. 3. The change point is Point Y, and the point at which the first stroke direction is detected is Point B, which does not coincide with Point Y. Every time the finger moves a prescribed distance, which is the radius of C2 shown in Figs. 3(ii) and 3(iv), the algorithm checks whether the direction of the finger motion is the same as the detected first stroke direction, and it sets Point Y when the two directions differ. As a result, users do not need to take care to move the finger an exact distance.
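The change point check can be sketched as follows. This is an interpretation of the description above, not the published algorithm: it assumes that Point Y is the last checkpoint at which the motion still agreed with the first stroke, it classifies each step by the nearest of eight directions without dead zones for brevity, and the names `sector_of`, `find_change_point`, and `step_radius` (standing in for the radius of C2) are hypothetical.

```python
import math


def sector_of(dx, dy):
    """Nearest of eight 45-degree directions (0 = east, counter-clockwise)."""
    return round(math.degrees(math.atan2(dy, dx)) / 45.0) % 8


def find_change_point(points, first_direction, start_index, step_radius=30.0):
    """Search for the change point (Point Y) after the first stroke is known.

    points          -- list of (x, y) touch samples, y axis pointing up
    first_direction -- sector index of the detected first stroke
    start_index     -- index of Point B, where that direction was detected
    step_radius     -- assumed value for the prescribed check distance

    Returns the assumed Point Y, or None if the direction never changes.
    """
    checkpoint = points[start_index]
    for x, y in points[start_index + 1:]:
        dx, dy = x - checkpoint[0], y - checkpoint[1]
        # Wait until the finger has moved the prescribed distance.
        if math.hypot(dx, dy) < step_radius:
            continue
        # Direction differs from the first stroke: the stroke changed
        # somewhere after the last checkpoint, taken here as Point Y.
        if sector_of(dx, dy) != first_direction:
            return checkpoint
        # Same direction: advance the checkpoint and keep following.
        checkpoint = (x, y)
    return None
```

For example, a trace that moves east and then turns north reports the corner as the change point regardless of how far the first stroke travelled:

```python
trace = [(0, 0), (50, 0), (80, 0), (110, 0), (110, 30), (110, 60)]
print(find_change_point(trace, first_direction=0, start_index=1))
```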

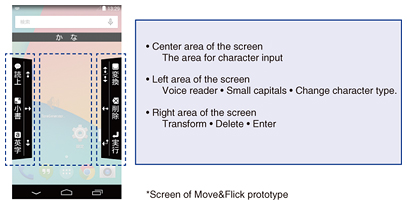

NTT Service Evolution Laboratories developed these algorithms as part of a project organized by the Ministry of Internal Affairs and Communications of the Japanese Government.

4. Design of Move&Flick

Japanese text input applications for the visually impaired must handle not only characters but also options such as delete, kana-kanji transformation, and a voice reader. Various studies have been done on text input, but few text input applications have been released that implement the research achievements. One reason is that the application designs failed to implement all required commands. Japanese characters outnumber alphanumeric characters, and there are several operations such as kana-kanji transformation and kana-katakana transformation that alphabet text input applications do not have. Therefore, NTT Service Evolution Laboratories in conjunction with NTT CLARUTY worked on enhancing the basic gestures of Move&Flick to simplify the input of all commands and effectively map gestures to commands. To provide more input modes, we focused on motions made before the basic gestures. We implemented a means of changing the input mode by entering a single tap immediately before entering the basic gestures. Most of the visually impaired users were able to learn this single tap operation in a short time. In Move&Flick, this operation is used to change the consonant. We also examined how the smartphones were held. The visually impaired tend to hold the smartphone with the non-dominant hand and operate the device with the dominant hand. Visually impaired users can discern the device width because smartphone touch screens are basically as wide as the device and the non-dominant hand is in physical contact with both sides of the device. Therefore, they can accurately touch the left and right areas, each of which has the width of a single finger (Fig. 4). To input a character, the basic gestures are performed after touching the center area.
To input an option, the basic gestures are performed after touching the left or right area.
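The area-based mode selection can be illustrated with a short sketch. The article states only that the left and right areas are one finger wide; the concrete widths in the usage example and the function names `classify_touch_area` and `input_kind` are assumptions.

```python
def classify_touch_area(x, screen_width, finger_width):
    """Classify the horizontal touch position as left, center, or right.

    The left and right areas are each one finger wide; everything in
    between is the center area. Units are arbitrary but must match.
    """
    if not 0 <= x <= screen_width:
        raise ValueError("touch position is outside the screen")
    if x < finger_width:
        return "left"
    if x > screen_width - finger_width:
        return "right"
    return "center"


def input_kind(x, screen_width, finger_width):
    """Gestures that start in the center input characters; others input options."""
    area = classify_touch_area(x, screen_width, finger_width)
    return "character" if area == "center" else "option"


if __name__ == "__main__":
    # Assumed dimensions: a 375-unit-wide screen and a 60-unit finger width.
    for x in (10, 187, 370):
        print(x, classify_touch_area(x, 375, 60), input_kind(x, 375, 60))
```

Only the horizontal position is used, which matches the observation that users judge position from the device edges held in the non-dominant hand.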

The mapping of gestures to commands must be easy to remember if the visually impaired are to learn how to operate Move&Flick. NTT Service Evolution Laboratories and NTT CLARUTY carefully refined the assignment of consonants and vowels and the classification of option commands. Since some visually impaired people are already familiar with smartphones such as iPhones*, the mapping was made as universal as possible to avoid confusion. NTT Service Evolution Laboratories had the visually impaired staff of NTT CLARUTY check each mapping change. Thus, Move&Flick was designed using a human-centered approach.

5. Experiment to evaluate the Move&Flick design

NTT Service Evolution Laboratories conducted an experiment to evaluate whether subjects were able to input kanji using Move&Flick after one hour of instruction and training. All subjects were visually impaired, and half of them had prior experience in operating smartphones. The results showed that all subjects were able to input kanji. Those experienced in the use of smartphones indicated that they wanted to use Move&Flick. Of the subjects without any experience in using smartphones, 60% also stated their desire to use Move&Flick. These results confirmed the feasibility of Move&Flick as an application for visually impaired users.

6. Preparation for application distribution

NTT DOCOMO developed Move&Flick for distribution based on the technical specifications provided by NTT Service Evolution Laboratories. Because visually impaired users tend to prefer the iPhone, the application program was written to ensure compliance with the App Store Review Guidelines. In addition, NTT DOCOMO, together with NTT CLARUTY and a software vendor, improved the application based on the opinions of visually impaired people obtained in a survey. Some key steps in the development are given below.

• Built-in Japanese dictionary software was added to the application.
• A function to change the software keyboard was added to the application.
• A function to freely adjust the voice reader speed was added.
• The operability of the application was improved.

7. Introduction of Move&Flick for the visually impaired

NTT DOCOMO advertised Move&Flick on its website, and NTT CLARUTY advertised Move&Flick in mailing lists and in communities for the visually impaired. NTT DOCOMO also held a trial session at the docomo Lounge, its main showroom in the Marunouchi area of Tokyo, to introduce many visually impaired people to Move&Flick, which is a new concept of text input appropriate for people with all levels of visual impairment.
Visually impaired people and media representatives attending the session were able to try out Move&Flick. An NTT CLARUTY staff member served as a guide and introduced Move&Flick to the participants as a useful application enabling the visually impaired to input text on a touch screen. NTT DOCOMO, together with NTT CLARUTY, has published audio manuals for Move&Flick based on the Digital Audio-based Information System (DAISY) format so that visually impaired users can learn how to use Move&Flick by themselves. In addition, the company provides a mock-up of Move&Flick (Fig. 5) to help users understand the operation of Move&Flick at docomo Hearty Plaza Marunouchi, a space dedicated to helping all users, including the visually impaired, learn how to use mobile devices. These mock-ups have also been used by NTT DOCOMO staff at various exhibitions to demonstrate how to use Move&Flick.

8. Future prospects

NTT DOCOMO is proposing Move&Flick as a new text input application for the visually impaired. Various improvements have already been made to Move&Flick based on feedback and comments received from visually impaired users. While there are several existing smartphone applications that attempt to support the visually impaired, they have not been widely adopted because of difficulties in using them. NTT DOCOMO will continue to strive to support the visually impaired with the goal of enabling them to fully enjoy their daily lives.
