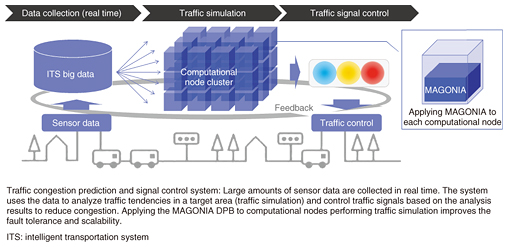

Feature Articles: Initiatives for the Widespread Adoption of NetroSphere

Vol. 14, No. 10, pp. 38–41, Oct. 2016. https://doi.org/10.53829/ntr201610fa7

MAGONIA (DPB: Distributed Processing Base) Applied to a Traffic Congestion Prediction and Signal Control System

Abstract

High computational capacity and high fault tolerance are required in advanced social infrastructures such as future intelligent transportation systems and smart grids that collect and analyze large amounts of data in real time and then provide feedback. This article introduces application examples of the distributed processing technology of MAGONIA, a new server architecture (part of NetroSphere), in a traffic congestion prediction and signal control system, one of the social infrastructures currently under development at NTT DATA.

Keywords: MAGONIA, distributed processing, intelligent transport system (ITS)

1. Introduction

NTT Network Service Systems Laboratories is currently carrying out research and development on MAGONIA, a new server architecture and part of the NetroSphere concept, to quickly create new services and drastically reduce CAPEX/OPEX (capital expenditures and operating expenses) [1, 2]. The distributed processing base (DPB), the core technology of MAGONIA, provides the high fault tolerance, scalability, and short response time that telecom systems need. Since these functions are required in systems other than telecom systems, we aim to create new services and reduce development time by increasing the application range of MAGONIA. To this end, we have been collaborating with NTT DATA in experiments [3] since September 2015 to assess its applicability to a traffic congestion prediction and signal control system [4].

2. Overview of joint experiments

The traffic congestion prediction and signal control system analyzes vehicle behavior in a target area from a large amount of sensor data obtained in real time (referred to below as traffic simulation) in order to control traffic signals based on the results (Fig. 1). The system requires high fault tolerance for commercialization. Since the computational power required by the traffic simulation changes with the traffic volume, the system must be scalable to flexibly cope with increases and decreases in load. In addition, a short response time is necessary to feed back the analysis results to traffic signals within the time limit.

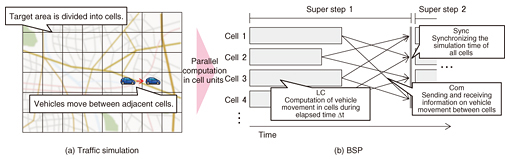

These issues must be solved to enable commercialization of the traffic congestion prediction and signal control system. Therefore, the existing traffic simulation logic was ported to the MAGONIA DPB, and the resulting system was tested to assess its feasibility in terms of fault tolerance, scalability, and response time.

3. Computational model for traffic simulation

In traffic simulation, processing must be completed within the feedback cycle required for the traffic signal control. To satisfy this requirement, the target area for simulation is divided into small areas (referred to as cells), and multiple computational nodes process the cells in parallel. Since vehicles are likely to move between cells during the simulation, the computations in each cell are not completely independent and require cooperative operation between cells. A model called bulk synchronous parallel (BSP) [5] is used to enable effective processing. BSP consists of repeated processing in units called super steps, each of which involves three phases: local computation (LC), communication (Com), and synchronization (Sync). The processing in each phase of traffic simulation is summarized in Fig. 2.

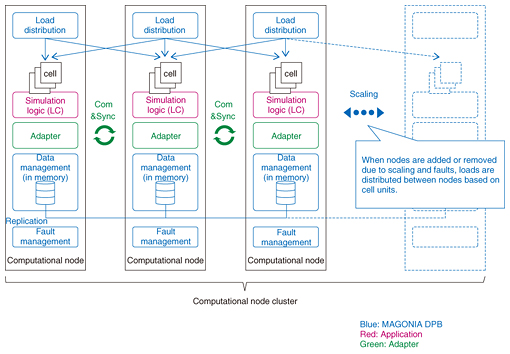
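The three BSP phases can be illustrated with a minimal Python sketch. It is not the MAGONIA implementation or the actual traffic simulation logic: the ring-shaped road, the constants `CELL_LEN` and `SPEED`, and the function `run_bsp` are all assumptions made for illustration. Each cell runs in its own thread, advances its vehicles (LC), hands vehicles that cross the cell boundary to the next cell (Com), and waits at a barrier (Sync) before the next super step starts.

```python
import threading
from queue import SimpleQueue

CELL_LEN = 10  # hypothetical cell length (arbitrary units)
SPEED = 4      # hypothetical distance a vehicle travels per super step


def run_bsp(cells, steps):
    """Run `steps` super steps over a ring of cells.

    `cells` is a list of lists of vehicle positions (0 <= pos < CELL_LEN).
    Returns the vehicle positions per cell after the last super step.
    """
    n = len(cells)
    state = [list(c) for c in cells]
    inboxes = [SimpleQueue() for _ in range(n)]  # one inbox per cell
    barrier = threading.Barrier(n)               # Sync point for all cells

    def worker(i):
        for _ in range(steps):
            # LC: advance every vehicle in this cell.
            moved = [p + SPEED for p in state[i]]
            staying = [p for p in moved if p < CELL_LEN]
            leaving = [p - CELL_LEN for p in moved if p >= CELL_LEN]
            # Com: send vehicles that crossed the boundary to the next cell.
            for p in leaving:
                inboxes[(i + 1) % n].put(p)
            # Sync: wait until every cell has finished sending.
            barrier.wait()
            while not inboxes[i].empty():
                staying.append(inboxes[i].get())
            state[i] = sorted(staying)
            # Second barrier: no cell starts sending for the next super
            # step while a neighbor is still draining its inbox.
            barrier.wait()

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state


if __name__ == "__main__":
    print(run_bsp([[0, 8], [5], []], 2))  # [[8], [6], [3]]
```

The second barrier is a design choice of this sketch: without it, a fast cell could deliver vehicles for the next super step into an inbox that its neighbor is still reading for the current one.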

4. Applying MAGONIA DPB

The effectiveness of the MAGONIA DPB has been demonstrated in telecom services. In such services, load is distributed in session units (example: call units). Because sessions are independent and stay independent in distributed processing, the operation characteristics differ considerably from the previously mentioned BSP model. To bridge this gap, new adapters supporting Com and Sync were added to the system (Fig. 3). With this approach, we have successfully implemented the traffic simulation on top of the DPB while minimizing the modification of the existing traffic simulation logic.

4.1 System overview

The traffic system implemented on top of the DPB (Fig. 3) performs the following procedure. First, the system distributes processing across the cluster of computational nodes in cell units. Each computational node then executes LC for each cell assigned to it. Upon completing LC, the node proceeds to the Com phase and sends data to adjacent cells. When the Com phase is completed, it proceeds to the Sync phase, after which it starts the next super step.

4.2 Improved fault tolerance

To guarantee fault tolerance, the DPB supports checkpointing. The checkpoint of each cell is replicated to multiple computational nodes, and should a node fail, another node holding a replica of the affected cell automatically takes over processing (failover). By replicating checkpoints and distributing failover on a cell-by-cell basis, the system limits the increase in load on the surviving nodes. In this experiment, it was verified that instant failover made it possible to complete simulation within the feedback cycle, even when a computational node failure occurred during traffic simulation. The capability to minimize the impact of faults to this high degree is a major characteristic of the MAGONIA DPB. Because checkpointing and failover are completed within the computational node cluster, fault tolerance can be achieved without relying on external data storage.

4.3 Improved scalability

The DPB supports the addition and removal of computational nodes without interrupting the simulation. To cope with load fluctuations due to changes in traffic volume, the number of computational nodes and the mapping between cells and computational nodes change dynamically to ensure that sufficient resources are available. Through experiments, it was verified that it is possible to dynamically add and remove computational nodes without interrupting traffic simulation.
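The cell-by-cell failover of section 4.2 and the dynamic remapping of section 4.3 can be sketched in Python as follows. This is a toy model under stated assumptions, not the DPB's actual placement algorithm, which the article does not describe: the functions `place`, `fail_over`, `scale_out`, and `load`, the single-backup scheme, and the node names are all hypothetical. The point it illustrates is that when the backups of one node's cells are spread over several other nodes, a failure raises the load of each survivor only slightly.

```python
from collections import Counter


def place(cells, nodes):
    """Return {cell: (primary, backup)} with backups spread over other nodes.

    Hypothetical scheme: primaries are assigned round-robin, and the backup
    offset rotates so that one node's cells are backed up on different nodes.
    """
    n = len(nodes)
    assert n >= 2, "need at least two nodes to hold a replica elsewhere"
    placement = {}
    for k, cell in enumerate(cells):
        offset = 1 + (k // n) % (n - 1)  # in 1..n-1, so backup != primary
        placement[cell] = (nodes[k % n], nodes[(k + offset) % n])
    return placement


def load(placement):
    """Count how many cells each node currently processes as primary."""
    return Counter(primary for primary, _ in placement.values())


def fail_over(placement, failed):
    """Promote the backup of every cell whose primary ran on `failed`."""
    result = {}
    for cell, (primary, backup) in placement.items():
        if primary == failed:
            primary, backup = backup, None  # backup node takes over
        elif backup == failed:
            backup = None                   # replica lost, primary unaffected
        result[cell] = (primary, backup)
    return result


def scale_out(placement, new_node):
    """Move primaries from the busiest nodes to `new_node` until the busiest
    node holds at most one cell more than `new_node`."""
    result = dict(placement)
    while True:
        counts = load(result)
        busiest, most = counts.most_common(1)[0]
        if most - counts[new_node] <= 1:
            return result
        cell = next(c for c, (p, _) in result.items() if p == busiest)
        result[cell] = (new_node, result[cell][1])


if __name__ == "__main__":
    nodes = ["n0", "n1", "n2", "n3"]
    placement = place(range(12), nodes)
    print(load(fail_over(placement, "n0")))  # each survivor gains one cell
    print(load(scale_out(placement, "n4")))
```

A real system would also re-create lost replicas after a failover and move checkpoints along with the cells when rescaling; both are omitted here to keep the sketch short.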
4.4 Reduced response time

Placing data in the memory of a computational node that executes LC reduces network and disk input/output. Additionally, overlapping LC and Com by assigning different threads to LC and Com minimizes time overheads. We were able to verify in experiments that the high speed of the new infrastructure made it possible to complete the traffic simulation within the feedback cycle to traffic signals.

5. Future outlook

In the future, we will use actual sensing data to assess the system. In addition to the telecom systems and ITS (intelligent transport systems) described here, MAGONIA will also be extended to a wide variety of services that demand fault tolerance and scalability.

References
