1. Introduction

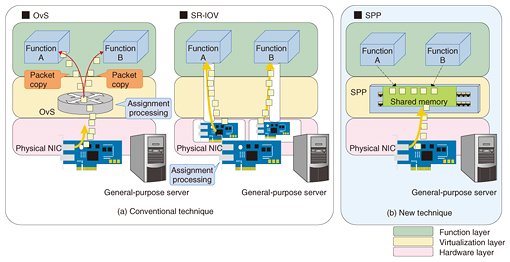

NTT aims to move away from its conventional business-to-consumer (B2C) business model based on communication lines and to become a catalyst that provides services to a variety of players. The business-to-business-to-X (B2B2X) business model represents this change of course. In this model, the middle B (in B2B2X) will be able to freely interconnect transport service functions such as carrier routers and firewalls in order to create a variety of services, as shown in Fig. 1.

Fig. 1. The B2B2X model NTT aims to achieve.

2. Function provision and virtualization technology

Transport service functions in a carrier network were formerly provided using dedicated hardware developed for each function. In this type of configuration, functions and hardware were integrated. Thus, resources that one function seldom used could not be made available to another function. This left surplus resources idle and made the network inefficient.

Virtualization technology places a virtualization layer between the hardware and each function in order to decouple the functions from the hardware. With this separation, the required amount of computational resources can be assigned to a function when it is needed, without having to consider the underlying hardware. This makes networks more flexible and efficient.
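The efficiency argument can be made concrete with a small calculation. The sketch below is only an illustration: the function names and the per-slot core demands are hypothetical numbers, not measurements from a carrier network. It compares the capacity needed when each function owns hardware sized for its own peak with the capacity needed when all functions draw from one shared pool.

```python
# Hypothetical CPU demand (in cores) of three functions over three time slots.
# The values are illustrative assumptions; each function peaks in a different slot.
demand_by_slot = [
    {"router": 6, "firewall": 1, "nat": 1},
    {"router": 1, "firewall": 6, "nat": 1},
    {"router": 1, "firewall": 1, "nat": 6},
]


def dedicated_capacity(slots):
    """Cores needed when every function has hardware sized for its own peak."""
    functions = slots[0].keys()
    return sum(max(slot[name] for slot in slots) for name in functions)


def pooled_capacity(slots):
    """Cores needed when all functions share one pool sized for the combined peak."""
    return max(sum(slot.values()) for slot in slots)


if __name__ == "__main__":
    print("dedicated:", dedicated_capacity(demand_by_slot))
    print("pooled:   ", pooled_capacity(demand_by_slot))
```

With these assumed numbers, the dedicated configuration has to be provisioned for the sum of the individual peaks, while the pooled configuration only has to cover the busiest combined slot. The gap between the two shrinks if all functions peak at the same time.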

For example, in a virtualized network where functions are separated from the hardware, there is no need for dedicated hardware per function, and a single general-purpose server can run multiple virtual machines (VMs), each of which provides a function. However, virtualization brings with it a conspicuous degradation in performance. In the following section, we introduce a case of inter-function routing in a virtualized network.

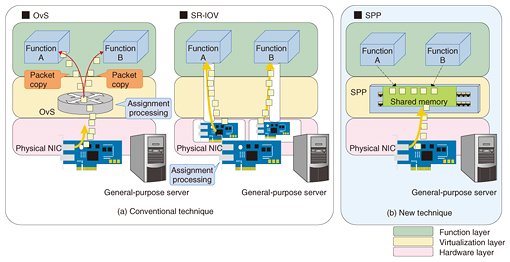

3. Problem with inter-function technique

In conventional networks that are not virtualized, hardware switches connect the equipment in order to interconnect multiple functions. In a virtualized network, multiple VMs run on a single general-purpose server. The switches that connect these VMs must therefore be created on the general-purpose server itself. As an example, in OpenStack*1, an integrated virtualization environment, the Open vSwitch (OvS)*2 software switch has become standard for connecting VMs. However, OvS does not provide adequate performance because software performs the processing that was previously performed by hardware switches. As a result, the network processing needed to pass packets from a physical network interface card (NIC) to a VM, or from one VM to another VM, tends to become a bottleneck for the entire system.

Some commercialized technologies avoid this bottleneck by bypassing software switching in the virtualization layer altogether and exposing the physical NIC directly to the VM (pass-through technology). For example, single root input/output virtualization (SR-IOV)*3, one such pass-through technology, exposes the physical NIC directly to the VM and performs the switching with hardware functions in the physical NIC instead of a software switch. However, increasing the number of VMs without adding physical NICs creates bottlenecks in the hardware, and connection switching cannot be performed dynamically. This makes the separation of functions and hardware incomplete, which greatly reduces the advantages of virtualization.

*1 OpenStack: Software developed as open source for building a cloud environment.

*2 OvS: A virtualization switch developed as open source.

*3 SR-IOV: A pass-through technology that improves I/O performance in a virtualized environment.

4. Soft Patch Panel

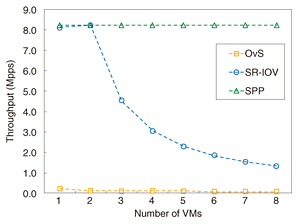

Soft Patch Panel (SPP) is a new technique that enables high-speed and flexible inter-function connections and thereby resolves the problems described in the previous section [1]. SPP speeds up processing in a way that differs from the pass-through approach of SR-IOV, which exposes the physical NIC directly to the VM. In SPP, shared memory is provided between VMs, and because each VM can directly reference the same memory area, the copying of packets that creates a bottleneck in a conventional virtualization layer is eliminated (Fig. 2). Moreover, a high-speed technology called the Intel Data Plane Development Kit (Intel DPDK)*4 is used to exchange packets between the physical NIC and shared memory.

Fig. 2. Configuration image of SPP and conventional technique.
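The shared-memory idea described above can be illustrated with a minimal sketch. This is not SPP or DPDK code: it uses Python's standard `multiprocessing.shared_memory` module, and two handles inside one process stand in for two VMs. The payload and the function name `demo_shared_packet_buffer` are hypothetical. The point it shows is that a packet written once into a shared region is visible through the other handle without being transferred.

```python
from multiprocessing import shared_memory

# Hypothetical packet payload used only for this illustration.
PACKET = b"\x45\x00hello-packet"


def demo_shared_packet_buffer():
    """Write a packet through one handle and observe it through another."""
    # The "producer" side (standing in for one VM) allocates the shared region.
    producer = shared_memory.SharedMemory(create=True, size=2048)
    try:
        # The "consumer" side (standing in for another VM) attaches by name.
        consumer = shared_memory.SharedMemory(name=producer.name)
        try:
            # The packet is written once, directly into the shared region.
            producer.buf[:len(PACKET)] = PACKET
            # The consumer sees the same bytes; bytes() copies them here only
            # so that the result can outlive the shared region.
            seen = bytes(consumer.buf[:len(PACKET)])
            # An in-place change by the producer is immediately visible to the
            # consumer, which shows that both handles reference one memory area.
            producer.buf[0] = 0x46
            first_byte = consumer.buf[0]
        finally:
            consumer.close()
    finally:
        producer.close()
        producer.unlink()
    return seen, first_byte


if __name__ == "__main__":
    print(demo_shared_packet_buffer())
```

In a real deployment the two sides would be separate VMs and the region would be managed by the data plane rather than by Python, so this sketch only conveys why referencing a common memory area removes the per-hop packet copy.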

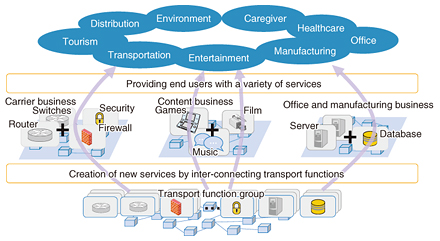

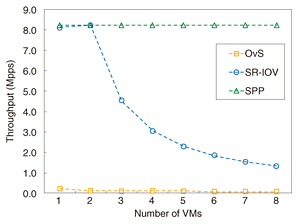

This configuration enables SPP to perform as well as or better than SR-IOV. As stated above, when SR-IOV is used and the number of VMs increases, the hardware function of the physical NIC performs the packet distribution, which means that a physical NIC must be added to obtain sufficient processing performance. In contrast, SPP operates at high speed in a configuration where functions are separated from hardware. High performance can thus be obtained without adding a physical NIC, as shown in Fig. 3.

Fig. 3. Results of SPP performance assessments.

In this type of configuration, the input and output destinations of packets can be changed by using software to control which memory area each VM references. SPP can therefore switch connections dynamically, both between VMs and between a VM and a physical NIC. Network operators do not need to be aware of complex details such as the memory space, and simple terminal operations are sufficient to change connections in real time. This type of connection switching used to be performed with physical patch panels. SPP now provides a patch panel for virtualized networks, enabling network control via software.
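The patch-panel behavior described above can be sketched as a small model. The class `PatchPanel`, its methods, and the port names `nic0`, `vm1`, and `vm2` are hypothetical and are not the interface of the SPP software. The sketch only shows how rewriting a software mapping redirects traffic at runtime without touching the endpoints.

```python
from collections import deque


class PatchPanel:
    """Toy model of a software patch panel: a mutable source-to-destination map."""

    def __init__(self, ports):
        # One receive queue per port (a port is a physical NIC or a VM).
        self.queues = {port: deque() for port in ports}
        # Current patches: source port -> destination port.
        self.patches = {}

    def patch(self, src, dst):
        """Connect src to dst, replacing any existing patch from src."""
        for port in (src, dst):
            if port not in self.queues:
                raise KeyError(f"unknown port: {port}")
        self.patches[src] = dst

    def unpatch(self, src):
        """Remove the patch from src, if one exists."""
        self.patches.pop(src, None)

    def send(self, src, packet):
        """Deliver a packet from src to its patched destination.

        Returns False when src is not patched to anything.
        """
        dst = self.patches.get(src)
        if dst is None:
            return False
        self.queues[dst].append(packet)
        return True


if __name__ == "__main__":
    panel = PatchPanel(["nic0", "vm1", "vm2"])
    panel.patch("nic0", "vm1")
    panel.send("nic0", b"first")
    panel.patch("nic0", "vm2")   # re-patch while the system is running
    panel.send("nic0", b"second")
    print({port: list(queue) for port, queue in panel.queues.items()})
```

Here the re-patch is a single dictionary update, which mirrors the idea that an operator changes a connection with a simple command rather than by reconfiguring memory or hardware.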

Use of SPP as basic technology in the infrastructure allows network operators and the middle B to flexibly combine transport service functions in a carrier network without degrading performance.

*4 Intel DPDK: High-speed technology that omits packet processing in the operating system kernel. Intel is a trademark of Intel Corporation or its subsidiaries in the U.S. and/or other countries.

5. Future outlook

SPP is being developed in a collaborative research project with Intel Corporation. NTT and Intel share objectives and directions and discuss product requirements. Then Intel produces a product based on these discussions, and NTT assesses it. The implemented SPP has been made available on the Internet as open source software. Commercialization requires continued comprehensive efforts such as improving security and adding maintenance management functions.

Going forward, we will further improve SPP by utilizing it in various use scenarios and making it even easier to use so that it will contribute to the creation of new services, bring added value, and help to realize the NetroSphere concept.

Reference

Tetsuro Nakamura

Project Team Member, Server Network Innovation Project, NTT Network Service Systems Laboratories.

He received a B.E. and M.E. in applied physics and physico-informatics from Keio University, Kanagawa, in 2012 and 2014. He joined NTT Telecommunication Networks Laboratory in 2014. He is currently studying network functions virtualization. He is a member of the Institute of Electronics, Information and Communication Engineers (IEICE).

Yasufumi Ogawa

Research Engineer, Server Network Innovation Project, NTT Service Systems Laboratories.

He received an M.E. in electrical engineering from Osaka University in 2003 and joined NTT the same year. His research interests include distributed systems, network virtualization, and network architectures. He is a member of IEICE.

Naoki Takada

Senior Research Engineer, Server Network Innovation Project, NTT Service Systems Laboratories.

He received a B.E. in computer science from Sendai National College of Technology, Miyagi, in 1996. He joined NTT in 1996. From 1999 to 2008, he was engaged in the design and development of mobile phone applications at NTT Software. He moved to NTT EAST R&D Center and then NTT Service Systems Laboratories, where he worked on the design and development of the network control system of the Next Generation Network (NGN). He is currently studying network functions virtualization.

Hiroyuki Nakamura

Senior Research Engineer, Supervisor, Server Network Innovation Project, NTT Network Service Systems Laboratories.

He received a B.E. in mathematics from Kumamoto University in 1990. He joined NTT Telecommunication Networks Laboratory in 1990 and studied telecommunication network service systems including a real-time operating system, personal handy phone and Integrated Services Digital Network systems, and software development. During 1999–2002, he was involved in designing and developing software for a VoIP-PSTN-GW (voice over Internet protocol public switched telephone network gateway) SIP (Session Initiation Protocol) server. He then worked on the design and development of NGN. He is currently studying software design for future network service systems.