
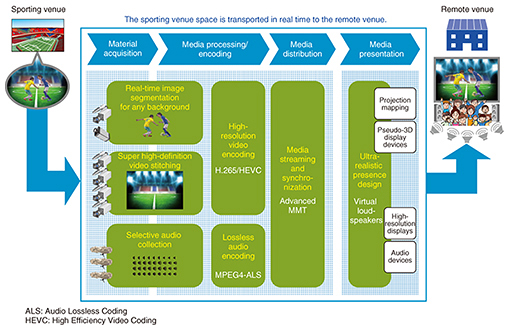

Feature Articles: 2020 Showcase

Vol. 14, No. 12, pp. 30–35, Dec. 2016. https://doi.org/10.53829/ntr201612fa5

2020 Public Viewing—Kirari! Immersive Telepresence Technology

Abstract

NTT Service Evolution Laboratories is conducting research and development on an immersive telepresence technology called Kirari! to achieve ultra-realistic services that give off-site viewers in remote locations an experience akin to actually being at a sporting or live venue. This article gives an overview of the technologies supporting Kirari!, including advanced media streaming and synchronization (Advanced MMT (MPEG Media Transport)), real-time image segmentation for any background, ultra-realistic presence design (virtual loudspeakers), and super high-definition video stitching. Initiatives using these technologies are also introduced.

Keywords: ultra-realistic, immersive telepresence, media processing/synchronization technology

1. Introduction

Diverse ways of viewing sporting events beyond conventional television coverage have recently been introduced for international competitions and for popular domestic sports such as baseball and soccer. These include live distribution over the Internet and public viewings at off-site venues. Further, with the spread of 4K and 8K broadcasts, people around the world are expected to share in the excitement of sporting events in 2020 through television and public viewings. To respond to this worldwide diversification in viewing styles, NTT Service Evolution Laboratories announced the concept of Kirari! immersive telepresence technology in February 2015 [1]. Kirari! will implement high-resolution and ultra-realistic presence technologies to deliver the space of a sporting venue in real time throughout Japan and the world. The goal of Kirari! is to enable viewers anywhere in the world to experience sporting events as though they were actually at the venue.

2. Technologies supporting ultra-realistic services

Two main technical issues arose in implementing ultra-realistic, real-time transmission of the video space with Kirari! (Fig. 1).

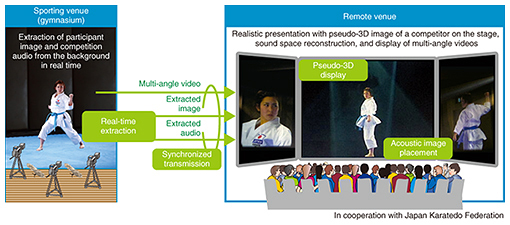

We analyzed the technical elements needed to resolve these difficulties and carried out research and development (R&D) on each of them. As a result, at the NTT R&D Forum held in February 2016, we presented real-time pseudo-three-dimensional (3D) video transmission of athletes giving a karate demonstration and achieved an ultra-realistic viewing experience (Fig. 2). The technical elements we developed and the results obtained are outlined below.

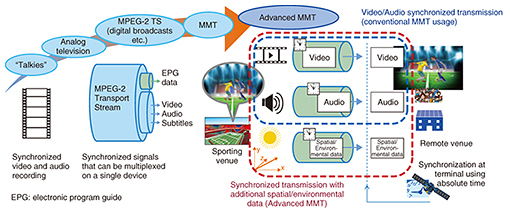

3. Advanced media streaming and synchronization (Advanced MMT)

We developed technology to synchronize and transport video and audio together with spatial information by extending MPEG (Moving Picture Experts Group) Media Transport (MMT), a protocol optimized for media synchronization. We added definitions to MMT signaling that describe 3D information such as the size, position, and direction of MMT assets (Fig. 3). This makes it possible to correlate physical spatial parameters, such as the size and position of the display device, with the asset data (frame pixel data), so that the space can be reconstructed at the destination with high realism and at the intended size. In addition, transmitting DMX (Digital Multiplex) signals, which are commonly used in production to control stage lighting and audio devices, together with the MMT assets enables realistic presentations in which remote stage equipment is accurately synchronized with the media.
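The article does not give the actual MMT signaling syntax, so the following is only a sketch of the two ideas in the paragraph above, under stated assumptions. `SpatialDescriptor`, `Display`, `DmxCue`, their field names, and their units are all hypothetical. The first part shows why physical size information matters: given an asset's real-world size and the receiving display's physical size, the receiver can compute the pixel rectangle that reproduces the subject life-size. The second part assumes DMX channel changes are stamped on the same presentation clock as the media, so the receiver can reconstruct the state of a 512-channel DMX universe at any media time.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class SpatialDescriptor:
    """Hypothetical spatial fields carried with one MMT asset (not the real syntax)."""
    asset_id: str
    width_m: float                          # real-world width the asset depicts
    height_m: float                         # real-world height the asset depicts
    position_m: tuple[float, float, float]  # asset centre in venue coordinates
    yaw_deg: float                          # facing direction about the vertical axis


@dataclass(frozen=True)
class Display:
    """Physical description of the receiving display."""
    width_px: int
    height_px: int
    width_m: float
    height_m: float


def life_size_rect(desc: SpatialDescriptor, disp: Display) -> tuple[int, int]:
    """Pixel size at which the asset must be drawn to appear at its real size."""
    w = round(desc.width_m * disp.width_px / disp.width_m)
    h = round(desc.height_m * disp.height_px / disp.height_m)
    return w, h


@dataclass(frozen=True)
class DmxCue:
    """One DMX channel change, stamped on the same clock as the media (assumption)."""
    pts_s: float   # presentation time in seconds
    channel: int   # 1..512 within one DMX universe
    value: int     # 0..255


def dmx_frame_at(cues: list[DmxCue], media_clock_s: float) -> bytes:
    """State of the DMX universe once the media clock reaches media_clock_s."""
    frame = bytearray(512)
    for cue in sorted(cues, key=lambda c: c.pts_s):
        if cue.pts_s > media_clock_s:
            break
        frame[cue.channel - 1] = cue.value
    return bytes(frame)


# Usage: a 0.8 m x 1.8 m athlete shown on a 4 m x 2.25 m 4K screen.
athlete = SpatialDescriptor("athlete-1", 0.8, 1.8, (0.0, 0.9, 5.0), 180.0)
screen = Display(3840, 2160, 4.0, 2.25)
print(life_size_rect(athlete, screen))  # (768, 1728)
```

Keying the lighting cues to the media presentation time, rather than to wall-clock arrival time, is what lets remote stage equipment stay in step with the picture even when network delay varies.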

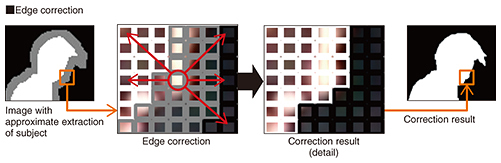

4. Real-time image segmentation for any background

To implement Kirari!, it was necessary to extract the desired subject from the captured video and display it so that it resembled the real thing. We developed a technology for extracting the subject from any background; it can be used in situations such as sporting events, plays, and lectures, where it is difficult to use a green screen or other special background (Fig. 4). The first step extracts the approximate region of the subject using sensor data (trimap generation). The second step extracts the subject itself in real time. For this step, we developed a framework that identifies the boundary between the subject and the background and accurately separates them using nearest neighbor search in the color space. It also uses clustering, with constraints that ensure that foreground and background colors do not overlap.
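The two-step flow described above (trimap from sensor data, then nearest neighbor classification in color space with non-overlapping color clusters) can be sketched as follows. This is not NTT's algorithm, which the article does not detail; it is a simple stand-in under explicit assumptions: the sensor is a depth map, the clustering is plain k-means, and the non-overlap constraint is a hypothetical minimum distance `min_sep` between foreground and background centroids. All function names are illustrative, and the output is a hard binary mask rather than a soft alpha matte.

```python
import math

FG, BG, UNKNOWN = 255, 0, 128  # trimap labels


def trimap_from_depth(depth, near, far, band=1):
    """Step 1: rough subject region from a depth map (assumed sensor data).

    Pixels with near <= depth <= far are foreground, the rest background, and any
    pixel within `band` pixels of a foreground/background transition is unknown.
    """
    h, w = len(depth), len(depth[0])
    inside = [[near <= depth[y][x] <= far for x in range(w)] for y in range(h)]
    trimap = [[FG if inside[y][x] else BG for x in range(w)] for y in range(h)]
    for y in range(h):
        for x in range(w):
            for ny in range(max(0, y - band), min(h, y + band + 1)):
                for nx in range(max(0, x - band), min(w, x + band + 1)):
                    if inside[ny][nx] != inside[y][x]:
                        trimap[y][x] = UNKNOWN
    return trimap


def kmeans(colors, k, iters=10):
    """Plain k-means on RGB tuples with deterministic initial centroids."""
    k = max(1, min(k, len(colors)))
    centroids = [colors[i * len(colors) // k] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in centroids]
        for c in colors:
            j = min(range(k), key=lambda i: math.dist(c, centroids[i]))
            groups[j].append(c)
        centroids = [tuple(sum(ch) / len(g) for ch in zip(*g)) if g else centroids[i]
                     for i, g in enumerate(groups)]
    return centroids


def separate(fg_c, bg_c, min_sep):
    """Constraint: drop centroids within min_sep of the other class's centroids."""
    fg_keep = [f for f in fg_c if all(math.dist(f, b) >= min_sep for b in bg_c)]
    bg_keep = [b for b in bg_c if all(math.dist(b, f) >= min_sep for f in fg_c)]
    return (fg_keep or fg_c), (bg_keep or bg_c)


def segment(image, trimap, k=3, min_sep=30.0):
    """Step 2: label each unknown pixel by its nearest FG or BG color centroid."""
    cells = [(y, x, t) for y, row in enumerate(trimap) for x, t in enumerate(row)]
    fg = [image[y][x] for y, x, t in cells if t == FG]
    bg = [image[y][x] for y, x, t in cells if t == BG]
    if not fg or not bg:
        raise ValueError("trimap needs both foreground and background samples")
    fg_c, bg_c = separate(kmeans(fg, k), kmeans(bg, k), min_sep)
    out = [list(row) for row in trimap]
    for y, x, t in cells:
        if t == UNKNOWN:
            d_fg = min(math.dist(image[y][x], c) for c in fg_c)
            d_bg = min(math.dist(image[y][x], c) for c in bg_c)
            out[y][x] = FG if d_fg <= d_bg else BG
    return out


# Usage: a one-row toy frame; the subject (red) stands 1 m away, the wall 3 m away.
depth = [[1.0, 1.0, 3.0, 3.0, 3.0, 3.0]]
image = [[(200, 0, 0), (190, 10, 10), (10, 10, 190), (0, 0, 200), (0, 0, 200), (0, 0, 200)]]
tri = trimap_from_depth(depth, near=0.5, far=2.0)
print(tri)                  # [[255, 128, 128, 0, 0, 0]]
print(segment(image, tri))  # [[255, 255, 0, 0, 0, 0]]
```

Restricting the color search to the narrow unknown band is what makes a per-frame approach plausible in real time: most pixels are labeled directly by the cheap sensor-based trimap.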

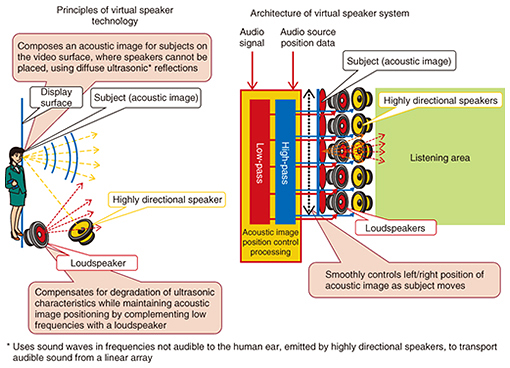

5. Ultra-realistic presence design (virtual loudspeakers)

We developed a highly realistic acoustic image placement technology that uses the diffuse reflection of ultrasonic waves to position acoustic images within the video image, where loudspeakers cannot be placed. Compared with earlier surround-sound speaker systems, it positions virtual sound sources realistically with fewer speakers and over a larger listening area (Fig. 5). To compensate for the degraded characteristics of ultrasonic reproduction, electrodynamic speakers are used to supply the low frequencies. This technology enables virtual sound sources to be generated at any location on full-size images displayed on a large screen, so that realistic effects, such as voices or competition sounds that originate from their source on the screen, can be produced with a simple configuration.
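The division of labor described above, with ultrasonic reproduction complemented by electrodynamic speakers at low frequencies, can be illustrated with a minimal signal-chain sketch. It assumes a parametric-array style ultrasonic speaker that amplitude-modulates audio onto an ultrasonic carrier; the article does not say how the ultrasonic signal is generated. The 300 Hz crossover, 40 kHz carrier, and 192 kHz sample rate are hypothetical values, and the sketch does not model beam steering or the diffuse reflection off the screen that creates the virtual source.

```python
import math


def one_pole_lowpass(x, cutoff_hz, fs):
    """First-order IIR low-pass filter: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / fs)
    y, state = [], 0.0
    for s in x:
        state += a * (s - state)
        y.append(state)
    return y


def split_bands(x, cutoff_hz, fs):
    """Complementary crossover: the low band feeds the electrodynamic speaker,
    the remainder feeds the ultrasonic path, and low + high reconstructs x."""
    low = one_pole_lowpass(x, cutoff_hz, fs)
    high = [s - l for s, l in zip(x, low)]
    return low, high


def am_modulate(x, carrier_hz, fs, depth=0.8):
    """Double-sideband AM with carrier, as in parametric speakers (assumption).

    Assumes |x[n]| <= 1 so that the envelope 1 + depth * x[n] stays non-negative.
    """
    if carrier_hz >= fs / 2:
        raise ValueError("carrier must be below the Nyquist frequency")
    return [(1.0 + depth * s) * math.sin(2.0 * math.pi * carrier_hz * n / fs)
            for n, s in enumerate(x)]


FS = 192_000      # hypothetical sample rate, high enough for a 40 kHz carrier
CARRIER = 40_000  # hypothetical ultrasonic carrier frequency in Hz
CROSSOVER = 300   # hypothetical crossover frequency in Hz

# 0.1 s test signal: a 100 Hz component plus a 3 kHz component.
signal = [0.5 * math.sin(2 * math.pi * 100 * n / FS)
          + 0.5 * math.sin(2 * math.pi * 3000 * n / FS) for n in range(FS // 10)]
woofer_feed, high_band = split_bands(signal, CROSSOVER, FS)
ultrasonic_feed = am_modulate(high_band, CARRIER, FS)
```

A complementary split (high = input minus low) is used here because it guarantees the two speaker feeds sum back to the original signal; a real system would more likely use higher-order crossover filters.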

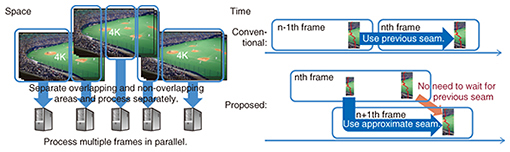

6. Super high-definition video stitching

As a first step toward a highly realistic service with ultra-wide video that is not possible with ordinary 16:9 television video, we established algorithms and a system architecture that capture video with five 4K cameras arranged horizontally and composite it in real time (Fig. 6). Suppressing flicker requires positioning (alignment) information from the previous frame. To process the large amount of 4K image data at high speed, the data are partitioned, and a mechanism is used that enables successive frames to be processed without waiting for the previous frame's positioning result. These innovations made real-time (4K60p) processing possible.
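The two mechanisms in the paragraph above, partitioning the data and not waiting for the previous frame's positioning, can be sketched as follows. This is an illustration under assumptions, not the system's actual design: the fixed per-seam overlap, the column-strip partitioning, the two-frame lag, and the exponential smoothing of a single seam offset are all hypothetical choices. With `lag=2`, frame n uses only alignment results from frame n-2 or earlier, which can already be finished while frame n-1 is still being processed; smoothing those results damps the frame-to-frame jumps that would appear as flicker.

```python
from concurrent.futures import ThreadPoolExecutor

CAM_WIDTH_PX = 3840  # horizontal resolution of one 4K UHD camera
NUM_CAMS = 5


def panorama_width(overlap_px):
    """Output width when NUM_CAMS images are joined with a fixed overlap per seam."""
    return NUM_CAMS * CAM_WIDTH_PX - (NUM_CAMS - 1) * overlap_px


def partition(width, parts):
    """Split columns [0, width) into `parts` contiguous, near-equal strips."""
    step, rem = divmod(width, parts)
    strips, start = [], 0
    for i in range(parts):
        end = start + step + (1 if i < rem else 0)
        strips.append((start, end))
        start = end
    return strips


def process_strips(width, parts, work):
    """Apply work(start, end) to every strip concurrently; results keep strip order."""
    with ThreadPoolExecutor(max_workers=parts) as pool:
        return list(pool.map(lambda s: work(*s), partition(width, parts)))


def lagged_seam_offsets(raw, initial, lag=2, alpha=0.5):
    """Seam offset used to compose each frame.

    raw[k] is the alignment estimate computed from frame k. Frame n folds in only
    raw[n - lag], so composition never blocks on the previous frame's alignment;
    the exponential moving average damps jumps that would show up as flicker.
    """
    used, ema = [], initial
    for n in range(len(raw)):
        k = n - lag
        if k >= 0:
            ema = (1 - alpha) * ema + alpha * raw[k]
        used.append(ema)
    return used


# Usage: five 4K cameras with a hypothetical 384-pixel overlap at each of 4 seams.
print(panorama_width(384))  # 17664, i.e. 5 * 3840 - 4 * 384
print(partition(17664, 4))  # [(0, 4416), (4416, 8832), (8832, 13248), (13248, 17664)]
```

In CPython, threads do not speed up pure-Python pixel work, so the thread pool only shows the structure of strip-parallel processing; a real-time 4K60p system would run the per-strip work in native code or on GPUs.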

7. Future development

We plan to conduct real-time transmission trials of these technologies, focusing mainly on individual sports. Beyond sporting events, we also intend to expand to other types of events that have been difficult for many people to enjoy remotely, including public viewings of traditional performances, regional festivals, intangible cultural assets, music concerts, and live coverage of lectures. In further R&D, we plan to extend Kirari! to support competitions with multiple athletes (in which large subjects overlap), so that in the future large numbers of competitors, or multiple subjects moving over a wide area, can be presented. This will be applied to sports such as judo and soccer that are currently difficult to present in this way. We are conducting R&D on the elemental technologies needed to provide ultra-realistic services, with the goal of making viewing experiences close to actually being at a sporting venue available anywhere in the world by 2020.

Reference
