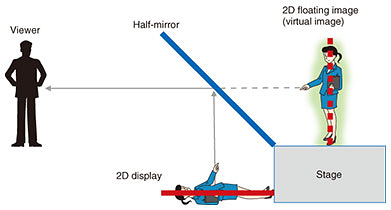

Feature Articles: Ultra-realistic Communication with Kirari! Technology

Vol. 16, No. 12, pp. 19–23, Dec. 2018. https://doi.org/10.53829/ntr201812fa3

“Kirari! for Arena”—Highly Realistic Public Viewing from Multiple Directions

Abstract

NTT Service Evolution Laboratories has developed a display system that achieves highly realistic public viewing surrounding the venues of sporting events. We use human visual perception to make viewers perceive the subject in space, including its motion in the depth direction, so that the subject appears to be actually in front of them. This article gives an overview of an approach that presents motion in the depth direction by using visual perception. It also describes a display system that can be built with a simple configuration and can be viewed from anywhere around the display.

Keywords: highly realistic, depth perception, floating image

1. Highly realistic public viewing

Media that let viewers gather in one place to enjoy sports events range from television (TV) screens placed where passers-by on the street can watch them to public viewing of various events. These media have allowed viewers to share in the excitement of an event by strengthening the connection between them. We expect that, with the spread of 4K and 8K broadcasts in 2020, many more sporting events from around the world will be covered on TV. We also expect this excitement to be shared much more widely, regardless of location or time, through diverse viewing styles such as live distribution on the Internet and informal public events in the street. With this diversification of viewing styles in mind, NTT Service Evolution Laboratories is conducting research and development on “Kirari!”, an immersive telepresence technology. “Kirari!” implements highly realistic public viewing in real time from any location, giving viewers the sense of actually being at an event taking place elsewhere.
Research is in progress on various imaging methods for “Kirari!”. One method uses two-dimensional (2D) floating images and can display life-sized images as though they were actually on the stage in front of the viewer. This method relies on virtual images. It uses a simple configuration that combines an ordinary 2D display with an optical element such as a half-mirror, which transmits part of the incident light and reflects the rest (Fig. 1). Because the image is presented at a position away from the display, it appears to float in the same space as the viewer, and subjects in the image are perceived as real objects [1]. This method can be used for public viewing of sports events, giving a sense of reality as though the event were actually happening in front of the viewer.

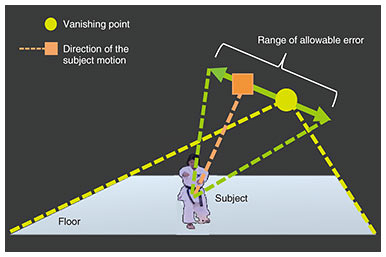
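Elementary mirror geometry illustrates how the half-mirror configuration places the image away from the display. A planar mirror forms the virtual image of each display point at that point's reflection across the mirror plane. The sketch below assumes an ideal planar half-mirror and models only the reflected light. It also assumes a hypothetical layout in which the display lies face-up on the floor under a half-mirror tilted at 45 degrees. The function `reflect_point` and all coordinates are illustrative and are not taken from the article.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x: lateral, y: height, z: depth), metres


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reflect_point(p: Vec3, n: Vec3, d: float) -> Vec3:
    """Reflect point p across the plane {x : n . x = d}.

    For an ideal planar mirror, this reflection is where the virtual image of p
    appears (assumption: only the light reflected by the half-mirror is modeled).
    """
    norm = math.sqrt(dot(n, n))
    u = (n[0] / norm, n[1] / norm, n[2] / norm)  # unit normal of the mirror
    s = dot(u, p) - d / norm  # signed distance from p to the mirror plane
    return (p[0] - 2 * s * u[0], p[1] - 2 * s * u[1], p[2] - 2 * s * u[2])


# Hypothetical layout: display face-up on the floor (y = 0) for 0 <= z <= 1,
# half-mirror on the 45-degree plane y + z = 1, viewer in front at negative z.
MIRROR_N, MIRROR_D = (0.0, 1.0, 1.0), 1.0

for z0 in (0.2, 0.5, 0.8):
    # Each display point maps (up to rounding) to (0, 1 - z0, 1), so the image
    # forms on the vertical plane z = 1, away from the display surface itself.
    print(z0, reflect_point((0.0, 0.0, z0), MIRROR_N, MIRROR_D))
```

In this layout, the flat display produces a vertical image that stands behind the mirror. This is the "floating" placement the text describes.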

2. Greater reality using floating images

In public viewing, many viewers usually gather at the same location to watch the content at the same time, so the content must be presented with correct spatial positions for a wide range of viewpoints. Until now, however, TVs and screens have shown every viewer images from a single viewpoint. As a result, the positions and distances of subjects in the content could not be reproduced correctly for each viewer. The same issue arises with 2D floating images. Many methods have been developed to present the spatial positions of subjects over a wide range of viewpoints [2, 3]. However, most of them require many projectors or special optical systems. The resulting large, complex equipment makes it difficult to present content at life size, so these methods have been hard to apply to public viewing.

We have instead focused on 2D floating images. Their simple configuration can be scaled up to larger content and can easily be applied to smartphones and tablets [4]. With 2D floating images, however, the virtual image plane is fixed in space, so motion in the depth direction cannot be reproduced. Prior research added motion in the depth direction to 2D floating images [5], but it was limited to a single viewer and required special glasses. To date, there have been no attempts to reproduce the motion of subjects in the depth direction for multiple viewers over a wide range of viewpoints. In this article, we propose a method with a simple configuration that presents motion in the depth direction to multiple viewers by exploiting psychological depth-perception effects. We also introduce a display system that applies this method to enable viewing from all around.

3. Perspective and depth perception

Perspective has long been known as a way to express depth based on psychological factors.
When the positions and sizes of subjects are drawn as they would appear to the eye, the viewer perceives considerable depth, even in a picture or on another fixed 2D surface. Applying perspective to 2D floating images likewise makes it possible to present motion in the depth direction. In a perspective drawing, as a subject's distance increases, its position converges toward a single point and its size shrinks; this point is called the vanishing point. For example, if the left and right edges of a floor are extended infinitely in the depth direction, they cross at the vanishing point in the image. A subject moving straight in the depth direction also moves toward this point. With multiple viewpoints, each viewpoint has a different vanishing point, so the direction in which a subject must move also differs for each viewpoint. When 2D floating images are displayed, each viewpoint has its own correct vanishing point, determined by the stage and other real objects. However, subjects displayed as floating images can be matched to the vanishing point of only one viewpoint. When such images are seen from other viewpoints, motion in the correct depth direction cannot be represented.

4. Method to present motion in the depth direction

Empirically, as shown in Fig. 2, people still perceive motion in the depth direction even when there is some error. That is, perception holds even when the direction of the subject's motion (the square and the dotted line connected to it) does not point exactly to the vanishing point of the floor plane (the circle). We experimentally verified this effect and defined the range of allowable error within which motion in the depth direction is still recognized [6]. We also proposed a method for representing motion in the depth direction for multiple viewpoints. The method sets this permissible range for each viewpoint and restricts the motion of subjects to the range common to all viewpoints.

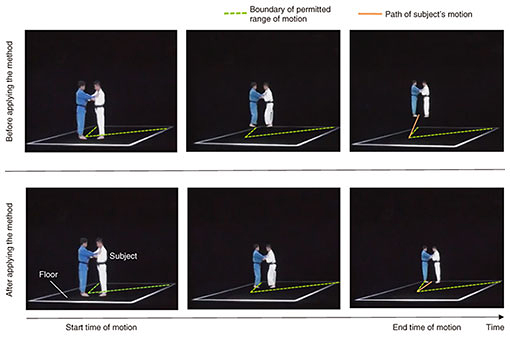
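The sketch below makes the perspective geometry of Section 3 concrete. It shows why a single floating image cannot match every viewer's vanishing point. It is a minimal illustration under several assumptions: each viewer is an ideal pinhole eye, the virtual image plane is the vertical plane `z = plane_z`, and the floor's depth direction is +z. The function names and the three-viewer scene are hypothetical. For a viewer at `eye`, lines running in a given direction appear to converge where that viewer's own ray in the same direction pierces the image plane. Viewers standing at different places therefore get different vanishing points on the same plane.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]         # (x, y) on the virtual image plane
Vec3 = Tuple[float, float, float]  # (x: lateral, y: height, z: depth)


def vanishing_point_on_plane(eye: Vec3, direction: Vec3, plane_z: float) -> Vec2:
    """Point on the plane z = plane_z where lines along `direction` seem to meet.

    Seen from `eye`, all lines parallel to `direction` converge toward the point
    where the viewer's own ray along `direction` pierces the plane.
    """
    ex, ey, ez = eye
    dx, dy, dz = direction
    if dz <= 0 or ez >= plane_z:
        raise ValueError("viewer must face the plane and the direction must recede")
    t = (plane_z - ez) / dz
    return (ex + t * dx, ey + t * dy)


def direction_error_deg(feet: Vec2, motion: Vec2, vp: Vec2) -> float:
    """Angle between a displayed 2D motion and the direction from feet to vp."""
    a = math.atan2(motion[1], motion[0])
    b = math.atan2(vp[1] - feet[1], vp[0] - feet[0])
    diff = abs(a - b) % (2 * math.pi)
    return math.degrees(min(diff, 2 * math.pi - diff))


# Hypothetical scene: virtual image plane z = 0, three viewers 4 m in front of
# it at eye height 1.6 m, and floor edges running straight back along +z.
viewers = {"left": (-2.0, 1.6, -4.0), "center": (0.0, 1.6, -4.0), "right": (2.0, 1.6, -4.0)}
feet, motion = (0.0, 0.0), (0.0, 1.0)  # displayed motion aimed at the center viewer's point

for name, eye in viewers.items():
    vp = vanishing_point_on_plane(eye, (0.0, 0.0, 1.0), plane_z=0.0)
    print(name, vp, round(direction_error_deg(feet, motion, vp), 1))
```

In this scene, the center viewer sees no error, while the left and right viewers each see an error of roughly 51 degrees. The Section 4 method handles exactly this kind of mismatch by asking whether the error stays within a tolerated range for every viewer.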

The permissible range of error within which motion in the depth direction is recognized depends strongly on the positional relationship between the subject and the floor plane. Consider the triangular region whose vertices are the subject's feet and the left and right ends of the back edge of the floor plane. If the subject's motion stays within this region, the motion in the depth direction looks less unnatural. On this basis, we formulated our method for representing motion in the depth direction with 2D floating images as an algorithm. The algorithm determines the subject's position in each frame from information on the subject position and the viewing range. We can also adjust how subjects are rendered in the virtual image so that they stay in contact with the real floor surface. With this method, the motion of subjects in the depth direction can be represented without apparent inconsistencies while the subjects remain grounded on the floor. Figure 3 shows the subject's path of motion before and after this depth assignment method is applied. The solid line is the subject's movement, and the permitted range of motion is the region bounded by the dotted line and the floor surface. Before the method is applied, the path leaves the permitted region; after it is applied, the path stays within it. This result shows that, for life-sized subjects moving within a 10 m × 10 m floor area, motion over a depth range of 7.5 to 9.0 m can be represented.

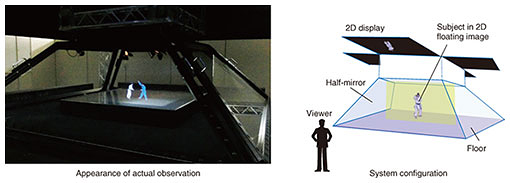
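The triangle condition and the idea of restricting motion to the range common to all viewpoints can be sketched in code. The article does not publish its per-frame algorithm [6], so the following is a hypothetical illustration, not the authors' method. It makes these assumptions:

- Each viewer is a pinhole eye.
- The subject is drawn on a vertical virtual image plane `z = plane_z` with its feet at floor level.
- Motion in depth appears as a vertical shift `dy` of the feet on that plane.
- Only the wedge of the triangle at the subject's feet is enforced; the back edge is ignored.

The names `to_plane`, `feet_wedge`, and `constrain_step` and the scene values are made up for illustration.

```python
import math
from typing import Iterable, Tuple

Vec2 = Tuple[float, float]         # (x, y) on the virtual image plane
Vec3 = Tuple[float, float, float]  # (x: lateral, y: height, z: depth)


def to_plane(eye: Vec3, point: Vec3, plane_z: float) -> Vec2:
    """Project a 3D point onto the plane z = plane_z along the ray from `eye`."""
    t = (plane_z - eye[2]) / (point[2] - eye[2])
    return (eye[0] + t * (point[0] - eye[0]), eye[1] + t * (point[1] - eye[1]))


def feet_wedge(eye: Vec3, feet: Vec2, back_left: Vec3, back_right: Vec3,
               plane_z: float) -> Tuple[float, float]:
    """Angles (radians) bounding the triangle's corner at the feet, seen from `eye`.

    Assumes both back corners project above the feet, so both angles lie in (0, pi).
    """
    bl = to_plane(eye, back_left, plane_z)
    br = to_plane(eye, back_right, plane_z)
    a_right = math.atan2(br[1] - feet[1], br[0] - feet[0])
    a_left = math.atan2(bl[1] - feet[1], bl[0] - feet[0])
    return a_right, a_left


def constrain_step(feet: Vec2, step: Vec2, eyes: Iterable[Vec3], back_left: Vec3,
                   back_right: Vec3, plane_z: float) -> Vec2:
    """Keep the depth part of a step (dy) and clamp its lateral part (dx) so that
    the motion direction lies inside every viewer's wedge (hypothetical rule)."""
    dx, dy = step
    if dy == 0.0:
        return step  # purely lateral step: no depth motion to check
    eyes = list(eyes)
    if not eyes:
        return step
    lo, hi = -math.inf, math.inf
    for eye in eyes:
        a_right, a_left = feet_wedge(eye, feet, back_left, back_right, plane_z)
        lo, hi = max(lo, a_right), min(hi, a_left)  # intersect the wedges
    if lo > hi:
        return (dx, 0.0)  # no shared direction: drop the depth component
    s = 1.0 if dy > 0 else -1.0  # approaching motion is checked via its reverse
    u = abs(dy)
    # For a direction with vertical part u > 0, angle in [lo, hi] <=> lateral part
    # in [u * cot(hi), u * cot(lo)], because cot is decreasing on (0, pi).
    x_min = u * math.cos(hi) / math.sin(hi)
    x_max = u * math.cos(lo) / math.sin(lo)
    return (s * min(max(s * dx, x_min), x_max), dy)


# Hypothetical scene: image plane z = 0, a 10 m x 10 m floor behind it, and
# three viewers 10 m in front of the plane at eye height 1.6 m.
eyes = [(-1.0, 1.6, -10.0), (0.0, 1.6, -10.0), (1.0, 1.6, -10.0)]
back_left, back_right = (-5.0, 0.0, 10.0), (5.0, 0.0, 10.0)
print(constrain_step((0.0, 0.0), (1.5, 0.5), eyes, back_left, back_right, 0.0))
```

In this scene, the leftmost viewer's wedge clamps the requested lateral shift of 1.5 to about 1.25, while the depth part of the step is kept. The test can run on the shared image plane because, for a fixed eye, central projection from that plane to the viewer's view maps triangles to triangles.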

5. Prototype of “Kirari! for Arena”

We built a prototype “Kirari! for Arena” system that enables viewing from all around. It combines four optical systems, each consisting of a 2D display and a half-mirror, that share a single floor surface. Images of the subjects captured from four directions are displayed on the respective virtual image planes (Fig. 4). The system takes two inputs: images of the subjects alone, extracted from their backgrounds, and subject distance information measured using LiDAR (light detection and ranging). It processes and displays them in real time using the method described above. Existing display systems require many projectors and special optical devices. By contrast, this system enables viewing from all around with a simple configuration of ordinary displays and half-mirrors. Because of this practicality, we hope to apply it to public viewing of sports and other events, where many people surround the venue while watching.
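The article does not describe how the four units share one set of LiDAR measurements. One plausible bookkeeping step, shown here only as a hypothetical sketch, is to express each measured floor position in every unit's own frame. The depth-assignment method of Section 4 could then run separately for each side. The side names, the axis conventions, and `to_view` are assumptions made for illustration.

```python
from typing import Dict, Tuple

Vec2 = Tuple[float, float]

# Hypothetical layout: a square shared floor centred at the origin, with one
# display/half-mirror unit on each side. The "south" viewer stands at negative y
# looking toward +y; each other side is that arrangement rotated by 90 degrees.
QUARTER_TURNS: Dict[str, int] = {"south": 0, "east": 1, "north": 2, "west": 3}


def to_view(pos: Vec2, quarter_turns: int) -> Vec2:
    """Express a floor position (x, y) as (lateral, depth) for one viewing side.

    Lateral grows to that viewer's right; depth grows away from that viewer.
    """
    x, y = pos
    for _ in range(quarter_turns % 4):
        x, y = y, -x  # rotate the coordinates by -90 degrees
    return (x, y)


subject = (2.0, 3.0)  # hypothetical LiDAR-measured floor position in metres
for side, k in QUARTER_TURNS.items():
    print(side, to_view(subject, k))
# south (2.0, 3.0), east (3.0, -2.0), north (-2.0, -3.0), west (-3.0, 2.0)
```

Because all four units share a single floor, a rotation per side is enough to give each unit the lateral offset and depth it needs.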

6. Future work

In this study, we focused on 2D floating images implemented with a simple configuration that combines a display and a half-mirror. We proposed a method that uses psychological depth-perception effects to represent depth to multiple viewers when the subjects move in the depth direction. We applied this method in prototyping “Kirari! for Arena,” which lets viewers watch content from all around, including the spatial positions of subjects. In the future, we plan to represent the positions of subjects more precisely and to expand the viewing area by adding shadows and other depth cues.

References
