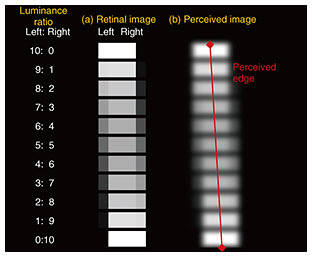

Feature Articles: Ultra-realistic Communication with Kirari! Technology
Vol. 16, No. 12, pp. 24–28, Dec. 2018. https://doi.org/10.53829/ntr201812fa4

360-degree Tabletop Glassless 3D Screen System

Abstract

NTT Service Evolution Laboratories has developed 360-degree tabletop glassless three-dimensional (3D) screen technology, which produces 3D images that appear on a table and provides experiences of viewing from the full perimeter of sports venues and other scenes. This article deals mainly with the basic visual mechanism of depth perception and the optical configuration for projector placement used for this technology.

Keywords: autostereoscopic 3D, linear blending, smooth motion parallax

1. Introduction

Expectations are rising for autostereoscopic three-dimensional (3D) screens that can achieve smooth motion parallax and natural 3D viewing without requiring 3D glasses or other such mechanisms as the ultimate display system of the future. In particular, tabletop 3D screen technologies, which can display items as though they were actually on the table, are anticipated for a wide range of applications such as viewing live sporting events with an overhead view of the entire field, or for modeling manufactured products. With this system, several viewers can stand around the table and watch the sports event from whatever direction they want. This could dramatically improve the communication environment among viewers. Autostereoscopic 3D screen technology is necessary to create this sort of experience, as it supports 360-degree motion parallax and does not require the use of 3D glasses or other special mechanisms that become an obstacle to eye contact or seeing the facial expressions of other viewers.

Autostereoscopic 3D screen technologies with 360-degree motion parallax have been proposed before, including a system that uses several hundred projectors placed at very small intervals that project images onto a special cone-shaped screen [1].
The use of multiple projectors to project viewpoint images in this way is advantageous in that multiple people can view the 3D content without glasses at the same time. However, to switch smoothly between video sources as the viewpoint moves, many projectors at very close intervals are needed, which increases equipment costs and the scale and complexity of the facility.

We previously proposed the basic technology for an autostereoscopic 3D screen system that supports horizontal motion parallax using a 50-inch diagonal screen and 13 projectors [2]. This system achieves smooth viewpoint motion with just one-fourth to one-tenth the number of projectors of earlier systems by using a perceptual mechanism of the visual system called linear blending, in which the luminance of the images from neighboring viewpoints is combined according to the viewing position, so that the intermediate viewpoint is perceived as visually interpolated. Linear blending is achieved optically using a spatially imaged iris plane optical screen.

We are continuing to study the implementation of a tabletop screen by applying this screen in the horizontal direction and have implemented a prototype that is effective in reducing the number of projectors using linear blending [3]. However, in our investigation so far, a screen and projection system developed for wall mounting were simply extended to a tabletop form, so optical design constraints limited the field of view to approximately ±65 degrees from center, which did not achieve 360-degree viewing. In this article, we describe a new optical configuration to expand the tabletop linear-blending autostereoscopic 3D screen system for 360-degree viewing, and a new tabletop prototype using 60 projectors.

2. Utilization of perception mechanisms of the visual system

When two images overlap with only a small offset to the left or right and the relative brightness of each image is varied, an image with double edges to the left and right is projected onto the human retina, as shown in Fig. 1(a). However, if the distance between these two edges is sufficiently small, the human visual system perceives one edge instead of two, and the position of that edge changes smoothly according to the relative brightness, as shown in Fig. 1(b) [3]. In this article, linear blending refers to the use of this edge perception mechanism to blend viewpoint images from adjacent projectors according to their relative luminance.
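The edge perception effect described above can be illustrated with a small numerical sketch. It assumes, as a simplification not stated in the article, that the perceived edge lies at the luminance-weighted average of the two physical edge positions; the function name `perceived_edge_position` and the sample values are hypothetical.

```python
def perceived_edge_position(x_a: float, x_b: float,
                            lum_a: float, lum_b: float) -> float:
    """Estimate where a single fused edge is perceived.

    Assumption (illustrative model only): the perceived position is the
    luminance-weighted mean of the two edge positions x_a and x_b.
    """
    total = lum_a + lum_b
    if total <= 0:
        raise ValueError("at least one image must have positive luminance")
    return (lum_a * x_a + lum_b * x_b) / total


if __name__ == "__main__":
    # Sweep the luminance share of image B from 0 to 1 while the
    # edges stay fixed at positions 0.0 and 1.0 (arbitrary units).
    for share_b in (0.0, 0.25, 0.5, 0.75, 1.0):
        x = perceived_edge_position(0.0, 1.0, 1.0 - share_b, share_b)
        print(f"share of image B = {share_b:.2f} -> perceived edge at {x:.2f}")
```

Under this model the perceived edge slides continuously from one image's edge to the other as the relative brightness changes, which is the behavior the text attributes to Fig. 1(b). The model says nothing about when fusion breaks down for larger edge separations.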

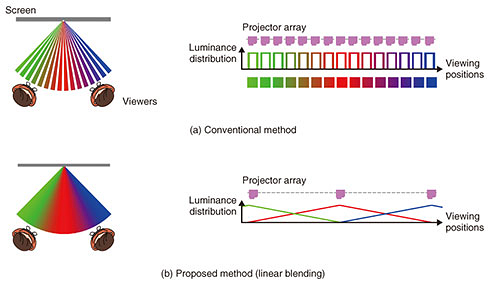

Earlier autostereoscopic 3D screens using multiple projectors had projectors positioned at intervals narrower than the distance between viewers’ eyes, so that binocular and motion parallax would be displayed smoothly. This required large numbers of projectors as the viewing range was increased, as shown in Fig. 2(a). In contrast, with linear blending, parallax is presented by blending images from two viewpoints that have a disparity less than the fusion limit angle, using relative luminance depending on the viewing position. Intermediate viewpoints are perceived as interpolated, so projectors are not needed for those viewpoints (Fig. 2(b)). This enables 3D images to be displayed with smooth binocular and motion parallax, even when using projector intervals that are wider than the spacing between viewers’ eyes.
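As a rough sketch of how the blend depends on viewing position, the following assumes viewpoints placed along a line at a fixed interval and weights that vary linearly between neighbors. The function name `blend_weights`, the coordinate convention, and the strictly linear weighting are illustrative assumptions; in the actual system the blend is produced optically by the screen, not computed.

```python
import math


def blend_weights(viewer_x: float, projector_interval: float) -> tuple:
    """Return (left_index, right_index, left_weight, right_weight).

    Viewpoints are assumed to sit at 0, interval, 2 * interval, ...
    The two nearest viewpoint images are mixed with weights that
    depend linearly on where the viewer stands between them.
    """
    if projector_interval <= 0:
        raise ValueError("projector_interval must be positive")
    scaled = viewer_x / projector_interval
    left = math.floor(scaled)
    t = scaled - left  # 0 at the left viewpoint, approaching 1 at the right
    return left, left + 1, 1.0 - t, t


if __name__ == "__main__":
    # A viewer a quarter of the way from viewpoint 0 to viewpoint 1.
    print(blend_weights(0.25, 1.0))
```

Because the two weights always sum to 1, overall brightness stays constant as the viewer moves, while the perceived viewpoint shifts between the two projected images.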

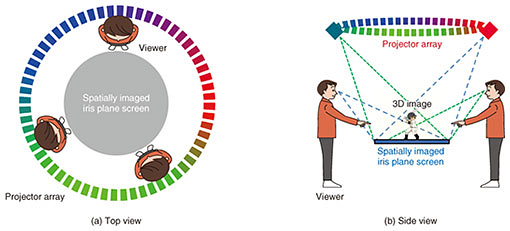

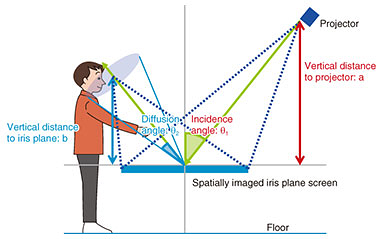

3. Optimization of the tabletop optical configuration

The spatially imaged iris plane screen [2] that uses linear blending is composed of a reflective layer, a Fresnel lens layer, and a diffusion layer. Light from the projectors is reflected by the screen and focuses in the space on the opposite side of the screen. In this way, the projector iris plane (the part equivalent to the iris in the projector optical system) is imaged in that space, and the viewer can perceive the projected image only within that range.

In tabletop configurations studied earlier [4], a straight-line projector array was used, with projectors in a straight horizontal line facing the spatially imaged iris plane. However, with this straight-line projector array, the farther the viewpoint was horizontally from the center of the array, the more the projected viewpoint images were distorted. Simply combining linear projector arrays in four directions to create a full-perimeter system would therefore not enable presentation of 3D images to all viewpoints.

To expand the viewing range to the entire perimeter, we designed an optical system with a circular projector array as shown in Fig. 3; a side view of the positional relationship between the viewer and the iris plane is shown in Fig. 4. The properties of the diffusion layer cause the luminance distribution of the iris plane to peak in the center and to attenuate with distance from the center. By optimizing the diffusion angle θ2 of the diffusion layer together with the horizontal interval of projector placement, adjacent viewpoint images are combined with luminance corresponding to the viewpoint position, so the intermediate viewpoint images are interpolated through the linear blending effect.
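The relationship between the iris plane luminance profile and the projector interval can be sketched numerically. This example assumes an idealized triangular luminance profile (peak at the center, falling linearly to zero), which is a simplification; the real profile depends on the diffusion layer. The names `iris_luminance`, `angular_offset`, and `viewpoint_contributions`, and the use of an angular half-width as a stand-in for the effect of the diffusion angle, are hypothetical.

```python
def iris_luminance(offset_deg: float, half_width_deg: float) -> float:
    """Idealized triangular profile: 1 at the center, 0 at half_width_deg."""
    return max(0.0, 1.0 - abs(offset_deg) / half_width_deg)


def angular_offset(a_deg: float, b_deg: float) -> float:
    """Signed smallest difference a - b in degrees, in [-180, 180)."""
    return (a_deg - b_deg + 180.0) % 360.0 - 180.0


def viewpoint_contributions(viewer_deg: float, interval_deg: float,
                            half_width_deg: float) -> dict:
    """Relative luminance of each projector's image at the viewer azimuth.

    Projectors are assumed to sit on a circle at 0, interval, 2 * interval, ...
    Only projectors with non-zero luminance are returned.
    """
    count = round(360.0 / interval_deg)
    result = {}
    for i in range(count):
        offset = angular_offset(viewer_deg, i * interval_deg)
        lum = iris_luminance(offset, half_width_deg)
        if lum > 0.0:
            result[i] = lum
    return result


if __name__ == "__main__":
    # Half-width equal to the interval: exactly two images, weights sum to 1.
    print(viewpoint_contributions(1.5, 6.0, 6.0))
    # Half-width too small: the viewer falls into a gap with no image.
    print(viewpoint_contributions(3.0, 6.0, 2.0))
    # Half-width too large: more than two images overlap.
    print(viewpoint_contributions(1.5, 6.0, 12.0))
```

In this idealization, a half-width equal to the projector interval reproduces linear blending between exactly two neighbors. A narrower profile leaves gaps between viewpoints, and a wider one lets additional projectors contribute, which is one way extra edges could arise.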

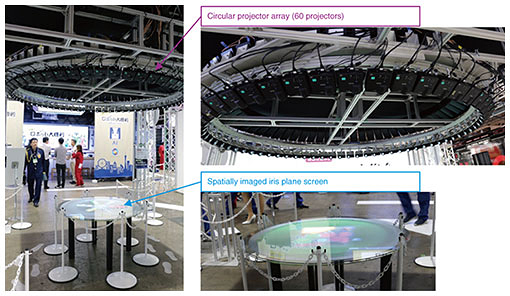

4. Full-range 360-degree tabletop autostereoscopic 3D screen system prototype

We implemented a prototype full 360-degree, autostereoscopic 3D screen using the circular projector array optical system described above (Fig. 5). The spatially imaged iris plane screen had a diameter of 110 cm and was placed 70 cm from the floor. Additionally, 60 projectors with a resolution of 1280 × 800 pixels and brightness of 600 lm were placed at 6-degree intervals. The viewpoint images projected from each projector were rendered with real-time synchronization on 60 client personal computers (PCs), one for each projector. Each client PC shared the same computer graphics space and rendered the image from a virtual camera in the position of the corresponding projector. Time synchronization of the viewpoint images was done by sending UDP (user datagram protocol) packets from a server PC with information for the 3D model position and the start of animation.
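The rendering setup can be sketched as follows: one virtual camera per projector on a circle at 6-degree intervals, plus a small UDP message carrying the model position and animation start time. Only the projector count, the interval, and the use of UDP come from the article. The camera radius and height, the JSON message layout, and the names `camera_position`, `encode_sync`, `decode_sync`, and `send_sync` are hypothetical choices for illustration.

```python
import json
import math
import socket

PROJECTOR_COUNT = 60   # from the prototype description
INTERVAL_DEG = 6.0     # from the prototype description


def projector_azimuths(count: int = PROJECTOR_COUNT,
                       interval_deg: float = INTERVAL_DEG) -> list:
    """Azimuth of each projector (and its virtual camera) in degrees."""
    return [i * interval_deg for i in range(count)]


def camera_position(azimuth_deg: float, radius: float, height: float) -> tuple:
    """Virtual camera position on a circle; radius and height are assumed values."""
    a = math.radians(azimuth_deg)
    return (radius * math.cos(a), radius * math.sin(a), height)


def encode_sync(model_position, start_time: float) -> bytes:
    """Pack the 3D model position and animation start time (assumed JSON layout)."""
    message = {"model_position": list(model_position), "start_time": start_time}
    return json.dumps(message).encode("utf-8")


def decode_sync(payload: bytes) -> tuple:
    """Inverse of encode_sync, as a client PC might apply it."""
    message = json.loads(payload.decode("utf-8"))
    return tuple(message["model_position"]), message["start_time"]


def send_sync(payload: bytes, clients) -> None:
    """Send the same UDP datagram to every (host, port) client address."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for host, port in clients:
            sock.sendto(payload, (host, port))


if __name__ == "__main__":
    azimuths = projector_azimuths()
    print(len(azimuths), azimuths[:3], azimuths[-1])
    print(camera_position(azimuths[15], radius=1.0, height=0.5))
    packet = encode_sync((0.0, 0.0, 0.1), start_time=12.5)
    print(decode_sync(packet))
```

UDP gives no delivery guarantee, so a practical system would likely resend or sequence such messages; the article does not describe how the prototype handles that.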

Photographs from five viewpoints around the prototype are shown in Fig. 6. The photographs show how changes in motion parallax with changes in viewpoint are reproduced.

5. Future prospects

We have demonstrated the feasibility of a natural autostereoscopic 3D screen technology with smooth, full-perimeter-viewpoint motion parallax using a prototype system. However, with the current prototype, there is some degradation in image quality caused by multi-edges from neighboring projectors and from some other projectors. We are studying how to reduce the adverse effect of multi-edges using visual effects [5], and we plan to improve image quality in the future by optimizing the optical characteristics of the screen. We will also work toward future implementation of highly realistic viewing of sports competitions and other events around a table for venues such as sports bars.
