Information
Vol. 18, No. 11, pp. 59–64, Nov. 2020. https://doi.org/10.53829/ntr202011in1

Event Report: NTT Communication Science Laboratories Open House 2020

Abstract

NTT Communication Science Laboratories Open House 2020 was held online, with its content published on the Open House 2020 web page at noon on June 4th, 2020. Within the first two days, around 2000 visitors enjoyed 5 talks and 31 exhibits, which presented our latest research efforts in information and human sciences.

Keywords: information science, human science, artificial intelligence

1. Overview

NTT Communication Science Laboratories (CS Labs) aims to establish cutting-edge technologies that enable heartfelt communication between people and between people and computers. We are thus working on fundamental theory that approaches the essence of being human and of information science, as well as on innovative technologies that will transform society.

NTT CS Labs’ Open House is held annually to introduce the results of our basic research and innovative leading-edge research, through many intuitive hands-on exhibits, to those engaged in research, development, business, and education. Open House 2020 was, however, held on our website in light of the measures against the spread of the novel coronavirus. The latest research results were published with recorded lecture videos on the Open House 2020 web page at noon on June 4th, when the event was originally planned to start [1]. Within a month, the content attracted many views, not only from NTT Group employees but also from businesses, universities, and research institutions. The event content is still available.

This article summarizes the event’s research talks and exhibits.

2. Keynote speech

Dr. Takeshi Yamada, vice president and head of NTT CS Labs, presented a speech entitled “I want to know more about you – Getting closer to humans with AI and brain science –,” in which he looked back on the history of NTT and the establishment of NTT CS Labs, then introduced present and future cutting-edge basic research and technologies (Photo 1).

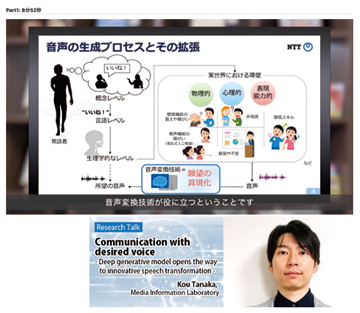

Dr. Yamada described the mission of NTT CS Labs as promoting heartfelt communication between people and between people and computers, with particular importance placed on pursuing artificial intelligence (AI) technologies that “approach and exceed human abilities” and scientific research to “obtain a deep understanding of people” and “make heartfelt contact.” Given today’s situation, in which physical contact and face-to-face communication are significantly restricted, the talk pointed out that it has become even more important to identify the essence of emotional contact in heartfelt communication. The talk introduced NTT CS Labs’ recent AI technologies from three perspectives: getting closer to humans with voice and acoustic processing, understanding humans with language, and heartfelt communication with empathy and happiness (shi-awase). It closed with a commitment to continue pursuing the essence of communication and to tackle new challenges boldly and persistently.

3. Research talks

The following three research talks highlighted recent significant research results and high-profile research themes. Each talk introduced some of the latest research results and provided background and an overview of the research.

(1) “Communication with desired voice – Deep generative model opens the way to innovative speech transformation –,” by Dr. Kou Tanaka, Media Information Laboratory

Dr. Tanaka introduced a range of technologies that apply deep learning, a widely used approach in AI, to speech information processing and to various high-quality speech-to-speech conversions. NTT CS Labs regards speech information as an important tool for communication and considers the following four points to be particularly important requirements for speech conversion technology: 1) high quality, 2) learning from a small amount of data, 3) real-time conversion, and 4) coverage of various feature conversions such as voice characteristics, prosody, and accent. The research and development of such speech-to-speech conversion technology targets real-world applications such as assistance for those with vocal disabilities, conversion of speaking style including emotion, and conversion of pronunciation and accent in language learning (Photo 2).

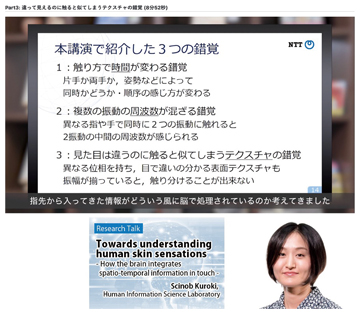

(2) “Towards understanding human skin sensations – How the brain integrates spatio-temporal information in touch –,” by Dr. Scinob Kuroki, Human Information Science Laboratory

Dr. Kuroki explained how human skin sensations are processed. She introduced a psychophysical experiment in which a participant is presented with a tactile stimulus and asked to report its timing and position. A mysterious phenomenon occurred in which the answers differed from the stimulus actually given. The brain produces such illusions when it interprets physical input stimuli, and by carefully unraveling these phenomena and illusions, she explored the mechanism by which the brain processes tactile information. She also introduced advanced approaches, such as designing and generating tactile stimuli with a three-dimensional (3D) printer based on a method inspired by human visual information processing systems, and a new direction for haptic science (Photo 3).

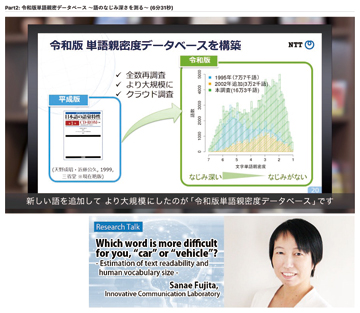

(3) “Which word is more difficult for you, ‘car’ or ‘vehicle’? – Estimation of text readability and human vocabulary size –,” by Dr. Sanae Fujita, Innovative Communication Laboratory

Dr. Fujita introduced studies of two estimation methods: text-readability estimation and human vocabulary-size estimation. The estimation of human vocabulary size is based on the word familiarity database that NTT CS Labs has been creating for over 20 years. The database has recently been updated with more than 160,000 words, and the Reiwa version of the vocabulary-size estimation test was published and made available as a demonstration [2]. She also introduced efforts aimed at tailor-made education support, such as recommending books suitable for individual learners by combining vocabulary-size estimation and text-difficulty estimation (Photo 4).

4. Research exhibition

The Open House featured 31 exhibits displaying NTT CS Labs’ latest research results. We categorized them into four areas: Science of Machine Learning, Science of Communication and Computation, Science of Media Information, and Science of Humans. For each exhibit, presentation slides with a recorded explanation were prepared and published on the event web page (Photo 5). Several exhibits also provided online demonstrations or demo videos to bring them closer to in-person demonstrations. The following list, taken from the Open House website, summarizes the research exhibits in each category.

4.1 Science of Machine Learning

4.2 Science of Communication and Computation

4.3 Science of Media Information

4.4 Science of Humans

5. Special lecture

We asked Professor Noriko Osumi, vice president of Tohoku University, to give a special lecture entitled “A challenge to scientifically understand ‘individuality.’” It is well known that people with autism spectrum disorder sometimes make considerable achievements in fields such as art and scientific research. Her research group regards atypical development as individuality and has investigated the genetic and non-genetic mechanisms underlying such atypicality using mice as model animals. In particular, she found that paternal aging affects the early development of vocal communication in pups, and she introduced cutting-edge research on the molecular mechanisms involved, including changes in epigenome information. A “science of individuality” is needed in a diverse society. The lecture concluded with a grand perspective ranging from the “ideal form of society” to “human evolution.”

6. Concluding remarks

Unlike last year, Open House 2020 was not held at a physical venue; instead, we presented our latest results on a website. The lecture videos were viewed more than 10,000 times in June by a wide range of users. Though it was difficult to have lively discussions, the long-term growth in the number of visitors encourages us in our further research activities. In closing, we would like to offer our sincere thanks to all the participants of this online event.

References
