Feature Articles: Olympic and Paralympic Games Tokyo 2020 and NTT R&D—Technologies that Colored Tokyo 2020 Games

Vol. 20, No. 1, pp. 74–79, Jan. 2022. https://doi.org/10.53829/ntr202201fa12

Stage Production for Celebration of Torch Relay × Ultra-realistic Communication Technology Kirari!

Keywords: ultra-realistic communication technology Kirari!, Torch Relay, live performance

1. Overview

The Tokyo 2020 Olympic Torch Relay, which started in Fukushima Prefecture on March 25, 2021, was carried by 10,515 runners over 121 days through all 47 prefectures in Japan. The Torch Relay usually involves torchbearers running on public roads, followed by a celebration event at the final point of each day's relay. At the celebration event, the final torchbearer of the day lights the Olympic flame in the cauldron, local residents and sponsors perform songs and dances on stage, and people can have their photos taken with an Olympic torch at the Olympic Torch Relay Commemorative Photo Corner and enjoy other exhibits that add to the excitement of the Torch Relay.

As an extended version of the celebration event, NTT staged “NTT Presents Tokyo 2020 Olympic Torch Relay Celebration - CONNECTING WITH HOPE” in Osaka on April 13 and in Yokohama on June 30. In addition to the arrival of the torch carried by the final torchbearer of the day, GENERATIONS from EXILE TRIBE performed live; junior-high-school students, EXILE ÜSA, and EXILE TETSUYA performed “Rising Sun -2020-”; and calligraphy artist Soun Takeda gave a live calligraphy performance. We initially planned to invite a general audience of about 5000 people; however, due to the spread of the novel coronavirus (COVID-19), general-audience viewing at both venues was canceled and replaced with online streaming.
NTT research and development (R&D) laboratories have been conducting demonstration experiments of ultra-realistic live broadcasts and stage productions for kabuki, live music, fashion shows, sports, and other events using our ultra-realistic communication technology Kirari! (Fig. 1).

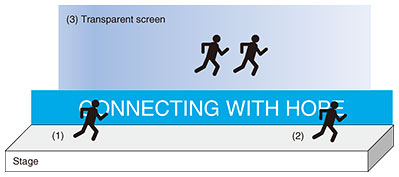

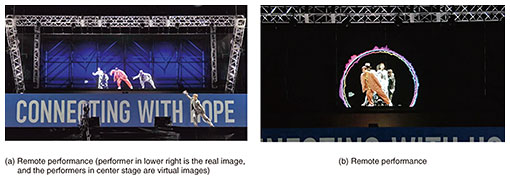

On the basis of the results of those demonstration experiments, we used Kirari! to create three new stage productions for the live performance by GENERATIONS from EXILE TRIBE at the extended celebration event: “remote performance,” “remote viewing,” and “remote fan collaboration.”

2. Details of stage productions

2.1 Remote performance

During the COVID-19 pandemic, it has become increasingly difficult for artists to get together, especially when they are far apart. The purpose of the remote performance was to harness the power of communication to create an experience in which artists in remote locations feel as if they are performing in the same place. For this production, artists in remote locations performed a group dance to the song “Choo Choo TRAIN.” In actual use, the artists would be at distant venues; to simulate this experience, we used two locations on the stage as the remote locations and a transparent screen above the stage as a virtual shared space. As shown in Fig. 2, points (1) and (2) on the stage are the remote locations, and the transparent screen (3) is the shared space above them. The images of the artists dancing at point (1) or (2) were transferred to the transparent screen. In the process, Kirari! cut out the background so that only each artist's image was transmitted. The images projected on the transparent screen appeared three-dimensional, creating an experience in which viewers felt that the artists were actually dancing there. The five artists danced in turn, so the number of artists on the transparent screen increased each time (Fig. 3(a)); when all the artists had finished dancing, the images of the five artists merged into one, as if they were dancing in circular motions at the same location (Fig. 3(b)).
Kirari! can also extract and transmit, in real time, the images of all the artists while they dance simultaneously.
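The cut-out-and-merge step can be illustrated with a minimal compositing sketch. It assumes that extraction delivers, for each artist, an RGB frame plus a binary mask (1 for artist pixels, 0 for cut-out background), that all layers are already aligned to the same screen coordinates, and that black is shown as see-through (no light) on the transparent screen. The helper names `blank_canvas` and `composite` are hypothetical and are not part of Kirari!.

```python
# Hypothetical sketch: merge extracted artist layers onto one shared canvas.
# Assumes pre-aligned layers and binary masks; not Kirari!'s actual pipeline.

Pixel = tuple[int, int, int]
Frame = list[list[Pixel]]
Mask = list[list[int]]  # 1 = artist pixel, 0 = cut-out background

BLACK: Pixel = (0, 0, 0)  # assumed to appear see-through on the transparent screen


def blank_canvas(width: int, height: int) -> Frame:
    """An empty shared space: every pixel black."""
    return [[BLACK] * width for _ in range(height)]


def composite(canvas: Frame, layers: list[tuple[Frame, Mask]]) -> Frame:
    """Paint each artist layer over a copy of the canvas in list order.

    Only masked (artist) pixels are copied, so the background never appears;
    where masks overlap, the later layer wins.
    """
    out = [row[:] for row in canvas]  # leave the input canvas untouched
    for frame, mask in layers:
        for y, mask_row in enumerate(mask):
            for x, m in enumerate(mask_row):
                if m:
                    out[y][x] = frame[y][x]
    return out


if __name__ == "__main__":
    red = [[(255, 0, 0)] * 3]   # artist A's frame (1 row x 3 pixels)
    blue = [[(0, 0, 255)] * 3]  # artist B's frame
    merged = composite(blank_canvas(3, 1), [(red, [[1, 1, 0]]), (blue, [[0, 1, 1]])])
    print(merged)  # [[(255, 0, 0), (0, 0, 255), (0, 0, 255)]]
```

Adding one more `(frame, mask)` pair to the list as each artist joins reproduces the growing group on the screen; a real system would also handle alignment, scaling, and soft (non-binary) mask edges.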

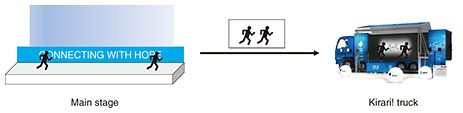

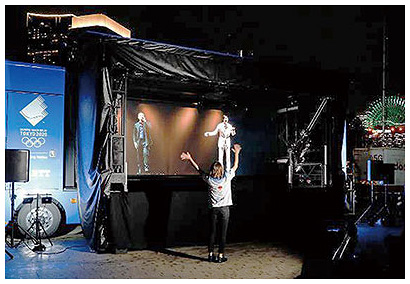

2.2 Remote viewing

During the pandemic, it has also become difficult for fans to attend live-music concerts, especially fans in provincial areas who want to go to concerts in big cities. The purpose of remote viewing was to harness the power of communication to create an experience in which the artist appears to be performing right in front of you. Our challenge was to transmit video of the artists on the main stage to another stage at a remote location where fans were present. In actual use, we would stream the images to a distant site; to simulate this experience, we transmitted the data to a Kirari! truck set up at a remote location in Yokohama, as shown in Fig. 4. The Kirari! truck housed a transparent screen similar to the one on the main stage, and, as shown in Fig. 5, the images of the artists transferred by Kirari! were displayed on the screen in a holographic manner, creating an experience in which the artists appeared to have come to perform right in front of the fans.

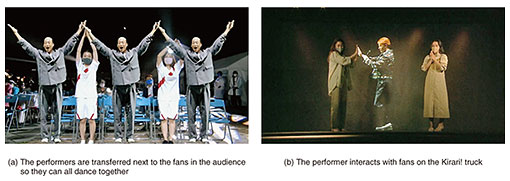

2.3 Remote fan collaboration

It has been difficult for fans and artists to interact with each other during the pandemic. Even when fans can go to a venue, shaking hands with a celebrity as they once could has become impossible. The purpose of remote fan collaboration was to harness the power of communication to create an experience in which the artist appears to come and perform with the fans. We faced two challenges in this production: placing the fans at the venue next to the artist on the stage, and transferring the artist on the main stage to fans at a remote location. For the first challenge, the images of the artist on the stage were extracted using Kirari! and superimposed next to the images of the fans displayed on a monitor on the stage. As shown in Fig. 6, this gave the fans in the audience the virtual feeling of being next to the artist. For the second challenge, the images of the artist extracted using Kirari! were transferred to the remotely located Kirari! truck so that the artist appeared to have been transported next to the fans in the truck.

3. Technology

We now explain one of the technologies that make up Kirari!, “real-time extraction of objects with arbitrary background” technology [1]. This technology can extract a specific object from video without the need for a green screen. In 2018, NTT developed “KIRIE” [2], a system that implements object extraction using this technology, and we used it for the stage productions described above. KIRIE extracts the object by switching between two methods: one using only background subtraction and the other combining background subtraction and machine learning. In background subtraction, an image without the object is captured in advance as the background, and the area in which the object appears is identified by taking the difference between the background and the image being captured. For machine learning, in addition to this background image, multiple images showing both the object and the background are captured. By learning the combinations of background color and object color as training data, the system can determine which areas correspond to the object.

The method that uses only background subtraction has the advantage of requiring no prior training; however, it has difficulty extracting objects whose colors are close to the background color, and its extraction accuracy decreases if the background changes even slightly. In contrast, the method combining background subtraction and machine learning can extract objects even when the colors of the background and object are similar and can handle changes in the background, but it requires prior training.
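The two extraction modes can be sketched as follows. This is written from the description above, not from KIRIE's code, and every parameter is an assumption: pixels are RGB tuples, background subtraction uses a fixed Euclidean color-distance threshold, and the machine-learning model is replaced by a simple vote table keyed on quantized (background color, observed color) pairs. Pairs never seen in training fall back to the background-subtraction decision, which is one plausible way to combine the two. The names `subtract_background`, `ColorPairClassifier`, and `extract` are hypothetical.

```python
# Hypothetical sketch of the two modes; thresholds, bin size, and the vote table
# are illustrative stand-ins, not KIRIE's actual model or parameters.
from collections import defaultdict

Pixel = tuple[int, int, int]
Image = list[list[Pixel]]
Mask = list[list[int]]  # 1 = object pixel, 0 = background pixel


def subtract_background(frame: Image, background: Image, threshold: int = 40) -> Mask:
    """Mark a pixel as object if its color differs from the pre-captured
    background pixel by more than `threshold` (Euclidean RGB distance)."""
    t2 = threshold * threshold
    return [
        [1 if sum((p - q) ** 2 for p, q in zip(f, b)) > t2 else 0 for f, b in zip(frow, brow)]
        for frow, brow in zip(frame, background)
    ]


class ColorPairClassifier:
    """Stand-in for the learned model: counts how often each quantized
    (background color, observed color) pair was labeled background or object."""

    def __init__(self, bin_size: int = 32) -> None:
        self.bin_size = bin_size
        self.votes: dict = defaultdict(lambda: [0, 0])  # key -> [background votes, object votes]

    def _key(self, bg: Pixel, px: Pixel) -> tuple:
        q = self.bin_size
        return (tuple(v // q for v in bg), tuple(v // q for v in px))

    def train(self, frame: Image, background: Image, labels: Mask) -> None:
        """Accumulate votes from one labeled rehearsal image."""
        for frow, brow, lrow in zip(frame, background, labels):
            for px, bg, label in zip(frow, brow, lrow):
                self.votes[self._key(bg, px)][label] += 1

    def predict(self, frame: Image, background: Image, fallback: Mask) -> Mask:
        out = []
        for frow, brow, fbrow in zip(frame, background, fallback):
            row = []
            for px, bg, fb in zip(frow, brow, fbrow):
                counts = self.votes.get(self._key(bg, px))  # .get does not create entries
                # Unseen color pair: keep the background-subtraction decision.
                row.append(fb if counts is None else int(counts[1] > counts[0]))
            out.append(row)
        return out


def extract(frame: Image, background: Image, classifier=None, threshold: int = 40) -> Mask:
    """Use background subtraction only when no trained classifier is available;
    otherwise refine the subtraction result with the learned color pairs."""
    base = subtract_background(frame, background, threshold)
    if classifier is None:
        return base
    return classifier.predict(frame, background, fallback=base)


if __name__ == "__main__":
    G = (128, 128, 128)  # gray background
    bg = [[G, G, G]]
    clf = ColorPairClassifier()
    # Labeled frame: a costume color close to the background, plus a red one.
    clf.train([[G, (160, 128, 128), (250, 20, 20)]], bg, labels=[[0, 1, 1]])
    live = [[G, (160, 128, 128), (0, 0, 255)]]
    print(extract(live, bg))       # [[0, 0, 1]]: subtraction misses the near-gray costume
    print(extract(live, bg, clf))  # [[0, 1, 1]]
```

The usage lines mirror the trade-off in the text: the near-gray costume pixel is too close to the background for subtraction alone, while the learned pair recovers it, at the cost of needing labeled images in advance.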
For the above-mentioned stage productions, when the performers could participate in the rehearsal the day before and the colors of the costumes they would wear on the day had been decided, we aimed for more accurate object extraction by using the method combining background subtraction and machine learning. When the performers could not attend the previous day's rehearsal, we used only background subtraction to extract them.

4. Results

The extended Torch Relay celebrations, which were streamed online, were widely viewed, receiving 100,000 views on YouTube Live with a maximum of 12,000 simultaneous connections. We also received a large amount of feedback on social networking sites, over 90% of which was positive. In the remote-performance, remote-viewing, and remote-fan-collaboration productions, the above-mentioned technologies extracted objects with high accuracy. Even when the background colors tended to change, for example, when it was raining during the previous day's rehearsal but sunny during the next day's rehearsal, the method combining background subtraction and machine learning extracted the object with accuracy acceptable for viewing. In some cases, the production arrangement changed suddenly on the day, and performers other than those targeted in the previous day's rehearsal became the extraction targets; nevertheless, the method using only background subtraction still produced generally acceptable extraction results. The average processing delay of KIRIE was 166 ms; together with the extraction results above, this shows that we achieved real-time object extraction with low latency and high accuracy.

5. Concluding remarks

For the extended celebration events of the Tokyo 2020 Olympic Torch Relay, we conducted three stage productions using Kirari!: remote performance, remote viewing, and remote fan collaboration.
Although in-person viewing by the general public was canceled due to the spread of COVID-19, through online streaming we could still demonstrate the possibilities of new remote entertainment during the pandemic to tens of thousands of viewers, and we received positive feedback from the majority of them. With these productions as a first step, NTT R&D will continue to research and develop technologies toward a new form of live entertainment suited to the “new normal” era.

Acknowledgments

We thank the Tokyo Organising Committee of the Olympic and Paralympic Games, Osaka Prefecture, Suita City, Kanagawa Prefecture, Yokohama City, and our partner companies for their cooperation in promoting this project. We also thank GENERATIONS from EXILE TRIBE and all the performers who graced the stage of the extended celebration.

NTT is an Olympic and Paralympic Games Tokyo 2020 Gold Partner (Telecommunication Services).

References
