Feature Articles: 2021 International Sporting Event and NTT R&D—Technologies for Making the Event Inclusive

Vol. 20, No. 2, pp. 68–72, Feb. 2022. https://doi.org/10.53829/ntr202202fa9

Goalball × Ultra-realistic Communication Technology Kirari!

Abstract

NTT is promoting research and development to enable people with various physical conditions, including disabilities, to enjoy watching sports. Focusing on goalball, a parasport, this article introduces a new sports-watching experience that gives spectators a sense of realism, as if they were watching the game at the competition venue. This experience is made possible by the ultra-realistic communication technology called Kirari! (in particular, its highly realistic sound-image localization technology), which produces stereophonic sound.

Keywords: sports viewing, ultra-realistic communication, sound-image localization

1. Overview

To create a symbiotic society through sports, we at NTT laboratories have been researching viewing methods that allow visually impaired people to enjoy sports events. The main way for visually impaired people to follow sports has been through live radio and television broadcasts. However, verbal explanations cannot effectively convey the details of an action (for example, the rhythm of a rally or the impact of hitting the ball) or the intensity of play [1]. Visually impaired people use sensory information other than sight to watch sports, and sound is one such source. In the system described in this article (called “goalball experienced through sound”), we reproduced the acoustic space of a goalball game, in which sound plays a leading role, through 100 speakers (Fig. 1) by using our ultra-realistic communication technology Kirari!. This system enables visually impaired people to enjoy watching goalball by following the movement of the ball through sound alone, giving them a realistic experience as if they were on the playing court.

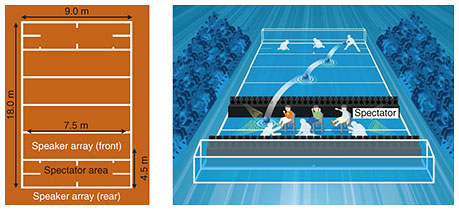

2. Spectating experience

With “goalball experienced through sound,” we created a spectator area that simulates a full-scale tournament court and lets visually impaired spectators experience the acoustics heard where the players stand in front of the goal (team area) (Fig. 2). Two rows of 50 speakers (speaker arrays), one placed in front of and one behind the spectator seats, sequentially synthesize the sound of the ball bouncing and the sound of the players’ movements so that the sound reflects the position of the ball on the playing court. This setup allows spectators to follow the movements of the players and ball by sound alone and to experience the powerful sound of the ball as if it were flying toward them.

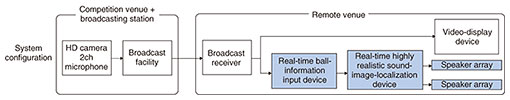

3. Technology

3.1 Highly realistic sound-image localization technology: Kirari!

Kirari! is a communication technology that enables spectators to experience a game as if they were at the competition venue, even when they are far from it [2]. For “goalball experienced through sound,” we created the acoustic experience with highly realistic sound-image localization technology, one of the technical elements of Kirari!. This technology reproduces the spatial and physical wavefronts of sound on the basis of a physical model [3]. It separates the sound collected with a single microphone at the tournament venue and reproduces it as if a specific sound were generated at an arbitrary position. A key element of this technology is a group of speakers (speaker array) closely arranged in a straight line. By adjusting the playback timing and power of the sound radiated from each speaker so that the sound is focused at an arbitrary position, the system reproduces a sound field as if a sound source existed there.

The configuration of “goalball experienced through sound” is shown in Fig. 3. Because of operational constraints, we could not set up our own cameras to transmit the event, so we used broadcast video instead. Video of the game shot at the goalball tournament venue is transmitted to a remote site as broadcast waves via the broadcasting station’s equipment. At the remote site, the positions of the ball and players and the type of ball thrown (acquired from the received broadcast images) are input in real time, and an acoustic space is created on the basis of this spatio-temporal information (Fig. 4).
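
The focusing principle above (adjusting each speaker’s playback timing and power so that the sound converges at a chosen point) can be illustrated with a basic delay-and-sum calculation. The Python sketch below is not Kirari!’s implementation. Only the count of 50 speakers per array comes from this article; the 0.18-m speaker spacing, the 343 m/s speed of sound, the distance-proportional gain rule, and all function names are assumptions made for illustration.

```python
import math
from dataclasses import dataclass

SPEED_OF_SOUND = 343.0  # m/s, approximate value for air at room temperature


@dataclass
class SpeakerDrive:
    index: int
    delay_s: float  # time this speaker waits before playing the source signal
    gain: float     # linear amplitude weight; the largest weight is 1.0


def speaker_positions(n: int = 50, spacing: float = 0.18) -> list[float]:
    """x-coordinates (m) of n evenly spaced speakers on the line y = 0,
    centered on x = 0. The 0.18 m spacing is a hypothetical value."""
    offset = (n - 1) * spacing / 2
    return [i * spacing - offset for i in range(n)]


def focus_drive(focus_x: float, focus_y: float, positions: list[float],
                c: float = SPEED_OF_SOUND) -> list[SpeakerDrive]:
    """Delay-and-sum focusing toward the point (focus_x, focus_y), focus_y > 0.

    Each speaker is delayed so that every wavefront reaches the focal point at
    the same instant: speakers farther from the focus start earlier. Gains are
    proportional to distance so that, under free-field 1/r spreading, every
    contribution arrives at the focus with equal amplitude (a simplifying
    assumption, not a production driving function).
    """
    if focus_y <= 0:
        raise ValueError("this sketch only handles focal points in front of the array")
    dists = [math.hypot(focus_x - x, focus_y) for x in positions]
    d_max = max(dists)
    return [
        SpeakerDrive(index=i, delay_s=(d_max - d) / c, gain=d / d_max)
        for i, d in enumerate(dists)
    ]


if __name__ == "__main__":
    array = speaker_positions()
    drives = focus_drive(focus_x=1.0, focus_y=1.5, positions=array)
    for drv in drives[::10]:
        print(f"speaker {drv.index:2d}: delay {drv.delay_s * 1000:6.2f} ms, gain {drv.gain:.2f}")
```

To follow a moving ball, a calculation like this would be repeated for each new focal point, for example whenever the input ball position changes. A real focused-source renderer would also need a proper driving function, spatial windowing, and handling of virtual sources behind the array, all of which this sketch omits.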

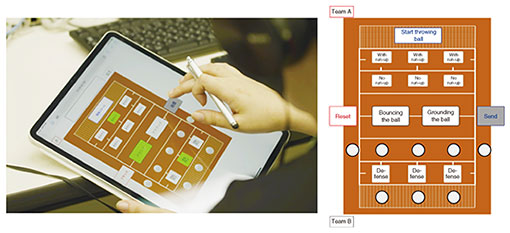

We aimed to extract the locations of the ball and players, as well as the type of ball thrown, from the broadcast video. However, because the camera and angle of view change frequently in broadcast video, we adopted a user interface (UI) through which the ball position is input manually (Fig. 5). Two operators, one in charge of team A and one in charge of team B, each used a tablet running the UI to watch the broadcast video and, with the push of a button, enter the starting position and time of each of their team’s attacks, the type of throw (bouncing ball or ball along the ground), and the result of the throw (blocked, goal, or out of bounds). From these inputs, sound information is generated from sound-source files of the throwing sounds prepared in advance. That information is then processed with Kirari! over the network to reproduce the ball-throwing situation in real time.
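
The manual input flow above can be sketched as a small data pipeline: an operator’s button presses produce a throw record, which is turned into timed cues that point to pre-recorded sound files. Everything concrete in this sketch is hypothetical: the field names, file names, run-up duration, and constant ball speed are placeholders rather than values from the article. The `to_lane` helper applies the nine-to-three (left, center, right) simplification described in Section 3.2, and the 18-m figure is the standard goalball court length.

```python
from dataclasses import dataclass
from enum import Enum


class ThrowType(Enum):
    BOUNCING = "bouncing"
    GROUND = "ground"  # ball thrown along the ground


class Result(Enum):
    BLOCKED = "blocked"
    GOAL = "goal"
    OUT = "out"  # out of bounds


@dataclass
class ThrowInput:
    """One attack as entered on an operator's tablet (hypothetical fields)."""
    team: str           # "A" or "B"
    start_time_s: float
    start_segment: int  # 1..9, the players' 1 m segments across the 9 m goal
    throw_type: ThrowType
    result: Result


@dataclass
class Cue:
    time_s: float
    sound_file: str  # hypothetical name of a pre-recorded sound-source file
    lane: str        # "left", "center", or "right"


COURT_LENGTH_M = 18.0       # standard goalball court length
ASSUMED_BALL_SPEED = 12.0   # m/s, placeholder value
ASSUMED_RUN_UP_S = 0.8      # s, placeholder duration of the run-up sound


def to_lane(segment: int) -> str:
    """Collapse nine 1 m segments into three coarse lanes for non-players."""
    if not 1 <= segment <= 9:
        raise ValueError("segment must be between 1 and 9")
    return ("left", "center", "right")[(segment - 1) // 3]


def schedule_cues(throw: ThrowInput) -> list[Cue]:
    """Turn one throw record into run-up, ball, and result cues.

    Simplification: the ball is assumed to cover the full court length at a
    constant speed and to stay in its starting lane.
    """
    lane = to_lane(throw.start_segment)
    release = throw.start_time_s + ASSUMED_RUN_UP_S
    arrival = release + COURT_LENGTH_M / ASSUMED_BALL_SPEED
    return [
        Cue(throw.start_time_s, "run_up.wav", lane),
        Cue(release, f"ball_{throw.throw_type.value}.wav", lane),
        Cue(arrival, f"result_{throw.result.value}.wav", lane),
    ]
```

For example, a bouncing throw from segment 5 entered at 10.0 s would yield a run-up cue at 10.0 s, a ball cue at 10.8 s, and a result cue at about 12.3 s, all in the center lane. Each cue would then be handed to the sound-image localization stage; that interface is not shown because the article does not describe it.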

3.2 Acoustic production with inclusive design

(1) Selecting sounds to localize as sound images

To reproduce the acoustic space that players hear on the court and let non-players fully experience the players’ excellent sense of hearing, we used an inclusive design method that involved goalball players and visually impaired people in the upstream design process. Specifically, we defined the requirements for the sound through interviews, observation of ball-throwing situations, and acoustic evaluation (Fig. 6). This process showed that, although many sounds are heard on the goalball court, including distracting sounds made by the opposing team (such as hitting the floor to obscure the throwing position), the players ignore these distracting sounds. Instead, they always search for the ball by following the series of throwing sounds, such as the run-up of the player holding the ball and the ball hitting the floor, as one continuous sequence. Accordingly, we decided to focus on reproducing the throwing sounds (the run-up sound, the ball sound, and the result sound, such as a blocked shot or a goal). In other words, instead of trying to reproduce the acoustic space of the physical tournament venue as is, we attempted to reproduce the sounds that players selectively perceive from everything they hear. In doing so, we aimed to let non-players experience a simulation of the players’ exceptional auditory sense.

(2) Simplification of sound-image localization

For the path of the thrown ball, players distinguish the 9-m distance from one end of the goal to the other in nine segments, each 1 m wide. However, non-players’ auditory spatial resolution is not as fine as that of players, so it is difficult for non-players to follow the route of the thrown ball when the sound image is localized at that resolution. By reducing the spatial resolution across the goal from nine segments to three (left, center, and right), we improved non-players’ recognition rate of the ball position by sound. We also created introductory content that explains sound localization to non-playing spectators so they could get used to it before experiencing a game. In this content, the sound of the ball being thrown along various paths was localized. Non-players commented that they gradually became able to determine the path of the thrown ball just by listening, which suggests that listening to the introductory content before the game helped them understand it. Thus, by simplifying the path of the thrown ball and creating the introductory content, we were able to create a system that allows non-players to experience the sounds that players hear during a game.

4. Results

Initially, “goalball experienced through sound” was to be offered as a spectator event at the 2021 international sporting event for people with disabilities, but that spectator event was cancelled to prevent the spread of COVID-19. However, during a hands-on evaluation conducted in preparation for the event, we received highly positive evaluations from non-players with visual impairments. The evaluation was based on three questions: “Can you understand the state of play?”, “Can you feel the texture of the goalball-like sound?”, and “Can you feel the power of the ball and the realism?” In response to these questions, the evaluators commented, “The sound texture is realistic. Reproduction of the sound of the ball going back and forth is excellent. It’s like a real game!”, “Unlike listening to television, I could see where the ball was coming from and going to,” and “In a stadium, you hear the sound from outside the court, but this system gave me a more realistic feeling, as if I was inside the court.” We also received comments such as “It would be easier to follow the path of the ball if we could also hear the sound of the players moving with the ball” and “It would be better if we could hear the players talking so we could understand their strategies.” Overall, by focusing on the goalball-like sound that players hear, identified through the inclusive design method described above, we were able to create an acoustic system that enables non-players to understand the state of play of a goalball game.

5. Concluding remarks

We developed a system called “goalball experienced through sound,” which allows people to enjoy goalball through sound alone, and evaluated it hands-on with real spectators, including people with visual impairments. We initially planned to reproduce the sound of the ball moving on the court by using Kirari!; however, the evaluation showed us that not only the sound of the moving ball but also the sound of the players’ movements and the connection of a series of sounds are important in creating the spectator experience. Although the spectator event at which we had planned to provide this technology was cancelled due to the COVID-19 pandemic, we plan to let many people experience “goalball experienced through sound” in conjunction with goalball tournaments and efforts to promote parasports. We will also continue our research and development from an inclusive perspective so that people with various physical conditions can appreciate the value of information and communication technology.
Acknowledgments

The authors thank Akiko Adachi (Leifras Co., Ltd.), the players and visually impaired participants who cooperated with the acoustic evaluation, the teachers of Yokohama City and Yokohama Municipal Special Needs School for the Blind, the Japan Goalball Association, and the Japanese Para Sports Association for their comments and cooperation in designing the “goalball experienced through sound” experience.

References
