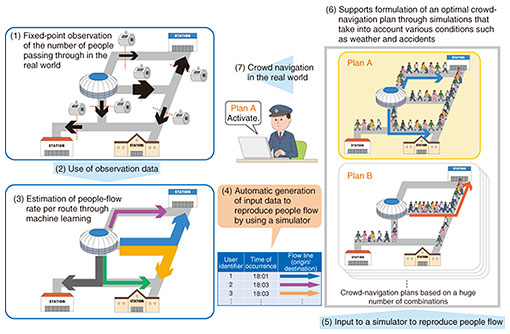

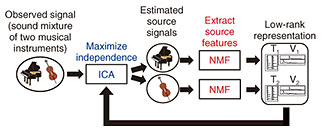

Front-line Researchers

Vol. 20, No. 5, pp. 1–6, May 2022. https://doi.org/10.53829/ntr202205fr1

Problem Solving Will Not Reduce the Number of Research Themes—It Will Open up New Research Areas

Abstract

Building on his extensive research in blind source separation, Senior Distinguished Researcher Hiroshi Sawada has received worldwide acclaim for his work with a domestic research partner on independent low-rank matrix analysis, namely, the development and integration of independent component analysis and non-negative matrix factorization. He has also ventured into the field of neural networks. We asked him about his research achievements and his attitude toward enhancing his research activities.

Keywords: blind source separation, independent low-rank matrix analysis, neural networks

The culmination of research on audio source separation: ILRMA showcases Japan’s presence in the field

—Could you tell us about the research you are currently conducting?

I have long been researching blind source separation, a technology that separates mixed sound sources in situations in which a listener with eyes closed cannot tell under what conditions a recording is being made. Expanding a portion of this technology, I have also studied technologies for analyzing spatio-temporal data. More recently, I have ventured into a field that is new to me but that many people have been researching: neural networks.

I developed blind source separation into a technology for estimating the structure of information sources and observation systems by combining non-negative matrix factorization (NMF), which captures the structure and features of information sources such as data and signals, and independent component analysis (ICA), which estimates how data and signals are observed with sensors through an observation system. NMF has developed into a method for analyzing spatio-temporal multidimensional data sets by modelling spatio-temporal relationships between multidimensional data, which enables future prediction. It has recently evolved into a method for data assimilation and machine-learning-based crowd navigation (Fig. 1).

To reduce congestion and stabilize communication infrastructure at large-scale events, this data assimilation and machine-learning-based crowd navigation technology uses (i) real-time observation data to detect incidents, such as congestion, that may occur in the near future through data assimilation and simulation and (ii) machine-learning-based crowd navigation to prevent congestion from occurring and ensure safety in advance. Headed by NTT Fellow Naonori Ueda at NTT Communication Science Laboratories, many NTT researchers, including myself, are working on this technology. We had hoped to demonstrate the technology at the major international sporting event held in Tokyo in 2021; however, the event was closed to spectators to prevent the spread of COVID-19, so we were unable to do so. Led by Hitoshi Shimizu, a research scientist at NTT Communication Science Laboratories, we also work on research themes such as “a model for selection of attractions in a theme park and estimation of model parameters” and “theme-park simulation based on questionnaires for maximizing visitor surplus.”

At the same time, by advancing blind source separation, we have been promoting independent low-rank matrix analysis (ILRMA) (Fig. 2), which integrates ICA and NMF. As a result of joint research with Tokyo Metropolitan University, National Institute of Technology, Kagawa College, and the University of Tokyo, the basic technology underpinning ILRMA was announced at an international conference in 2015 and described in a journal in 2016. After we further developed the technology, I gave a tutorial lecture on ILRMA at the International Conference on Acoustics, Speech and Signal Processing (ICASSP) 2018, the world’s largest international conference in the field of audio and acoustic signal processing. We summarized the content of that tutorial as a review paper in Asia-Pacific Signal and Information Processing Association (APSIPA) Transactions on Signal and Information Processing in 2019 [1], for which we received the APSIPA Sadaoki Furui Prize Paper Award. I also co-authored a tutorial lecture on further advanced topics for the European Signal Processing Conference (EUSIPCO) 2020.
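As a rough illustration of the NMF building block mentioned in this answer, the following minimal sketch factors a small non-negative matrix V into two non-negative matrices W and H. It is not ILRMA and not the interviewee's implementation: it uses the classical multiplicative-update rule for squared error, and the function names (`nmf`, `matmul`, `frobenius_error`), the toy matrix, and all parameter values are assumptions chosen only for illustration. Only the Python standard library is used.

```python
import random


def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*A)]


def frobenius_error(V, W, H):
    """Squared Frobenius distance between V and the product W H."""
    WH = matmul(W, H)
    return sum((v - x) ** 2 for rv, rx in zip(V, WH) for v, x in zip(rv, rx))


def nmf(V, rank, n_iter=200, seed=0, eps=1e-12):
    """Factor non-negative V (m x n) into W (m x rank) and H (rank x n)
    with multiplicative updates for the squared Frobenius error."""
    rng = random.Random(seed)
    m, n = len(V), len(V[0])
    # Strictly positive random initialisation keeps every update well defined.
    W = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(m)]
    H = [[rng.random() + 0.1 for _ in range(n)] for _ in range(rank)]
    for _ in range(n_iter):
        # H <- H * (W^T V) / (W^T W H), element-wise
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[h * a / (b + eps) for h, a, b in zip(rh, ra, rb)]
             for rh, ra, rb in zip(H, num, den)]
        # W <- W * (V H^T) / (W H H^T), element-wise
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[w * a / (b + eps) for w, a, b in zip(rw, ra, rb)]
             for rw, ra, rb in zip(W, num, den)]
    return W, H


# Toy "spectrogram" (rows: frequency bins, columns: time frames) built from
# two hypothetical sources with fixed spectra and different activations.
V = [[1.0, 0.0, 2.0, 0.0],
     [2.0, 0.0, 4.0, 0.0],
     [0.0, 3.0, 0.0, 1.0]]
W, H = nmf(V, rank=2)
print("reconstruction error:", frobenius_error(V, W, H))
```

In this reading, the columns of W play the role of per-source spectral patterns and the rows of H their activations over time. Multiplicative updates are used here because they keep every entry non-negative without an explicit projection step.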

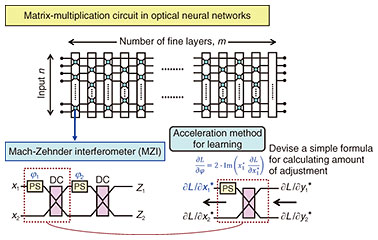

—It is a pity to hear about the impact of the COVID-19 pandemic; even so, you have achieved world-class results.

In recognition of these activities and past research results on blind source separation, ICA, and NMF, I was selected as a Fellow of the Institute of Electrical and Electronics Engineers (IEEE), the world’s largest academic organization in the field of electrical and information engineering, in 2018, and as a Fellow of the Institute of Electronics, Information and Communication Engineers (IEICE) of Japan in 2019. In 2020, I received the Kenjiro Takayanagi Achievement Award, which honors individuals who contributed to the promotion and industrial development of the field of electronic science and technology in Japan through outstanding research. In awarding the prize, the committee stated, “ILRMA is the culmination of a series of studies on blind source separation that have been clarified as the original technology from Japan, and it is appealing to the world through an edited book, tutorials at international conferences, and a review paper.” In January 2022, I was named an IEEE Signal Processing Society Distinguished Lecturer, an honor bestowed annually on five individuals in each field. I am proud that we could demonstrate the presence of NTT and Japan as a whole through ILRMA and other technologies.

Boost your organization and polish your skills

—Neural-network technology is a different field from ILRMA. How did you come up with it as a research theme?

While I was focusing on research on blind source separation and analysis of spatio-temporal data, the third boom in artificial intelligence (AI) started around 2012. Since then, neural networks based on deep learning have gradually come into use in products and services in our daily lives. Although I had not been directly involved in neural networks, around 2017, like many other researchers, I began to think that neural networks were a very important technology and started learning about them, even though I had to catch up with other researchers at first.

The first step in taking on the challenge of such a new field is learning about it. First, to boost research activity on neural networks from the perspective of NTT’s laboratories as a whole, I started a colloquium on deep learning and a technical course on machine learning as part of my studies.

The colloquium on deep learning is organized by members of NTT laboratories. For about 20 years, we have been organizing colloquiums on themes such as speech and language to bring together, at least once a year, experts based at laboratories in different locations such as Musashino, Yokosuka, Atsugi, Keihanna, and Tsukuba. The colloquium on deep learning is now five years old. Although it has been held online for the past two years due to the COVID-19 pandemic, it has allowed us to see who in the laboratories is pursuing technology in the field of deep learning and has facilitated information exchange and discussion.

Technical courses are also held for new employees and employees in their second to third year of employment. We have had technical courses on networks, information theory, etc., but not on machine learning, so we took this opportunity to launch one and augment the neural-network content from around 2019.

Through these efforts, I have deepened my own understanding of neural networks and am now able to use them in new research projects such as three related studies that I am currently conducting with collaborators. One of these studies, an “acceleration method for learning fine-layered optical neural networks” (Fig. 3) [2], was developed with Kazuo Aoyama, a researcher at NTT Communication Science Laboratories, and a paper on it was accepted to the International Conference on Computer-Aided Design (ICCAD) 2021. Beyond the method’s novelty in the field of machine learning, the parameters learned with it are used in experiments on actual optical devices by the team headed by Dr. Masaya Notomi, a senior distinguished researcher at NTT Basic Research Laboratories, which has been collaborating with us in this study.

In the Frontier Research and Development Committee of the Japan Society for the Promotion of Science “Strategic plan for industrial innovation platform by materials informatics,” on which I served as a committee member for three years from 2016, we have been discussing how to use machine-learning techniques for developing new physical properties. In a joint research project led by Dr. Yuki K. Wakabayashi, a researcher at NTT Basic Research Laboratories, and Dr. Takuma Otsuka, a research scientist at NTT Communication Science Laboratories, we were able to use machine-learning techniques to derive the temperature and other conditions necessary to create a thin film with outstanding properties [3]. In collaboration with the University of Tokyo and Tokyo Institute of Technology, we measured the electrical conduction of the prepared single-crystalline thin films of SrRuO3 (Sr: strontium, Ru: ruthenium, O: oxygen) at low temperature in a magnetic field and were able to announce the first observation of quantum transport phenomena that are peculiar to an exotic state called a magnetic Weyl semimetal [4]. I have been researching themes in the information field all my life and never dreamed that I would be involved in creating a material with new properties, so I’m very happy to have been able to be involved in this creation, even if only a little.

—What else is important to you to enhance your research activities using technology in your area of expertise?

Think about what technology is your specialty, how you can use it to play an active role, and what technology can contribute to the development of the field in which you are researching. For example, optical neural networks use complex numbers, and since complex numbers appear in research on ICA (namely, when an audio signal is converted into its frequency components using the Fourier transform), I thought I could make a contribution to that field. It is important to find areas that overlap with your field and areas in which your specialized skills can be used, rather than areas that are completely unknown to you. It might be that the more technologies you are specialized in, the better, but I think one is enough.

It is also important to learn and acquire new skills in the field you are new to and to find research colleagues and supporters. For example, the above-mentioned acceleration method for learning optical neural networks worked largely because my colleague, Kazuo Aoyama, was working with me on that project. I was also attracted to the fact that Dr. Masaya Notomi had expertise that I did not have, and I felt a sense of anticipation that synergy would occur between us and something new would be created by combining our experience and skills. You can meet such people at the colloquiums I mentioned earlier and at social gatherings, so it might be a good idea to take advantage of opportunities for casual chats at such gatherings to get a feel for what different fields are like. I’m also a member of the Machine Learning and Data Science Center, which was established by NTT Fellow Naonori Ueda, and the information shared there has led to new research opportunities for me.

Incidentally, as research progresses and results are obtained over the years, the number of research themes naturally decreases as problems are solved. Instead of worrying about that fact, I want to approach my research activities with the attitude that there are more things we can do and that new research areas are being opened up. That attitude will perhaps lead you to search for collaborators and colleagues.

Because what one person can do alone is limited, I have always valued collaboration with other researchers. Means of collaboration include effectively combining different fields of expertise or mutually understanding, confirming, and deepening each other’s skills in the same field of expertise. However, as a premise, you must be someone the other party finds interesting and can trust. To gain trust in terms of the expertise that is expected of me, I want to do the things that are required of me, such as providing something of value, taking charge of my part in building experiments and systems, and presenting my ideas clearly when discussing issues.

Why don’t you just do what you want to do without worrying too much?

—How do you plan to lead your life going forward as a researcher?

I want to continue my research as long as possible. One reason for that is the pleasure of learning from past research activities. Neural networks provide a good example of such learning. Such research has been going on for more than 60 years, and looking at past research from new perspectives and with new techniques can reveal connections between old and new research. Just as I have found a new field of activity in research on neural networks by learning it from scratch, I’m sure that the same thing will happen when I’m pursuing other themes in the future. As a researcher, I’m forever having to learn. It is fun for me to learn new things, so I hope to continue to learn well into the future.

I believe that a researcher is a person who creates novelty regardless of whether it is useful to anyone. However, new is not always good; it is important to produce results that other researchers will find valuable and are willing to base new research on. There are many cutting-edge researchers, and many young people are reading new research papers. I want to challenge myself to find something that I’d like to try my hand at.

—Now that you have become a researcher with a major impact on the world, what words would you like to say to the person you were when you joined the company? Based on those words, what would you like to say to young researchers?

That is a bit difficult to answer, but I suppose I would say, “Why don’t you just do what you want to do without worrying too much?” Looking back on myself, I was not very successful at first and was anxious about whether I would be able to produce results. I’d like to tell young researchers that their hard work will produce results in one way or another.

I feel pleasure in explaining difficult things in a simple and easy-to-understand way when I’m giving a tutorial lecture at an IEEE conference, giving a lecture during a technical course, or writing a paper, and above all, when new research results are accepted for publication. In fact, a figure from my paper accepted by IEEE in 2013 appeared on the cover of the journal [5]. That made me very happy. I had worked hard with PowerPoint to create a diagram that would make it easy to understand concepts that would be difficult to understand if written in mathematical formulas. When the diagram was published, I felt that my hard work had paid off.

I think young researchers in our organization are working very hard. The field of machine learning, in particular, is currently booming, and many talented researchers are entering the field. It’s very difficult to get your paper accepted by an international conference because you have to overcome high hurdles. Nevertheless, it’s important to publicize your results, so let’s continue improving the quality of our work, publishing our papers on arXiv (an open access repository of scientific research), and aiming for prestigious conferences. Keep in mind that doing so is not easy, and you may feel discouraged if your paper is not accepted; even so, at such times, look to the experience of your seniors: many of them had their results accepted only after years of hard work. Seniors, including myself, can be collaborators and help juniors write papers. It is also helpful to make steady efforts while taking a realistic approach.

References

■Interviewee profile

Hiroshi Sawada received a B.E., M.E., and Ph.D. in information science from Kyoto University in 1991, 1993, and 2001, respectively. He joined NTT in 1993. His research interests include statistical signal processing, audio source separation, array signal processing, machine learning, latent variable models, graph-based data structures, and computer architecture. From 2006 to 2009, he served as an associate editor of the IEEE Transactions on Audio, Speech and Language Processing. He received the Best Paper Award of the IEEE Circuits and Systems Society in 2000, the SPIE ICA Unsupervised Learning Pioneer Award in 2013, the Best Paper Award of the IEEE Signal Processing Society in 2014, and the APSIPA Sadaoki Furui Prize Paper Award in 2021. He was selected to serve as an IEEE Signal Processing Society Distinguished Lecturer for the term 1 January 2022 through 31 December 2023. He is an IEEE Fellow, an IEICE Fellow, and a member of the Acoustical Society of Japan.
