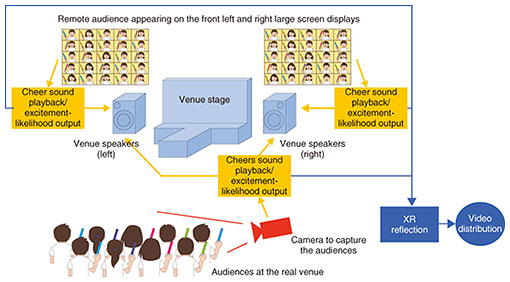

Feature Articles: Research and Development for Enabling the Remote World

Vol. 20, No. 11, pp. 33–39, Nov. 2022. https://doi.org/10.53829/ntr202211fa4

Harmonic Reproduction Technology between a Real Venue and Remote Audiences

Abstract

NTT Human Informatics Laboratories has been researching and developing technology that reproduces, at the venue where an event is being held (real venue), the appearance of an audience enjoying the live-streamed event from home (remote audience), while harmonizing it with the situation at the real venue. At the 34th Mynavi TOKYO GIRLS COLLECTION 2022 SPRING/SUMMER held on March 21, 2022, a demonstration experiment was conducted to support the excitement of the event. Low-latency video communication and cross-modal sound search were used to reproduce pseudo cheers at the real venue on behalf of both real-venue and remote audience members, who could not cheer due to the COVID-19 pandemic. This article introduces the activities of this demonstration experiment.

Keywords: two-way video communication, remote viewing, harmonic reproduction

1. The necessity of harmonic reproduction of video and sound

NTT has been engaged in research and development of interactive video communications that interconnect multiple remote-viewing environments and deliver high-definition video with low latency, with a primary focus on delivering highly realistic video. In the Real-time Remote Cheering for Marathon Project [1], ultralow-latency communication technology with uncompressed transmission and low-latency media-processing technology were used to connect the marathon course in Sapporo, Hokkaido with the cheering venue in Tokyo in real time. This enabled spectators to send their support to the athletes from remote locations, creating a sense of presence similar to that of cheering along the course and a sense of unity between athletes and spectators, thus enabling a new way to watch the race.
To extend this initiative to home users, NTT began research on bidirectional video and audio communication between the venue of a live-streamed event (real venue) and the home environment. However, problems arise when trying to achieve bidirectional, highly realistic video communication between the home environment and a real venue. For example, in web conferencing between a remote-working home environment and the workplace, the background of the camera image from the home may show a room with a lived-in feel, or a family member’s voice may be picked up by the microphone, which can be awkward. At a live sporting or entertainment event, the audience members who participate remotely (remote audience) want to appear in the video to share the excitement with the audience at the real venue (venue audience), but they want to avoid having information they do not want seen or heard distributed to the venue and to other remote audience members. Therefore, it is necessary to suppress unnecessary information and reproduce the desired information at the real venue with a high sense of presence and harmony.

The real venue may also require harmonic reproduction. The COVID-19 pandemic has restricted people’s activities, and even at live concerts, audiences are prohibited from cheering, even when wearing masks, to prevent infection. This is an uncomfortable situation for the audience at live music concerts: although many people are in attendance, they cannot cheer for the popular songs that drew cheers before the pandemic. It is also difficult for the performers to interact with the audience, because without cheers it is hard to gauge the audience’s reactions.
Therefore, we studied the possibility of reproducing the harmony of images and sound so that the venue audience can feel the same excitement from the cheers as before the pandemic.

2. Joint experiment to enable two-way video communications for the home

IMAGICA EEX, NTT Communications, and NTT conducted a joint experiment to enable interactive high-resolution video viewing for home users at the 34th Mynavi TOKYO GIRLS COLLECTION 2022 SPRING/SUMMER [2] held on March 21, 2022. The experiment aimed to support the excitement of the venue audience, who were unable to cheer due to the COVID-19 pandemic, and to create a sense of participation for the remote audience through interaction with the real venue. We constructed a system that reproduces cheer sounds in accordance with the excitement of the venue and remote audiences using low-latency video communication technology and cross-modal sound retrieval technology [3], and we verified the reproduction of harmony.

Figure 1 shows a conceptual diagram of the entire experiment. Remote audience members participated via NTT Communications’ two-way low-latency communication systems (Smart vLive® [4] and ECLWebRTC SkyWay [5]) using a personal computer (PC) with a camera and appeared on the left and right large-screen displays in front of the stage at the real venue. The cheer sounds were estimated from the left and right images of the remote audience members, as described below, and the corresponding left and right speakers played the cheers in response to the excitement of the event. For the venue audience, the cheer sounds were estimated from the images taken by a camera aimed at the audience seats and played from the venue speakers. The audience could control the cheer sounds: the cheers became louder when audience members shook their penlights faster and quieter when they shook them more slowly.
The level of excitement was reflected not only in the cheer sounds but also in the extended reality (XR) expression of the live-streamed video. IMAGICA EEX created a production in which the amount of light particles in the streamed video changed in accordance with the level of excitement of the venue and remote audiences, allowing the remote audience members to enjoy the excitement of both audiences through sound and images.

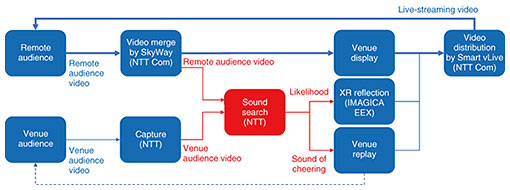

2.1 System configuration

The overall system configuration is shown in Fig. 2. The experimental system consists of five functions: one that lays out images of remote audience members in a tiled format, one that displays the tiled images on a large display at the real venue, one that searches for and plays the cheer sounds on the basis of the tiled images and the audience images at the real venue, one that expresses the search results in XR, and one that distributes video of the real venue reflecting these results.
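The tiled-layout function can be pictured with a short sketch. The code below is a hypothetical illustration and not the implementation used in the experiment: the names `tile_rects` and `assign_tiles`, the row-major ordering, and the 1920 × 1080 canvas in the usage note are all assumptions. The 5 × 5 default follows the grid size the article reports for the remote-audience input.

```python
from typing import Dict, List, Tuple

# (x, y, width, height) of one tile on the output canvas, in pixels.
Rect = Tuple[int, int, int, int]


def tile_rects(canvas_w: int, canvas_h: int, rows: int = 5, cols: int = 5) -> List[Rect]:
    """Return one rectangle per grid cell, in row-major order.

    Hypothetical helper: integer division is used, so a few pixels at the
    right or bottom edge may stay unused when the canvas size is not an
    exact multiple of the grid size.
    """
    if rows <= 0 or cols <= 0:
        raise ValueError("rows and cols must be positive")
    tile_w = canvas_w // cols
    tile_h = canvas_h // rows
    return [
        (c * tile_w, r * tile_h, tile_w, tile_h)
        for r in range(rows)
        for c in range(cols)
    ]


def assign_tiles(participant_ids: List[str], rects: List[Rect]) -> Dict[str, Rect]:
    """Assign participants to tiles in order of arrival (an assumption).

    Participants beyond the number of tiles are simply not placed.
    """
    return dict(zip(participant_ids, rects))
```

For example, `tile_rects(1920, 1080)` yields 25 tiles of 384 × 216 pixels each, and `assign_tiles(["a", "b"], tile_rects(1920, 1080))` places the first two participants in the top-left tiles.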

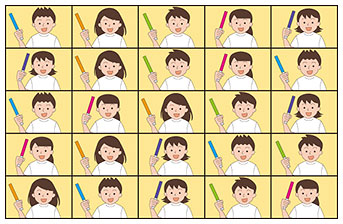

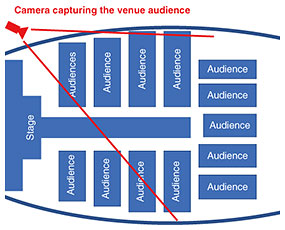

The sound-retrieval system used in this project applies NTT Communication Science Laboratories’ cross-modal sound retrieval technology [3], which is based on machine learning, to estimate the cheer sounds from video images of audience members waving penlights. To map the images of the audience waving penlights to the cheer sounds, training data were prepared in advance by pairing images of the venue and remote audiences waving penlights with cheer sounds, and a model for estimating the sound from the images was trained. For the remote audience, we input aggregated video images laid out in a 5 × 5 tiled pattern, as shown in Fig. 3, and retrieved and played back the corresponding cheer sounds on the basis of the penlight waving. For the venue audience, as shown in Fig. 4, a camera was set up in the venue to capture video of the audience, and the corresponding cheer sounds were retrieved and played back on the basis of the images of the audience waving penlights in the same way. The sound source for playback was a pre-recorded cheer sound.
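The article does not describe the internals of the retrieval model. As a rough illustration of the general idea of cross-modal retrieval, the sketch below assumes that a trained model has already mapped a video clip and each candidate cheer sound to vectors in a shared space, and it picks the sound whose vector is most similar to the video vector. The cosine-similarity matching, the function names, and the toy two-dimensional vectors are all assumptions made for illustration, not details taken from [3].

```python
import math
from typing import Dict, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_sound(
    video_embedding: Sequence[float],
    sound_bank: Dict[str, Sequence[float]],
) -> Tuple[str, float]:
    """Return the label and score of the best-matching sound embedding.

    `sound_bank` is a hypothetical mapping from a sound label to its
    embedding; in a real system both embeddings would come from a
    trained model.
    """
    if not sound_bank:
        raise ValueError("sound_bank must not be empty")
    scores = {
        label: cosine_similarity(video_embedding, emb)
        for label, emb in sound_bank.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]
```

With a toy bank such as `{"quiet": [1.0, 0.0], "loud cheer": [0.0, 1.0]}`, a video vector of `[0.1, 0.9]` retrieves `"loud cheer"`. The returned score could also serve as a confidence value when deciding whether a search result counts as a hit.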

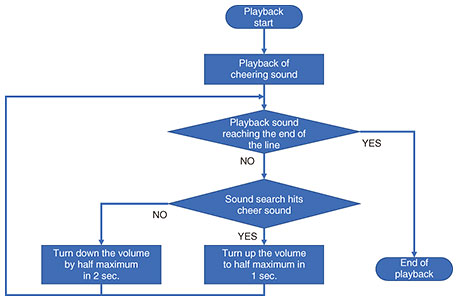

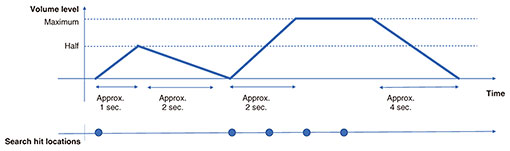

In addition to searching for the cheers using the cross-modal sound retrieval technology, a method of determining the volume of the cheers using the flowchart shown in Fig. 5 was implemented to smoothly change the volume of the cheers. An example of a hit point in the cheer-sound search and corresponding volume change is shown in Fig. 6. With this mechanism, it is possible to control the volume so that it increases when the penlights are continuously waved and decreases when they stop waving, thus enabling intuitive volume changes.
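The flowchart in Fig. 5 is not reproduced in the text, so the following is only a minimal sketch of the behavior described above: the volume rises step by step while search hits continue and falls gradually once they stop. The function names, the step sizes `rise` and `fall`, and the 0.0 to 1.0 volume range are assumptions rather than values from the experiment.

```python
from typing import Iterable, List


def update_volume(volume: float, hit: bool, rise: float = 0.1, fall: float = 0.05) -> float:
    """Advance the volume by one analysis step.

    A hit (the search matched a cheer sound for the current frames) raises
    the volume by `rise`; a miss lowers it by `fall`. The result is clamped
    to the range 0.0 to 1.0 so the volume changes smoothly and stays bounded.
    """
    target = volume + rise if hit else volume - fall
    return min(1.0, max(0.0, target))


def volume_trace(hits: Iterable[bool], start: float = 0.0,
                 rise: float = 0.1, fall: float = 0.05) -> List[float]:
    """Return the volume after each step for a sequence of hit/miss results."""
    trace = []
    volume = start
    for hit in hits:
        volume = update_volume(volume, hit, rise, fall)
        trace.append(volume)
    return trace
```

Choosing `fall` smaller than `rise`, as in the defaults here, makes the cheers build quickly and fade slowly; whether the actual system used such an asymmetry is not stated in the article.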

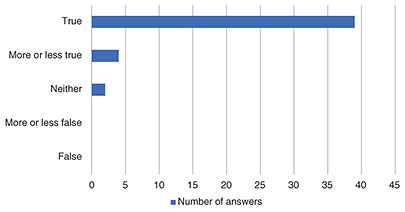

2.2 Evaluation by questionnaire to participants

To confirm the effectiveness of the harmonic reproduction, in which the cheer sounds are estimated from the audience images and played back at the venue, a questionnaire was sent to those who participated as remote audience members during the experiment. In response to the statement, “I felt that it would be better to have a mechanism to produce cheers compared to the usual delivery of just watching the show,” 86.6% of the participants responded positively on a 5-point scale, i.e., “true,” “more or less true,” “neither,” “more or less false,” and “false” (Fig. 7). Thus, the majority of participants had a favorable view of the harmonic reproduction system, confirming the effectiveness of delivering responses from the remote audience to the real venue.

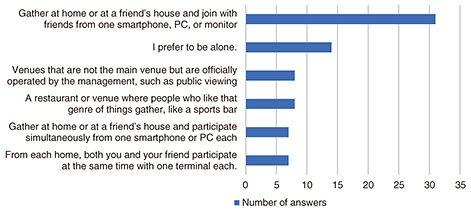

3. Future developments

In this experiment, remote audience members were asked to connect individually from their homes. A survey was also conducted to determine what type of viewing environment they would prefer for remote participation, asking, “In which of the following viewing situations would you prefer to watch a live webcast remotely?” More than half (68.8%) of the respondents answered, “Gather at home or a friend’s house and participate with a friend from a single smartphone, PC, or monitor” (Fig. 8). This result suggests that the preferred viewing style in the future may be one in which close friends gather in a shared remote-viewing environment and many such environments are connected to the real venue to watch together.

In a well-equipped environment, such as a public-viewing event, there may be no problem in pursuing a high level of realism and rich transmission, including the atmosphere of the venue. However, for home use, as mentioned earlier, if the video captured with cameras in the home environment and the sound captured with microphones are transmitted and played back at the venue as they are, problems are expected to arise in terms of production. Therefore, NTT will continue researching and developing two-way video communications that reproduce images and sounds in harmony so as not to interfere with the live production, by selecting information not only in pursuit of realism but also with consideration of what information should be emphasized or suppressed.

References
