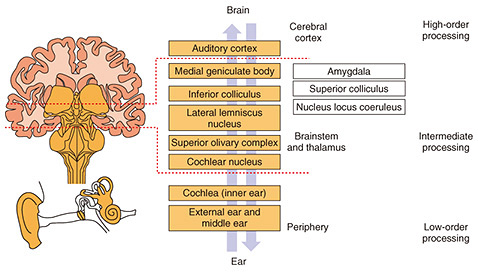

Front-line Researchers Vol. 21, No. 1, pp. 1–6, Jan. 2023. https://doi.org/10.53829/ntr202301fr1

If We Pursue Research Properly and Correctly, We Will Accumulate Knowledge That Will Make a Valuable Contribution to Academia

Abstract

According to the World Report on Hearing issued by the World Health Organization, it is estimated that by 2050, approximately 2.5 billion people (one in four people) in the world will suffer hearing loss, which is considered a major risk factor in developing dementia. Hearing is an indispensable information-processing mechanism for understanding the environment and communicating with people and an important component of the sensory world that is directly linked to emotions. We interviewed Shigeto Furukawa, a senior distinguished researcher at NTT Communication Science Laboratories investigating the auditory mechanism, about the progress of his research activities and his attitude as a researcher.

Keywords: auditory mechanism, artificial neural network, hearing loss

Investigating intermediate processing in the brainstem to elucidate the auditory mechanism

—It has been two years since our last interview. Could you give us an overview of the research you are currently working on and how it is progressing?

Two years is a very short time in basic research, and I can’t point to research problems that I have solved all at once over that period. Instead, I’ll report on the progress I’ve made and the new collaborative research I’ve started.

I’m researching the mechanisms of sensory perception that support comfortable communication, focusing on the psychophysics and neurophysiology of hearing. I’m involved in (i) measuring, evaluating, and modeling the mechanism and physiological functions of auditory-scene analysis; (ii) determining and evaluating the mechanism of hearing difficulties; and (iii) clarifying neural mechanisms related to sensory perception and mental states. I’m also interested in variations in hearing, namely, how different people hear the same sound differently or how the same person hears the same sound differently according to the situation. The findings of this research will provide the basis for technologies that connect people with others, society, and the environment in a harmonious manner.

The mechanism of hearing is often thought of as follows: sound, which is the vibration of the air, is converted into nerve signals by the eardrum and inner ear (i.e., lower-order processing), which are transmitted to the brain, where they are recognized, understood, and interpreted (i.e., higher-order processing), especially in the cerebral cortex. As shown in Fig. 1, the signals received from the ear pass through the brainstem on the way to the cerebral cortex, and in the brainstem, they are subjected to multistage processing (i.e., intermediate processing). For example, it is thought that the brainstem is responsible for extracting basic information, such as the pitch of a sound, and for selecting information that reflects the level of importance of the sound.

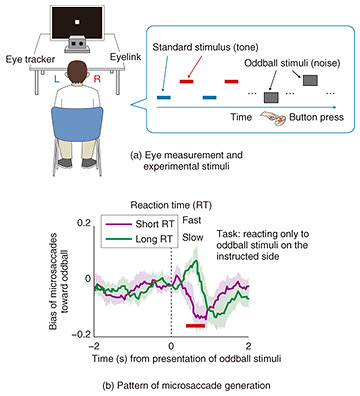

Focusing on the intermediate processing in the brainstem, I’m analyzing brain and auditory mechanisms indirectly by using biometric measurements, such as electroencephalograms and eye movements, and computer-based modeling. For example, when I measured the brain waves of a person listening to a sound, I found frequency components with a pattern similar to that of the sound played. Since these components are thought to originate in the brainstem, it is possible to investigate activities related to frequency analysis in the brainstem by analyzing them. It is also known that the activity of neuronal groups related to arousal level and attention in the brainstem is linked to the size of the pupil. These measurements and analyses enable us to access the workings of the brainstem without having to insert electrodes into the brain. I expect that combining various measurement methods and modeling will make it possible to confirm from outside the body what is happening naturally in the auditory system that we are not consciously aware of.

—The topic you mentioned in the previous interview, “attention and hearing,” is unique.

Let’s consider a situation in which you are listening to music. Whether one likes a song or not depends on the individual, and the enjoyment of a song may change from time to time, even if the same person listens to it. When we are enjoying music, some phrases may catch our attention with surprising sound/rhythm tricks, and other phrases may attract us without any notable features even though the music appears to be playing smoothly. By measuring biological reactions of a person listening to a song, I want to be able to observe how each person feels at any given moment.

There are many ways to perform this task. One would be to measure the pupil to determine which sounds the person is paying attention to. Previous studies revealed that the size of the pupil changes in accordance with the physical brightness in the direction of attention even if the person is not actually looking at the subject. We have found that this phenomenon can be applied to auditory attention as well.

It is also known that, in addition to the pupil, small and involuntary eye movements called microsaccades respond to visual and auditory stimuli in a way that is related to attention. We experimentally investigated the relationship between microsaccades and auditory spatial attention and found that (i) the pattern of generation of microsaccades varies with the direction of auditory attention and (ii) this directional characteristic also correlates with performance in an auditory task (Fig. 2). Although many studies have shown a correlation between microsaccades and visual attention, our study also showed a correlation between microsaccades and the direction of auditory attention and information processing in the auditory system.

One of our future research directions is to develop a technology for estimating the attentional state of a person by external observation. It may be possible to visually read information such as which voice a person is paying attention to when many people are having a conversation, such as at a party. We also hope to understand the mechanism by which people allocate their attention in accordance with the situation and to develop technology that uses that mechanism to present appropriate information in a timely manner.

Artificial neural networks trained to recognize sounds acquire a brain-like representation of sound

—I understand that you are also embarking on new research that will have a significant impact on academia.

As I mentioned earlier, in the mammalian brain, features of sound are analyzed in a multistage process, even between the brainstem and the cerebral cortex, from the time a sound reaches the ear until it is recognized (Fig. 1). For example, a relatively slow change in amplitude (amplitude modulation) is an important cue for recognizing a sound. In neurophysiology, researchers have clarified “how” neurons express amplitude modulation in many brain regions in the auditory nervous system. However, we cannot answer “why” neurons have come to express amplitude modulation in such a way (namely, is it a natural consequence?), no matter how many times similar experiments are repeated. In principle, it is difficult to ascertain the relationship between the properties of neurons and the evolutionary process by using a general experimental approach. I have studied the properties of neurons in the cortex and brainstem (although focusing on a property other than amplitude modulation), but I was frustrated that no matter how hard I tried, I could only get a glimpse of the answer to the “how” and not the “why.”

It has recently become possible to use artificial neural networks to recognize categories of sounds directly from natural and complex sound waveforms. Fortunately, I was able to team up with motivated researchers in the field of neurophysiology, and with this artificial-neural-network technology in hand, we approached the aforementioned “why” question.

Among artificial neural networks, deep neural networks (DNNs) have a structure like that of the auditory nervous system (Fig. 1); that is, DNNs consist of many layers of elements, which correspond to neurons in living organisms, arranged in columns. Other than that similarity, however, DNNs do not simulate the specific neural circuits of the auditory system. We trained a DNN on a certain task, namely, classifying natural sounds. We then input sounds with various modulation frequencies into the trained DNN and examined the output from each individual element composing the DNN. That is, we applied the paradigm used in neurophysiological experiments on animal brains to DNNs as is.

The results of the examination revealed that the DNN exhibits characteristics similar to those reported in previous studies on the auditory nervous system in animals. For example, some elements respond strongly only to specific modulation frequencies, and the response characteristics change regularly as the processing progresses from stage to stage. We also found that (i) the DNN gradually acquires similarity to the brain during the training process; (ii) the more accurately the DNN recognizes sounds, the more similar to the brain it becomes; and (iii) untrained DNNs show no similarity to the brain in recognizing natural sounds.
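
As a rough illustration of the probing paradigm described above, the following Python sketch presents amplitude-modulated tones at several modulation frequencies to a stand-in "element" and records which modulation frequency drives it most strongly. This is not the network, stimulus set, or analysis used in the research: the sampling rate, duration, carrier frequency, list of modulation frequencies, and the function `toy_unit_response` (a simple envelope detector, not a trained DNN unit) are all assumptions made for illustration.

```python
import math

SR = 8000                       # sampling rate in Hz (assumed for this sketch)
DUR = 0.5                       # stimulus duration in seconds (assumed)
MOD_FREQS = [4, 8, 16, 32, 64]  # probe modulation frequencies in Hz (assumed)


def am_tone(mod_hz, carrier_hz=1000.0):
    """Return a sinusoidally amplitude-modulated tone as a list of samples."""
    n = int(SR * DUR)
    return [
        (1.0 + math.sin(2 * math.pi * mod_hz * i / SR))
        * math.sin(2 * math.pi * carrier_hz * i / SR)
        for i in range(n)
    ]


def toy_unit_response(signal, preferred_hz):
    """Hypothetical stand-in for one network element (NOT a trained DNN unit).

    It rectifies the input and measures how strongly the resulting envelope
    fluctuates at the unit's preferred modulation frequency.
    """
    re = im = 0.0
    for i, x in enumerate(signal):
        env = abs(x)  # crude envelope estimate by rectification
        phase = 2 * math.pi * preferred_hz * i / SR
        re += env * math.cos(phase)
        im += env * math.sin(phase)
    return 2.0 * math.hypot(re, im) / len(signal)


def tuning_curve(preferred_hz):
    """Response of one toy unit to every probe modulation frequency."""
    return {f: toy_unit_response(am_tone(f), preferred_hz) for f in MOD_FREQS}


# Probe two toy units and find the modulation frequency each responds to most.
curves = {unit: tuning_curve(unit) for unit in (8, 32)}
best = {unit: max(curve, key=curve.get) for unit, curve in curves.items()}
print(best)  # prints {8: 8, 32: 32}
```

In an actual study of this kind, the toy unit would be replaced by the activation of each element in each layer of the trained network, and the resulting tuning curves would be compared across layers and with physiological data.
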
These findings suggest that the expression of amplitude modulation observed in animal brains may have been rationally acquired during the evolution of properties suitable for sound recognition. I believe that these results will encourage physiologists who have been steadily studying the properties of the nervous system. For now, we are only seeing the response of the auditory nervous system to amplitude modulation; however, this approach is general enough that we expect it to develop in many directions in the future.

—It is wonderful to hear that you have made progress in a field that has been researched for a long time. I also heard that you are conducting collaborative research that has social significance.

In 2021, we began joint research with Shizuoka General Hospital on speech and language perception in people with hearing loss and cochlear implants. The purpose of this research project is to understand the mechanism behind individual differences in speech perception and language development by clarifying the nature of the auditory mechanism in people with hearing loss.

Digital hearing aids and cochlear implants have recently become popular. It has been demonstrated that children with congenital hearing impairment who receive cochlear implants in early infancy can acquire spoken language just like children with normal hearing do. However, many unknowns concerning the process of sound perception and word recognition have yet to be explained. The brain mechanisms and developmental processes involved in information processing (from the electrical signals provided by the cochlear implant to word recognition) and in vocalization and singing ability are not yet understood. Accordingly, there is a great deal of room for research on what should and can be done to maximize the effectiveness of hearing-aid technology, and researchers from both medicine and brain science are expected to collaborate to enhance that research.

I believe that it is significant that this medical institution, which has made pioneering efforts regarding newborn hearing screening tests and support for children with hearing loss and has a variety of data on children with hearing loss and experience in dealing with them, has joined forces with NTT laboratories, which are researching the auditory mechanism and the process of language development in infants. The research on pupil and eye movements and modeling that I mentioned earlier could also be useful in this collaborative research. I expect that such multidisciplinary and multifaceted joint research will help explain the essential nature of the human auditory mechanism. On the basis of the scientific evidence acquired from this research, I want to offer support to many people with hearing loss.

I want to contribute findings that will be included in textbooks

—Please tell us what you have realized through your research activities over the past 30 years, including your post-doctoral period.

In the previous interview, I mentioned that when I was a child, I wanted to be a doctor (who has a Ph.D.) when I grew up. At the time, I thought a doctor could answer any questions and resolve problems, but now I realize that is not the case. For example, since human cognition and behavior are based on the accumulation of various mechanisms, hearing-related knowledge alone does not necessarily cover all the problems faced by the hearing impaired. Even if I have some knowledge and can identify the problem, it does not mean that I can immediately solve the problem of the person who is suffering from hearing loss. Truly solving the problems of the real world involves many elements including politics and education. In that sense, I learned that a broad perspective is necessary. That is why I need to have a horizontal network of researchers in various fields as well as the perspectives and knowledge I can gain from them.
Of course, I want to contribute to the network if my expertise is useful.

Research is a never-ending process. You may be able to see that each aspect “might” be explained this way or that, but it is unlikely that you will be able to understand everything. Naturally, we pursue research to know the unknown but rarely find the answer immediately. I am not so naive as to believe that a little bit of research can truly clarify anything. Therefore, I believe that research requires steady and constant effort. Sometimes I feel limited and try to change my focus toward different research. Sometimes changing focus is a step in the right direction, but at other times, I feel that it takes us away from the essential problem. To be honest, even now, I’m researching while worrying about that outcome.

Despite this constant sense of inadequacy, as a basic researcher, I have a desire to conduct research that has a long-term impact. “Long-term impact” can take many forms, but in a nutshell, I want to do research that will be written up in textbooks. Although I want to deliver surprising discoveries, I also think that research that builds a single system over a long period is just as important.

—Your strong conviction as a researcher is there for all to see. What do you always keep in mind during your research activities? And what would you like to say to the younger generation of researchers?

As for my research activities, I don’t think we need to win with a dramatic come-from-behind victory. Even if people say of you, “He’s still doing the same thing…,” if you pursue your research properly in a manner that answers essential questions, the knowledge you accumulate will be a valuable academic contribution. Research that is published in textbooks and cited in papers for a long time might be the result of such steady work, and I respect researchers who can do it.

I believe that an essential question has more than one form, that is, it differs in accordance with one’s position and background. When I am not sure which is better or worse, I sometimes change my point of view by thinking, for example, “If I pursue this, who would be pleased?” This “who” could be a fellow researcher by your side, an outstanding professor at an academic conference, a great person in history, or even a person in need in society.

I previously held two positions: researcher, as a senior distinguished researcher, and manager, as the head of the Human Information Science Laboratory. I was sometimes asked if it was difficult to reconcile my position as a researcher with that as a manager. There is certainly a conflict between the researcher, whose ideas are the standard, and the manager, who thinks about how others behave and how the organization is run. However, some research themes came to light through my management position, and those themes enabled me to expand my world. As a result, a synergy between my two roles was created, so it was a good opportunity to expand my research.

Finally, let me say some words to the younger generation of researchers. Make good use of your seniors. I hope that rather than simply treating your superiors as mentors or simply receiving guidance from them, you will use the knowledge, experience, and connections of your seniors as a means of improving yourself and your team.

■ Interviewee profile

Shigeto Furukawa received a B.E. and M.E. in environmental and sanitary engineering from Kyoto University in 1991 and 1993, and a Ph.D. in auditory perception from the University of Cambridge, UK, in 1996. He conducted postdoctoral studies in the USA between 1996 and 2001. As a postdoctoral associate at Kresge Hearing Research Institute at the University of Michigan, USA, he conducted electrophysiological studies on sound localization, specifically the representation of auditory space in the auditory cortex. He joined NTT Communication Science Laboratories in 2001. Since then, he has been involved in studies on auditory-space representation in the brainstem, assessing basic hearing functions, and the salience of auditory objects or events. As the group leader of the Sensory Resonance Research Group, he is managing various projects exploring mechanisms that underlie explicit and implicit communication between individuals. He is a member of the Acoustical Society of America, the Acoustical Society of Japan, the Association for Research in Otolaryngology, the Japanese Psychonomic Society, the Japan Audiological Society, the Japan Neuroscience Society, and the Japanese Society for Artificial Intelligence.
