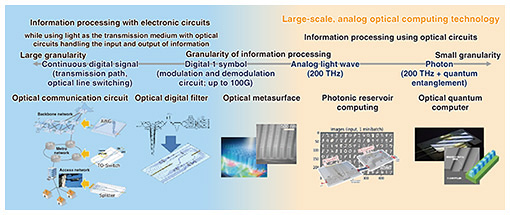

Front-line Researchers

Vol. 23, No. 1, pp. 1–6, Jan. 2025. https://doi.org/10.53829/ntr202501fr1

Aiming to Build Neural Networks and Quantum Computers through Analog Manipulation of Light Waves

Abstract

As data traffic on networks continues to rapidly increase, high-speed, high-capacity, low-latency communications using light will make it possible to address such huge growth in data traffic. The Innovative Optical and Wireless Network (IOWN) will not only further enhance these features of optical communications but also reduce energy consumption. Optical communications use the properties of light as a wave to transmit digital signals. Although the world of information processing is digital, manipulating light waves in an analog manner makes it possible to process information faster while using less energy. Toshikazu Hashimoto, a senior distinguished researcher at NTT Device Technology Laboratories, is endeavoring to build neural networks and quantum computers by manipulating light waves in an analog manner. We spoke to him about his research on optical device technology for computing using light and its applications as well as his thoughts on the importance of collaborating with people outside one’s organization to be stimulated, learn, and take on a difficult challenge.

Keywords: photonic reservoir computing, optical metasurface, optical quantum computer

Overcoming the limitations of large-scale computing by using light

—Would you tell us about the research you are conducting?

The theme of my current research is optical device technology for computing using light. Continuing the research that I talked about in my last interview (January 2022 issue), I’ve been researching and developing a technology that accelerates information processing and reduces its energy consumption by manipulating light as a wave in an analog manner. Although the content of my work has not changed much since the interview, the demands on computational technology have become much higher.
Regarding generative artificial intelligence (AI) and large language models, a scaling law has been discovered stating that their performance improves as computing resources increase, and that law has led to a race to expand the scale of computation to achieve better performance. Such large-scale computing requires enormous amounts of energy, so reducing electricity consumption is becoming a social issue. As Moore’s law comes to an end, technologies that transform computer processors are also required to cope with the growing computational complexity of AI models.

To address these issues, my research group is striving to create large-scale computing technology that executes computations by manipulating light waves in an analog manner, an approach different from conventional digital computing. The Innovative Optical and Wireless Network (IOWN) concept aims to reduce the energy consumption of computing systems, including those used for communications, through photonics-electronics convergence (PEC) technology. Our research aims to develop a future computing technology from a slightly different perspective from approaches based on PEC technology. Large-scale analog optical computing, which executes computations by manipulating light as a wave in an analog manner, is ultimately a technology that fully uses the frequency and time domains of light and even the degrees of freedom of photons, which cannot be controlled using current electronic circuit technology. To fully exploit these properties of light, which are difficult to manipulate with electricity, the basic principle is to use the properties of light itself to control them.
Figure 1 shows the application areas of information processing with conventional electronic circuits (where light is used as the transmission medium and optical circuits are used to input and output light carrying information) and of large-scale analog optical computing technology, with respect to the granularity of the computation and of the information handled. We are focusing on three technologies: photonic reservoir computing, optical metasurfaces, and optical quantum computers.

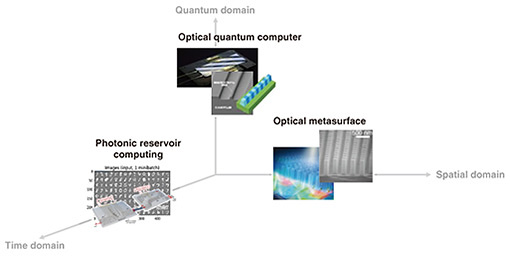

Pursuing the possibilities of light along the spatial, temporal, and quantum axes

—How will the technologies you are developing help overcome the limitations of large-scale computing?

The approach to scaling computations in analog optical computing technology can be defined in regard to three axes: the time domain, spatial domain, and quantum domain. Photonic reservoir computing, optical metasurfaces, and optical quantum computers are application examples of large-scale optical computing corresponding to each axis (Fig. 2).

The photonic reservoir computing that we are developing is a computational method that divides an optical pulse carrying information into smaller pulses in time slots and mixes these sub-pulses by giving them a time delay comparable to that of the original optical pulse to generate complex correlations. The desired output is then obtained from the combination of these sub-pulses. Such time-domain signal processing, namely, generating sub-pulses and mixing them by giving them a time delay slightly different from that of the original optical pulse, is difficult to do with electronic circuits but possible with optical circuits.

An optical metasurface is a plate-shaped device, made by covering a transparent plate (substrate) with a microscopic pattern of dielectric columns, that controls the wavefronts of the light transmitted through the plate. When the incident light passes through each dielectric column and is emitted, the phase of the light changes in accordance with the width of the column. By exploiting this phenomenon, each column is used as a wave source that emits light with a phase set by its width, and by intentionally having many such beams interfere with each other, an image can be formed as a wavefront. The dielectric columns can be placed at intervals narrower than the wavelength of the incident light, which makes it possible to line up hundreds of millions of columns on a single plate. The image emitted from the optical metasurface is a mutual interference pattern composed of light beams with different phases generated by the columns, and the pattern contains information equivalent to the sum of squares of hundreds of millions. This suggests that an optical metasurface can execute enormous computations in the split second that light passes through the plate by using the spatial domain.
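The interference described above can be illustrated with a small numerical sketch. It is not a model of any actual NTT device: it assumes a one-dimensional row of equal-amplitude emitters, works directly with each column's phase (the mapping from column width to phase is device-specific and is not modeled), and uses hypothetical names and values (`far_field_intensity`, 64 columns, a pitch of 0.4 wavelengths). A linear phase gradient across the columns steers the interference maximum to a chosen angle, and because the intensity is the squared magnitude of a sum over N columns, it expands into N × N pairwise interference terms.

```python
import cmath
import math


def far_field_intensity(phases, pitch, wavelength, angle_deg):
    """Intensity radiated toward angle_deg by a row of equal-amplitude emitters."""
    k = 2 * math.pi / wavelength
    s = math.sin(math.radians(angle_deg))
    # Each column contributes a unit-amplitude wave carrying its own phase plus
    # the geometric phase k * x * sin(angle) due to its position x = n * pitch.
    field = sum(cmath.exp(1j * (phi + k * n * pitch * s))
                for n, phi in enumerate(phases))
    return abs(field) ** 2


# Illustrative values only (assumptions, not taken from the interview).
wavelength = 1.0            # arbitrary length unit
pitch = 0.4 * wavelength    # column spacing narrower than the wavelength
n_columns = 64
target_deg = 20.0           # direction in which the beams should add in phase

# Linear phase gradient that cancels the geometric phase at the target angle.
k = 2 * math.pi / wavelength
phases = [-k * n * pitch * math.sin(math.radians(target_deg))
          for n in range(n_columns)]

# Scan the far field from -60 to +60 degrees in 0.5-degree steps.
angles = [-60.0 + 0.5 * i for i in range(241)]
intensities = [far_field_intensity(phases, pitch, wavelength, a) for a in angles]
peak_intensity = max(intensities)
peak_angle = angles[intensities.index(peak_intensity)]
print(f"peak at {peak_angle} degrees, intensity {peak_intensity:.1f}")
```

In this sketch the peak intensity equals the square of the number of columns, which is the case in which all pairwise interference terms add constructively.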
All that remains is to determine (i) what kind of information manipulation is equivalent to changing the phase of light by changing the width of the dielectric columns and (ii) how to extract meaningful information from the image of the interference pattern. If we can answer these two questions, we will know how to carry out ultra-fast, large-scale computing using the spatial domain.

Optical quantum computers enable large-scale computing by using quantum superposition and quantum entanglement. By creating quantum superposition states, forming quantum correlated states from the superposition states (i.e., quantum entangled states), and executing operations on those states, it is possible to obtain the same results as if operations were executed on many states or combinations of states at once. This possibility applies to quantum computers in general and is one of the reasons that computations that are difficult to execute using conventional classical computers become possible by using the quantum domain. We aim to scale the quantum domain by forming quantum states in optical pulses on the time axis and generating large quantum-entangled states.

Benefit of large-scale optical computing

—What specifically has been achieved thus far?

These research themes are being led by young researchers and have produced outstanding results. Regarding photonic reservoir computing, we showed that a large-scale sum-of-products computation is possible in the form of a neural network and created a machine-learning algorithm suitable for analog computation. Reservoir computing involves weighting the output signals from a reservoir and optimizing the weights to obtain the desired output. The reservoir can be a fixed, complex network, and many physical reservoir computers have been proposed that use physical media with complex dynamics.
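The idea that only the output weights are optimized while the reservoir stays fixed can be sketched in software. The following is a minimal illustration, not the photonic system described in this interview: it assumes a simple ring-shaped reservoir in which each node receives the delayed state of its neighbor (loosely reminiscent of a delay loop), a toy task of recalling the previous input sample, and a ridge-regression readout. All names (`run_ring_reservoir`, `ridge_fit`) and parameter values are hypothetical.

```python
import math
import random


def run_ring_reservoir(inputs, n_nodes=20, ring_gain=0.9, input_scale=0.5, seed=0):
    """Drive a fixed ring-shaped reservoir and return its state at every step."""
    rng = random.Random(seed)
    # Fixed random input weights: the reservoir itself is never trained.
    w_in = [rng.uniform(-input_scale, input_scale) for _ in range(n_nodes)]
    state = [0.0] * n_nodes
    history = []
    for u in inputs:
        # Node i receives node i-1's previous state (index -1 wraps around the
        # ring) plus the weighted input, passed through a tanh nonlinearity.
        state = [math.tanh(ring_gain * state[i - 1] + w_in[i] * u)
                 for i in range(n_nodes)]
        history.append(state + [1.0])  # constant 1.0 acts as a bias feature
    return history


def ridge_fit(features, targets, ridge=1e-6):
    """Solve (X^T X + ridge * I) w = X^T y by Gaussian elimination."""
    n = len(features[0])
    a = [[sum(row[i] * row[j] for row in features) + (ridge if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    b = [sum(row[i] * y for row, y in zip(features, targets)) for i in range(n)]
    for col in range(n):
        # Partial pivoting keeps the elimination numerically stable.
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (b[i] - sum(a[i][j] * w[j] for j in range(i + 1, n))) / a[i][i]
    return w


# Toy task (an assumption for illustration): output the previous input sample.
rng = random.Random(1)
inputs = [rng.uniform(-0.5, 0.5) for _ in range(600)]
targets = [0.0] + inputs[:-1]
states = run_ring_reservoir(inputs)

washout, split = 50, 450  # discard the initial transient, then train / test
weights = ridge_fit(states[washout:split], targets[washout:split])

# Evaluate the trained readout on data it has not seen.
test_targets = targets[split:]
predictions = [sum(w * x for w, x in zip(weights, s)) for s in states[split:]]
mean_t = sum(test_targets) / len(test_targets)
mse = sum((p - t) ** 2 for p, t in zip(predictions, test_targets)) / len(test_targets)
variance = sum((t - mean_t) ** 2 for t in test_targets) / len(test_targets)
nmse = mse / variance
print(f"held-out normalized mean squared error: {nmse:.4f}")
```

Only `weights` is learned here. In a physical reservoir computer, the role of `run_ring_reservoir` would be played by the physical medium itself, which is why the training step can remain a simple linear fit.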
We applied device technologies (e.g., optical fibers and optical circuits) that NTT has developed in the field of optical communications to build the world’s largest photonic reservoir computer, which is capable of executing 10^13 product-sum operations per second per wavelength. This was achieved by splitting several-dozen-Gbit/s-class pulse signals into 16 optical loop circuits and further dividing each pulse into 32 sub-pulse signals to create 512 nodes (neurons) in the time and spatial domains. We have also built a multi-layer photonic reservoir computer by increasing the number of layers in this photonic reservoir. In collaboration with the Nakajima Laboratory at the University of Tokyo, we proposed a computational method called augmented direct feedback alignment (DFA), which does not require error back propagation, making it suitable for analog computation. By combining augmented DFA with a multi-layer photonic reservoir computer, we have achieved the world’s highest performing physical analog computer. We have shown that augmented DFA can be applied to general neural networks, suggesting that it is possible to train neural networks by using analog computations, which was previously thought to be difficult.

We have demonstrated that an optical metasurface can increase the sensitivity of color image sensors. In place of lenses and color filters, we created an optical metasurface that separates light into red, green, and blue components and focuses each component onto a separate photodetector. We thus demonstrated that this optical metasurface enables image sensing that is more than three times as sensitive as the conventional method, which uses color filters to remove light of unnecessary wavelengths for color separation. We have also demonstrated that hyperspectral imaging is possible by attaching a lens made of an optical metasurface to an ordinary camera.
This was achieved by combining lens technology using optical metasurfaces with research results from NTT Computer and Data Science Laboratories, namely, reconstructing images from compressed image data by using mathematical optimization including AI. This technology involves developing a lens that can change the way it focuses light for each color (i.e., wavelength), treating a single image taken through that lens as compressed image data, and generating images for each wavelength using the image-reconstruction technology. In other words, we have created a camera that reconstructs images for each wavelength from a single image on the basis of the difference in the level of defocus for each wavelength. This is the world’s first technology that enables hyperspectral imaging by simply attaching a lens with an optical metasurface to an ordinary camera. Optical metasurfaces have a wide range of applications, and I expect to see many more in the future.

In collaboration with Furusawa Laboratory at the University of Tokyo, RIKEN, and others, we are working toward building an optical quantum computer that uses quantum information processing based on continuous-variable quantum states. With this computer, quantum information is processed by carrying information on the amplitude and phase of light. Optical communications also transmit a large amount of information by carrying it on the amplitude and phase of light. While optical communications typically use laser light as a carrier wave, optical quantum computers use a special state of light called squeezed light to achieve entanglement and superposition of quantum states. We use NTT’s optical device technology for communications to generate squeezed light. For over 20 years, NTT has researched and developed ultra-low-noise optical amplifier technology for optical communications that uses a nonlinear optical element called periodically poled lithium niobate (PPLN).
By using this element in a slightly different way, we can halve the frequency of light to create squeezed light. NTT has developed PPLN-based amplifier technology that achieves the world’s highest performance. By leveraging this technology, we have developed a squeezed-light generation module that has a terahertz-class bandwidth and achieves the world’s highest performance in terms of squeezing level, an index of quantum nature, of over 8 dB. By using the squeezed-light generation module, we have also demonstrated the basic elements of an optical quantum computer, including its applicability to large-scale cluster generation and generation of quantum states using high-speed optical pulses. We plan to assemble these elements to build the first optical quantum computer. Quantum computers, including optical quantum computers, are vulnerable to noise, and researchers worldwide are striving to build quantum computers that are resistant to noise. Our goal is to build a fault-tolerant optical quantum computer by around 2030 and an all-optical quantum computer integrated on a chip by around 2050. With these computers, we hope to address social issues by enabling complex, large-scale computations that have been difficult with conventional computing.

Although the above results may seem disparate and unrelated in terms of immediate application, the idea underlying them is to use light to accomplish tasks that cannot be done with electricity. That is, ultra-high-frequency and time-domain signals, which are difficult to process using electronic circuits, are processed using optical circuits; optical image information is handled as light; and highly quantum states are created using nonlinear optical elements. I believe that these technologies will become interrelated in the future in a way that enables information processing using light on a larger scale, enabling computations that are difficult with current computing technologies.
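For a sense of scale, the squeezing level of over 8 dB mentioned above can be converted into a noise ratio. The short sketch below assumes the common convention that the squeezing level in decibels is minus ten times the base-10 logarithm of the squeezed-quadrature noise variance divided by the vacuum (shot-noise) variance. The function names are hypothetical.

```python
import math


def squeezing_db_to_variance_ratio(level_db):
    """Squeezed-quadrature variance relative to vacuum noise for a level in dB."""
    return 10 ** (-level_db / 10)


def variance_ratio_to_squeezing_db(ratio):
    """Inverse conversion: variance ratio (squeezed / vacuum) to a level in dB."""
    return -10 * math.log10(ratio)


ratio = squeezing_db_to_variance_ratio(8.0)
print(f"8 dB of squeezing: noise variance is {ratio:.3f} of the vacuum level")
print(f"round trip: {variance_ratio_to_squeezing_db(ratio):.1f} dB")
```

Under this convention, 8 dB corresponds to a noise variance of roughly 16% of the vacuum level in the squeezed quadrature.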
I’m also focusing on two points regarding our future work. The first point is that it is difficult to process everything using light with current technology. Electrical and electronic circuit technology is required to superimpose information onto light or extract information from light. Even though light can compensate for tasks that are difficult for electricity to process, many tasks are conversely difficult for light to process, so those tasks must be processed by electricity. I thus believe that if we get electricity and light to complement each other, we can achieve optical computing.

The second point concerns scientific interest. The key to achieving large-scale optical computing is to use a large number of degrees of freedom of photons, and I hope that doing so will lead to the emergence of unexpected phenomena as properties of physical systems. In the case of a lens consisting of an optical metasurface with a spatially discretized phase pattern, if the spatial periodicity of the pattern is larger than the wavelength of the input light, noise called diffracted light will be generated. However, by making the periodicity shorter (finer) than the wavelength, it is theoretically possible to completely suppress diffracted light in the same manner as a smooth spherical lens. This phenomenon is not “unexpected” because it is already known; even so, it is one example of how increasing the number of degrees of freedom can lead to a qualitative change. It has also been mathematically proven that neural networks can approximate any function if the number of neurons, that is, the degrees of freedom, is infinitely large. Increasing the degrees of freedom of a system reduces constraints and may make optimization of the system easier using continuous approximations.
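The remark above about diffracted light can be checked with the textbook grating equation, sin θ_m = sin θ_i + m λ / Λ, where Λ is the period of the pattern and m is the diffraction order. The sketch below is a simplified illustration that assumes a one-dimensional periodic pattern in free space and ignores the substrate's refractive index. The function name and the numerical values are hypothetical. It lists the orders that can propagate; order 0 is the undiffracted direction.

```python
import math


def propagating_orders(period, wavelength, incidence_deg=0.0):
    """Orders m with |sin(theta_i) + m * wavelength / period| <= 1."""
    s_in = math.sin(math.radians(incidence_deg))
    # No order beyond |m| = 2 * period / wavelength can satisfy the condition.
    max_m = int(2 * period / wavelength) + 1
    return [m for m in range(-max_m, max_m + 1)
            if abs(s_in + m * wavelength / period) <= 1.0]


coarse = propagating_orders(period=2.5, wavelength=1.0)  # period > wavelength
fine = propagating_orders(period=0.8, wavelength=1.0)    # period < wavelength
print("coarse pattern:", coarse)
print("fine pattern:  ", fine)
```

At normal incidence, the pattern whose period is larger than the wavelength supports additional diffraction orders, whereas the sub-wavelength pattern leaves only order 0, which matches the qualitative change described in the text.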
Because the statistical properties of systems with many degrees of freedom become dominant, I hope that it may be possible to create new properties that cannot be obtained with a small number of degrees of freedom.

By collaborating with people from outside, we can be stimulated, learn, and take on difficult challenges

—What do you keep in mind as a researcher?

In the previous interview (January 2022 issue), I talked about leaving the judgment to natural phenomena (experimental results and people’s reactions) and focusing on things that I find interesting and fun so that my unconscious assumptions or thinking habits do not become limiting factors in my research. I believe this approach holds true not only for individuals but also for teams. Since I have more experience than the other members of my research group, I often feel the urge to offer a comment on what they are doing under the guise of offering advice. At such times, I must be careful that one comment does not halt the momentum built up by the researcher in question. I believe that it is more important for members to experience failures than for me to help them avoid failures by telling them about my experiences. Although I’m trying to restrain myself from commenting so that members are encouraged to do what they really want to do, I’m not sure that I am succeeding.

Another thing we try to do is to have each member create their own team outside of my research group. Although we have set a general theme for the research group, each researcher’s theme is so varied that we are often teased as being “a collection of individual shops.” I believe that it is important to expand our research by visiting universities and companies related to each person’s theme, finding people who are willing to work with us, and creating a collaborative system for complementing each other’s technologies.
By collaborating with people outside the company, we can identify areas of strength (or lack thereof) that we would not have noticed on our own, complement each other’s technologies, and turn our research into something more valuable. I’m most looking forward to the members of our research group being stimulated through collaboration with outside researchers. By recognizing that there are many people who have different technologies and work methods from ours and by experiencing firsthand what they are doing, we will be able to proceed with our research and development with a broader perspective without being confined to our own shell. I particularly want young researchers to avoid limiting their research to a small scale and instead approach it with a long-term perspective.

—What is your message to future researchers?

I believe that a researcher is someone who continues to take on the challenge of solving difficult problems, but sometimes we may struggle with how to solve problems and feel limited. While it is important to continue thoroughly investigating such problems, it is also important to broaden one’s horizons from time to time. Although broadening one’s horizons means increasing one’s input, it also means changing one’s perspective or the way one organizes problems. To broaden your horizons, it may be a good idea to attend technical conferences or just to get away from your desk and go to places you’ve never been to; for example, you could enjoy looking at cityscapes. Even though it may be a bit inconvenient to the other party, you could also make up an excuse to visit a business partner and meet people with whom you’ve only been in contact via email. Simply doing this may help you put the problems you are tackling into different perspectives and reveal new ways of looking at them.
I hope that by consciously looking for experiences that differ from your norm, you will be stimulated and develop an interest in different things, reexamine your own research, and effectively incorporate the knowledge as well as the perspective you gain from these experiences in your research.

■Interviewee profile

Toshikazu Hashimoto received a B.S. and M.S. in physics from Hokkaido University in 1991 and 1993, respectively, and a Ph.D. in engineering from Kyushu University in 2022. He joined NTT Photonics Laboratories in 1993 and has been researching hybrid integration of semiconductor lasers and photodiodes on silica-based planar lightwave circuits and conducting theoretical research and primary experiments on the wavefront-matching method. He is a member of the Institute of Electronics, Information and Communication Engineers and the Physical Society of Japan.
