Rising Researchers Vol. 23, No. 3, pp. 14–19, Mar. 2025. https://doi.org/10.53829/ntr202503ri1

Cutting-edge Algorithms to Overcome Combinatorial Explosion

Abstract

In recent years, faster communication speeds have accelerated the development and use of applications that process large amounts of data, creating a growing need across society for high-speed, large-volume data processing. In response to this challenge, we spoke with Distinguished Researcher Masaaki Nishino, who is researching algorithms that compress data according to its patterns and process it in that compressed form to make data processing faster and more efficient.

Keywords: algorithm, combinatorial algorithm, compression

Making the most of computer capabilities by making basic algorithms more efficient and faster

—Could you please tell us about your current research?

I am researching algorithms. Algorithm research resembles mathematics: it considers how to perform calculations using a computer. Take artificial intelligence (AI), which has recently become popular. Inside the computer, an AI's functions are carried out by repeating large matrix multiplications and additions over and over. Each application has its own specific algorithms; a typical example is a "sorting algorithm," which uses a computer to rearrange data according to certain conditions. In addition to these application-specific algorithms, there are also "basic algorithms." Basic algorithms are the foundational parts that perform calculations behind the scenes of applications, and are responsible for performing arithmetic operations, counting numbers, and finding specific combinations (Fig. 1).

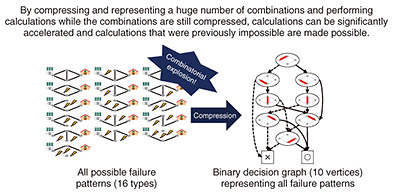
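To make the "sorting algorithm" example concrete, here is a minimal Python sketch of merge sort, one standard sorting algorithm chosen purely for illustration (the interview does not name a specific one). The `key` function stands in for the "certain conditions" by which data is rearranged; the name `merge_sort` and its signature are our own, not taken from any library.

```python
from typing import Any, Callable, List, TypeVar

T = TypeVar("T")


def merge_sort(items: List[T], key: Callable[[T], Any]) -> List[T]:
    """Return a new list ordered by key(item); stable, O(n log n) comparisons."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid], key)
    right = merge_sort(items[mid:], key)
    # Merge the two sorted halves; taking from the left on ties keeps the sort stable.
    merged: List[T] = []
    i = j = 0
    while i < len(left) and j < len(right):
        if key(right[j]) < key(left[i]):
            merged.append(right[j])
            j += 1
        else:
            merged.append(left[i])
            i += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


if __name__ == "__main__":
    records = [("c", 30), ("a", 10), ("b", 20), ("d", 10)]
    # Rearrange the records by their second field
    print(merge_sort(records, key=lambda r: r[1]))
    # [('a', 10), ('d', 10), ('b', 20), ('c', 30)]
```

Repeatedly halving the list and merging sorted halves needs O(n log n) comparisons, compared with O(n²) for a naive method such as insertion sort, which is a small instance of how the choice of algorithm, not just hardware, determines speed.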

I am researching basic algorithms, working on improvements and new ideas to handle data more efficiently. Because these basic algorithms are used so widely in apps and other software, even small improvements can lead to wide-ranging performance gains. That is what makes them so fascinating to study. Another appealing factor is how effective algorithm research can be. Thanks to improvements in semiconductors and other factors, computer processing speeds are advancing at an incredible pace. A new laptop was recently announced that was touted to be four times faster than models released three or four years ago. But algorithms are evolving even faster than that. By choosing the best algorithm from among the many computational methods available for a problem, or by developing a new one, it is possible to solve the problem not just several times but tens of thousands of times faster. This leads to major advances: problems that would previously have taken decades to solve can now be calculated in just a few days. I think this is one of the exciting aspects of researching basic algorithms. Furthermore, reducing the amount of calculation reduces energy consumption, which has a positive impact on the environment. In recent years, with the spread of AI, the large amount of electricity required to run AI has become an issue, and reducing its environmental impact has become a hot topic worldwide. Our research can reduce electricity consumption by making calculations faster and more efficient, which we believe could help address this issue.

—What prompted you to start researching algorithms?

When I first joined the company, I was involved in lifelog analysis: analyzing log data on people's behavior, such as location information, and extracting information that would be useful to each individual. This was around 2008.
Then, about three or four years after I had joined the company, my boss told me, “There’s some research into algorithms that can make data smaller. Interesting, right? Do you want to look into it?” That’s what prompted me to start researching algorithms. Since my work up to then had been analyzing data to find things in it, I thought that research into breaking huge amounts of combinatorial data down into smaller pieces and then searching for or extracting something could also be applied to lifelog data. When I actually carried out this research, I found that it addressed a very fundamental subject, and when I applied it to practical problems, I was able to do things that I couldn’t do before. I now believe that this research is fascinating and important to the world.

—Could you tell us about your research team?

Algorithms are highly general and can be used in many situations, so research on them is divided into many specialized areas. Some people are researching decision graphs, some are using them in circuit design, some are using them in AI, and some are researching the optimization techniques known as operations research. Each of them is developing new technologies as they move forward with their respective research. There are algorithm specialists in the department where I work. They do a variety of things, including creating data structures, researching improvements to decision graphs, and theoretically analyzing how general an algorithm is. We also have a team that applies algorithms to other practical problems so that the company can contribute in some way. We also place importance on finding and collaborating with research partners, both within the company and beyond.

Novel algorithms to overcome “combinatorial explosion”

—What are the unique strengths of your research?

I am researching combinatorial algorithms, which are ways of efficiently solving problems that involve huge numbers of combinations.
If you are simply choosing three sweets from a selection of ten, the number of possible combinations is not that large (120). However, if you are choosing three sweets from a selection of 100 or 1,000, the number of combinations rapidly increases (to 161,700 and 166,167,000, respectively), leading to a problem known as “combinatorial explosion” or “exponential explosion.” My research is on algorithms for situations in which you need to find the optimal combination among such a huge number of combinations, or to find out how many combinations meet certain conditions. Combinations are also relevant when designing networks, which are NTT’s main asset. Consider the structural patterns for a fault-resistant network connecting buildings and homes. Once increasingly complex conditions are added, such as finding the optimal structure within a budget, combinatorial and exponential explosions occur. To avoid combinatorial explosion in such cases, research is needed to make our calculations more efficient and faster. My research involves finding similar combinations among many combinations, grouping them together comprehensively, and thereby reducing the size of the whole; this is called “compression.” No data is discarded in this compression, so the compressed result can be used like a database, allowing us to understand the properties of the combinations and solve other complex problems. Furthermore, by performing calculations directly on the data in its small, compressed state, calculations can be made more efficient and faster. A major feature of my research is thus that it can find more accurate answers than the common “methods that cut corners in calculations” (Fig. 2).
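The combination counts above, and the gap between listing combinations one by one and counting them cleverly, can be sketched in Python. The budget problem here is an assumed toy stand-in for "finding the optimal structure within a budget": each item has a made-up integer cost, and we count the subsets whose total cost fits the budget. The brute-force version examines all 2^n subsets, while the dynamic-programming version (a textbook technique, not the compression method described in the interview) runs in O(n × budget) time. Both function names are hypothetical.

```python
from itertools import combinations
from math import comb
from typing import List


def count_within_budget_brute(costs: List[int], budget: int) -> int:
    """Count subsets with total cost <= budget by checking all 2**n subsets."""
    total = 0
    for r in range(len(costs) + 1):
        for subset in combinations(costs, r):
            if sum(subset) <= budget:
                total += 1
    return total


def count_within_budget_dp(costs: List[int], budget: int) -> int:
    """Same count by dynamic programming in O(n * budget) time.

    Assumes non-negative integer costs and a non-negative budget.
    """
    # ways[b] = number of subsets of the items processed so far costing exactly b
    ways = [0] * (budget + 1)
    ways[0] = 1  # the empty subset
    for c in costs:
        # Walk budgets downward so each item is used at most once per subset.
        for b in range(budget, c - 1, -1):
            ways[b] += ways[b - c]
    return sum(ways)


if __name__ == "__main__":
    # Choosing 3 items: 10 -> 120, 100 -> 161700, 1000 -> 166167000
    for n in (10, 100, 1000):
        print(n, comb(n, 3))

    costs = [3, 5, 2, 8, 4, 7, 1, 6, 9, 2, 5, 4]  # made-up item costs
    print(count_within_budget_brute(costs, 15), count_within_budget_dp(costs, 15))
```

Both functions return the same count, but the brute-force loop doubles its work with every added item, while the dynamic program only grows linearly in the number of items for a fixed budget.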

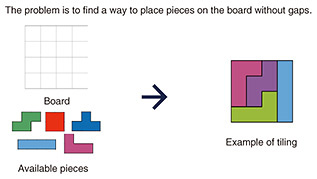
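Beyond mentioning decision graphs, the interview does not specify the team's data structures, so what follows is an assumed illustration rather than their method. A zero-suppressed decision diagram (ZDD) is one well-known decision graph for representing a family of sets: identical sub-diagrams are stored only once, so many combinations share structure. This Python sketch (the class `ZDD` and function `k_subsets` are hypothetical names) builds all 3-element subsets of 200 items, counts them directly on the compressed form, and, for a small case, enumerates every set to show that nothing was lost.

```python
from functools import lru_cache
from itertools import combinations
from math import comb
from typing import Dict, Iterator, List, Tuple


class ZDD:
    """Minimal zero-suppressed decision diagram over integer variables.

    Node 0 is the empty family; node 1 is the family containing only the empty set.
    An internal node (var, lo, hi) stands for the sets in `lo` (which omit var)
    plus the sets in `hi` with var added. Children use larger variables.
    """

    def __init__(self) -> None:
        self.nodes: List[Tuple[int, int, int]] = [(-1, 0, 0), (-1, 1, 1)]  # terminals
        self.unique: Dict[Tuple[int, int, int], int] = {}

    def make(self, var: int, lo: int, hi: int) -> int:
        if hi == 0:
            return lo  # zero-suppression: a node that never includes var is redundant
        key = (var, lo, hi)
        if key not in self.unique:  # sharing: identical sub-diagrams are stored once
            self.unique[key] = len(self.nodes)
            self.nodes.append(key)
        return self.unique[key]

    def count(self, root: int) -> int:
        """Number of sets in the family, computed on the compressed form."""
        memo: Dict[int, int] = {0: 0, 1: 1}

        def go(u: int) -> int:
            if u not in memo:
                _, lo, hi = self.nodes[u]
                memo[u] = go(lo) + go(hi)
            return memo[u]

        return go(root)

    def sets(self, root: int) -> Iterator[Tuple[int, ...]]:
        """Yield every set in the family (the compression is lossless)."""
        if root == 0:
            return
        if root == 1:
            yield ()
            return
        var, lo, hi = self.nodes[root]
        yield from self.sets(lo)
        for rest in self.sets(hi):
            yield (var,) + rest


def k_subsets(zdd: ZDD, n: int, k: int) -> int:
    """Return the root of a ZDD holding every k-element subset of {0, ..., n-1}."""

    @lru_cache(maxsize=None)
    def build(i: int, r: int) -> int:
        if r == 0:
            return 1  # nothing left to choose: just the empty set
        if n - i < r:
            return 0  # too few items remain
        # lo branch skips item i, hi branch takes it
        return zdd.make(i, build(i + 1, r), build(i + 1, r - 1))

    return build(0, k)


if __name__ == "__main__":
    z = ZDD()
    root = k_subsets(z, 200, 3)
    print(z.count(root), comb(200, 3))  # both 1313400
    print(len(z.nodes) - 2, "internal nodes")

    small = ZDD()
    r8 = k_subsets(small, 8, 3)
    assert sorted(small.sets(r8)) == sorted(combinations(range(8), 3))
```

Here C(200, 3) = 1,313,400 sets are held in at most 200 × 3 = 600 internal nodes, and `count` visits each node once. Practical ZDD libraries also provide set operations (union, intersection, and so on) that work on the diagrams without decompressing them; those are omitted from this sketch.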

Algorithm research also deals with how quickly a computer can solve problems that satisfy specified conditions. Compression itself is a difficult problem to begin with, but we have succeeded in making the compression process extremely efficient, achieving high speeds through a new calculation method called “compress and solve.” When we performed measurements on a tiling problem, we found that instances that could not be handled with existing methods could now be handled: the time to compute 190 solutions was reduced from 16,475 seconds to 0.88 seconds, a speedup of roughly 18,700 times (Fig. 3).
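The interview does not describe the tiling pieces or the internals of "compress and solve," so as a loosely related, assumed example, this Python sketch counts domino (1×2) tilings of a grid. It uses broken-profile dynamic programming: partial tilings that leave the same boundary are merged into one memoized state, a simple form of sharing identical sub-problems instead of enumerating each solution. The function name is hypothetical, and this is not the authors' algorithm.

```python
from functools import lru_cache


def count_domino_tilings(rows: int, cols: int) -> int:
    """Count the ways to tile a rows x cols grid with 1x2 dominoes.

    Cells are visited in row-major order. The memoized state is (pos, mask):
    bit j of mask is set when cell pos + j is already covered by a vertical
    domino placed earlier. Only the next `cols` cells can be covered ahead of
    time, so mask < 2**cols and there are at most (rows*cols + 1) * 2**cols states.
    """
    total = rows * cols

    @lru_cache(maxsize=None)
    def solve(pos: int, mask: int) -> int:
        if pos == total:
            return 1 if mask == 0 else 0
        if mask & 1:
            return solve(pos + 1, mask >> 1)  # cell already covered: move on
        ways = 0
        # Horizontal domino on pos and pos + 1 (same row, neighbour still free).
        if pos % cols + 1 < cols and not mask & 2:
            ways += solve(pos + 2, mask >> 2)
        # Vertical domino on pos and the cell directly below it.
        if pos + cols < total:
            ways += solve(pos + 1, (mask >> 1) | (1 << (cols - 1)))
        return ways

    return solve(0, 0)


if __name__ == "__main__":
    for n in (2, 4, 6, 8):
        print(f"{n}x{n}:", count_domino_tilings(n, n))
    # 2x2: 2, 4x4: 36, 6x6: 6728, 8x8: 12988816
```

An 8×8 board has 12,988,816 domino tilings, yet the memo holds fewer than 17,000 states, so the count is obtained without listing any individual tiling. The printed counts also show how quickly the number of combinations grows as the grid gets larger.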

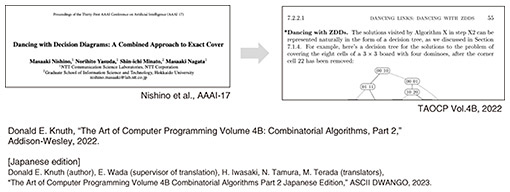

Here we used an 8×8 grid, but the larger the grid, the more combinations there are and the greater the time gap becomes. Adding more conditions makes the problems harder still. We are continuing our research while thinking about how we can use compression technology to solve these problems.

Aiming for use in a wide range of applications, including AI control

—Could you tell us about the results of this research and its future prospects?

It is important for algorithms to be used in practical applications, but recognition by academia (universities and National Research and Development Agencies such as RIKEN) and internationally is also valuable. In that regard, our combinatorial algorithm was presented over several pages in The Art of Computer Programming (TAOCP), Donald Knuth's world-renowned textbook on programming and algorithms. After that, my research on algorithms was also introduced in the Japanese edition, which made me realize that I had made a contribution to basic research (Fig. 4). We are also working on applications of our algorithms, and our research has been published in journals in various fields, so I believe we are also recognized academically for doing interesting work.

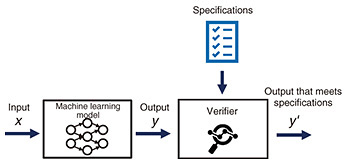

This research addresses a timeless, universal issue, so I believe it is necessary to keep deepening our understanding of its fundamental aspects. There is still a lot to be done, such as figuring out how quickly and compactly combinations can be represented, and what problems such representations can be used to solve. We also believe that our algorithms can be used in a wide range of applications. For example, when you input a question into an AI or other machine learning system, it returns an output, but the basis for that answer may be unclear or the answer may be harmful. While such systems are convenient, concerns have been raised about their dangers, and there are calls around the world for mechanisms to control AI. In Japan, too, the government is providing funding for such research. We also aim to create trustworthy AI, and are researching mechanisms that, given specifications and rules, cross-check the output against the specifications to see whether the output is appropriate (Fig. 5).
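The idea of cross-checking an output against given specifications can be illustrated with a deliberately tiny Python sketch. It assumes that each rule can be written as a predicate over a text output and that a model supplies several scored candidate outputs; the verifier reports which rules a candidate violates, and the selector returns the highest-scoring candidate that violates none. All names (`Rule`, `blacklist_rule`, `verify`, `best_valid`) are hypothetical, and the sketch does not reflect the team's actual system or its use of compression.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Rule:
    """A named specification that an output must satisfy (hypothetical format)."""

    name: str
    check: Callable[[str], bool]


def _tokens(text: str) -> List[str]:
    # Naive tokenization: lowercase, split on whitespace, trim surrounding punctuation.
    return [tok.strip(".,!?;:\"'") for tok in text.lower().split()]


def blacklist_rule(words: Sequence[str]) -> Rule:
    """Rule that rejects any output containing one of the given words."""
    banned = {w.lower() for w in words}
    return Rule("blacklist", lambda text: banned.isdisjoint(_tokens(text)))


def verify(output: str, rules: Sequence[Rule]) -> List[str]:
    """Cross-check an output against the rules; return the names of violated rules."""
    return [rule.name for rule in rules if not rule.check(output)]


def best_valid(candidates: Sequence[Tuple[str, float]], rules: Sequence[Rule]) -> Optional[str]:
    """Return the highest-scoring candidate that passes every rule, or None."""
    valid = [(score, text) for text, score in candidates if not verify(text, rules)]
    return max(valid)[1] if valid else None


if __name__ == "__main__":
    # Rules supplied per user; these two are made-up examples.
    rules = [blacklist_rule(["password"]), Rule("max_length", lambda t: len(t) <= 40)]
    candidates = [
        ("Your password is hunter2.", 0.9),
        ("Please reset it via the account page.", 0.7),
        ("I cannot help with that request because it is not allowed.", 0.5),
    ]
    for text, _ in candidates:
        print(verify(text, rules), text)
    print(best_valid(candidates, rules))  # Please reset it via the account page.
```

Because the rules are plain data separate from the model, each user could supply a different list, such as their own blacklist, without retraining the model that produces the candidates.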

This type of control can also be applied to language. Even with large language models, a certain degree of control is possible if they are designed to prevent hallucinations, a phenomenon in which AI generates information that is not based on facts. To do this, the output must be optimal and also satisfy the rules, and we believe that our compression technology can be utilized for this. If end users know that hallucinations will not occur, they will be able to use AI with confidence, so we are working with university professors who are researching machine learning, rules, and symbolic reasoning (deriving logical conclusions from prior knowledge and assumptions expressed in logical formulas and making correct decisions) to develop AI that can be used with confidence. In addition, to put this “machine learning with verifier” to practical use, we believe it is also necessary to be able to change the rules and specifications for each user. Because developing AI is very costly, only a limited number of organizations can create learning models, so users end up using ready-made models from others, such as the big tech companies or NTT, that are currently developing AI. In that case, there will certainly be demand for technology that lets you set your own specifications and blacklist and control the system according to your settings. For that reason, in our verifier-based approach as well, we formulate the problem and theoretically analyze what happens when specifications are added to the model being trained.

Whenever various phenomena or problems arise in my daily life, I unconsciously try to work out whether they are combinatorial problems or graph problems. I have been interested in and researching the combination of machine learning and algorithms for more than 10 years. Back then, no one was talking about reliability; it was enough if something just worked. Now the technology has moved beyond that phase and is being used all over the world.
As a result, we are now in an era in which guarantees are finally required, and a concept that has long been in the cards is becoming a reality. I think this project came about because I am always looking at the technology I have at hand in the hope that it can be put to use in the world.

—What are the challenges and key points in your research? What problems need to be solved?

When you start doing research, it’s tough going. At the beginning of my research into algorithms, I would make some progress only to run into a pitfall, and it felt like I wasn’t getting anywhere. There were many times when we thought compression would work but it didn’t, and processing speeds did not increase. However, as we kept running into these pitfalls, we learned to design algorithms suited to the properties of the data. Also, every time you step into a new field, you need to study that field. There are pitfalls there, too: in my recent research in new areas, it has been difficult to judge whether something has already been published, or whether it can really be written up as a paper and accepted, so there is a process of trial and error. You get used to anything if you do it often enough, but I have experienced firsthand how difficult everything is at the beginning. In addition, it is important to talk to experts in fields other than algorithms and have them evaluate our work and make judgments. At the same time, algorithms can solve problems that were previously thought to be unsolvable, so I think that as our understanding deepens, we will develop something new.

—Finally, do you have a message for students, young researchers, and business partners?

Our business partners and university professors have always been very helpful to us. Our research is possible thanks to their collaboration, and we are very grateful. I hope to continue working with them and move our research forward together. I believe that students and young researchers will have many opportunities to listen to research presentations. You can simply listen to the presentations, but I always try to ask questions.
The key is not to ask a question only because something caught your attention, but to listen from the beginning with the intention of asking one; this habit is useful in my current research. If you don’t understand what the other person is saying, you won’t notice the strange or interesting parts. When you make the effort to ask questions, your concentration naturally improves, and you can also imagine what you would do in the presenter’s situation, or why you might not have done something the way they did. A good question may help other people gain new insights and liven up the atmosphere in the room, and the presenter will also be happy to receive questions from interested listeners. I think that if you get into the habit of consciously asking questions, you will find it useful in your research.

NTT has many extremely talented researchers in a wide range of fields. The people on my team have been researching algorithms since their student days, so they have a strong foundation, and being able to exchange ideas with such talented people in the course of one’s work is one of the attractions of doing research at NTT. They also have a very understanding attitude towards research, so it’s a very easy environment to work in. We are also collaborating with NTT DATA Mathematical Systems to implement algorithms, and because we are in the same group, we were able to move the collaboration forward quickly. NTT DATA Mathematical Systems is known for its advanced technology in areas such as AI and mathematical optimization. I believe that being able to collaborate so smoothly with such a group company is something you can only do at NTT.

■Interviewee profile

Masaaki Nishino completed a master’s program in intelligent science and technology at the Graduate School of Informatics, Kyoto University in 2008. In the same year, he joined Nippon Telegraph and Telephone Corporation (NTT).
In 2014, he completed a doctoral program in intelligent science and technology at the Graduate School of Informatics, Kyoto University and obtained a Ph.D. in information science. He served concurrently as a JST (Japan Science and Technology Agency) PRESTO researcher from 2020 to 2023. In 2017, he received the Yamashita SIG Research Award from the Information Processing Society of Japan. He is engaged in research into combinatorial algorithms, data structures, and their applications, as well as machine learning.
