Feature Articles: Technical Seminars at NTT R&D FORUM 2024 - IOWN INTEGRAL

Vol. 23, No. 4, pp. 19–27, Apr. 2025. https://doi.org/10.53829/ntr202504fa1

Next Generation AI

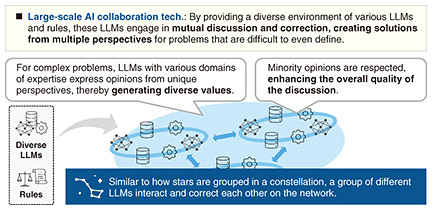

Abstract

This article presents a panel discussion on the limitations and future prospects of current large language models, based on a technical seminar conducted at “NTT R&D FORUM 2024 - IOWN INTEGRAL,” held from November 25th to 29th, 2024.

Keywords: large language model, AI constellation, multimodal AI, reasoning

1. Introduction

Matsushima: This session was initiated with the participation of WIRED at the “AI Constellation Round Table” hosted by NTT. On the basis of the concept of “Implementing Futures,” WIRED is using the term futures (plural) rather than future (singular) to emphasize that not one, but many futures are possible. It is hypothesized that artificial intelligence (AI) will continue to evolve and surpass human capabilities at a point called the “singularity.” However, current generative AI also generates “hallucinations,” i.e., information that differs from facts. Accordingly, we must continue to discuss how AI will fit into human society. Regarding this point, we will hear from each panelist about the next generation of AI and discuss the major challenge of how AI can accommodate the cultural and regional differences of our complex human society and achieve the plurality of technology.

2. Presentations

Approach for Next Generation AI: AI Constellation

Takeuchi: I am leading a group researching and developing AI algorithms. Today, I’d like to introduce the “AI constellation,” which is the concept we launched in 2023. Since the emergence of ChatGPT at the end of 2022, AI has been dominated by large language models (LLMs), and it is impossible to imagine AI without LLMs. Current LLM-based AI acquires general knowledge through large amounts of open data. In the business world, efforts to use closed-domain and in-organization data are underway. While LLMs are scaling up by using large amounts of data, the increase in their power consumption and computational costs is becoming a serious problem. There is also a concern that they will lose their individuality and that companies using them will be unable to differentiate themselves from competitors.

For these reasons, LLMs in business are moving away from giant LLMs that know everything to reasonable LLMs with specialized knowledge. Various companies are already developing original LLMs specialized in medicine, law, manufacturing, railways, and other fields. I believe that the future trend will be to use multiple LLMs with specialized knowledge in combination. In 2023, NTT launched the concept called “AI constellation,” which combines low-cost LLMs, each with expertise and individuality, to solve problems. The AI constellation is a large-scale AI-collaboration technology that enables AIs to discuss problems and correct each other’s answers in a manner that solves problems from diverse perspectives, respects minority opinions, and enhances the quality of the discussion. The name “AI constellation” comes from how a group of AIs, similar to a constellation of stars, would collaborate (Fig. 1).

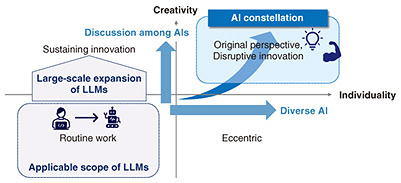

Let us think about the capabilities that the AI constellation should have based on human creativity and individuality. AI should first be able to execute routine tasks. When creativity is added to that capability, sustainable innovation is born, and when individuality is added, disruptive innovation is born. LLMs are currently applied to routine tasks in which AI replaces human work, and their area of application is expected to expand as they scale up or become more general. In contrast, the AI constellation is expected to acquire individuality by incorporating diverse AIs and to increase creativity through discussions among AIs. We believe that supporting humans rather than replacing them is one of the capabilities that AI should have (Fig. 2).

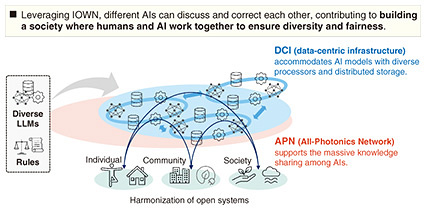

We currently envision two use cases (clearly defined user requirements and purpose of use) of the AI constellation. The first is expanding creativity and individuality of a person. When planning or deciding something, we imagine the future then work backwards. If the AI constellation provides information from diverse perspectives, it can be expected to broaden a person’s horizons. The other use case is activating community discussions and improving the quality of the discussions. For example, it is very difficult to broaden or deepen a discussion in a meeting, but adding diverse perspectives through the AI constellation can deepen the level of knowledge of participants and the discussion. At NTT R&D Forum 2024, we demonstrated multiple LLMs engaging in a discussion and introduced a workshop called “Conference Singularity” held in Omuta City, Fukuoka Prefecture, which aimed to enhance community discussions using AI (Fig. 3). During this workshop, AI was deployed to a meeting of residents for discussing actual local issues. At first, multiple AIs debated the issues with each other, then the residents debated among themselves while considering the perspectives of the AIs. The residents described the benefits of this process, e.g., “The discussion started smoothly thanks to the ideas generated by AIs from different perspectives” and “It was easy to criticize AI’s opinions, and this helped me to clarify my own opinions.”

To make the AI constellation a reality, we need to address challenges such as how to enable multiple AIs to cooperate, how to improve AI’s learning and operation, and how to reduce costs. Although current LLMs can understand a range of natural languages, they are not yet capable of understanding other types of real-world information, so improving AI so that it can understand non-linguistic information is also necessary. We want to contribute to society by providing a service environment in which people and AI can work together by using the network and computing infrastructure of the Innovative Optical and Wireless Network (IOWN) (Fig. 4).

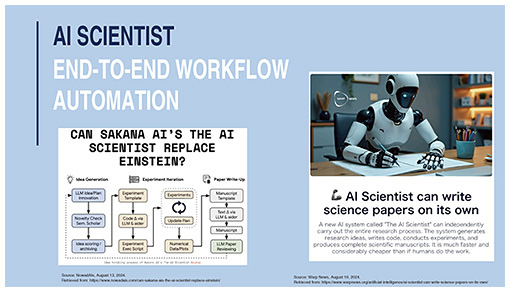

Next-generation AI: Evolutionary Model Merge

Ito: In March 2024, Sakana AI announced “evolutionary model merge,” which combines AI models. I believe evolutionary model merge embodies the AI constellation in terms of how to construct models. We believe that the next generation of AI will combine multiple small models to solve problems, achieve performance comparable to that of larger models, and calibrate (adjust) the models properly by enabling them to communicate with each other. I will discuss what kind of AI should be created and how the concept of the AI constellation represents the next generation of AI with some examples.

Some companies, such as Mistral in France, can construct a model from scratch 20% to 30% more efficiently than OpenAI. To achieve 99.999% efficiency, instead of starting from scratch, it is better to combine the strengths of current models. However, it is not possible to construct models surpassing human knowledge with this method. Therefore, we use a method called “Frankenmerging,” which connects different layers from multiple models to construct a new model. We constructed 10,000 different models using this method, selected the 10 models with the best performance, and discarded the rest. We then combined these 10 models to construct another 10,000 models, which became the second-generation models. By repeating this process for up to 999 generations, we were able to construct a model with performance equivalent to that of GPT-3.5 in just 24 hours for 24 dollars. This achievement was an interesting and significant realization for us.

Constructing a model simply by training it on data has limits, and even if the model’s performance improves by learning from more data, that improvement will not be worth the cost. Therefore, there is a growing trend to construct models in a more sustainable manner using a technique called “reasoning,” which enables models to communicate with each other to refine their accuracy and decision-making.

Current AIs, such as ChatGPT, do not have the accuracy to solve all problems instantly; in fact, they can execute only some translation and summarization tasks and reduce the workload of a call center, for example. However, some practical AI technologies that can bring about the innovative future depicted in WIRED or science-fiction stories have emerged. One of them is “workflow automation,” which is a technology for automating a task divided into multiple processes all at once.

We applied this workflow automation to the task of writing a scientific paper. In the usual steps for completing this task, a professor advises a junior researcher by saying, “Try writing a paper like this one.” The researcher then thinks of 100 interesting ideas and goes to a library to investigate them. The researcher discovers that 95 of the ideas have already been verified, so the researcher verifies the remaining 5 ideas, creates figures and diagrams, and writes the paper. We demonstrated that the whole paper-writing procedure can be done by AI in the paper “The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery,” published on August 5, 2024 (Fig. 5). This paper was the first on AI to be featured in the journal Nature. As for the method we used, we input 100 questions to 100 different foundation models and calibrated the obtained 100 ideas by using an agent function. Going forward, we will continue to challenge ourselves to construct and use interesting models while using the idea of the AI constellation.
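
The generate, select, and recombine loop that Ito describes for evolutionary model merge can be sketched as a small genetic algorithm. This is only an illustration under stated assumptions, not Sakana AI's implementation: each "model" is reduced to a hypothetical genome that records which source model supplies each layer, the `fitness` function is a toy stand-in for a real benchmark score, and the population size, elite size, and mutation rate are arbitrary choices that are much smaller than the 10,000 models and 10 survivors mentioned in the talk.

```python
import random

NUM_LAYERS = 8          # layers in each merged model (assumed for illustration)
NUM_SOURCE_MODELS = 3   # source models that can donate a layer (assumed)
# Toy optimum: the layer assignment that the stand-in benchmark rewards.
TARGET = [i % NUM_SOURCE_MODELS for i in range(NUM_LAYERS)]


def fitness(genome):
    """Toy stand-in for a benchmark score: number of layers matching TARGET."""
    return sum(1 for g, t in zip(genome, TARGET) if g == t)


def random_genome(rng):
    """Pick a random source model for every layer."""
    return [rng.randrange(NUM_SOURCE_MODELS) for _ in range(NUM_LAYERS)]


def crossover(a, b, rng):
    """Splice the lower layers of one parent onto the upper layers of another."""
    cut = rng.randrange(1, NUM_LAYERS)
    return a[:cut] + b[cut:]


def mutate(genome, rng, rate=0.1):
    """Occasionally swap a layer's source model at random."""
    return [rng.randrange(NUM_SOURCE_MODELS) if rng.random() < rate else g
            for g in genome]


def evolve(population_size=200, elite_size=10, generations=30, seed=0):
    """Keep the best few candidates each generation and breed the rest from them."""
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        elites = sorted(population, key=fitness, reverse=True)[:elite_size]
        best_per_generation.append(fitness(elites[0]))
        children = [
            mutate(crossover(rng.choice(elites), rng.choice(elites), rng), rng)
            for _ in range(population_size - elite_size)
        ]
        # Elites survive unchanged, so the best score never decreases.
        population = elites + children
    return max(population, key=fitness), best_per_generation


if __name__ == "__main__":
    best, history = evolve()
    print("best layer assignment:", best)
    print("best score per generation:", history)
```

In a real setting, the expensive part would be the evaluation step, since every candidate would have to be assembled and benchmarked; the selection and recombination logic itself stays this simple.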

NextGen AI and Digital Game AI: Building a Smart City with Three Types of Game AI

Miyake: I will discuss the gaming industry and digital game AI. The gaming industry is still considered new. It started attracting attention in 2000, and I entered the industry around 2004. I would first like to talk about the three types of game AI, i.e., meta AI, character AI, and spatial AI, each of which has the following roles.

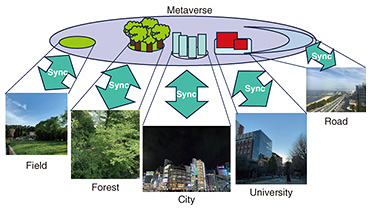

Meta AI can be combined with generative AI, character AI can be combined with language AI, and spatial AI can be combined with spatial computers. To apply these three types of game AI to real space, we, at the University of Tokyo, are constructing a system of a smart city (a city that aims to optimize and improve the efficiency of urban functions by using cutting-edge digital technology and information) by combining these three types of AI: meta AI that governs the entire city, character AI that operates within the city, and spatial AI that understands the spatial conditions in the city. I will now explain spatial AI, which will become key in the future, and meta AI. Spatial AI acquires spatial information at specific locations in real space and passes it to various AIs or sends it to a digital-twin metaverse (a virtual space in which digital twins are constructed) (Fig. 6). Other techniques for embedding AI into the environment are available. In games, objects, such as doors, are AI and assist the movement of characters, and we are creating a smart-city system by building on these types of AI.

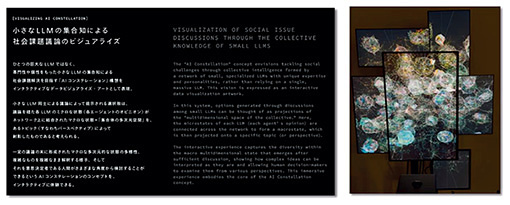

Meta AI attempts to understand humans. For example, by attaching various devices to users and acquiring their biometric information, meta AI can determine their psychological state. This applies not only to games but also to real space. Meta AI can also create games such as 3D Dungeon. Until now, 100% of game content has been created by humans. If meta AI creates 80% of it with the help of generative AI, and the remaining 20% is created by humans according to their intent, it will be possible to create a wide variety of content and games. To change the game space and real space with the above-mentioned three types of AI, it is first necessary to simulate human behavior, product flow, and nature in the virtual space and then feed the results back to the real space. The future role of meta AI will be to combine the real and virtual spaces and use the metaverse as AI. Agents (which integrate data) for connecting systems and humans will also be necessary, and I believe that we will see a future in which agents can converse with AI, the same as with the AI constellation.

3. Discussion

Using the metaverse to bring game AI back into the real space and the potential of the AI constellation

Matsushima: I think that one of the challenges in AI research is how AI interacts with physical space and humans. How do you think we can bring things from the game space into the real world? How do you see the possibility of the AI constellation in overcoming this challenge?

Takeuchi: When we were initially thinking of the AI constellation, we focused on LLMs, so it was a general concept of the world that is understood and expressed in natural language. However, when we actually tested the AI constellation in the field, as we did at the Conference Singularity held in Omuta City, AI often could not deal with all the information we gave it, and even when we gave it numerical data, it could not understand them. For example, if an AI proposes to create a café, since it cannot handle spatial information, it cannot incorporate aspects such as people’s movement in the real world into its proposal, so the proposal might not be valid. I think that both virtual space and LLMs have a common issue, that is, it is difficult to deepen a discussion without grounding the models with correct knowledge (through the intersection of knowledge).

Ito: I think that Mr. Miyake’s discussion on digital twins is a method of the future that does not jump straight to physical space. AI has no physical elements, so it is easier to implement it when all the answers exist in the computer. For example, automating mortgage processes at financial institutions can be done by AI calculation. However, when we create AI for building an airplane, if there are physical processes involving human craftsmanship, such as planishing the wings by hammering, current AI cannot handle such tasks. To enable AI to come up with a solution that takes into account the physics of the real world, an intermediate step between real and virtual spaces is needed. Digital twins are essential for handling that intermediate step. A digital twin is the only way to create a loop with which robotics and self-driving cars can understand the space around them, store knowledge to deal with physical obstacles, and feed it back to AI. A learning loop that brings that knowledge back into the real space is also important. Because returns on the amount of data input to an LLM are diminishing, models for modalities other than language, such as models for time-series data and signal comprehension, are also needed, and I believe that combining such models for various modalities will produce outstanding results.

Miyake: It is not an exaggeration to say that the AI constellation has great potential. Part of my job involves participating in numerous meetings, which requires a lot of energy, and the conclusion of those meetings often depends on the members present and how the day unfolds. I’ve always felt uncomfortable about this state of affairs, and I think that we should actually hold different versions of a meeting, for example, by changing or omitting certain members, and that approach is the solution that company management wants. By applying AI with this approach, we can hold a meeting and gather 1000 diverse opinions, discard 999 conclusions from those opinions, and keep the best conclusion, which is what we really need. I feel the AI constellation has great potential to enable us to obtain what we really need but are aware of only subconsciously or vaguely.

Expanding the possibilities of meetings by incorporating multimodal AI

Matsushima: When we want to know the results of 1000 meetings, wouldn’t it be better to consolidate them into a constellation rather than a single LLM? Could the inclusion of multimodal elements lead to even greater possibilities?

Takeuchi: LLMs can still do many things. When multiple LLMs discuss problems in a meeting, they can come up with solutions from a variety of perspectives by creating a large number of branches of discussion that humans cannot. Reasons that meetings may not go well include lack of time or data or inability to gather the necessary stakeholders. Future social issues require future stakeholders, but LLMs can be used to some extent to replicate those stakeholders. However, when an issue of marine resources is discussed, for example, it is necessary to consider the perspective of the organisms that live in the ocean, and that perspective cannot be reproduced with current LLMs. Opinions, including those from the perspectives of time-series data analysis and spatial data analysis, must be collected logically. Going forward, I think that in addition to LLMs, information from multimodal sources will be necessary.

Matsushima: LLMs are good at executing routine tasks, but the AI Scientist needs to demonstrate creativity and generate new knowledge to write a scientific paper. To what extent will it actually be possible to achieve these capabilities by merging models or using multimodal AI?

Ito: The Conference Singularity in Omuta City is a very good way to use AI. What’s interesting about it is that even if we didn’t gather 1000 stakeholders, AI could generate 1000 different, multifaceted ideas and simulate the reactions of the stakeholders to those ideas. LLMs are very good at giving plausible answers. I’ll explain this capability using a bell curve. The basic mechanism of an LLM is that 1000 answers form a bell curve, on which the majority of answers are clustered around the median. Both the expected and unexpected answers returned by ChatGPT are around the median on a bell curve. In the Omuta City case, the 1000 solutions given by the LLMs are predictable ones concentrated around the median. This mechanism is also effective in preventing hallucination, and I believe that it is more effective to construct a model with a large parameter set and use a value near the median on the bell curve as the solution than to develop AI with few hallucinations. Regarding the AI Scientist, an agent function that integrates answers calibrates the median position on a bell curve, but human intent is also incorporated into the calibration. Specifying a solution that is not at the median can yield unexpected and interesting responses. Changing the median position on a bell curve is the essence of AI calibration and one of the points we are aiming for. I think it would be interesting to repeat a discussion between LLMs while shifting the median position of the solution on the bell curve and then giving feedback to the discussion.

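
Ito's bell-curve picture can be made concrete with a small numerical sketch. The code below is an illustration of the analogy only and does not describe how an LLM actually samples text: each of the 1000 "answers" is reduced to a hypothetical scalar score drawn from a normal distribution, `pick_at_quantile` with `q=0.5` returns the typical answer at the median, and moving `q` away from 0.5 corresponds to deliberately asking for a solution that is not at the median. All function names and parameters here are assumptions made for the example.

```python
import random
import statistics


def sample_answers(n=1000, seed=0):
    """Draw n hypothetical answer scores from a standard normal distribution."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def pick_at_quantile(scores, q=0.5):
    """Return the score at quantile q (0.5 is the median, near 1.0 is the upper tail)."""
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must be between 0 and 1")
    ordered = sorted(scores)
    index = min(int(q * len(ordered)), len(ordered) - 1)
    return ordered[index]


def fraction_near_median(scores, width=1.0):
    """Share of answers whose score lies within `width` of the median."""
    med = statistics.median(scores)
    return sum(1 for s in scores if abs(s - med) <= width) / len(scores)


if __name__ == "__main__":
    scores = sample_answers()
    print("share of answers near the median:", fraction_near_median(scores))
    print("typical answer (q=0.5):", pick_at_quantile(scores, 0.5))
    print("shifted answer (q=0.95):", pick_at_quantile(scores, 0.95))
```

Repeating the selection with a different `q` in each round is one simple way to picture the "shifting the median position" that Ito suggests for discussions between LLMs.
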
Takeuchi: Inspired by Audrey Tang’s idea that “AI should be ‘assistive intelligence’ rather than ‘artificial intelligence,’” I envision a future in which AI helps people express their individuality and creativity. From the perspective of the AI Scientist, how will AI assist human actions?

Ito: At the moment, AI is used to help people with routine tasks rather than achieve innovation. Its future direction is not yet clear regarding the AI Scientist. I think AI should not be developed by imagining a dream like a science-fiction movie; instead, AI should be developed realistically with our feet firmly on the ground. It is important to spread uses of AI worldwide that are more advanced and realistic than simply copying and pasting responses from ChatGPT, and to enable people to recognize the effectiveness of using AI. Therefore, for now, I think we should dig deeper into executing routine tasks with AI.

Matsushima: In games, the aforementioned three types of game AI work in unison to create one major turning point every day to keep the players from getting bored. Can that knowledge be applied to meetings?

Miyake: Meta AI is based on the concept of turning the game into an AI, so the game changes as it understands the human players. To use a meeting as an analogy, meta AI can play the role of a moderator that moves the discussion forward by, for example, offering a breakthrough when the discussion stalls or reminding the participants that they are re-discussing the same topic.

The relationship between AI and humans in a future in which AI is all around us

Matsushima: Since today’s theme is the next generation of AI, I’d like to ask the three of you how you see the relationship between AI and humans developing.

Takeuchi: AI is diverse and is similar to the Shinto belief of “eight million gods,” each with its own spirit. The “system of systems” (technology that links multiple independent systems) envisioned in the information-system industry is now becoming a reality through the power of generative AI. I feel that a paradigm shift, through which more realistic and advanced services can be provided all at once by using generative AI, is about to occur.

Ito: In current practical use cases, AI makes people’s lives easier. Starting from that point, AI has become capable of reasoning. I think the next step is for AI to become a partner for human brainstorming by providing inspiration. Beyond that, there might be a future in which everything is automated by AI; however, I think AI should first make the world more convenient. AI can then become a partner for human brainstorming; this is an interesting division of roles between humans and AI.

Miyake: Some games enable the players to look back on their journey to the end with a flowchart. In the real world, if participants reach a branch point during a meeting, for example, once they choose a branch, they cannot go back; however, if they have a flowchart and can go back to the branch point, they may be able to come to a different conclusion. If AI holds 1000 meetings before people hold a meeting, it can suggest to people a direction toward a conclusion. Conducting a meta-simulation in this way will lead us to a better future. I think that this capability will help avoid a future in which we have no choice but to make decisions on the basis of the circumstances that arise on that day.

Matsushima: I’ll now summarize this discussion: AI makes vague ideas a reality, reasoning is key, and AI assists a pluralistic future. I believe that the discussion has revealed the true form of the next-generation AI, which should go beyond the buzzword of generative AI. We are currently in the middle of shaping the next generation of AI, so we will not reach any conclusions today, but I believe it is important to continue having these discussions.

Experience the future of AI through a work of art

Takeuchi: The concept of the AI constellation is shown as a work of art that depicts AIs integrating with one another (Fig. 7). A very complex social issue is located in the middle, and since each stakeholder has a completely different opinion, the issue is first divided into several sub-issues, which are discussed by multiple AIs.

I believe that it will become increasingly necessary for us to look at issues deeply by looking at them from various perspectives. I hope that while experiencing this work of art, we think about the next generation of AI together.
