
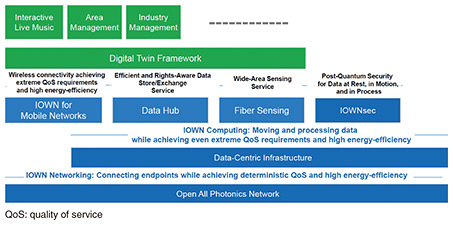

Feature Articles: Frontlines of IOWN Development—Development of IOWN Hardware and Software and Social Implementation Activities

Vol. 23, No. 7, pp. 36–42, July 2025. https://doi.org/10.53829/ntr202507fa4

Data-centric Infrastructure for Enabling Practical Use of IOWN

Abstract

NTT Software Innovation Center (SIC) is working on implementing the concept known as the Innovative Optical and Wireless Network (IOWN). This article introduces SIC’s initiatives for developing data-centric infrastructure (DCI), focusing on the DCI exhibits at NTT R&D Forum 2024 and FUTURES Taipei 2024 as well as the DCI reference implementation models that were documented and published at the IOWN Global Forum.

Keywords: IOWN, data-centric infrastructure (DCI), DCI cluster reference implementation model

1. Introduction

NTT has been working to implement the IOWN concept, and SIC is focusing its efforts on implementing DCI, one of the essential foundations of the overall IOWN architecture. This article introduces the DCI exhibits at NTT R&D Forum 2024 and FUTURES Taipei 2024 and explains the DCI reference implementation models that were documented and published at the IOWN Global Forum.

2. Background of initiatives

At the IOWN Global Forum, DCI was proposed as an information and communication technology infrastructure that takes advantage of the high-speed, low-latency characteristics of the IOWN All-Photonics Network (APN). In the overall architecture defined by the IOWN Global Forum, DCI is positioned as a foundation layer that enables highly efficient data processing in distributed datacenter environments and heterogeneous computing environments (Fig. 1). SIC led the IOWN Global Forum discussions on documenting reference implementation models, and these discussions resulted in a document published in March 2025 [1].

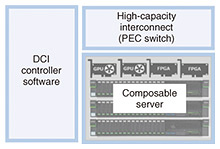

NTT defines DCI as consisting of three elements: DCI controller software, photonics-electronics-convergence (PEC) switches, and composable servers. We have been building an ecosystem in collaboration with vendors of composable-server systems and devices (Fig. 2). The results of our integration and demonstration experiments with composable-server products to date were exhibited at NTT R&D Forum 2024 [2] and FUTURES Taipei 2024 (a public event hosted by the IOWN Global Forum) [3], and these exhibits have helped strengthen engagement with IOWN Global Forum members toward building an ecosystem.

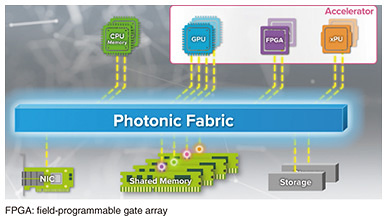

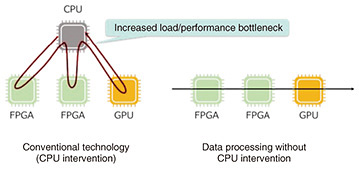

NTT has previously conducted proof-of-concept (PoC) tests using DCI in the use case of video analysis in cyber-physical systems, and the PoC results indicated the effectiveness of a data-processing pipeline using accelerators [4] and the usefulness of DCI-as-a-Service [5]. We have also released, as open-source software, a reference implementation demonstrating the concept of DCI controller software [6].

One of the advantages of DCI is that it enables highly efficient data processing with accelerators. Conventional data processing is mainly performed in software on the host central processing unit (CPU), whereas computationally expensive processing is better handled by accelerators suited to the data and workload. Typical examples are graphics-processing units (GPUs) for artificial intelligence (AI) and video processing, and smart network interface cards (SmartNICs), infrastructure-processing units (IPUs), and data-processing units (DPUs) for network communications. Matching accelerators to the workload in this way can make data processing more efficient. However, in conventional computing infrastructure, which uses the server as its basic unit, accelerators are tied to individual servers, so how effectively they are used depends on the data and workload that each server handles, and this dependence leads to waste. To address this issue, the basic concept of DCI is to achieve highly efficient data processing by appropriately controlling PEC switches and composable servers with DCI controller software [7]. Composable servers, which handle data processing, use PEC and disaggregation technology to form a computing-resource pool that extends beyond the boundary of a single server, enabling data processing with accelerators suited to the data and workload (Fig. 3).

In addition, by designing communication and a series of data-processing operations that proceed autonomously without involving the CPU, the accelerators can take charge of data processing, which increases processing speed and improves power efficiency (Fig. 4).
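
The pooling idea behind composable servers (Fig. 3) can be illustrated with a minimal Python sketch. It assumes a pool of devices that can be attached to and released from logical servers, and it picks accelerators by workload type using the examples given above. The names `ResourcePool`, `Device`, `allocate`, and `release` and the workload-to-accelerator table are hypothetical; this is not the interface of NTT’s DCI controller software.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

# Hypothetical mapping from workload type to suitable accelerator kinds,
# following the examples in the text: GPUs for AI and video processing,
# SmartNICs, IPUs, and DPUs for network communications.
SUITABLE_KINDS = {
    "ai": ("GPU",),
    "video": ("GPU",),
    "network": ("SmartNIC", "IPU", "DPU"),
}


@dataclass
class Device:
    device_id: str
    kind: str
    assigned_to: Optional[str] = None  # None means the device is free in the pool


@dataclass
class ResourcePool:
    devices: list[Device] = field(default_factory=list)

    def allocate(self, server: str, workload: str, count: int = 1) -> list[Device]:
        """Attach `count` free devices suited to `workload` to a logical server."""
        kinds = SUITABLE_KINDS[workload]
        free = [d for d in self.devices if d.assigned_to is None and d.kind in kinds]
        if len(free) < count:
            raise RuntimeError(f"not enough free {kinds} devices for {server}")
        chosen = free[:count]
        for d in chosen:
            d.assigned_to = server
        return chosen

    def release(self, server: str) -> None:
        """Return every device attached to `server` to the shared pool."""
        for d in self.devices:
            if d.assigned_to == server:
                d.assigned_to = None


if __name__ == "__main__":
    pool = ResourcePool([Device("gpu0", "GPU"), Device("gpu1", "GPU"), Device("dpu0", "DPU")])
    video = pool.allocate("server-a", "video", count=2)  # both GPUs
    net = pool.allocate("server-b", "network")  # the DPU
    print([d.device_id for d in video], [d.device_id for d in net])
    pool.release("server-a")  # the GPUs return to the pool for other workloads
```

In this sketch, released devices go back to the shared pool instead of staying inside one server, so a GPU that is idle for one workload can be reassigned to another, which is the kind of waste the server-as-unit model makes hard to avoid.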

3. Initiatives for strengthening engagement to build an ecosystem

The composable servers that execute data processing in DCI consist of resource pools and compute servers built from Peripheral Component Interconnect Express (PCIe)/Compute Express Link (CXL) fabric switches, PCIe/CXL expansion boxes, and PCIe- or CXL-attached accelerators; PCIe and CXL are both commercially available technologies. We believe it is important that composable servers can be configured from commercial products from multiple vendors that implement these technologies, both to take advantage of advanced technologies and to ensure continuous operation. We are therefore strategically promoting the creation of an ecosystem in collaboration with external research institutes and system/product vendors, and we have strengthened our engagement with system integrators and device manufacturers by exhibiting our results at NTT R&D Forum 2024 and FUTURES Taipei 2024.

4. NTT R&D Forum 2024

“NTT R&D FORUM 2024 - IOWN INTEGRAL” was held over five days, from November 25 to 29, 2024, and NTT’s DCI initiatives were exhibited under the title “Efficient utilization of accelerators and connection technologies with DCI.” To put DCI into practical use, NTT has been strategically promoting the adoption of DCI by multiple vendors (vendor diversification) at both the composable-server system level and the component (device) level. To demonstrate the results of building a DCI ecosystem in collaboration with system and device vendors, we exhibited two actual composable-server systems, one of which combined commercially available products from multiple vendors, together with a mock-up of a PEC switch equipped with PEC devices (PEC-2) (Fig. 5).
In most optical transmission equipment, a large number of pluggable optical transceivers are arranged on the front panel, so the electrical paths on the motherboard between the switch ASIC (application-specific integrated circuit) and the transceivers are long, and their transmission loss increases as signal speeds rise. Placing PEC devices near the switch ASIC instead shortens these electrical paths, which reduces the total power consumption of the system and allows more optical fibers to be accommodated on the front panel.

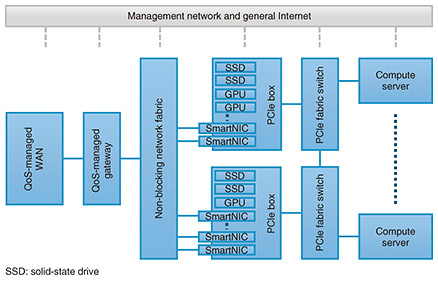

NTT aims to implement DCI incorporating PEC devices in preparation for “IOWN 2.0,” whose roadmap began in 2025. In the keynote speech at the forum, it was announced that a server that reduces power consumption to 12.5% of the current level would be installed at the NTT Pavilion at Expo 2025 Osaka, Kansai, Japan, where visitors could experience how the system works. A roadmap was also presented for ultimately reducing power consumption to 1% of the current level through gradual evolution: commercialization in 2026, optical inter-chip communication in 2028, and optical communication within semiconductor chips from 2032 onward.

5. FUTURES Taipei 2024

For composable servers, we place importance on building an ecosystem in collaboration with system and device vendors both in Japan and overseas. For example, at FUTURES Taipei 2024 [3], a public event held in conjunction with the IOWN Global Forum member meeting, we exhibited a composable server jointly designed and verified by Taiwan’s Industrial Technology Research Institute (ITRI) and NTT, and presented it through videos and posters. We will continue to share the results of our studies on the architectural design and specifications of composable servers through exhibitions and community activities to strengthen engagement with system and device vendors and promote the construction of an ecosystem.

6. IOWN Global Forum activities related to DCI

The preceding sections described our efforts to build an ecosystem of composable servers that handle data processing in DCI. However, several issues remain to be addressed, namely connection to wide area networks (WANs) such as NTT’s APN, scale-out, and geographical distribution, all of which DCI will need in the future. These higher-level functions are also being studied at the IOWN Global Forum [8], where SIC is leading the discussions.
Based on these discussions, the IOWN Global Forum compiled and published a document outlining four implementation models for compute clusters [1]. Each of these models is introduced below.

6.1 Model 1: Configuring logical servers from physical devices

The model shown in Fig. 6 assumes that servers are logically configured from physical devices (accelerators and storage) housed in a resource pool. This server-configuration technology is also used in the composable-server system introduced in this article. A fabric that carries bus frames is introduced to configure the logical servers, and a separate Ethernet network with controllable quality enables applications to communicate between logical servers or between servers and gateways. High-performance SmartNICs are located in the resource pool and can be assigned to servers on demand, either for connections across WANs or for local connections using the bus fabric.
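
One way to picture Model 1 is as a bus-fabric switch whose device-facing ports are bound to host-facing ports to form logical servers. The following Python sketch assumes a single switch with hypothetical port and device names; it does not model the separate Ethernet network for application traffic, and the `FabricSwitch` binding interface is an illustration rather than anything specified in the IOWN Global Forum document.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FabricSwitch:
    """Hypothetical bus-fabric switch with host-facing and device-facing ports."""
    host_ports: set[str]
    device_ports: dict[str, str]  # device-facing port -> device type in the pool
    bindings: dict[str, str] = field(default_factory=dict)  # device port -> host port

    def bind(self, device_port: str, host_port: str) -> None:
        """Make the device on `device_port` visible to the host on `host_port`."""
        if host_port not in self.host_ports:
            raise ValueError(f"unknown host port {host_port}")
        if device_port not in self.device_ports:
            raise ValueError(f"unknown device port {device_port}")
        if device_port in self.bindings:
            raise RuntimeError(f"{device_port} is already bound to {self.bindings[device_port]}")
        self.bindings[device_port] = host_port

    def unbind(self, device_port: str) -> None:
        """Detach a device so that it returns to the shared pool."""
        self.bindings.pop(device_port, None)

    def logical_server(self, host_port: str) -> dict[str, str]:
        """Devices that currently make up the logical server behind `host_port`."""
        return {p: self.device_ports[p] for p, h in self.bindings.items() if h == host_port}


if __name__ == "__main__":
    sw = FabricSwitch(
        host_ports={"host-1", "host-2"},
        device_ports={"d0": "GPU", "d1": "NVMe SSD", "d2": "SmartNIC"},
    )
    sw.bind("d0", "host-1")
    sw.bind("d1", "host-1")
    sw.bind("d2", "host-1")  # SmartNIC assigned on demand, e.g., for a WAN connection
    print(sw.logical_server("host-1"))
    sw.unbind("d2")
    sw.bind("d2", "host-2")  # the same SmartNIC is reassigned to another server
    print(sw.logical_server("host-2"))
```

In the usage example, the SmartNIC first belongs to the logical server on `host-1` and is then reassigned to `host-2` without any hardware being physically moved.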

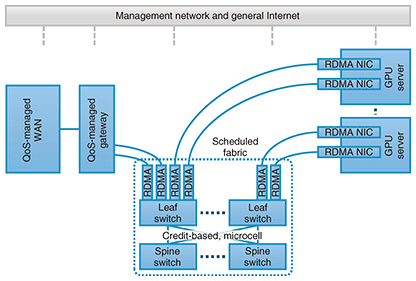

6.2 Model 2: Scheduled fabric for quality of service

The model shown in Fig. 7 focuses on the configuration of the network between the WAN connection gateway and the servers. It assumes both communication between GPUs within a large-scale GPU cluster for AI training and GPU communication across the WAN. The network is divided into two layers. In the first (leaf) layer, switches are directly connected to each GPU, and the GPU communication rate is controlled using a common remote direct memory access (RDMA) protocol. These leaf switches break each packet into cells, which are sprayed across switches in the second (spine) layer for load balancing. This process is managed by a separate in-fabric control protocol that ensures incast congestion does not occur at the packet destination.
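
The cell-based load balancing of Model 2 can be sketched in Python as follows. Segmentation into cells, round-robin spraying over spine switches, and reordering at the destination leaf follow the description above. The article does not specify the in-fabric control protocol, so the scheduler here assumes a simple credit-style scheme in which the egress side grants at most a fixed number of cells per time slot; the cell size and function names are likewise hypothetical.

```python
from __future__ import annotations

from collections import deque
from itertools import cycle

CELL_BYTES = 256  # hypothetical fixed cell size


def segment(packet: bytes, cell_bytes: int = CELL_BYTES) -> list[tuple[int, bytes]]:
    """Ingress leaf: break a packet into (sequence number, payload) cells."""
    return [(seq, packet[off:off + cell_bytes])
            for seq, off in enumerate(range(0, len(packet), cell_bytes))]


def spray(cells: list[tuple[int, bytes]], num_spines: int) -> list[tuple[int, tuple[int, bytes]]]:
    """Packet spraying: distribute consecutive cells round-robin over spine switches."""
    spines = cycle(range(num_spines))
    return [(next(spines), cell) for cell in cells]


def reassemble(cells: list[tuple[int, bytes]]) -> bytes:
    """Egress leaf: restore the packet even if cells arrive out of order."""
    return b"".join(payload for _, payload in sorted(cells))


def schedule_to_egress(queues: dict[str, deque], egress_cells_per_slot: int) -> list[list]:
    """Assumed credit-style scheduling: the egress grants at most
    `egress_cells_per_slot` cells per time slot, round-robin across ingress
    leaves, so the destination is never offered more than it can drain."""
    order = deque(queues)
    slots = []
    while any(queues.values()):
        granted = []
        idle = 0  # consecutive ingress leaves found with nothing to send
        while len(granted) < egress_cells_per_slot and idle < len(order):
            src = order[0]
            order.rotate(-1)
            if queues[src]:
                granted.append((src, queues[src].popleft()))
                idle = 0
            else:
                idle += 1
        slots.append(granted)
    return slots


if __name__ == "__main__":
    # Three leaves each send a 1,000-byte packet to the same destination (incast pattern).
    queues = {leaf: deque(segment(bytes(1000))) for leaf in ("leaf-a", "leaf-b", "leaf-c")}
    for t, slot in enumerate(schedule_to_egress(queues, egress_cells_per_slot=4)):
        print(t, [src for src, _ in slot])
```

Because cells are admitted only when the destination has granted capacity, simultaneous senders are spread over several time slots instead of overflowing the egress buffer.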

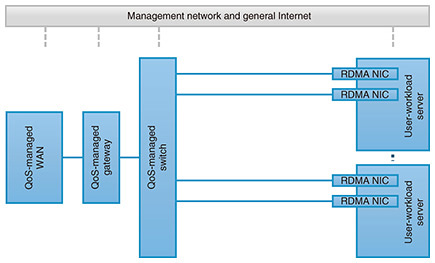

6.3 Model 3: Scaling down to a few servers and one switch

The model shown in Fig. 8 is a configuration in which a small number of servers are connected to a WAN with quality of service (QoS) management. The main envisioned use case is fast migration of virtual machines over long distances. The model covers the local network and the RDMA-capable hardware required by the virtual machines and outlines the protocols that are expected to be adopted. It also suggests candidate transceivers and cable types for local communication.
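
Why bandwidth, QoS, and RDMA-capable hardware matter for this use case can be seen from a simplified model of pre-copy live migration, a widely used technique that the article does not itself specify. The Python sketch below assumes a constant dirty-page rate and adds one round-trip time per copy round; the function name and all parameter values are hypothetical.

```python
from __future__ import annotations


def precopy_migration(mem_bytes: float, bw_bytes_per_s: float, dirty_bytes_per_s: float,
                      rtt_s: float = 0.0, stop_bytes: float = 64 * 2**20,
                      max_rounds: int = 30) -> tuple[float, float, int]:
    """Estimate (total time, downtime, pre-copy rounds) for iterative pre-copy
    live migration under a constant dirty-page rate (simplified model)."""
    to_send = float(mem_bytes)  # the first round copies all memory while the VM keeps running
    total = 0.0
    rounds = 0
    while to_send > stop_bytes and rounds < max_rounds:
        t = to_send / bw_bytes_per_s + rtt_s  # copy time plus one round trip per round
        total += t
        to_send = min(mem_bytes, dirty_bytes_per_s * t)  # memory dirtied during this round
        rounds += 1
    downtime = to_send / bw_bytes_per_s + rtt_s  # final stop-and-copy with the VM paused
    return total + downtime, downtime, rounds


if __name__ == "__main__":
    GB = 1e9
    for gbps in (10, 100):
        total, down, rounds = precopy_migration(8 * GB, gbps * 1e9 / 8, 1.0 * GB, rtt_s=0.01)
        print(f"{gbps} Gbit/s: total {total:.2f} s, downtime {down * 1000:.1f} ms, {rounds} rounds")
```

Under this model, each round must resend the memory dirtied during the previous one, so migration converges quickly only when the transfer bandwidth comfortably exceeds the dirty-page rate, and a longer round-trip time over long distances lengthens every round.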

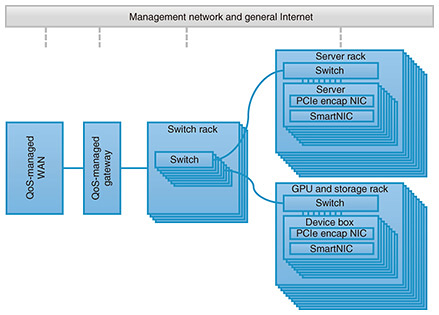

6.4 Model 4: Scaling up to large clusters

The model shown in Fig. 9 provides a huge resource pool from which logical servers can be composed on demand, using a fabric consisting of Ethernet switches and NICs specialized for encapsulating bus communication in Ethernet packets. The network that forwards encapsulated bus frames and the network that carries general application Ethernet traffic can be either separate or integrated; in the integrated case, every switch requires QoS-management functions. A dedicated encapsulation NIC must be installed in each server, and the physical devices to be dynamically allocated to servers are housed in dedicated device boxes.
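
The encapsulation in Model 4 can be illustrated with Python’s `struct` module. The header layout below (a sequence number and a length after the EtherType) is a hypothetical format, the IEEE 802 local experimental EtherType 0x88B5 is used only as a placeholder, and the bus frame is treated as opaque bytes. The hypothetical `traffic_class` function hints at how a switch in the integrated network could separate encapsulated bus traffic from general application traffic for QoS management.

```python
from __future__ import annotations

import struct

# IEEE 802 local experimental EtherType, chosen here only for illustration.
ETHERTYPE_BUS_ENCAP = 0x88B5
# Hypothetical header: dst MAC, src MAC, EtherType, sequence number, bus-frame length.
ENCAP_HDR = struct.Struct("!6s6sHIH")
MIN_FRAME_BYTES = 60  # minimum Ethernet frame size, excluding the 4-byte FCS


def encapsulate(dst_mac: bytes, src_mac: bytes, seq: int, bus_frame: bytes) -> bytes:
    """Wrap an opaque bus frame (e.g., a PCIe transaction) in an Ethernet frame."""
    header = ENCAP_HDR.pack(dst_mac, src_mac, ETHERTYPE_BUS_ENCAP,
                            seq & 0xFFFFFFFF, len(bus_frame))
    return (header + bus_frame).ljust(MIN_FRAME_BYTES, b"\x00")  # pad short frames


def decapsulate(frame: bytes) -> tuple[int, bytes]:
    """Recover (sequence number, bus frame); the length field strips any padding."""
    _dst, _src, ethertype, seq, length = ENCAP_HDR.unpack_from(frame)
    if ethertype != ETHERTYPE_BUS_ENCAP:
        raise ValueError("not an encapsulated bus frame")
    start = ENCAP_HDR.size
    return seq, frame[start:start + length]


def traffic_class(frame: bytes) -> str:
    """In an integrated network, a switch could map encapsulated bus traffic to a
    higher-priority queue than general application traffic."""
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    return "bus" if ethertype == ETHERTYPE_BUS_ENCAP else "app"


if __name__ == "__main__":
    tlp = bytes(range(16))  # stand-in for a small bus transaction
    frame = encapsulate(b"\x02" * 6, b"\x04" * 6, seq=1, bus_frame=tlp)
    print(len(frame), traffic_class(frame), decapsulate(frame) == (1, tlp))
```

The length field lets the receiver strip the padding that Ethernet requires for short frames, which matters because small bus transactions, such as read requests, can be shorter than the minimum frame size.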

7. Future developments

In this article, our efforts to implement DCI toward realizing the IOWN concept were introduced, focusing on our exhibits at NTT R&D Forum 2024 and FUTURES Taipei 2024 and on the DCI reference implementation models that were documented and published at the IOWN Global Forum. We will strengthen research and development toward DCI-2 [9], which we aim to commercialize in 2026. Specifically, we will promote the use of suburban datacenters connected via the APN, another technological element of IOWN, develop DCI controller software, and propose system references to the IOWN Global Forum to expand the operational functions of DCI. We will also work on applying DCI technology to mobility use cases that require AI and video processing, as well as to use cases other than video processing.

References
