Feature Articles: Exploring Humans and Information through the Harmony of Knowledge and Envisioning the Future

Vol. 23, No. 10, pp. 78–82, Oct. 2025. https://doi.org/10.53829/ntr202510fa11

Children Perceive Minds in Robots—Learning Companion Robots for the Future of Early Childhood Education

Abstract

Advances in technology are expanding the role of robots in children’s lives. In the near future, robots are expected to serve as learning companions that support early childhood education. However, how children perceive robots is not fully understood. This article presents findings from our group’s experimental psychological research exploring whether children can learn from robots and how social interactions with robots influence children’s behavior. It also outlines how these findings can contribute to the design of social robots as learning companions and promote the advancement of early childhood education.

Keywords: developmental science, child, robot

1. Children’s learning

It is believed that children deepen their learning through communication with caregivers. Our group’s research has demonstrated that parent-child interactions significantly influence children’s learning [1, 2]. For example, caregivers’ involvement, such as eye contact and gestures as social cues, as well as speech style, affects children’s knowledge acquisition and language development. Our goal is to scientifically elucidate the mechanisms of children’s learning and establish educational-support technologies that integrate empirical evidence and artificial intelligence (AI). We aim to apply these research findings to practical settings such as education, parenting, and medical support.

The nature of learning has become increasingly diverse, and educational approaches using AI and robots have garnered growing attention. Opportunities for children to acquire knowledge and skills from robots are expected to increase.

To enable more effective learning support, our vision is the implementation of early childhood education using companion robots. Robots are expected to complement some of the roles of caregivers, such as supporting language development, reading picture books, and emotional education, and foster children’s spontaneous learning. However, how children perceive and accept robots remains insufficiently understood. With the anticipated spread of AI robots to younger age groups, there is a growing need to examine the child-robot relationship from a scientific perspective. Such insights can inform the design of robot-assisted education and contribute to the improvement of early childhood education. This article introduces experimental psychological studies that explore whether children can learn from robots and how they perceive them.

2. Learning from robots

As interest in AI- and robot-assisted education grows, it is important to clarify at what stage in development children can begin to learn knowledge and information from robots. Infants typically learn from humans, such as caregivers, but can they also learn from robots? To address this question, we conducted a study comparing how infants learn from humans versus robots [3]. In the experiment, we observed how 12-month-old infants learned about objects using only gaze cues from a human or robot (Fig. 1). The results indicate that infants followed human gaze, remembered the objects the gaze was directed at, and showed a preference for those objects. Although infants followed the robot’s gaze, there was no evidence of object memory or preference, suggesting that the robot had a smaller impact on learning compared with a human.

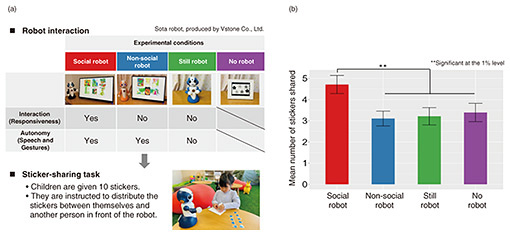

What happens if infants experience the robot as a communicative being? In another experiment, we added a speech cue in which the robot called the infant’s name. As a result, infants more accurately followed the robot’s gaze and were able to remember the objects it looked at [4]. Since robots are unfamiliar entities, infants may not initially know how to engage with them. Demonstrating that the robot is capable of communication appears to promote infants’ learning. These findings indicate that 12-month-old infants can follow a robot’s gaze, and that speech cues from the robot can facilitate their learning. This suggests the potential for using robots in educational support starting as early as infancy.

3. Impact of interaction with robots on children’s prosocial behavior

In early childhood, children begin to engage in verbal interactions with others. At this stage, how do children perceive robots? As children grow, they acquire various forms of sociality, beginning with empathy. By around the age of five, strategic sociality begins to develop [5]. Five-year-old children become sensitive to being observed and tend to present themselves in a positive light when they are being watched. For example, they may display more prosocial behaviors, such as sharing more with others or refraining from cheating when someone is watching. However, it has remained unclear how children behave when the observer is a robot.

Therefore, we examined whether five-year-old children behave more prosocially when being watched by a robot and how prior interaction with the robot may influence their behavior [6]. A total of 112 five-year-old children participated in one of four conditions. The children first had a face-to-face encounter with a robot (Fig. 2(a)). In the social robot condition, children interacted with a robot capable of speech and gestures. In the non-social robot condition, children faced a robot that only replayed pre-programmed speech and movements periodically. In the still (stationary) robot condition, children were presented with a non-moving robot. A no-robot condition, in which no observer was present, was also included.

To measure prosocial behavior, we used a sticker-sharing task. In this task, children were given ten stickers and told that those stickers belonged to them. In front of the robot, they were then asked to divide the stickers between themselves and another person. In other words, the robot observed how the child shared the stickers.

The results indicate that five-year-old children who interacted with the social robot shared more stickers with others in its presence (Fig. 2(b)). That is, they displayed more prosocial behavior when being observed by a social robot. In front of the non-social and still robots, however, the children’s behavior did not differ from that in the no-robot condition; in all three of these conditions, they shared fewer stickers than in the social robot condition. These findings suggest that social interaction with a robot promotes prosocial behavior in five-year-old children. In other words, children strategically alter their behavior in the presence of the social robot.

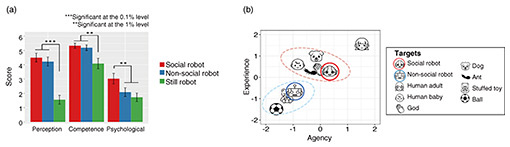

4. Feeling that a robot has a mind

To clarify which characteristics of robots influence children’s behavior, we investigated what impressions robots make on them. After facing the social robot, non-social robot, or still robot, five-year-old children were asked three questions: “Can the robot see things?” (perception), “Is the robot smart?” (competence), and “Can the robot feel happiness or sadness?” (psychological). For each question, children responded using a seven-point scale ranging from “strongly agree” to “strongly disagree.”

The results showed that the children rated both the social and non-social robots similarly high in terms of perception and competence, with no significant difference between them. However, for the psychological attribute, the children rated the social robot significantly higher than the non-social and still robots (Fig. 3(a)). This suggests that interaction with a robot leads children to perceive it as having feelings such as happiness and sadness. It was thus experimentally confirmed that five-year-old children perceive social robots as having a mind more than they do non-social or still robots.

To further investigate how children’s perception of a robot’s mind compares with their views of other beings or objects, we conducted an additional experiment. Previous studies have shown that adults perceive “mind” along two dimensions: agency (i.e., the perceived capacity to intend and act) and experience (i.e., the perceived capacity for sensation and feelings) [7]. We examined how five-year-old children position robots within this two-dimensional framework by comparing them with a variety of entities, including a human adult, a human baby, God, a dog, an ant, a stuffed toy, and a ball [8].

The results revealed that children attributed more mind to social robots than to stuffed toys and saw them as more similar to living beings such as a baby, a dog, or an ant (Fig. 3(b)). In contrast, non-social robots were perceived similarly to stuffed toys. When the same study was conducted with adult participants, the distinction between social and non-social robots was minimal; both were viewed as closer to inanimate objects such as stuffed toys. These findings indicate that adults are less likely to attribute a mind to robots, whereas young children are more inclined to feel that robots possess a mind.

5. Future of early childhood education with robots

This article outlined emerging findings suggesting that social robots may serve as valuable learning companions that support children’s learning. These insights are expected to become an important foundation for designing early childhood education that incorporates AI. In the near future, robots are likely to become embedded in our daily lives as learning companions that support early education. To enhance their educational effectiveness, it is essential to understand how children perceive and accept robots.

We are engaged in both fundamental research to scientifically understand children’s learning mechanisms and applied research to develop effective support strategies that facilitate learning through companion robots. It is crucial to consider children with diverse characteristics, such as developmental disorders or hearing impairments, as well as those who have experienced adverse events in early life. For instance, some studies suggest that children with autism spectrum disorder may feel more comfortable with robots than with humans, highlighting the importance of considering how best to engage with such children. Long-term interaction with children is another key factor in supporting learning. By applying these findings to design appropriate support methods and interaction styles that facilitate children’s learning, we hope to contribute to the advancement of AI-assisted early childhood education.

We are currently developing Pitarie Touch, an AI system that uses a social robot to recommend picture books tailored to each child’s preferences and developmental stage [9] (Fig. 4). We are also developing a system that enables children to share their impressions of picture books through spoken conversations with a social robot, aiming to enhance children’s reading activities.

We will continue to explore child-robot interactions to deepen our understanding of how children learn, and to develop more effective, personalized learning support methods. Our goal is to implement learning companion robots capable of effectively supporting early childhood education.

References
