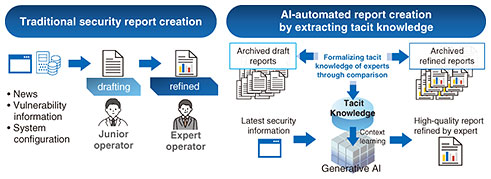

Feature Articles: Security R&D for a Better Future

Vol. 23, No. 11, pp. 59–65, Nov. 2025. https://doi.org/10.53829/ntr202511fa7

Collaboration between People and AI to Evolve Security Activities into a New Form for People

Abstract

Cyber-attacks targeting systems intensify every year, and the shortage of security personnel is becoming serious. A fundamental review of security operations based on automation using artificial intelligence (AI) is therefore required. In today’s information society, misinformation and disinformation make it difficult for people to maintain autonomous decision-making, and the object of security is expanding from systems to human cognition. In this article, we introduce innovative cybersecurity operations using generative AI, and cognitive security that supports autonomous human decision-making.

Keywords: cybersecurity, cognitive security, generative AI

1. Future vision of human-AI security activities

The proliferation of vulnerabilities, a major cause of cyber-attacks, has reached a level that conventional methods cannot address. According to the National Police Agency in Japan, 2024 saw a sharp increase in reported cases of ransomware and phishing, as well as in suspicious access suspected of being scans for vulnerabilities to use in cyber-attacks [1]. New threats that target people rather than systems and affect their perception and decision-making are also emerging, such as cyber fraud, social engineering, and misinformation and disinformation spread on social media. Staffing cannot easily keep pace with the growing risk and the diversification of security systems, and the psychological burden on personnel increases daily, leading to exhaustion. Conventional security activities are thus reaching their limits.
According to the Cybersecurity Framework of the National Institute of Standards and Technology in the United States, security-related activities are classified into identification, protection, detection, response, recovery, and governance, and each requires a variety of activities. We are conducting research on all of these activities with the aim of overcoming the serious situation described above by evolving them into new security activities in which humans and artificial intelligence (AI) cooperate. We call our research concept “Cycle-Ops.” In Cycle-Ops, AI compensates for human weaknesses and humans compensate for AI’s weaknesses, so that humans and AI carry out each activity together while drawing on each other’s strengths, advancing security activities in ways that would be difficult for humans alone. This evolution will reduce the psychological burden on everyone involved in the activities (security staff, system staff, end users, management, etc.), improve the experience of security activities, and bring a true sense of security and safety that could not be achieved through economic rationality alone, such as risk reduction and efficiency improvement. Although knowledge is generated daily in each security activity, it accumulates only in the minds of the individuals in charge, making it difficult to use that knowledge across the overall security system. Cycle-Ops therefore aims to enable security activities that grow continuously and cyclically by using AI as a mediator for two flows: applying knowledge from one activity to another, and sharing and passing on knowledge between people. Cycle-Ops has two key components. The first is the formalization of tacit knowledge.
Security activities require a wide range of knowledge, much of which resides with security experts as expertise and tacit knowledge, so it is not easy to pass on and reproduce. Therefore, in each security activity, which is currently human-centered, we formalize the tacit knowledge of humans (security experts) and incorporate it into AI to enable cooperation between humans and AI. The second component is cognitive security. The users targeted by Cycle-Ops’ continuously and cyclically growing security activities include not only those in charge of systems and security but also end users facing security issues. In many cases, the weakest point of security is not the system but its maintainers and users. If individuals and groups do not correctly perceive the information they face, threats such as the misinformation and disinformation mentioned above can take hold, making it difficult to maintain security. It is therefore important to protect human cognition by ensuring autonomous decision-making by individuals and groups, drawing on methodologies from cognitive psychology as well as information science. We put this approach into practice through the way humans and AI work together in Cycle-Ops. By combining these two components, Cycle-Ops aims to evolve traditional security activities, which depended solely on the manpower of specialized personnel, into a new form through close cooperation between AI and the various people involved in security activities (those in charge of systems and security, end users, etc.). In the following sections, we introduce our research on the formalization of tacit knowledge and on security to protect human cognition, with use cases.

2. Formalization of tacit knowledge for security report preparation

As mentioned in the previous section, one of the key components of Cycle-Ops is the formalization of tacit knowledge.
We started our research by focusing on the creation of threat information reports (security reports), which are essential to security activities as a whole. The person in charge of creating security reports faces a large amount of serious threat information every day, and collecting and analyzing it requires both accuracy and efficiency. Because readers have various attributes and roles, such as those at the management level and those in charge of systems, the report must describe the points that each reader wants to know. Those in charge of security reporting perform these tasks daily on the basis of their expertise, and we believe that formalizing this tacit knowledge is the essential first step toward achieving Cycle-Ops. In the traditional process of creating a security report, expert security personnel design the report’s table of contents by taking into account not only the content of the obtained threat information but also the positions and interests of the readers. To tailor the report to the actual conditions of the organization, they also conduct a detailed analysis based on the network configuration and response status within the organization and classify the results according to the readers. This classification requires a deep understanding of the organization’s culture, in other words, tacit knowledge, such as knowing that management needs concise summaries of the information required for managerial decisions while system personnel need technical details. Large language models (LLMs) have begun to be used to a limited extent in creating security reports, but they have not made full use of experts’ tacit knowledge. In response, we divided the tacit knowledge involved in creating a security report into two main categories.
The first is how to design the report’s table of contents taking into account readers’ positions and interests, and the second is how to analyze the risk that the threat information described in the report poses to the local system. By enabling an LLM to learn the tacit knowledge behind these two points, we developed a technology that automatically generates high-quality reports adapted to the culture of the organization and the characteristics of its readers. The following subsections describe this technology in detail.

2.1 Knowledge formalization method for designing a report’s table of contents considering readers’ positions and interests

Simply asking a conventional LLM to produce a report tends to yield a table of contents that does not match the reader’s interests. For example, a report for executives might contain excessively technical content, or generic content that is not clearly relevant to the organization. We developed a method for automatically extracting tacit knowledge from past reports prepared by experts. This method formalizes the perspective behind a report’s structure as tacit knowledge, mirroring the traditional manual workflow in which a new security staff member drafts a report and an experienced staff member reviews it. We first extract the topics and target readers from an expert’s past reports, then have an LLM generate a report under the same conditions. Here, the LLM is regarded as a new staff member who can only write generic content. By analyzing the differences between the expert’s past report and the LLM’s report, we can extract tacit knowledge such as “a vulnerability report for executives focuses on the impact on the company,” derived from the fact that the expert’s report has a table of contents tailored to executives’ interests whereas the LLM’s report does not.
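
The difference-analysis step can be pictured as a structural comparison of two tables of contents written for the same topic and reader. The following is a minimal Python sketch under stated assumptions, not the authors’ implementation: `Report`, `TocDifference`, and `diff_tables_of_contents` are hypothetical names, a report is reduced to its list of section headings, and headings are matched by text after simple normalization. In the method described above, an LLM would then interpret such differences and phrase them as tacit knowledge (such as the executive-focused rule quoted above); that step is not shown.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    topic: str            # e.g. "vulnerability X" (hypothetical)
    reader: str           # target reader role, e.g. "executive"
    toc: tuple[str, ...]  # section headings, in order


@dataclass
class TocDifference:
    reader: str
    expert_only: list[str]   # sections the expert included for this reader
    generic_only: list[str]  # sections the generic draft had but the expert omitted


def _normalize(heading: str) -> str:
    """Lower-case and collapse whitespace so trivial wording differences are ignored."""
    return " ".join(heading.lower().split())


def diff_tables_of_contents(expert: Report, generic: Report) -> TocDifference:
    """Compare an expert's table of contents with a generic LLM draft for the
    same topic and reader; the differences are raw material for tacit knowledge."""
    if (expert.topic, expert.reader) != (generic.topic, generic.reader):
        raise ValueError("both reports must share the same topic and target reader")
    expert_keys = {_normalize(h) for h in expert.toc}
    generic_keys = {_normalize(h) for h in generic.toc}
    return TocDifference(
        reader=expert.reader,
        expert_only=[h for h in expert.toc if _normalize(h) not in generic_keys],
        generic_only=[h for h in generic.toc if _normalize(h) not in expert_keys],
    )


if __name__ == "__main__":
    expert = Report("vulnerability X", "executive",
                    ("Summary", "Impact on our business", "Decisions required"))
    draft = Report("vulnerability X", "executive",
                   ("Summary", "Technical details", "Proof-of-concept analysis"))
    diff = diff_tables_of_contents(expert, draft)
    print(diff.expert_only)   # ['Impact on our business', 'Decisions required']
    print(diff.generic_only)  # ['Technical details', 'Proof-of-concept analysis']
```

Exact heading matching is deliberately crude; it only shows where the expert’s structure diverges from the generic one, which is the signal the extraction step relies on.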
When generating a report, retrieval-augmented generation (RAG) is used to draft a table of contents while referring to similar topics and the tacit knowledge for each reader, improving the accuracy of the table of contents for the target readers. In a questionnaire survey conducted when this method was applied to report creation in actual cybersecurity operations, respondents raised no negative opinions compared with past reports created by experts, confirming that the generated reports were of the same level (Fig. 1).
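
The retrieval step can be sketched as follows. This is a minimal illustration under stated assumptions: the knowledge-base layout (`KnowledgeItem`), the function names, and the prompt wording are hypothetical, and token-overlap (Jaccard) similarity is swapped in for the embedding-based search a production RAG system would typically use. It filters formalized rules by target reader, ranks them by topic similarity, and assembles the augmented prompt an LLM would receive to draft the table of contents.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class KnowledgeItem:
    reader: str  # target reader role the rule applies to
    topic: str   # topic of the past report the rule was extracted from
    rule: str    # formalized tacit knowledge in natural language


def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def _jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieve(kb: list[KnowledgeItem], topic: str, reader: str, k: int = 2) -> list[KnowledgeItem]:
    """Return up to k rules for this reader, most similar topics first.
    Jaccard token overlap stands in for embedding search in this sketch."""
    query = _tokens(topic)
    same_reader = [item for item in kb if item.reader == reader]
    ranked = sorted(same_reader, key=lambda item: _jaccard(query, _tokens(item.topic)), reverse=True)
    return ranked[:k]


def build_toc_prompt(topic: str, reader: str, hits: list[KnowledgeItem]) -> str:
    """Assemble the augmented prompt for drafting the table of contents."""
    lines = [
        f"Draft a table of contents for a security report on: {topic}",
        f"Target reader: {reader}",
        "Apply these reader-specific guidelines learned from expert reports:",
    ]
    lines += [f"- {item.rule} (learned from: {item.topic})" for item in hits]
    return "\n".join(lines)


if __name__ == "__main__":
    kb = [
        KnowledgeItem("executive", "remote code execution vulnerability in VPN appliance",
                      "Focus on the impact on the company"),
        KnowledgeItem("executive", "phishing campaign targeting employees",
                      "Summarize business risk in one paragraph"),
        KnowledgeItem("system_admin", "remote code execution vulnerability in VPN appliance",
                      "List affected versions and patch steps"),
    ]
    topic = "Remote code execution vulnerability in web server"
    print(build_toc_prompt(topic, "executive", retrieve(kb, topic, "executive", k=1)))
```

Filtering by reader before ranking keeps rules written for one audience (for example, patch steps for system administrators) out of a report aimed at another.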

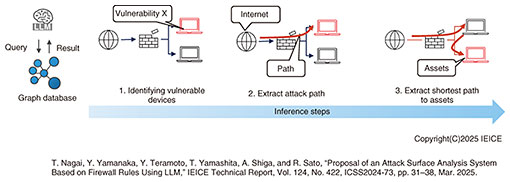

2.2 Knowledge formalization method for risk analysis for a local system based on the threat information described in the report

To generate a security report that better matches the reader’s needs, one needs not only externally published security information but also information about internal systems and the associated risks. This requires complex analysis that dynamically combines diverse information such as network configuration, device configuration, and vulnerability information. If this process, traditionally carried out by security personnel, were simply delegated to an LLM, an LLM inference would be required at each conditional branch, and a failure at any step would change the final risk determination, preventing a correct result. We therefore put all this information into a graph database and structured it so that a single graph query can retrieve everything needed for risk analysis. For the use case of determining whether a vulnerability can be exploited from outside, vulnerability information and network device settings are associated with nodes so that they can be retrieved in a batch along network routes. This enables an LLM to convert an instruction such as “Obtain network routes and related information that can be reached from the Internet to a terminal with vulnerability X” into a graph query; the information retrieved in a batch from the graph database is then formatted into a prompt, and the LLM performs the risk analysis all at once. This reduces the number of LLM inferences and achieves high-precision risk judgment that considers comprehensive information. With this method, we achieved an accuracy of about 80%, the same as that of previous research that considered only network configuration, but under more complicated conditions that include firewall and network device settings (Fig. 2).
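
The “one query, one LLM call” idea can be sketched in a few lines. The article does not name the graph database or query language, so here an in-memory adjacency list and a depth-first traversal stand in for the graph query; `Device`, `reachable_paths`, and `risk_prompt` are hypothetical names, and modeling each filtering device as a set of allowed destination ports is a simplifying assumption. The traversal collects every route from the Internet to a device carrying vulnerability X, and the prompt packs all routes and device settings together so that the LLM is consulted once rather than at every branch.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Device:
    kind: str                                    # "internet", "firewall", "router", "host", ...
    vulns: set[str] = field(default_factory=set)
    allowed_ports: set[int] | None = None        # None means the device does not filter traffic


def reachable_paths(devices: dict[str, Device], links: dict[str, list[str]],
                    vuln: str, port: int, source: str = "internet") -> list[list[str]]:
    """Enumerate simple paths from `source` to every device carrying `vuln`,
    pruning any path that crosses a device whose filter drops `port`."""
    found: list[list[str]] = []

    def walk(name: str, path: list[str]) -> None:
        device = devices[name]
        if device.allowed_ports is not None and port not in device.allowed_ports:
            return                               # traffic on this port is blocked here
        if vuln in device.vulns:
            found.append(path)
        for nxt in links.get(name, []):
            if nxt not in path:                  # keep paths simple (no loops)
                walk(nxt, path + [nxt])

    walk(source, [source])
    return found


def risk_prompt(devices: dict[str, Device], paths: list[list[str]], vuln: str, port: int) -> str:
    """Pack every retrieved route and device setting into one prompt for a single LLM call."""
    lines = [f"Assess whether {vuln} on port {port} is exploitable from the Internet.",
             "Routes reachable from the Internet and related settings:"]
    if not paths:
        lines.append("(no reachable route found)")
    for path in paths:
        lines.append(" -> ".join(path))
    for name in sorted({n for path in paths for n in path}):
        d = devices[name]
        ports = "any" if d.allowed_ports is None else sorted(d.allowed_ports)
        lines.append(f"  {name}: kind={d.kind}, allowed_ports={ports}, vulns={sorted(d.vulns)}")
    return "\n".join(lines)


if __name__ == "__main__":
    devices = {
        "internet": Device("internet"),
        "fw": Device("firewall", allowed_ports={443}),
        "web": Device("host", vulns={"X"}),
        "db": Device("host", vulns={"X"}, allowed_ports={5432}),
    }
    links = {"internet": ["fw"], "fw": ["web"], "web": ["db"]}
    print(risk_prompt(devices, reachable_paths(devices, links, "X", 443), "X", 443))
```

In this toy network only the web server is reachable on port 443; the database also carries vulnerability X but its own filter drops that port, which is exactly the kind of condition a per-branch chain of LLM calls could get wrong.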

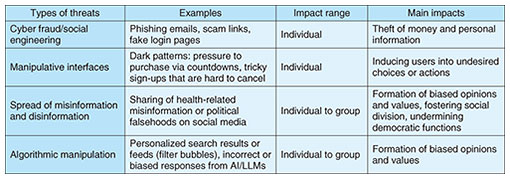

3. Security to protect human cognition

In today’s information society, we make decisions while being exposed to a wide variety of information every day. With the advent of social media, video distribution services, and generative AI, the speed and volume of information generation, sharing, and consumption have increased dramatically, giving users unprecedented convenience and freedom of access to information. Despite this convenience, there is a risk that people’s perception and decision-making may be influenced or interfered with in various ways (Table 1). For example, cyber fraud and social engineering exploit human cognition to deceive users and defraud them of money and personal information. Manipulative interfaces called dark patterns can lead users to make unconscious choices and behave in ways they did not intend. The spread of misinformation and disinformation on social media not only affects the beliefs of individual users but also divides the opinions of society as a whole. The output of generative AI is also a non-negligible factor influencing people’s cognition. A typical problem is hallucination, in which generative AI plausibly presents information that does not actually exist. Another problem is algorithmic manipulation, whereby factual but biased information is presented as if it were neutral and accurate. Such outputs cause recipients little discomfort and are therefore easily accepted uncritically, which can lead to cognitive bias and erroneous decision-making.

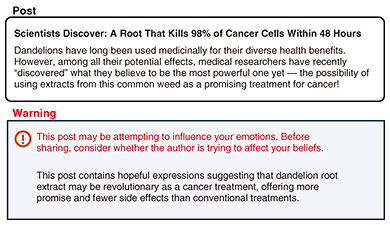

4. Cognitive security and Cycle-Ops

The above-mentioned cognitive threats arise at both the individual and group levels and are intertwined, affecting society as a whole. In such an environment, it is important to focus not only on how information is handled but also on how information is perceived. Our perceptions and decisions can become targets of external influence and manipulation. To counter this threat, we are conducting research based on a new security framework called “cognitive security.” Cognitive security is an approach to protecting individuals, groups, and societies from risks in the information environment that target cognition and decision-making. This approach is difficult to implement with conventional system-centered security technologies. We have therefore positioned cognitive security as a core element of the new security activities to be implemented through cooperation between humans (those in charge of systems and security, end users, etc.) and AI in Cycle-Ops. Beyond reducing individuals’ cognitive risk through close interaction with end users, viewing these interactions between AI and end users from a broader perspective makes it easier to understand a group’s cognitive risk and decide how to respond to it, enabling system and security personnel to provide effective end-user support. In the following subsections, we introduce our efforts to combat the spread of misinformation and disinformation, one of the threats targeted by cognitive security. Unlike cyber fraud, misinformation spreads in a chain from one person to the next, so Cycle-Ops, which addresses both individuals and groups, is effective against it, and we are developing technologies targeting both.

4.1 Intervention for individuals: Intervention to promote attunement to emotionally manipulative expressions [2]

As an approach for individuals, we introduce an intervention technology that focuses on emotional language.
Emotional expressions, such as those of anger and fear, are known to affect the spread of misinformation and disinformation on social media. We created an intervention that included messages encouraging people to notice the emotional expressions in health-related misinformation posts and tested its effects through a questionnaire survey. The survey confirmed that the intervention significantly suppressed the intention to share the posts. Although this study focused on misinformation, interventions that promote awareness of emotionally disturbing information are more broadly applicable: whether the information is true or false, a sober perspective on overly emotional expressions can help recipients make decisions. In the attention economy, where value lies in capturing users’ attention, it is important to design environments that enable users to keep their thinking autonomous. Mechanisms such as this intervention can be a practical means of supporting the quality of decision-making in everyday encounters with information (Fig. 3).
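
How such an awareness message might be attached to a post can be sketched as below. This is a hypothetical illustration only: the article does not describe how posts were selected or what the messages said, so the tiny keyword lexicon (`EMOTION_LEXICON`), the message text (`AWARENESS_MESSAGE`), and the functions `detect_emotions` and `with_intervention` are all assumptions. A real deployment would need a validated emotion lexicon or classifier.

```python
import re

# Hypothetical mini-lexicon for illustration only; not the cues used in the study.
EMOTION_LEXICON = {
    "anger": {"outrage", "outrageous", "furious", "disgusting", "betrayed"},
    "fear": {"terrifying", "deadly", "panic", "alarming", "dangerous"},
}

# Hypothetical wording of an awareness prompt (not the message used in the study).
AWARENESS_MESSAGE = ("This post uses strongly emotional wording ({emotions}). "
                     "Notice how it makes you feel before deciding whether to share it.")


def detect_emotions(text: str) -> list[str]:
    """Return the emotion categories whose cue words appear in the text."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return sorted(emotion for emotion, cues in EMOTION_LEXICON.items() if words & cues)


def with_intervention(post: str) -> str:
    """Prepend the awareness message when emotional cues are found; otherwise return the post unchanged."""
    emotions = detect_emotions(post)
    if not emotions:
        return post
    return AWARENESS_MESSAGE.format(emotions=", ".join(emotions)) + "\n\n" + post


if __name__ == "__main__":
    print(with_intervention("Officials hide the deadly truth. It's an outrage!"))
```

Note that the message does not judge whether the post is true; in line with the point above, it only draws attention to the emotional framing.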

4.2 Intervention for groups: Network intervention to reduce the spread of misinformation [3]

As a countermeasure for groups, we are developing a technology that optimizes the allocation of prebunking interventions to minimize the spread of misinformation in social networks. Prebunking, which works like a psychological vaccine against misinformation, is an intervention that helps people build cognitive resistance to misinformation by proactively providing warnings and counterarguments against impending misinformation before they are exposed to it. Although many studies have demonstrated the effectiveness of prebunking, cost makes it difficult to deliver interventions to everyone, so interventions must be allocated efficiently to minimize the spread of misinformation. We formulated the intervention assignment problem as a combinatorial optimization problem and developed an algorithm to identify the optimal intervention targets. Numerical experiments using real data showed that giving interventions to 200 randomly chosen people hardly suppressed the spread of misinformation, whereas optimizing the allocation of interventions with our technology reduced the spread of misinformation by up to 40% compared with no intervention (Fig. 4).
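
The allocation problem can be made concrete with a small sketch. The article does not specify its optimization algorithm, so this substitutes a standard greedy heuristic over Monte Carlo simulations of an independent cascade model; the model choice, the share probability `p`, the assumption that prebunked users never adopt or share, and the names `simulate_spread`, `expected_spread`, and `greedy_prebunk` are all illustrative assumptions, not the authors’ method.

```python
from __future__ import annotations

import random


def simulate_spread(graph: dict[str, list[str]], seeds: list[str], prebunked: set[str],
                    p: float, rng: random.Random) -> int:
    """One independent-cascade run: each newly active user shares with each follower
    independently with probability p. Prebunked users are assumed never to adopt or share."""
    active = {s for s in seeds if s not in prebunked}
    frontier = list(active)
    while frontier:
        next_frontier = []
        for user in frontier:
            for follower in graph.get(user, []):
                if follower not in active and follower not in prebunked and rng.random() < p:
                    active.add(follower)
                    next_frontier.append(follower)
        frontier = next_frontier
    return len(active)


def expected_spread(graph: dict[str, list[str]], seeds: list[str], prebunked: set[str],
                    p: float, runs: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the number of users reached; a fixed seed keeps it reproducible."""
    rng = random.Random(seed)
    return sum(simulate_spread(graph, seeds, prebunked, p, rng) for _ in range(runs)) / runs


def greedy_prebunk(graph: dict[str, list[str]], seeds: list[str], budget: int,
                   p: float = 0.3, runs: int = 200) -> set[str]:
    """Greedily pick `budget` users to prebunk, each time adding the user whose
    prebunking most lowers the estimated spread from the seed users."""
    users = set(graph) | {f for followers in graph.values() for f in followers}
    candidates = sorted(users - set(seeds))  # sorted for deterministic tie-breaking
    chosen: set[str] = set()
    for _ in range(min(budget, len(candidates))):
        best = min((u for u in candidates if u not in chosen),
                   key=lambda u: expected_spread(graph, seeds, chosen | {u}, p, runs))
        chosen.add(best)
    return chosen


if __name__ == "__main__":
    # "s" posts misinformation; "hub" relays it to five followers.
    graph = {"s": ["hub", "x"], "hub": ["a", "b", "c", "d", "e"], "x": ["y"]}
    picked = greedy_prebunk(graph, ["s"], budget=1)
    print(picked, expected_spread(graph, ["s"], picked, p=0.3, runs=200))
```

Each greedy step re-simulates the cascade once per candidate, which becomes expensive on large networks; lazy-evaluation variants such as CELF are a common way to reduce this cost. Comparing the result with a randomly chosen set of the same size reproduces, in miniature, the kind of comparison described above.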

Our goal is to create an environment in which individuals can make autonomous decisions and society as a whole can make decisions while respecting diverse values. It is thus necessary to elucidate the mechanisms by which technology and the environment affect people’s cognition, and to design and implement effective interventions through collaboration among fields such as psychology, human-computer interaction, network science, and sociology. We also need to pay attention to the methodological challenges of this research. For example, when participants in user studies are concentrated in a particular cultural region, the reproducibility and applicability of the results may be limited. To address these issues, our group is also conducting research that captures differences based on demographic factors [4]. Sustained efforts are required, encompassing not only technology but also institutional design, ethical standards, and literacy improvement through education. Going forward, we will continue to research and implement cognitive security as a foundation that supports people’s autonomous decision-making, thus contributing to building a healthy society.

5. Future prospects

Responding to increasingly severe and sophisticated cyber-attacks requires engaging not only companies but everyone, including end users. Through the research and development of Cycle-Ops, which focuses on the formalization of tacit knowledge and on security to protect human cognition, we will evolve security activities into a new form through highly effective collaboration between people and AI. This will improve the experience of security activities, bringing risk reduction and efficiency improvement as well as a true sense of security and safety that could not be achieved from the perspective of economic rationality alone.

References
