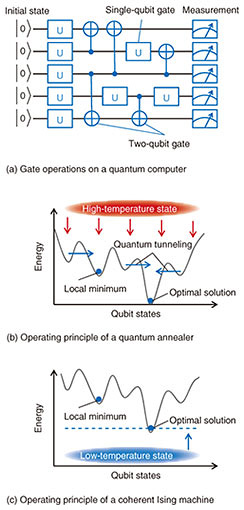

Feature Articles: Toward New-principle Computers

Vol. 19, No. 5, pp. 12–17, May 2021. https://doi.org/10.53829/ntr202105fa1

Activities toward New-principle Computers

Abstract

Non-von Neumann-type computers, such as quantum computers and Ising machines, which operate on principles different from those of present-day computers, are attracting attention. They are particularly powerful when applied to specific types of problems such as combinatorial optimization problems, quantum chemical calculations, and prime factorization. Since solutions to such problems can have a significant impact on society, research on new-principle computers is moving forward at a vigorous pace. The following Feature Articles in this issue introduce theoretical and experimental activities regarding new-principle computers at NTT laboratories while describing recent advances in this field.

Keywords: quantum computer, coherent Ising machine, quantum annealer

1. New-principle computers

In today’s semiconductor industry, there is an empirical rule called Moore’s law that states, “the number of transistors on an integrated circuit doubles every 18 months.” Indeed, the miniaturization of semiconductor devices has been progressing in accordance with this law, and computer performance has been increasing yearly. If this empirical rule continues to hold as it has up to now, transistor size will become extremely small and approach its limit at the size of atoms. Before that, however, it is said that quantum effects will appear and that laws applicable to conventional electronic circuits will no longer hold, constituting a quantum-mechanical barrier. In the world of quantum mechanics, there are mysterious properties, such as the superposition state and wave-particle duality, not seen in classical mechanics. A computer that actively uses these properties and that operates on principles different from those of conventional computers is the quantum computer.
In current information processing, calculations proceed using bits that can take on either of two values, 0 or 1. In contrast, a quantum bit (qubit), the basic device of a quantum computer, can be in a superposition state of both 0 and 1. Given N qubits, it is possible to achieve a superposition of 2^N states, which means that the number of superposed states grows exponentially with the number of qubits. This holds the possibility of massively parallel computation by executing operations on this huge number of superposed states at once, and it is one factor behind the speed of quantum computers. A quantum computer, moreover, allows the wave property of a qubit to be used so that the desired solution can be extracted from the results of massively parallel computation through phase-interference effects between qubits.

In contrast to von Neumann-type computers based on conventional sequential computation, non-von Neumann-type computers that operate on new principles, such as the quantum computer, have recently been attracting attention. The following Feature Articles in this issue introduce activities related to new-principle computers at NTT laboratories from both theoretical and experimental perspectives.

New-principle computers can be broadly divided into two types: gate-based quantum computers and quantum annealers. The former prepares multiple qubits in their ground states and executes calculations by repeating single-qubit-gate operations and two-qubit-gate operations, as shown in Fig. 1(a). Quantum algorithms for prime factorization, large-scale searching, etc. have been discovered, and it has been theoretically proven that they are faster than classical algorithms. However, qubits are fragile in the face of external noise and errors are frequent, so redundant qubits for error-correction purposes, i.e., a massive number of qubits, are needed.
For example, a theoretical estimation has shown that 20,000,000 qubits would be needed to execute prime factorization of a 2048-bit number [1]. It is extremely difficult to manufacture qubits on such a scale with current technology.

The latter type, i.e., quantum annealers, superposes all states of multiple qubits (in other words, prepares a high-temperature state) and finds the state with the lowest energy (the solution) by gradual cooling, as shown in Fig. 1(b). It is said that the qubit states can escape from local minima during this process through the quantum tunnel effect and therefore reach a solution faster than classical annealing algorithms. It has not been rigorously proven that quantum properties yield higher processing speeds, but approximate solutions to combinatorial optimization problems greatly needed by society can be obtained, so quantum annealers have been attracting attention. They execute calculations while slowly changing stable states, which makes them robust to noise. When implementing an optimization problem in an actual physical system with a quantum annealer, a physical model of a mutually interacting spin system called an Ising model is used, so this type of computer is also called an Ising machine.

2. Ising machines

In the research on gate-based superconducting quantum computers, achievements such as observation of an entangled state among three qubits and the demonstration of double-qubit-gate operation were reported in 2010 and 2011, respectively. In 2011, however, exciting news came out of the Canadian venture company D-Wave Systems. They announced the world’s first commercial quantum computer in the form of a quantum annealer (D-Wave One) implementing 128 qubits. This was an Ising machine that executed calculations using superconducting qubits.
Compared with the aluminum qubits used in gate-based superconducting quantum computers, the D-Wave One qubits using superconducting niobium have an overwhelmingly shorter storage time for quantum information, so at the time, there was some doubt as to the quantum properties of this computer. However, subsequent demonstration experiments confirmed that the high-speed performance of the computer was due to quantum properties. Subsequently, the 512-qubit D-Wave Two was announced in 2013, the 1000+ qubit D-Wave 2X in 2015, the 2048-qubit D-Wave 2000Q in 2017, and the 5000+ qubit D-Wave Advantage in 2020. Companies around the world are now using cloud services offered by D-Wave Systems, and research and development on the business use of quantum Ising machines is progressing. However, for actual business applications, Ising machines of even larger scale are needed. Furthermore, since superconducting qubits are solid-state devices on a chip, there is the constraint that coupling between qubits is limited to neighboring qubits. Consequently, when implementing an optimization problem in a quantum annealer, many qubits beyond the size of the problem are needed.

NTT laboratories developed a coherent Ising machine (CIM) that can implement all-to-all spin couplings by using degenerate optical parametric oscillators (DOPOs) as artificial spins and confining multiple DOPO pulses within a fiber ring cavity. This scheme makes it possible to increase the number of artificial spins by extending the total length of the fiber ring cavity, so scaling up is relatively easy compared with solid-state devices. A CIM searches for a solution from the low-temperature side, as shown in Fig. 1(c), by gradually increasing the intensity of DOPO pumping light. The solution is found when the system energy reaches its optimal value.
Studies are still being conducted on how the quantum properties of DOPO contribute to computational performance, but in a classical combinatorial optimization problem called the maximum-cut (MAX-CUT) problem, it was shown that a CIM is faster than a computer using a classical annealing algorithm [2]. The Feature Article “Performance Comparison between Coherent Ising Machines and Quantum Annealer” [3] introduces a comparison experiment between CIMs and the quantum annealer from D-Wave Systems.
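
To make the link between the Ising model and the MAX-CUT problem concrete, the following minimal Python sketch maps a small graph onto Ising couplings and finds a lowest-energy spin configuration by exhaustive search. The example graph, the sign convention J_ij = w_ij, and all function names are assumptions made for illustration. Exhaustive enumeration is only a classical stand-in here; it is not how a CIM or a quantum annealer searches for a solution.

```python
from itertools import product

# Hypothetical 5-node example graph: (node_i, node_j, weight).
EDGES = [(0, 1, 1), (0, 2, 1), (1, 2, 1), (1, 3, 1), (2, 4, 1), (3, 4, 1)]
N_NODES = 5


def ising_energy(spins, edges):
    """Ising energy H(s) = sum over edges of J_ij * s_i * s_j, assuming J_ij = w_ij."""
    return sum(w * spins[i] * spins[j] for i, j, w in edges)


def cut_value(spins, edges):
    """Total weight of edges whose two endpoints carry opposite spins."""
    return sum(w for i, j, w in edges if spins[i] != spins[j])


def brute_force_ground_state(n_nodes, edges):
    """Enumerate all 2**n_nodes spin configurations and return one of lowest energy."""
    return min(product((-1, 1), repeat=n_nodes),
               key=lambda s: ising_energy(s, edges))


best = brute_force_ground_state(N_NODES, EDGES)
total_weight = sum(w for _, _, w in EDGES)

print("ground state:", best)
print("energy      :", ising_energy(best, EDGES))
print("cut value   :", cut_value(best, EDGES))
```

With spins of ±1 and this sign convention, the cut value equals (W - H)/2, where W is the total edge weight and H is the Ising energy, so minimizing the energy maximizes the cut. For this example graph the lowest energy is -4, which corresponds to cutting 5 of the 6 edges. The enumeration visits 2^N configurations, which is why heuristic solvers such as annealers are of interest for larger problems.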

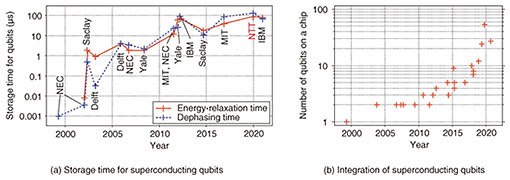

3. Gate-based quantum computers

How best to achieve a qubit, the basic device of a quantum computer, has been a problem for the last 20 years. A quantum two-level system is needed to achieve a 0 and 1 superposition state in a qubit, but what kind of physical system should be used for this has been under discussion. Atoms or electrons, for which the storage time of quantum information is long, are difficult to integrate, whereas semiconductor or superconducting qubits, though having a short storage time, are solid-state devices, so semiconductor integration technology can be used. Optical qubits, meanwhile, have good affinity with optical communications. A variety of candidates have thus been studied. This discussion continues to this day, and each of these physical systems has been developed while building on its strengths. Superconducting quantum computers, ion-trap quantum computers, and semiconductor-dot quantum computers have achieved a level of integration of 50–70 qubits, 32 qubits, and 2–3 qubits, respectively. In particular, Google’s announcement in 2019 that their 53-qubit superconducting quantum computer had outperformed existing supercomputers, presented as a “proof of quantum supremacy,” became a major topic [4]. Setting quantum computers to solve problems deemed to be their specialty does not prove that they are superior in all problems; however, this achievement was nevertheless a significant milestone.

The development of superconducting qubits toward this milestone is shown in Fig. 2. In 1999, when operation of a superconducting qubit was demonstrated for the first time, the storage time was only 1 ns, making for extremely difficult experiments (Fig. 2(a)). Around 2012, however, this was extended to approximately 100 μs, an improvement of about five orders of magnitude. There has been no lengthening of storage time on that scale since then, but the number of qubits on a chip has increased dramatically (Fig. 2(b)).
The reason for this is thought to be that the minimally required storage time toward higher integration had been reached at that time; as a result, the direction of research at many research institutions shifted from extending storage time to increasing integration. Therefore, Google, IBM, and Intel developed 50-qubit superconducting quantum-computer chips that reflect an evolution to the point of quantum supremacy.
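
As a rough illustration of why chips of around 50 qubits are associated with this threshold, the sketch below estimates the memory a classical computer would need to hold the full state of N qubits, using the 2^N scaling described in Section 1. It assumes a naive full state-vector representation with 16 bytes per complex amplitude (two 64-bit floats). That representation and the helper names are assumptions for illustration only; the classical simulations discussed in [4] may use different methods.

```python
# Assumption: each complex amplitude is stored as two 64-bit floats.
BYTES_PER_AMPLITUDE = 16


def num_basis_states(n_qubits: int) -> int:
    """Number of basis states in a superposition of n_qubits qubits (2**n_qubits)."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits: int) -> int:
    """Memory needed to store one amplitude per basis state, in bytes."""
    return num_basis_states(n_qubits) * BYTES_PER_AMPLITUDE


for n in (1, 10, 30, 53):
    print(f"{n:>2} qubits: {num_basis_states(n):,} amplitudes, "
          f"{state_vector_bytes(n):,} bytes")
```

Under these assumptions, 30 qubits already require 16 GiB, and 53 qubits require 2^57 bytes (about 144 petabytes), so each added qubit doubles the classical memory cost.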

At NTT laboratories, research has been focused on a type of superconducting qubit different from the qubits integrated on current superconducting quantum computers. Making use of this difference, this research has involved a variety of activities from basic physical research, such as testing of macroscopic quantum superposition states [5], to applied research with an eye to local high-sensitivity magnetic field sensors [6]. The Feature Article “A Long-lived Tunable Qubit for Bosonic Quantum Computing” [7] introduces NTT’s achievement of recording the world’s longest storage time with this superconducting qubit (Fig. 2(a)) and discusses the possibility of applying this qubit to quantum computers.

4. Toward fault-tolerant quantum computers

On hearing the news that quantum supremacy had been demonstrated, one might think that the development of a practical quantum computer was soon at hand. However, the path to a working quantum computer is long. Current general-purpose quantum computers have a level of integration of about 50 qubits. In addition, quantum information is lost over time due to noise effects and gate operation is accompanied by errors, so complex calculations involving numerous repetitions of gate operations cannot be executed. A quantum computer that is limited in this way in terms of functions and scale is called a noisy intermediate-scale quantum (NISQ) device. This type of device, despite its limitations, can also exhibit quantum supremacy, so it can teach us much about the potential of quantum computers. However, executing complex quantum calculations on a practical scale will require fault-tolerant quantum computers. To meet this requirement, the approach is to configure a single qubit (logical qubit) that is error correctable and robust to noise by preparing multiple qubits (physical qubits) to provide redundancy.
It will also be necessary to prepare a complex layered structure consisting of a layer for controlling physical qubits, one for controlling the logical qubit, one for executing algorithms above those layers, etc. The Feature Article “Designing Quantum Computers” [8] introduces the results of a theoretical study on what type of layered structure a fault-tolerant quantum computer should adopt. More specifically, constructing a logical qubit requires not only the integration of multiple physical qubits but also the development of software. For example, after evaluating the characteristics of individual physical qubits, a program would be needed to calibrate the control system with good efficiency. The design of error-correcting operations and a feedback circuit would also be required. To evaluate this design, a quantum circuit simulator would be essential. The Feature Article “Fault-tolerant Technology for Quantum Information Processing and Its Implementation Methods” [9] introduces the research and development of a software platform toward fault-tolerant quantum computation. As explained at the beginning of this article, it is said that 20,000,000 physical qubits would be needed for a fault-tolerant quantum computer. Although qubit integration technology and control technology are evolving rapidly for this purpose, there is still a need for breakthroughs. As a near-future target, studies are being conducted on combining a high-performance NISQ device that does not execute error correction with a conventional computer and applying such a hybrid computer to quantum machine learning, quantum chemical calculations, etc. At NTT laboratories, research is moving forward on making maximum use of the capabilities of NISQ devices on the basis of knowledge of computational theory and information theory. 
The Feature Article “Theoretical Approach to Overcome Difficulties in Implementing Quantum Computers” [10] introduces methods of improving the performance of NISQ devices, such as eliminating noise by limiting the means of operation or making effective use of uninitialized qubits by using a high-speed algorithm. The above-mentioned article “Fault-tolerant Technology for Quantum Information Processing and Its Implementation Methods” [9] also describes quantum error mitigation, a technique for compensating for NISQ device noise that increases computational cost but does not require increasing the number of qubits.
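
The idea introduced earlier in this section, building one logical qubit from several physical qubits to gain redundancy, can be illustrated with a classical analogy. The sketch below computes the failure probability of an n-bit repetition code decoded by majority vote, assuming each bit flips independently with probability p. This is a simplified classical model with hypothetical function names, not an actual quantum error-correcting code: real codes must also handle phase errors and cannot simply copy or directly read out quantum states.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that majority voting over n bits fails.

    Assumes each of the n bits flips independently with probability p.
    Decoding fails when more than half of the bits have flipped.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd so that the majority vote is unambiguous")
    min_flips_to_fail = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(min_flips_to_fail, n + 1))


# Compare an unprotected bit (n = 1) with increasingly redundant encodings.
for n in (1, 3, 5, 7):
    print(f"n = {n}: logical error rate = {logical_error_rate(0.01, n):.3e}")
```

In this model, adding redundancy lowers the logical error rate only when p is below 0.5, and the gain comes at the cost of more physical bits. This trade-off between error rate and resource count is the same one that drives the large physical-qubit estimates quoted in this article.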

5. Future outlook

Twenty years ago, research was focused on implementing a single qubit, and it was said that quantum computer technology was still 100 years off. Then, ten years later, a double-qubit gate was achieved and research focused on how the number of qubits could be increased. At that time, some people were voicing the opinion that a quantum computer was now only 50 years away. Currently, quantum computers consisting of several tens of qubits are in operation and quantum supremacy is being demonstrated. Ising machines having several thousand bits have also been developed, some of which have been commercialized. Furthermore, the development of a fault-tolerant quantum computer 30 years from now has been proposed through a Japanese national project. Thus, research on new-principle computers has been accelerating, the number of researchers has been increasing, and the range of research has been expanding.

That being said, the path to a large-scale quantum computer is undoubtedly long. For experimental researchers, a breakthrough is desperately needed to develop such a quantum computer. It may be necessary to apply high-yield process technology such as that used for modern large-scale integrated circuits, integration technology including control systems, or network technology toward distributed quantum computing. Theoretical researchers, meanwhile, anticipate breakthroughs such as quantum error-correcting codes that do not consume resources and new quantum algorithms. There is also the possibility that an extraordinary scientist somewhere will propose a completely novel idea that suddenly provides a breakthrough. In any case, these are without doubt challenging themes for which no one knows what will happen, so from here on, we would like to keep a close watch on developments in this field.

References
